For all the criticism that rating models and credit rating agencies have had through the years, they are still the most pragmatic and realistic approach for assessing default risk for your counterparties. Of course, the quality of the assessment depends to a large extent on the quality of the model used to determine the credit rating, capturing both the quantitative and qualitative factors determining counterparty credit risk. A sound credit rating model strikes a balance between these two aspects. Relying too much on quantitative outcomes ignores valuable ‘unstructured’ information, whereas an expert judgement based approach ignores the value of empirical data, and their explanatory power.

In this white paper we will outline some best practice approaches to assessing default risk of a company through a credit rating. We will explore the ratios that are crucial factors in the model and provide guidance for the expert judgement aspects of the model.

Zanders has applied these best practices in designing Credit Rating models for many years. These models are already being used at over 400 companies and have been tested both in practice and against empirical data. If you want to know more about our Credit Rating models, click here.

Credit ratings and their applications

Credit ratings are widely used throughout the financial industry, for a variety of applications. This includes the corporate finance, risk and treasury domains and beyond. While it is hardly ever a sole factor driving management decisions, the availability of a point estimation to describe something as complex as counterparty credit risk has proven a very useful piece of information for making informed decisions, without the need for a full due diligence into the books of the counterparty.

Some of the specific use cases are:

- Internal assessment of the creditworthiness of counterparties

- Transparency of the creditworthiness of counterparties

- Monitoring trends in the quality of credit portfolios

- Monitoring concentration risk

- Performance measurement

- Determination of risk-adjusted credit approval levels and frequency of credit reviews

- Formulation of credit policies, risk appetite, collateral policies, etc.

- Loan pricing based on Risk Adjusted Return on Capital (RAROC) and Economic Profit (EP)

- Arm’s length pricing of intercompany transactions, in line with OECD guidelines

- Regulatory Capital (RC) and Economic Capital (EC) calculations

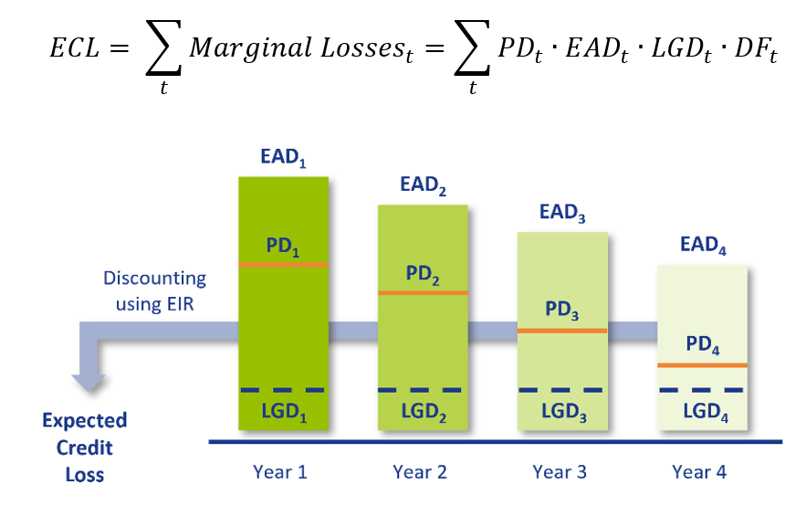

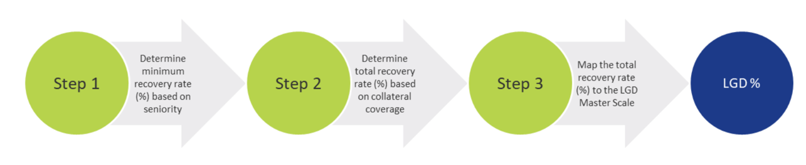

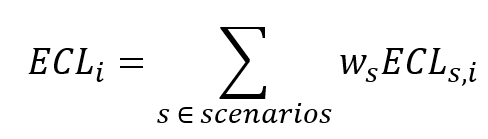

- Expected Credit Loss (ECL) IFRS 9 calculations

- Active Credit Portfolio Management on both portfolio and (individual) counterparty level

Credit rating philosophy

A fundamental starting point when applying credit ratings is the credit rating philosophy that is followed. In general, two distinct approaches are recognized:

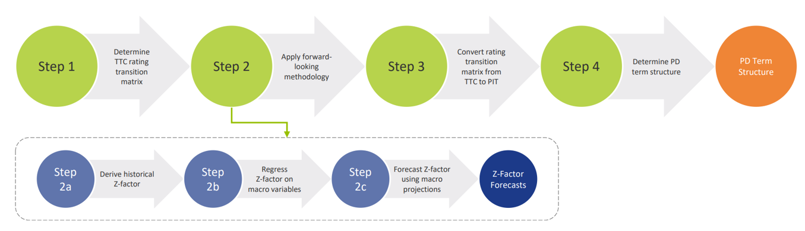

- Through-the-Cycle (TtC) rating systems measure default risk of a counterparty by taking permanent factors, like a full economic cycle, into account based on a worst-case scenario. TtC ratings change only if there is a fundamental change in the counterparty’s situation and outlook. The models employed for the public ratings published by e.g. S&P, Fitch and Moody’s are generally more TtC focused. They tend to assign more weight to qualitative features and incorporate longer trends in the financial ratios, both of which increase stability over time.

- Point-in-Time (PiT) rating systems measure default risk of a counterparty taking current, temporary factors into account. PiT ratings tend to adjust quickly to changes in the (financial) conditions of a counterparty and/or its economic environment. PiT models are more suited for shorter term risk assessments, like Expected Credit Losses. They are more focused on financial ratios, thereby capturing the more dynamic variables. Furthermore, they incorporate a shorter trend which adjusts faster over time.

Most models incorporate a blend between the two approaches, acknowledging that both short term and long term effects may impact creditworthiness.

Rating methodology

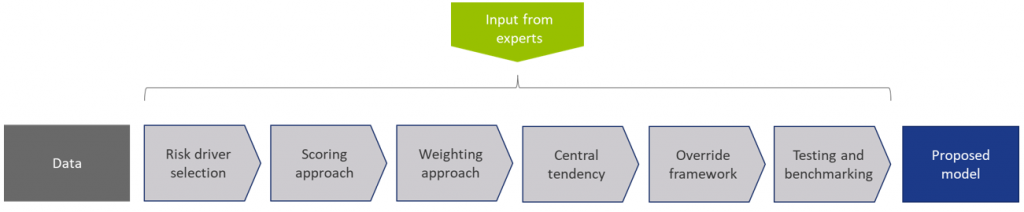

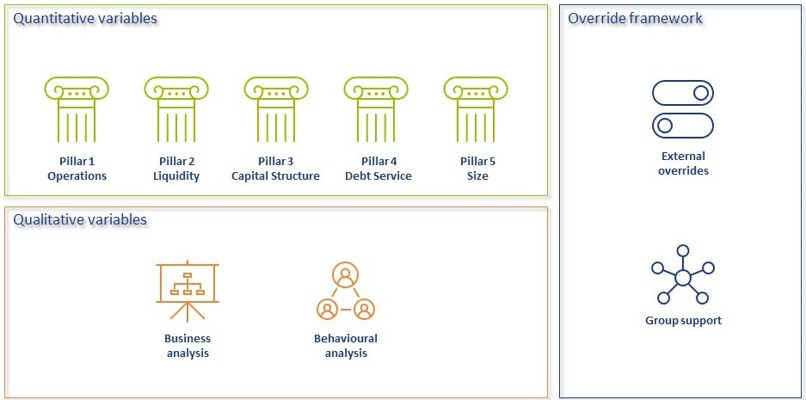

Modelling credit ratings is very complex, due to the wide variety of events and risks that companies are exposed to. Operational risk, liquidity risk, poor management, a deteriorating business model, an external negative event, failing governments and technological innovation can all have a very significant influence on the creditworthiness of companies in the short and long run. Most credit rating models therefore distinguish a range of different factors that are modelled separately and then combined into a single credit rating. The exact factors will differ per rating model. The overview below presents the factors included in the Corporate Rating Model, which is used in some of the cloud-based solutions of the Zanders applications.

The remainder of this article will detail the different factors, explaining the rationale behind including them.

Quantitative factors

Quantitative risk factors are crucial to credit rating models, as they are ‘objective’ and therefore generate a large degree of comparability between different companies. Their objective nature also makes them easier to incorporate in a model on a large scale. While financials alone do not tell the whole story about a company, accounting standards have developed over time to provide an increasingly comparable view of the financial state of a company, making them an increasingly trustworthy source for determining creditworthiness. To better enable comparisons of companies with different sizes, financials are often represented as ratios.

Financial Ratios

Financial ratios are being used for credit risk analyses throughout the financial industry and present the basic characteristics of companies. A number of these ratios represent (directly or indirectly) creditworthiness. Zanders’ Corporate Credit Rating model uses the most common of these financial ratios, which can be categorised in five pillars:

Pillar 1 - Operations

The Operations pillar consists of variables that consider the profitability and ability of a company to influence its profitability. Earnings power is a main determinant of the success or failure of a company. It measures the ability of a company to create economic value and the ability to give risk protection to its creditors. Recurrent profitability is a main line of defense against debtor-, market-, operational- and business risk losses.

Turnover Growth

Turnover growth is defined as the annual change in Turnover, expressed as a percentage. It indicates the growth rate of a company. Both very low and very high values tend to indicate low credit quality. For low turnover growth this is clear. High turnover growth can be an indication of a risky business strategy or a start-up company with a business model that has not been tested over time.

Gross Margin

Gross margin is defined as Gross profit divided by Turnover, expressed as a percentage. The gross margin indicates the profitability of a company. It measures how much a company earns, taking into consideration the costs that it incurs for producing its products and/or services. A higher Gross margin implies a lower default probability.

Operating Margin

Operating margin is defined as Earnings before Interest and Taxes (EBIT) divided by Turnover, expressed as a percentage. This ratio indicates the profitability of the company. Operating margin is a measurement of what proportion of a company's revenue is left over after paying for variable costs of production such as wages, raw materials, etc. A healthy Operating margin is required for a company to be able to pay for its fixed costs, such as interest on debt. A higher Operating margin implies a lower default probability.

Return on Sales

Return on sales, also known as net profit margin, is defined as P/L for period (Net income) divided by Turnover, expressed as a percentage. It indicates how much profit, net of all expenses, is produced per unit of sales. A higher Return on sales implies a lower default probability.

Return on Capital Employed

Return on capital employed (ROCE) is defined as Earnings before Interest and Taxes (EBIT) divided by Capital employed (Total assets minus Current liabilities), expressed as a percentage. This ratio indicates how successful management has been in generating profits (before Financing costs) with all of the cash resources provided to them which carry a cost, i.e. equity plus debt. It is a basic measure of overall performance, combining margins and efficiency in asset utilization. A higher ROCE implies a lower default probability.
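The five Operations ratios defined above can be computed along the following lines. This is a minimal sketch, not the Zanders implementation: the `Financials` container and its field names are hypothetical, and the example figures are made up purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Financials:
    """Hypothetical container for the inputs; field names are illustrative."""
    turnover: float
    prior_turnover: float
    gross_profit: float
    ebit: float
    net_income: float
    total_assets: float
    current_liabilities: float


def operations_ratios(f: Financials) -> dict:
    """Return the Operations pillar ratios, each expressed as a percentage."""
    capital_employed = f.total_assets - f.current_liabilities
    return {
        "turnover_growth": (f.turnover - f.prior_turnover) / f.prior_turnover * 100,
        "gross_margin": f.gross_profit / f.turnover * 100,
        "operating_margin": f.ebit / f.turnover * 100,
        "return_on_sales": f.net_income / f.turnover * 100,
        "roce": f.ebit / capital_employed * 100,
    }


# Made-up example figures (any currency unit).
example = Financials(
    turnover=1200.0, prior_turnover=1000.0, gross_profit=480.0,
    ebit=120.0, net_income=60.0, total_assets=900.0, current_liabilities=300.0,
)
ratios = operations_ratios(example)
for name, value in ratios.items():
    print(f"{name}: {value:.1f}%")
```

Zero or negative denominators (for example a company without prior-year turnover) are not handled here and would need explicit treatment in practice.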

Pillar 2 - Liquidity

The Liquidity pillar assesses the ability of a company to become liquid in the short term. Illiquidity is almost always a direct cause of failure, while strong liquidity helps a company to remain sufficiently funded in times of distress. The liquidity pillar consists of variables that consider the ability of a company to convert an asset into cash quickly and without any price discount to meet its obligations.

Current Ratio

Current ratio is defined as Current assets, including Cash and Cash equivalents, divided by Current liabilities, expressed as a number. This ratio is a rough indication of a firm's ability to service its current obligations. Generally, the higher the Current ratio, the greater the cushion between current obligations and a firm's ability to pay them. A stronger ratio reflects a numerical superiority of Current assets over Current liabilities. However, the composition and quality of Current assets are a critical factor in the analysis of an individual firm's liquidity, which is why the current ratio assessment should be considered in conjunction with the overall liquidity assessment. A higher Current ratio implies a lower default probability.

Quick Ratio

The Quick ratio (also known as the Acid test ratio) is defined as Current assets, including Cash and Cash equivalents, minus Stock, with the result divided by Current liabilities, expressed as a number. The ratio indicates the degree to which a company's Current liabilities are covered by the most liquid Current assets. It is a refinement of the Current ratio and is a more conservative measure of liquidity. Generally, any value of less than 1 to 1 implies a dependency on inventory or other, less liquid current assets to settle short-term debt. A higher Quick ratio implies a lower default probability.

Stock Days

Stock days is defined as the average Stock during the year times the number of days in a year divided by the Cost of goods sold, expressed as a number of days. This ratio indicates the average length of time that units are in stock. A low ratio is a sign of good liquidity or superior merchandising. A high ratio can be a sign of poor liquidity, possible overstocking, obsolescence, or, in contrast to these negative interpretations, a planned stock build-up in the case of material shortages. A higher Stock days ratio implies a higher default probability.

Debtor Days

Debtor days is defined as the average Debtors during the year times the number of days in a year divided by Turnover. Debtor days indicates the average number of days that trade debtors are outstanding. Generally, the greater the number of days outstanding, the greater the probability of delinquencies in trade debtors and the more cash resources are absorbed. If a company's debtors appear to be turning more slowly than the industry average, further research is needed and the quality of the debtors should be examined closely. A higher Debtor days ratio implies a higher default probability.

Creditor Days

Creditor days is defined as the average Creditors during the year times the number of days in a year divided by the Cost of goods sold. It indicates the average length of time the company's trade debt is outstanding. If a company's creditors appear to be turning more slowly than the industry average, then the company may be experiencing cash shortages, disputing invoices with suppliers, enjoying extended terms, or deliberately expanding its trade credit. The ratio comparison of company to industry suggests the existence of these or other causes. A higher Creditor days ratio implies a higher default probability.
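As an illustration of the Liquidity ratios above, the sketch below computes all five from a handful of inputs. It assumes a 365-day year and takes the yearly average balances as given; how that average is derived (for example from opening and closing balances) is not specified in this paper. All function names and figures are hypothetical.

```python
DAYS_IN_YEAR = 365  # assumption: the text only refers to "the number of days in a year"


def current_ratio(current_assets: float, current_liabilities: float) -> float:
    """Current assets (including cash) divided by current liabilities."""
    return current_assets / current_liabilities


def quick_ratio(current_assets: float, stock: float, current_liabilities: float) -> float:
    """Current assets excluding stock, divided by current liabilities."""
    return (current_assets - stock) / current_liabilities


def days_outstanding(average_balance: float, annual_flow: float) -> float:
    """Shared form of Stock, Debtor and Creditor days: balance x days / annual flow."""
    return average_balance * DAYS_IN_YEAR / annual_flow


# Made-up example figures.
liquidity = {
    "current_ratio": current_ratio(500.0, 250.0),
    "quick_ratio": quick_ratio(500.0, 200.0, 250.0),
    "stock_days": days_outstanding(200.0, 730.0),      # average Stock vs Cost of goods sold
    "debtor_days": days_outstanding(150.0, 1095.0),    # average Debtors vs Turnover
    "creditor_days": days_outstanding(100.0, 730.0),   # average Creditors vs Cost of goods sold
}
print(liquidity)
```

Stock days and Creditor days are measured against Cost of goods sold, whereas Debtor days is measured against Turnover, which is why the annual flow is passed in explicitly.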

Pillar 3 - Capital Structure

The Capital pillar considers how a company is financed. Capital should be sufficient to cover expected and unexpected losses. Strong capital levels provide management with financial flexibility to take advantage of certain acquisition opportunities or allow discontinuation of business lines with associated write offs.

Gearing

Gearing is defined as Total debt divided by Tangible net worth, expressed as a percentage. It indicates the company’s reliance on (often expensive) interest bearing debt. In smaller companies, it also highlights the owners' stake in the business relative to the banks. A higher Gearing ratio implies a higher default probability.

Solvency

Solvency is defined as Tangible net worth (Shareholder funds minus Intangibles) divided by Tangible assets (Total assets minus Intangibles), expressed as a percentage. It indicates the financial leverage of a company, i.e. it measures how much a company is relying on creditors to fund assets. The lower the ratio, the greater the financial risk. The amount of risk considered acceptable for a company depends on the nature of the business and the skills of its management, the liquidity of the assets and speed of the asset conversion cycle, and the stability of revenues and cash flows. A higher Solvency ratio implies a lower default probability.
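The two Capital Structure ratios share the same building block, Tangible net worth. A minimal sketch with hypothetical function names and made-up figures:

```python
def tangible_net_worth(shareholder_funds: float, intangibles: float) -> float:
    """Shareholder funds minus Intangibles."""
    return shareholder_funds - intangibles


def gearing(total_debt: float, shareholder_funds: float, intangibles: float) -> float:
    """Total debt as a percentage of Tangible net worth."""
    return total_debt / tangible_net_worth(shareholder_funds, intangibles) * 100


def solvency(shareholder_funds: float, intangibles: float, total_assets: float) -> float:
    """Tangible net worth as a percentage of Total assets excluding Intangibles."""
    tnw = tangible_net_worth(shareholder_funds, intangibles)
    return tnw / (total_assets - intangibles) * 100


# Made-up example figures.
gearing_pct = gearing(total_debt=450.0, shareholder_funds=400.0, intangibles=100.0)
solvency_pct = solvency(shareholder_funds=400.0, intangibles=100.0, total_assets=1100.0)
print(f"Gearing: {gearing_pct:.1f}%, Solvency: {solvency_pct:.1f}%")
```

A negative or zero Tangible net worth makes Gearing hard to interpret; the sketch does not handle that case.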

Pillar 4 - Debt Service

The debt service pillar considers the capability of a company to meet its financial obligations in the form of debt. It ties the debt obligation a company has to its earnings potential.

Total Debt / EBITDA

This ratio is defined as Total debt divided by Earnings before Interest, Taxes, Depreciation, and Amortization (EBITDA). Total debt comprises Loans + Noncurrent liabilities. It indicates the total debt run-off period by showing the number of years it would take to repay all of the company's interest-bearing debt from operating profit adjusted for Depreciation and Amortization. However, EBITDA should not, of course, be considered as cash available to pay off debt. A higher Total Debt / EBITDA ratio implies a higher default probability.

Interest Coverage Ratio

Interest coverage ratio is defined as Earnings before interest and taxes (EBIT) divided by interest expenses (Gross and Capitalized). It indicates the firm's ability to meet interest payments from earnings. A high ratio indicates that the borrower should have little difficulty in meeting the interest obligations of loans. This ratio also serves as an indicator of a firm's ability to service current debt and its capacity for taking on additional debt. A higher Interest coverage ratio implies a lower default probability.
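The Debt Service ratios can be sketched as follows. Function names and figures are hypothetical. Returning infinity when EBITDA is zero or negative is an illustrative convention chosen here (otherwise a loss-making company would show a misleadingly low, even negative, run-off period); it is not something this paper prescribes.

```python
import math


def debt_to_ebitda(total_debt: float, ebit: float,
                   depreciation: float, amortization: float) -> float:
    """Years needed to repay Total debt out of EBITDA."""
    ebitda = ebit + depreciation + amortization
    if ebitda <= 0:
        # Illustrative convention: debt cannot be repaid from non-positive EBITDA.
        return math.inf
    return total_debt / ebitda


def interest_coverage(ebit: float, interest_expense: float) -> float:
    """EBIT divided by (gross and capitalized) interest expenses."""
    return ebit / interest_expense


# Made-up example figures.
run_off_years = debt_to_ebitda(total_debt=450.0, ebit=120.0,
                               depreciation=25.0, amortization=5.0)
coverage = interest_coverage(ebit=120.0, interest_expense=30.0)
print(f"Total Debt / EBITDA: {run_off_years:.1f}x, Interest coverage: {coverage:.1f}x")
```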

Pillar 5 - Size

In general, the larger a company is, the less vulnerable it is, as there is usually more diversification in turnover. Turnover is considered the best indicator of size: the higher the turnover, the less vulnerable a company (generally) is.

Ratio Scoring and Mapping

While these financial ratios provide some very useful information regarding the current state of a company, it is difficult to assess them on a stand-alone basis. They are only useful in a credit rating determination if we can compare them to the same ratios for a group of peers. Ratio scoring deals with the process of translating the financials to a score that gives an indication of the relative creditworthiness of a company against its peers.

The ratios are assessed against a peer group of companies. This provides more discriminatory power during the calibration process and hence a better estimation of the risk that a company will default. Research has shown that there are two factors that are most fundamental when determining a comparable peer group. These two factors are industry type and size. The financial ratios tend to behave ‘most alike’ within these segmentations. The industry type is a good way to separate, for example, companies with a lot of tangible assets on their balance sheet (e.g. retail) versus companies with very few tangible assets (e.g. service based industries). The size reflects that larger companies are generally more robust and less likely to default in the short to medium term, as compared to smaller, less mature companies.

Since ratios tend to behave differently over different industries and sizes, the ratio value score has to be calibrated for each peer group segment.

When scoring a ratio, both the latest value and the long-term trend should be taken into account. The trend reflects whether a company’s financials are improving or deteriorating over time, which may be an indication of their long-term perspective. Hence, trends are also taken into account as a separate factor in the scoring function.
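One simple way to picture such a scoring function is a percentile rank within the peer group, combined with a separate trend component. The sketch below is only an illustration under stated assumptions: the percentile approach, the least-squares trend, the three-level trend score and the 20% trend weight are all hypothetical choices, not the calibrated scoring functions of any actual rating model.

```python
from bisect import bisect_right


def peer_percentile(value, peer_values, higher_is_better=True):
    """Share of peers the company matches or beats, between 0 and 1."""
    ranked = sorted(peer_values)
    share_at_or_below = bisect_right(ranked, value) / len(ranked)
    return share_at_or_below if higher_is_better else 1.0 - share_at_or_below


def trend_slope(history):
    """Least-squares slope of yearly ratio values (oldest first)."""
    n = len(history)
    mean_x = (n - 1) / 2
    mean_y = sum(history) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(history))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    return numerator / denominator


def ratio_score(history, peer_values, higher_is_better=True, trend_weight=0.2):
    """Blend the latest value's peer percentile with a coarse trend score."""
    level = peer_percentile(history[-1], peer_values, higher_is_better)
    slope = trend_slope(history)
    if slope == 0:
        trend = 0.5  # flat
    elif (slope > 0) == higher_is_better:
        trend = 1.0  # improving
    else:
        trend = 0.0  # deteriorating
    return (1 - trend_weight) * level + trend_weight * trend


# Made-up example: Solvency (%) over three years against five peers.
solvency_score = ratio_score([25.0, 30.0, 35.0], [10.0, 20.0, 30.0, 40.0, 50.0])
print(f"{solvency_score:.2f}")
```

A monotonic score like this does not suit ratios such as Turnover growth, where both very low and very high values indicate lower credit quality; those would need a differently shaped scoring function.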

To arrive at a total score, a set of weights needs to be determined, which indicates the relative importance of the different components. This total score is then mapped to an ordinal rating scale, which usually runs from AAA (excellent creditworthiness) to D (defaulted) to indicate the creditworthiness. Note that at this stage, the rating only incorporates the quantitative factors. It will serve as a starting point to include the qualitative factors and the overrides.
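The weighting and mapping step can be sketched as below. The pillar weights, the equal-width score buckets and the abbreviated rating scale without +/- modifiers are illustrative assumptions only; a real model would calibrate both weights and bucket boundaries on default data. The sketch also assumes that D is assigned only on an actual default and therefore never results from the score.

```python
# Assumed, simplified scale; D is reserved for counterparties that have defaulted.
RATING_SCALE = ["AAA", "AA", "A", "BBB", "BB", "B", "CCC", "CC", "C"]


def total_score(scores: dict, weights: dict) -> float:
    """Weighted average of component scores, each between 0 (worst) and 1 (best)."""
    total_weight = sum(weights.values())
    return sum(scores[name] * weights[name] for name in weights) / total_weight


def map_to_rating(score: float) -> str:
    """Map a score between 0 and 1 to a rating using equal-width buckets."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie between 0 and 1")
    index = min(int((1.0 - score) * len(RATING_SCALE)), len(RATING_SCALE) - 1)
    return RATING_SCALE[index]


# Made-up pillar scores and weights.
pillar_scores = {"operations": 0.70, "liquidity": 0.60, "capital_structure": 0.68,
                 "debt_service": 0.80, "size": 0.50}
pillar_weights = {"operations": 0.3, "liquidity": 0.2, "capital_structure": 0.2,
                  "debt_service": 0.2, "size": 0.1}

quantitative_score = total_score(pillar_scores, pillar_weights)
quantitative_rating = map_to_rating(quantitative_score)
print(f"{quantitative_score:.3f} -> {quantitative_rating}")
```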

Qualitative Factors

Qualitative factors are crucial to include in the model. They capture the ‘softer’ criteria underlying creditworthiness. They relate, among others, to the track record, management capabilities, accounting standards and access to capital of a company. These can be hard to capture in concrete criteria, and they will differ between different credit rating models.

Note that due to their qualitative nature, these factors will rely more on expert opinion and industry insights. Furthermore, some of these factors will affect larger companies more than smaller companies and vice versa. In larger companies, management structures are far more complex, track records will tend to be more extensive and access to capital is a more prominent consideration.

All factors are generally assigned an ordinal scoring scale and relative weights, to arrive at a total score for the qualitative part of the assessment.

A categorisation can be made between business analysis and behavioural analysis.

Business Analysis

Business analysis deals with all aspects of a company that relate to the way it operates in its markets. Some of the factors that can be included in a credit rating model are the following:

Years in Same Business

Companies that have operated in the same line of business for a prolonged period of time have increased credibility of staying around for the foreseeable future. Their business model is sound enough to generate stable financials.

Customer Risk

Customer risk is an assessment of the extent to which a company depends on one customer or a small group of customers for its turnover. A large customer taking its business to a competitor can have a significant impact on such a company.

Accounting Risk

A company's internal accounting standards are generally a good indicator of the quality of management and internal controls. Recent or frequent incidents, delayed annual reports and a lack of detail are clear red flags.

Track record with Corporate

This is mostly relevant for counterparties with whom a standing relationship exists. The track record of previous years is useful first-hand experience to take into account when assessing creditworthiness.

Continuity of Management

A company that has been under the same management for an extended period of time tends to reflect a stable company, with few internal struggles. Furthermore, this reflects a positive assessment of management by the shareholders.

Operating Activities Area

Companies operating on a global scale are generally more diversified and therefore less affected by most political and regulatory risks. This reflects well in their credit rating. Additionally, companies that serve a large market have a solid base that provides some security against adverse events.

Access to Capital

Access to capital is a crucial element of the qualitative assessment. Companies with a good access to the capital markets can raise debt and equity as needed. An actively traded stock, a public rating and frequent and recent debt issuances are all signals that a company has access to capital.

Behavioural Analysis

Behavioural analysis aims to incorporate prior behaviour of a company in the credit rating. A separation can be made between external and internal indicators.

External Indicators

External indicators comprise all information that can be acquired from external parties relating to the behaviour of a company when it comes to honouring obligations. This could be a credit report from a credit rating agency, payment details from a bank, public news items, etc.

Internal Indicators

Internal indicators concern all prior interactions you have had with a company. This includes payment delays, litigation, breaches of financial covenants, etc.
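The ordinal scoring of the qualitative factors described above could look like the following sketch. The five-level scale, the factor selection and the equal weights are hypothetical; actual models define their own scales and weights, often differentiated by company size.

```python
from enum import IntEnum


class Assessment(IntEnum):
    """Assumed five-level ordinal scale for an expert assessment."""
    WEAK = 1
    BELOW_AVERAGE = 2
    AVERAGE = 3
    ABOVE_AVERAGE = 4
    STRONG = 5


def qualitative_score(assessments: dict, weights: dict) -> float:
    """Weighted average of ordinal assessments, rescaled to between 0 and 1."""
    total_weight = sum(weights.values())
    weighted = sum(assessments[f] * weights[f] for f in weights) / total_weight
    low, high = min(Assessment), max(Assessment)
    return (weighted - low) / (high - low)


# Made-up expert assessments for a subset of the factors, equally weighted.
assessments = {
    "years_in_same_business": Assessment.STRONG,
    "customer_risk": Assessment.AVERAGE,
    "accounting_risk": Assessment.ABOVE_AVERAGE,
    "access_to_capital": Assessment.BELOW_AVERAGE,
}
weights = {factor: 1.0 for factor in assessments}

qual_score = qualitative_score(assessments, weights)
print(f"{qual_score:.3f}")
```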

Override Framework

Many models allow for an override of the credit rating resulting from the prior analysis. This is a more discretionary step, which should be properly substantiated and documented. Overrides generally only allow for adjusting the credit rating by one notch upward, while downward adjustments can be more sizable.

Overrides can be made for a variety of reasons, which are generally carefully separated in the model. Reasons for overrides generally include adjusting for country risk, industry adjustments, company specific risk and group support.

It should be noted that some overrides are mandated by governing bodies. As an example, the OECD prescribes the overrides to be applied based on a country risk mapping table, for the purpose of arm’s length pricing of intercompany contracts.

The final credit rating results from combining all the factors and considerations mentioned in this article, applying weights and scoring functions, and applying overrides.
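The asymmetric notch limits and the documentation requirement for overrides can be enforced in code. In the sketch below, the one-notch upgrade cap follows the text above, while the three-notch downgrade cap, the simplified rating scale and the function name are illustrative assumptions.

```python
# Assumed, simplified scale ordered from best to worst.
RATING_SCALE = ["AAA", "AA", "A", "BBB", "BB", "B", "CCC", "CC", "C"]
MAX_UPGRADE_NOTCHES = 1    # upward overrides are generally limited to one notch
MAX_DOWNGRADE_NOTCHES = 3  # illustrative assumption; the text only says "more sizable"


def apply_override(rating: str, notches: int, reason: str) -> str:
    """Adjust a rating by a number of notches (positive = upgrade)."""
    if not reason.strip():
        raise ValueError("an override must be substantiated and documented")
    if notches > MAX_UPGRADE_NOTCHES or notches < -MAX_DOWNGRADE_NOTCHES:
        raise ValueError("override exceeds the permitted number of notches")
    index = RATING_SCALE.index(rating) - notches
    index = max(0, min(index, len(RATING_SCALE) - 1))  # stay within the scale
    return RATING_SCALE[index]


print(apply_override("BBB", 1, "group support"))
print(apply_override("BBB", -2, "country risk"))
```

Keeping the reason as a mandatory argument is one way to make sure every override leaves an audit trail.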

Model Quality and Fit

The model quality determines whether the model is appropriate to be used in a practical setting. From a statistical modelling perspective, a lot of considerations can be made with regard to model quality, which are outside of the scope of this article, so we will stick to a high level consideration here.

The AUC (area under the ROC curve) metric is one of the most popular metrics to quantify the model fit (note this is not necessarily the same as the model quality, just as correlation does not equal causation). Very simply put, the ROC curve plots, for every possible cut-off in the model score, the share of defaulters that is correctly flagged against the share of non-defaulters that is incorrectly flagged. The area under that curve then indicates the explanatory power of the model: a value of 0.5 corresponds to random guessing and a value of 1.0 to a perfect separation of defaulters from non-defaulters. A more extensive guide to the AUC metric can be found here.

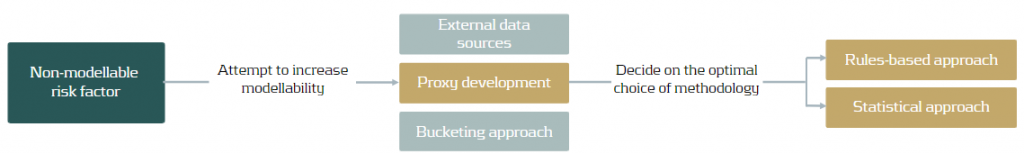
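For small samples, the AUC can be computed directly from its probabilistic interpretation: it equals the probability that a randomly chosen defaulter receives a higher risk score than a randomly chosen non-defaulter, with ties counted as one half. The sketch below uses that pairwise definition; the scores and default flags are made up, and a production setting would normally rely on a tested statistical library.

```python
def auc(risk_scores, defaulted):
    """Area under the ROC curve via pairwise comparison of defaulters and non-defaulters."""
    bad = [s for s, d in zip(risk_scores, defaulted) if d]
    good = [s for s, d in zip(risk_scores, defaulted) if not d]
    if not bad or not good:
        raise ValueError("need at least one defaulter and one non-defaulter")
    wins = sum(
        1.0 if b > g else 0.5 if b == g else 0.0
        for b in bad
        for g in good
    )
    return wins / (len(bad) * len(good))


# Made-up model outputs (higher = riskier) and observed defaults.
risk_scores = [0.90, 0.80, 0.40, 0.35, 0.10]
defaulted = [True, False, True, False, False]

model_auc = auc(risk_scores, defaulted)
print(f"AUC = {model_auc:.3f}")
```

The pairwise loop grows with the product of the two group sizes, so it is meant for illustration rather than for large portfolios.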

Alternative Modelling Approaches

The model structure described above is one specific way to model credit ratings. While models may vary widely, most of these components would typically be included. In recent years, there has been an increase in the use of payment data, access to which is enabled by the PSD2 regulation. This can provide a more up-to-date overview of the state of the company and can definitely be considered as an additional factor in the analysis. However, the main disadvantage of this approach is that it requires explicit approval from the counterparty to use the data, which makes it more challenging to apply on a portfolio basis.

Another approach is a purely machine learning based modelling approach. If applied well, this will typically give the best model in terms of the AUC metric, which measures the explanatory power of the model. One major disadvantage of this approach, however, is that the interpretability of the resulting model is very limited. This is something that is generally not preferred by auditors and regulatory bodies as the primary model for creditworthiness. In practice, we see these models most often as challenger models, to benchmark the explanatory power of models based on economic rationale. They can serve to spot deficiencies in the explanatory power of existing models and trigger a re-assessment of the factors included in these models. In some cases, they may also be used to create additional comfort regarding the inclusion of some factors.

Furthermore, the degree to which the model depends on expert opinion is to a large extent dependent on the data available to the model developer. Most notably, the financials and historical default data of a representative group of companies are needed to properly fit the model to the empirical data. Since this data can be hard to come by, many credit rating models are based more on expert opinion than actual quantitative data. Our Corporate Credit Rating model was calibrated on a database containing the financials and default data of an extensive set of companies. This provides a solid quantitative basis for the model outcomes.

Closing Remarks

Modelling credit risk and credit ratings is a complex affair. Zanders provides advice, standardized and customizable models and software solutions to tackle these challenges. Do you want to learn more about credit rating modelling? Reach out for a free consultation. Looking for a tailor-made and flexible solution to become IFRS 9 compliant? Find out about our Condor Credit Risk Suite, the IFRS 9 compliance solution.