Expanding the Utility of AI in Risk Management with AI Agents

Discover how AI agents are transforming risk management by making advanced analytics more accessible, efficient, and intelligent.

Artificial intelligence (AI) is advancing rapidly, particularly with the emergence of large language models (LLMs) such as Generative Pre-trained Transformers (GPTs). Yet, in quantitative risk management, the perceived utility of these technologies remains relatively narrow. Most current applications focus on technical use cases, such as code autocompletion within Integrated Development Environments (IDEs), to boost productivity for developers and quantitative analysts. While valuable, these uses only hint at AI’s broader potential. Limiting AI to technically knowledgeable users overlooks opportunities to empower a wider range of stakeholders, including those without programming skills.

In this article, we explore AI agent frameworks, highlight their potential to enhance various banking functions, and share our thoughts on key design considerations when using agents in risk-sensitive environments.

What exactly are AI agents?

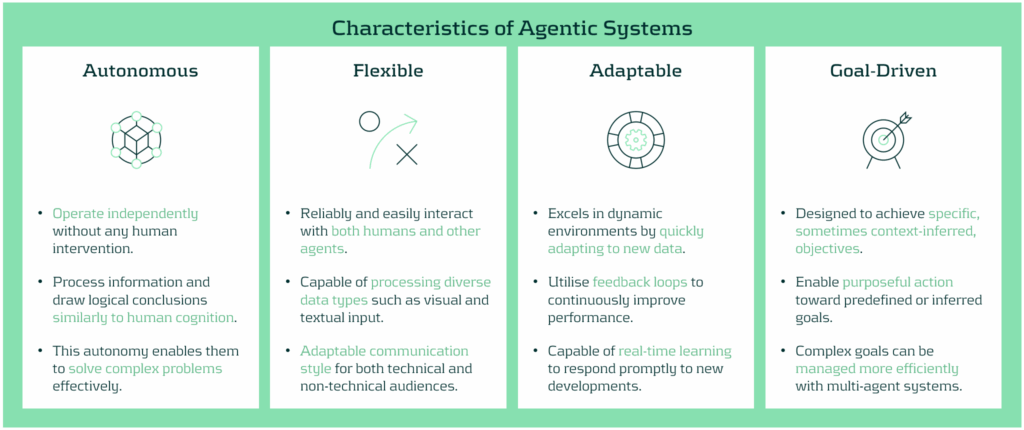

While definitions of an AI agent vary with the use case, agents are generally characterised by a high degree of autonomy and a goal-oriented design. A recurring theme in the development of these systems is their ability to operate independently across entire analytical workflows. This marks a shift in how AI is utilised: models move from being passive tools or advanced search engines to being active, decision-making agents capable of driving end-to-end processes.

In the context of risk management, these workflows might include executing models, validating outputs, conducting ongoing monitoring and review, running sensitivity analyses or stress tests, and generating model performance reports. All of these actions can be initiated by a simple trigger, often a natural language prompt, a method that has become increasingly familiar. Unlike conventional systems designed for single tasks, agents are built to reason, decide, and act across multiple steps, adapting flexibly as requirements change.

A typical agent architecture consists of five core components:

1- Interface Layer / Trigger: Translates business-level questions (e.g., “What’s the impact of a 25% increase in default probability on risk-weighted assets?”) into executable workflows, enabling non-technical users to trigger complex analyses.

2- Input and Data Processing: Preprocesses and transforms input data or outputs from other checkpoints into structured data that can be used in the agent’s decision-making process.

3- Memory & Context Manager: Maintains a record of prior steps, decisions, and user inputs to guide multi-stage processes intelligently and retain context over a series of interactions.

4- Tool Integrator: Connects to and uses various tools (such as Python environments, databases, APIs, and model libraries) to perform technical tasks. The agent dynamically works out which tool is relevant for executing specific tasks based on predefined instructions.

5- Large Language Model (LLM): Determines the sequence of actions needed to achieve a specific goal, e.g. “Backtest this IRB model under a recession scenario”. In this example, the LLM would identify historical periods of economic contraction and use the corresponding data to execute the model and return the results.
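
The five components above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference design: every name in it (`Memory`, `parse_request`, `plan`, `TOOLS`, `run_agent`) is hypothetical, the tools return placeholder numbers, and the planning step uses fixed keyword rules as a stand-in for an LLM so that the sketch runs without any external service.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Memory:
    """Memory & Context Manager: keeps a record of prior steps."""
    steps: List[dict] = field(default_factory=list)

    def record(self, tool: str, result: object) -> None:
        self.steps.append({"tool": tool, "result": result})


def parse_request(prompt: str) -> dict:
    """Input and Data Processing: turn free text into structured fields."""
    text = prompt.lower()
    return {"wants_stress": "stress" in text, "wants_report": "report" in text}


def plan(request: dict) -> List[str]:
    """Stand-in for the LLM: choose the ordered list of tools to call.

    A real agent would ask a language model; fixed rules are used here
    (an assumption) so the sketch stays self-contained.
    """
    actions = ["run_model"]
    if request["wants_stress"]:
        actions.append("stress_test")
    if request["wants_report"]:
        actions.append("write_report")
    return actions


# Tool Integrator: a registry mapping tool names to callables.
# The tools are placeholders that return illustrative values only.
TOOLS: Dict[str, Callable[[Memory], object]] = {
    "run_model": lambda mem: {"pd": 0.02},
    "stress_test": lambda mem: {
        "pd_stressed": round(mem.steps[0]["result"]["pd"] * 1.25, 4)
    },
    "write_report": lambda mem: f"{len(mem.steps)} step(s) completed",
}


def run_agent(prompt: str) -> Memory:
    """Interface Layer / Trigger: one prompt starts the whole workflow."""
    memory = Memory()
    for tool_name in plan(parse_request(prompt)):
        memory.record(tool_name, TOOLS[tool_name](memory))
    return memory


memory = run_agent("Run a stress test on the PD model and write a report")
for step in memory.steps:
    print(step["tool"], "->", step["result"])
```

Keeping the tools in a registry means the planner can only ever select from a known set of actions, which is also a convenient place to attach the controls discussed later in this article.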

This architecture allows AI agents to operate like digital collaborators, fetching and processing data, visualising results, and helping to explain patterns that we may otherwise miss - all without requiring users to interact with a single line of code.
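
To make the example question from the interface layer concrete, the sketch below shows the kind of calculation a tool might run behind the scenes. It rests on assumptions that are not part of the discussion above: it uses the Basel IRB risk-weight function for corporate exposures, treats the 25% increase as a relative shock to PD, and applies illustrative exposure values. The function name `irb_corporate_rwa` is hypothetical.

```python
from math import exp, log, sqrt
from statistics import NormalDist

_N = NormalDist()  # standard normal distribution


def irb_corporate_rwa(pd_: float, lgd: float, ead: float,
                      maturity: float = 2.5) -> float:
    """RWA for one corporate exposure under the Basel IRB formula."""
    if not 0.0 < pd_ < 1.0:
        raise ValueError("PD must lie strictly between 0 and 1")
    # Asset correlation, interpolated between 24% (low PD) and 12% (high PD).
    weight = (1 - exp(-50 * pd_)) / (1 - exp(-50))
    corr = 0.12 * weight + 0.24 * (1 - weight)
    # Maturity adjustment coefficient.
    b = (0.11852 - 0.05478 * log(pd_)) ** 2
    # PD conditional on a 99.9th percentile systematic shock.
    cond_pd = _N.cdf(
        (_N.inv_cdf(pd_) + sqrt(corr) * _N.inv_cdf(0.999)) / sqrt(1 - corr)
    )
    # Capital requirement: unexpected loss scaled by the maturity adjustment.
    k = lgd * (cond_pd - pd_) * (1 + (maturity - 2.5) * b) / (1 - 1.5 * b)
    return 12.5 * k * ead


# Illustrative inputs (assumptions): PD 2%, LGD 45%, EAD 100, maturity 2.5y.
base = irb_corporate_rwa(0.02, 0.45, 100.0)
stressed = irb_corporate_rwa(0.02 * 1.25, 0.45, 100.0)  # relative +25% on PD
print(f"Base RWA:     {base:.2f}")
print(f"Stressed RWA: {stressed:.2f}")
print(f"Change:       {stressed / base - 1:.1%}")
```

Under these assumptions RWA rises by less than 25%, because the risk-weight function is concave in PD over this range. An agent would wrap a function like this as a tool and report the change in plain language.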

Benefits of AI Agents Across Different Banking Functions

Beyond improving information access, AI agents deliver strategic benefits through automation, broader access to analytical tools, faster decision-making, and the removal of process bottlenecks. Here’s how agentic solutions support various stakeholders:

- Front Office (Trading, Structuring, Portfolio Management): Enhance front-office functions including pre-trade analysis, data acquisition and processing, continuous market monitoring, and rapid trade execution. By integrating structured and unstructured data, ranging from market sentiment to fundamental and technical indicators, the agents allow trading and investment professionals to make faster, more informed decisions that are grounded in a holistic view of market conditions.

- Risk Modelling and Analytics Teams: Help modellers accelerate prototyping and calibration by offloading repetitive tasks such as parameter sweeps, benchmarking, or sensitivity runs. Agents can also assist with documentation and help iterate on design logic more efficiently, freeing up time for more complex problem solving.

- Model Risk Management (MRM): Streamline model validation by automating routine checks. An agent can independently replicate results from model execution, generate challenger models, and document testing steps, offering a transparent and auditable workflow that strengthens governance and reduces approval timelines.

- Risk Control and Regulatory Reporting: Automate aspects of stress testing and capital reporting for control functions. By recalculating model results under different assumptions, maintaining traceable logic, and generating consistent documentation, agents help ensure that model results align with regulatory standards.
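
As one illustration of the independent replication mentioned for model validation, the sketch below re-runs a model on recorded inputs and flags any output that differs from the reported figure. The helper `replicate`, the toy expected-loss model, and the tolerance are all assumptions made for this example.

```python
from math import isclose
from typing import Callable, List


def replicate(model: Callable[[float], float], inputs: List[float],
              reported: List[float], rel_tol: float = 1e-6) -> List[dict]:
    """Re-run `model` on each input and compare with the reported output."""
    if len(inputs) != len(reported):
        raise ValueError("inputs and reported outputs must have equal length")
    findings = []
    for x, claimed in zip(inputs, reported):
        recomputed = model(x)
        findings.append({
            "input": x,
            "reported": claimed,
            "recomputed": recomputed,
            "match": isclose(recomputed, claimed, rel_tol=rel_tol),
        })
    return findings


def expected_loss(pd_: float) -> float:
    """Toy model (assumption): expected loss = PD x LGD (45%) x EAD (1,000)."""
    return pd_ * 0.45 * 1000.0


# The last reported figure is deliberately wrong to show a failed check.
findings = replicate(expected_loss, [0.01, 0.02, 0.05], [4.5, 9.0, 25.0])
for f in findings:
    status = "OK" if f["match"] else "MISMATCH"
    print(f"{status}: input={f['input']} reported={f['reported']} "
          f"recomputed={f['recomputed']:.4f}")
```

Because every comparison is stored as a structured record, the same output can feed both the validation report and the audit trail.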

Ensuring Trust and Quality with AI Agent Solutions: Oversight, Governance, and Guardrails

As promising as AI agents are, their deployment in risk-sensitive environments must be accompanied by robust controls. Key design considerations include:

- Security: Solutions are restricted to credible, approved AI models, or to models that have demonstrated a high level of safety for institutional use. All other components are built in Python, which limits the risks posed by third-party systems and processes.

- Data Governance: Agents only access approved, secure data sources, with permissions and version control strictly enforced. Where privacy is critical, data anonymisation or summarisation techniques can be applied.

- Explainability: Transparency is ensured through well-defined workflows, step-by-step process documentation, and audit trails to help stakeholders understand how decisions are made.

- Scope Boundaries: Agents operate within clearly defined limits (e.g., executing but not creating new models). A human-in-the-loop approach is used for material-risk decisions, while lower-risk processes may be fully autonomous.

- Validation: Like any model, agents undergo rigorous testing for accuracy, consistency, and robustness, especially in edge cases. By applying the traditional “three lines of defense” model to AI agents, oversight and accountability are embedded into their lifecycle.
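
Several of these controls can be enforced in code at the point where an agent calls a tool. The sketch below is a minimal illustration under stated assumptions: the action names, the split between actions that do and do not need approval, and the `guarded_call` helper are all hypothetical. It combines a scope boundary (an allowlist), a human-in-the-loop check for material actions, and an audit trail.

```python
import json
from datetime import datetime, timezone
from typing import Callable, List, Optional

# Hypothetical scope: the agent may execute and report, not create models.
ALLOWED_ACTIONS = {"execute_model", "run_stress_test", "generate_report"}
# Hypothetical policy: these actions need a named human approver.
NEEDS_APPROVAL = {"run_stress_test"}


class ActionBlocked(Exception):
    """Raised when a guardrail prevents an action from running."""


def guarded_call(action: str, func: Callable[[], object],
                 audit_log: List[str],
                 approver: Optional[str] = None) -> object:
    """Check scope and approval, log the decision, then run `func`."""
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "approver": approver,
    }
    if action not in ALLOWED_ACTIONS:
        entry["status"] = "blocked: out of scope"
    elif action in NEEDS_APPROVAL and approver is None:
        entry["status"] = "blocked: approval required"
    else:
        entry["status"] = "allowed"
    audit_log.append(json.dumps(entry))  # every decision is recorded
    if entry["status"] != "allowed":
        raise ActionBlocked(entry["status"])
    return func()


log: List[str] = []
report = guarded_call("generate_report", lambda: "report ready", log)

blocked = []
for action, approver in [("create_model", None), ("run_stress_test", None)]:
    try:
        guarded_call(action, lambda: None, log, approver)
    except ActionBlocked as exc:
        blocked.append(str(exc))

stress = guarded_call("run_stress_test", lambda: "stress done", log,
                      approver="model.owner")
print(blocked)
print(len(log), "audit entries")
```

Blocked attempts are logged as well as permitted ones, so reviewers can see what the agent tried to do and not only what it did.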

Conclusion

AI agents offer enormous potential to drive automation and expand access to analytical tools, especially for non-technical stakeholders. This facilitates deeper integration of business and regulatory expertise into processes across trading, reporting, and model development. When built with strong governance, robust data controls, and transparent logic, AI agents don’t just support critical workflows, they improve them.

At Zanders, we support clients in understanding how AI agents can benefit their risk management frameworks to unlock operational efficiency and expand access to advanced analytics. For more information on how Zanders can help you to utilize the power of AI agents, contact Dilbagh Kalsi (Partner) or Stanley Nwanekezie (Manager).