Machine learning in CRR-compliant IRB models

Even though machine learning is rapidly transforming the financial risk landscape, it remains underused in internal ratings-based models. Why is it uncommon in this field, how will this change in the near future, and who will take the lead?

Machine learning (ML) models have proven to be highly effective in the field of credit risk, outperforming traditional regression models in predictive power. Thanks to the exponential growth in data availability, storage capacity and computational power, these models can be effectively trained on vast amounts of complex, unstructured data.

Despite these advantages, ML models have yet to be integrated into the internal ratings-based (IRB) modeling methodologies used by banks. This is mainly because the methods for calculating regulatory capital have remained largely unchanged for over 15 years, and because the complexity of ML models can make it difficult to comply with the Capital Requirements Regulation (CRR). Nonetheless, the European Banking Authority (EBA) recognizes the potential of ML in the future of IRB modeling and is considering providing a set of principle-based recommendations to ensure its appropriate use.

In view of these prospective recommendations, the EBA published a discussion paper (EBA/DP/2021/04) seeking stakeholders' feedback on the practical use of ML in the context of IRB modeling, with the aim of clarifying supervisory expectations. This article outlines the challenges and opportunities in using ML to develop CRR-compliant IRB models and presents the points of view of various banking stakeholders on the topic.

Current use and potential benefits of ML in IRB models

According to research conducted by the Institute of International Finance (IIF) in 2019, the most common uses of ML within credit risk are credit approval, credit monitoring and collections, and restructuring and recovery. However, the use of ML in other regulatory areas, such as capital requirements, stress testing and provisioning, is highly limited. For IRB models, ML is used to complement the standard models used for calculating capital requirements. Examples of current uses of ML to complement standard models include (i) model validation, where the ML model serves as a challenger model, (ii) data improvements, where ML is used for more efficient data preparation and exploration, and (iii) variable selection, where ML is used to detect explanatory variables.

ML has the potential to provide a range of benefits for risk differentiation, including improvements in the model's discriminatory power¹, identification of relevant risk drivers² and optimization of portfolio segmentation. Thanks to their superior predictive ability and capacity to detect bias, ML models can also help to improve risk quantification³. Furthermore, ML can be used to enhance the data collection and preparation process, leading to improved data quality. Finally, ML models can enable the use of unstructured data, expanding the range of available data sets and allowing new parameters to be introduced and estimated.
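Gains in discriminatory power are usually measured with rank-based statistics such as the area under the ROC curve (AUC) or the Gini coefficient (accuracy ratio). The sketch below is a minimal illustration, assuming made-up scores and default flags, of how a traditional "champion" model could be compared with an ML "challenger", the same set-up used when ML serves as a challenger model in validation. The function names and data are hypothetical.

```python
from __future__ import annotations

from collections.abc import Sequence


def auc(scores: Sequence[float], defaults: Sequence[int]) -> float:
    """Area under the ROC curve via the Mann-Whitney pairwise comparison.

    Over all (defaulter, non-defaulter) pairs, counts how often the defaulter
    receives the higher risk score; ties count as one half.
    """
    bad = [s for s, d in zip(scores, defaults) if d == 1]
    good = [s for s, d in zip(scores, defaults) if d == 0]
    if not bad or not good:
        raise ValueError("need at least one defaulter and one non-defaulter")
    wins = 0.0
    for b in bad:
        for g in good:
            if b > g:
                wins += 1.0
            elif b == g:
                wins += 0.5
    return wins / (len(bad) * len(good))


def gini(scores: Sequence[float], defaults: Sequence[int]) -> float:
    """Gini coefficient (accuracy ratio), i.e. 2 * AUC - 1."""
    return 2.0 * auc(scores, defaults) - 1.0


# Hypothetical toy data: 1 = default observed over the horizon.
defaults = [0, 0, 0, 1, 0, 1, 0, 0, 1, 0]
champion = [0.02, 0.05, 0.03, 0.04, 0.10, 0.08, 0.01, 0.06, 0.07, 0.09]    # e.g. logistic regression
challenger = [0.02, 0.04, 0.03, 0.09, 0.05, 0.12, 0.01, 0.06, 0.08, 0.10]  # e.g. an ML model

print(f"champion Gini:   {gini(champion, defaults):.3f}")    # 0.238
print(f"challenger Gini: {gini(challenger, defaults):.3f}")  # 0.810
```

On a real portfolio the comparison would be run on an out-of-sample or out-of-time data set, and a higher challenger Gini would still have to be weighed against the interpretability concerns discussed in this article.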

Challenges to CRR compliance

The following table summarizes the challenges involved in using ML to develop IRB models that comply with prudential requirements.

| Area | Topic | Article ref. | Challenge |
|---|---|---|---|
| Risk differentiation | Definition of, and assignment criteria for, grades or pools | CRR 171(1)(a) and (b); RTS on AM for IRB 24(1) | The use of ML is constrained when there is no clear economic relationship between the input and output variables. Institutions should explore suitable tools to interpret complex ML models. |
| Risk differentiation | Complementing human judgement | CRR 172(3) and 174(e); GL on PD and LGD 58 | The complexity of ML models may make it more difficult to take expert involvement into account and to analyze the impact of human judgement on model performance. |
| Risk differentiation | Documentation of modeling assumptions and theory behind the model | CRR 175(1) and (2), 175(4)(a); RTS on AM for IRB 41(d) | Documenting a clear outline of the theory, assumptions and mathematical basis of the final assignment of estimates to grades, exposures or pools may be difficult for complex ML models. In addition, the institution's relevant staff should fully understand the model's capabilities and limitations. |
| Risk quantification | Plausibility and intuitiveness of the estimates | CRR 179(1)(a) | ML models can produce non-intuitive estimates, particularly when the structure of the model is not easily interpretable. |
| Risk quantification | Underlying historical observation period | CRR 180(1)(a) and (h), 180(2)(a) and (e), 181(1)(j) and 181(2) | For PD and LGD estimation, the minimum length of the historical observation period is five years. This can be a challenge for big data sources, which might not be available over a sufficiently long time horizon. |
| Validation | Interpreting and resolving validation findings | CRR 185(b) | For ML models, it may be difficult to explain material differences between the realized default rates and the expected range of variability of the PD estimates per grade. The same holds for assessing the effect of the economic cycle on the logic of the model. |
| Validation | Validation tasks | CRR 185 | It may be more difficult to assess representativeness and to fulfill operational data requirements (e.g. data quality and maintenance). Furthermore, the validation function is expected to challenge the model design, assumptions and methodology, and a more complex model is harder to challenge effectively. |
| Governance | Corporate governance | CRR 189 | The institution's management body is required to possess a general understanding of the institution's rating systems and a detailed comprehension of the associated management reports. This may be harder to achieve when the rating systems rely on complex ML models. |
| Operational | Implementation process | CRR 144 and 171; RTS on AM for IRB 11(2)(b) | The complexity of ML models may make it more difficult to verify the correct implementation of internal ratings and risk parameters in IT systems. In particular, heavy reliance on a variety of software packages may make this verification challenging. |
| Operational | Categorization of model changes | CRR 143(3) | If models are updated at a high frequency with time-varying weights associated with variables, it may be difficult to categorize the model changes. Moreover, it is not feasible for the validation function to validate each model iteration. |
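A common first screen for the validation challenge of explaining gaps between realized default rates and the expected variability of PD estimates per grade is a one-sided binomial test per grade. The sketch below is a minimal illustration under stated assumptions: the grade data and function names are hypothetical, and defaults are treated as independent. Because defaults are correlated in practice, a plain binomial test may flag grades too often, so it is usually read alongside correlation-adjusted variants.

```python
from __future__ import annotations

from math import comb


def binomial_p_value(n_obligors: int, n_defaults: int, pd: float) -> float:
    """One-sided p-value P(X >= n_defaults) for X ~ Binomial(n_obligors, pd).

    Computed as 1 - P(X <= n_defaults - 1), which keeps the binomial
    coefficients small when defaults are rare. Assumes independent defaults.
    """
    below = sum(
        comb(n_obligors, k) * pd**k * (1.0 - pd) ** (n_obligors - k)
        for k in range(n_defaults)
    )
    return max(0.0, 1.0 - below)


def flag_grades(grades, alpha: float = 0.05) -> list[str]:
    """Return the grades whose realized defaults are implausibly high for their PD."""
    flagged = []
    for name, n_obligors, pd, n_defaults in grades:
        p_value = binomial_p_value(n_obligors, n_defaults, pd)
        print(
            f"grade {name}: expected {n_obligors * pd:.1f} defaults, "
            f"observed {n_defaults}, p-value {p_value:.4f}"
        )
        if p_value < alpha:
            flagged.append(name)
    return flagged


# Hypothetical grades: (name, obligors at start of year, estimated PD, defaults observed)
grades = [("A", 500, 0.005, 4), ("B", 400, 0.02, 9), ("C", 200, 0.08, 27)]
print("flagged:", flag_grades(grades))  # flagged: ['C']
```

A flagged grade is where the table's challenge bites: with a traditional scorecard the validator can trace the excess defaults back to individual drivers, whereas for an ML model additional interpretability tooling is needed to explain the difference.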

Expectations for a possible and prudent use of ML in IRB modeling

In January 2020, the EBA published a report on recent trends in big data and advanced analytics (BD&AA) in the banking sector. To support technological neutrality (the freedom of institutions to choose the technology most appropriate to their needs and requirements), the BD&AA report recommends the use of ML and suggests safeguards to ensure compliance. Meanwhile, the EBA has provided the following principles to clarify how to adhere to the regulatory requirements set out in the CRR for IRB models.

- All relevant stakeholders should have an appropriate level of knowledge of the model's functioning. This includes the model development unit, the credit risk control unit and the validation unit, but also, to a lesser extent, the management body and senior management.

- Zanders believes that appropriate training on the use of ML for the relevant stakeholders ensures this level of knowledge.

- Institutions should avoid unnecessary complexity in the modeling approach unless it is justified by a significant improvement in predictive capability.

- Zanders advocates focusing on explanatory drivers with significant predictive information, to avoid including an excessive number of drivers. In addition, Zanders advises on which data type (unstructured or more conventional) and which modeling choice (simplistic or sophisticated) is appropriate for the institution, to avoid unnecessary complexity.

- Institutions should ensure that the model is correctly interpreted and understood by relevant stakeholders.

- To ensure that the model is correctly interpreted and understood, Zanders assesses the relationship of each individual risk driver with the output variable (ceteris paribus), the weight of each risk driver to gauge its influence on the model prediction, the economic relationships to ensure plausible and intuitive estimates, and potential biases in the model.

- The application of human judgement in the development of the model and in performing overrides should be understood in terms of economic meaning, model logic, and model behavior.

- Zanders provides expertise in market best practice for applying human judgement in the development and application of the model.

- The parameters of the model should generally be stable. Therefore, institutions should perform sensitivity analysis and identify and monitor the reasons for regular updates.

- Zanders analyses whether a break in economic conditions, in the institution's processes or in the underlying data might justify a model update. Furthermore, Zanders evaluates the changes required to obtain stable parameters over a longer time horizon.

- Institutions should have a reliable validation process that addresses overfitting, challenges the model design, reviews representativeness and data quality, and analyzes the stability of estimates.

- Zanders provides validation activities in line with regulatory requirements.
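Several of the principles above (the ceteris paribus relationship of each risk driver with the output, the weight of each driver, plausible and intuitive estimates, and sensitivity analysis) can be partly operationalized with ceteris paribus profiles: vary one driver over a grid while holding the others fixed and check that the predicted PD moves in the economically expected direction. The sketch below is a minimal illustration in which the model, the driver names, the reference obligor and the expected signs are all hypothetical; the PD range per driver is only a crude influence measure, and techniques such as permutation importance or Shapley values are alternatives.

```python
from __future__ import annotations

import math


def toy_pd_model(x: dict[str, float]) -> float:
    """Hypothetical stand-in for a fitted ML model (coefficients invented).

    The quadratic coverage term deliberately makes PD rise again for high
    interest coverage, an economically non-intuitive pattern.
    """
    z = (-4.0 + 3.0 * x["leverage"] - 0.4 * x["interest_coverage"]
         + 0.05 * x["interest_coverage"] ** 2)
    return 1.0 / (1.0 + math.exp(-z))


def ceteris_paribus(model, reference, driver, grid):
    """PD as one driver moves over `grid`, all other drivers held at `reference`."""
    profile = []
    for value in grid:
        point = dict(reference)
        point[driver] = value
        profile.append(model(point))
    return profile


def is_monotone(profile, expected_sign):
    """True if the profile only moves in the economically expected direction."""
    steps = [b - a for a, b in zip(profile, profile[1:])]
    return all(step * expected_sign >= 0 for step in steps)


reference = {"leverage": 0.6, "interest_coverage": 3.0}   # a typical obligor
expected_sign = {"leverage": +1, "interest_coverage": -1}  # economic intuition
grids = {
    "leverage": [0.2 * i for i in range(11)],            # 0.0 to 2.0
    "interest_coverage": [float(i) for i in range(11)],  # 0 to 10
}

for driver, sign in expected_sign.items():
    profile = ceteris_paribus(toy_pd_model, reference, driver, grids[driver])
    spread = max(profile) - min(profile)  # crude measure of the driver's influence
    status = "plausible" if is_monotone(profile, sign) else "CHECK: non-monotone"
    print(f"{driver:18s} PD range {spread:.4f}  {status}")
```

Here leverage behaves as expected, while interest coverage is flagged because the toy model's PD starts rising again above a coverage of 4, exactly the kind of non-intuitive estimate that would need an economic explanation or a model change.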

Survey responses

The following stakeholders provided responses to the questions posed in the EBA paper (EBA/DP/2021/04):

- Asociación Española de Banca (AEB) – Spanish Banking Association

- Assilea – Italian Leasing Association

- Association for Financial Markets in Europe (AFME)

- European Association of Co-operative Banks (EACB)

- European Savings and Retail Banking Group (ESBG)

- Fédération Bancaire Française - French Banking Federation (FBF)

- Die deutsche Kreditwirtschaft – The German Banking Industry Committee (GBIC)

- Institute of International Finance (IIF)

- Mazars – audit, tax and advisory firm

- Prometeia SpA – advisory and tech solutions firm (SpA)

- Banca Intesa Sanpaolo – Italian international banking group (IIBG)

A summary of the responses to key questions is provided below.

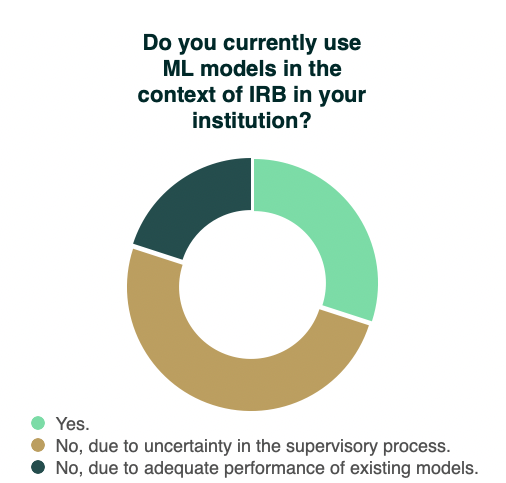

The vast majority of respondents do not currently apply ML for IRB purposes. They argue that they would like to use ML for regulatory capital modeling, but that this is not deemed feasible without explicit regulatory guidelines and certainty in the supervisory process. AEB refers to the report published by the Bank of Spain in February 2021, which concluded that ML models perform better than traditional models in estimating the default rate, and that the potential economic benefits for financial institutions would be significant. EACB and GBIC indicate that ML is currently not needed in regulatory capital modeling, as the predictive power of traditional models proves satisfactory. Three respondents presently use ML to some extent within IRB, for example for risk driver selection and risk differentiation. Only IIBG has actually developed a complete ML model for estimating the PD of an SME retail portfolio, which was validated by the supervisor in 2021.

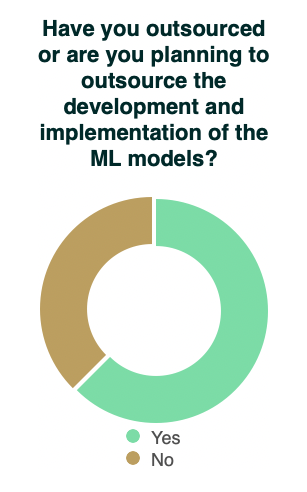

Five respondents state that they would outsource ML modeling for IRB to varying degrees. AEB states that most of the work will be done internally, with consulting services required at peak planning times. According to Mazars, outsourcing mainly concerns the development phase, as banks would take ownership of the model and implement it in their own IT infrastructure. The other respondents that plan to outsource foresee that external support will be required for all phases. The remaining respondents state that they have not noticed any intention to outsource any part or phase of the development and implementation of ML models.

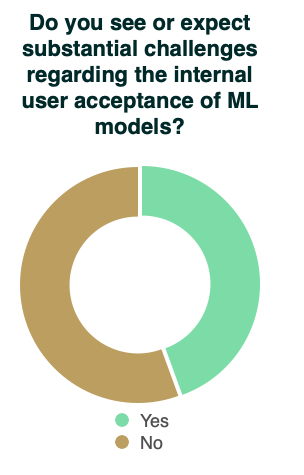

The respondents are split almost evenly on whether internal user acceptance of ML models poses a challenge. The respondents that see substantial challenges attribute this to the limited explainability of ML-driven models and to concerns that business representatives and credit officers are accustomed to understanding and working with the standard approaches. However, for the latter it is recognized that specific training on ML methods is beneficial in this respect. The respondents that do not expect considerable challenges argue that ML models should be treated in the same manner as traditional methods, as the same fundamental principles apply and the key is to ensure that all Lines of Defense have the appropriate skills and responsibilities. Furthermore, these respondents state that existing ML applications, such as in AML, can be leveraged. AFME explains that the techniques already used in those contexts to explain results are proving effective in understanding the outcomes. SpA shares this sentiment and refers to Shapley values and LIME (Local Interpretable Model-agnostic Explanations) as techniques for model interpretability.
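Shapley values attribute a single prediction to the individual risk drivers, so that the contributions add up to the difference between the prediction and a reference prediction. The sketch below computes them exactly by enumerating all coalitions of drivers for a hypothetical three-driver log-odds score; the model, its coefficients and the all-zero baseline are assumptions for illustration, and absent drivers are simply set to the baseline (SHAP-style tools usually average over a background sample instead). LIME, which fits a local surrogate model, is not shown.

```python
from __future__ import annotations

from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of each feature for one prediction.

    Features outside a coalition are set to their baseline value. The cost
    grows as 2**n, so this only suits a handful of features.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                members = set(coalition)
                without_i = [x[j] if j in members else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]
                phi[i] += weight * (model(with_i) - model(without_i))
    return phi


def toy_score(v):
    """Hypothetical log-odds score with an interaction between drivers 0 and 2."""
    return 0.5 * v[0] + 2.0 * v[1] - 1.0 * v[2] + 0.3 * v[0] * v[2]


x = [2.0, 1.0, 3.0]         # obligor being explained
baseline = [0.0, 0.0, 0.0]  # hypothetical reference obligor
phi = shapley_values(toy_score, x, baseline)
print([round(p, 6) for p in phi])  # [1.9, 2.0, -2.1]
# Efficiency: contributions add up to score(x) - score(baseline)
print(round(sum(phi), 6))          # 1.8
```

For a purely linear term the Shapley value reduces to the coefficient times the distance from the baseline (2.0 for driver 1), while the interaction term 0.3 · x₀ · x₂ = 1.8 is split equally between drivers 0 and 2 (0.9 each), which is what makes the attribution readable for credit officers.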

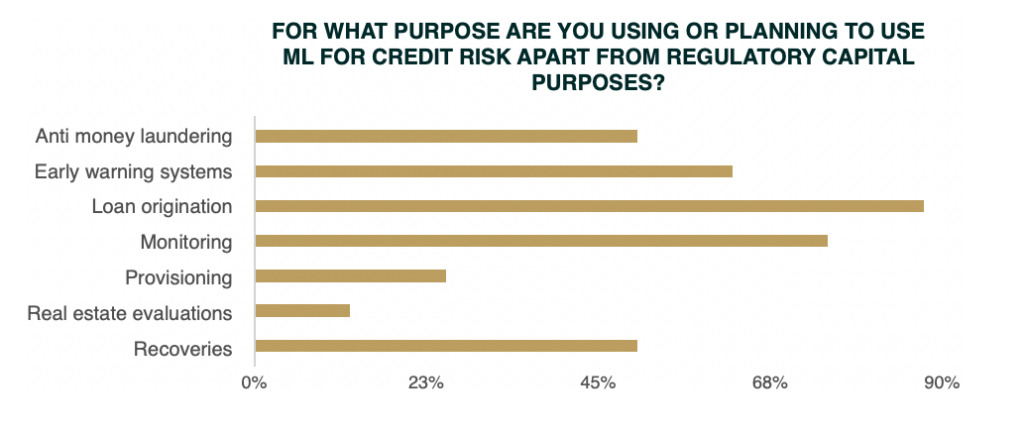

There is consensus among the respondents that ML is suitable for various areas within credit risk. For example, ESBG states that the opportunities for using ML models in credit risk, and their advantages, are endless. The respondents particularly favor applying ML to loan origination (admission), monitoring and early warning systems. In general, institutions already apply ML for these purposes far more than for IRB modeling.

To conclude

Banks are keen to use ML in the context of IRB modeling, given the benefits achievable in both risk differentiation and risk quantification. The main reason for the limited use of ML in IRB modeling is uncertainty in the supervisory process. The ball is currently in the EBA's court. The discussion paper and the prospective set of principle-based recommendations to bridge the gap between institutional and regulatory expectations show the EBA's interest in making ML in IRB modeling a more common reality.

Zanders believes that institutions can best prepare for this transition by already applying ML in other fields, such as AML, application models and KYC. The EBA has identified enhancing the capacity to combat money laundering in the EU as one of its five main priorities for 2023 (EBA/REP/2022/20). This includes supporting the implementation of robust approaches to advance AML. Zanders anticipates that the EBA's technical support in AML will spill over to IRB modeling within the next three years. Zanders supports institutions in applying ML in the aforementioned fields, ensuring that they are adequately prepared to fully reap the rewards once ML in IRB modeling is commonly accepted.

What can Zanders offer?

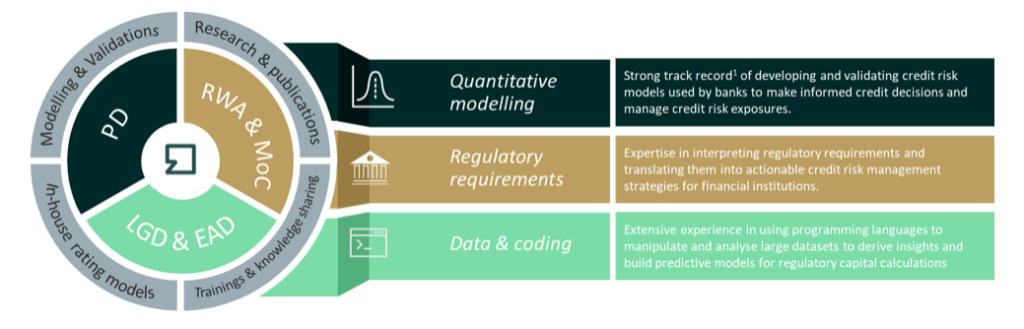

We combine deep credit risk modeling expertise with relevant experience in regulation and programming:

- A Risk Advisory Team consisting of 70+ consultants with quantitative backgrounds (e.g., Econometrics and Physics)

- Strong knowledge of credit risk models

- Extensive experience with calibration and implementation of credit risk models

- We offer ready-to-use rating models, Credit Risk Academy modules and expert sessions that can be tailored to your specific needs.

Interested in ML in credit risk, Credit Risk Academy, and other regulatory capital modeling services? Please feel free to contact Jimmy Tang or Elena Paniagua-Avila.

Footnotes

1 CRR Article 170(1)(f) and (3)(c); RTS on AM for IRB Articles 36(1)(a) and 37(1)(c)

2 CRR Articles 170(3)(a), 170(4) and 171(2); GL on PD and LGD paragraphs 21, 25 and 121

3 RTS on AM for IRB Articles 36(1)(a) and 37(1)(c)