PLA and the RFET: A Perfect FRTB Storm

Banks face challenges with PLA and RFET under FRTB; a unified approach can reduce capital requirements and improve outcomes by addressing shared risk factors.

Despite several global delays to FRTB go-live, many banks are still struggling to prepare for the implementation of profit and loss attribution (PLA) and the risk factor eligibility test (RFET). As both tests have the potential to considerably increase capital requirements, they are high on the agenda for most banks that are attempting to use the internal models approach (IMA).

In this article, we explore the difficulties with both tests and also highlight some underlying similarities. By leveraging these similarities to develop a unified PLA and RFET system, we describe how PLA and RFET failures can be avoided to reduce the potential capital requirements for IMA banks.

Difficulties with PLA

Since its introduction into the FRTB framework by the Basel Committee on Banking Supervision (BCBS), the PLA test has been a consistent cause for concern for banks attempting to use the IMA. The test is designed to ensure that Front Office (FO) and Risk P&Ls are sufficiently aligned. As such, it ensures that banks’ internal models for market risk accurately reflect the risk they are exposed to. To assess this alignment, the PLA test compares the Hypothetical P&L (HPL) from the FO with the risk-theoretical P&L (RTPL) from Risk using two statistical tests - the Spearman correlation and the Kolmogorov-Smirnov (KS) test.

There are potentially significant consequences of trading desks not passing the test. At best, the desk will incur capital add-ons. At worst, the desk will be forced to use the more punitive standardised approach (SA), which may increase capital requirements even more.
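To make the two statistics described above concrete, the following is a minimal Python sketch of the Spearman correlation and the KS statistic between an HPL and an RTPL series, followed by a traffic-light zone assignment. The zone thresholds (0.80 and 0.70 for the correlation, 0.09 and 0.12 for the KS statistic) are not given in this article; they reflect our understanding of the BCBS standard and should be verified against the applicable rule text. All function names are illustrative.

```python
from bisect import bisect_right
from math import sqrt


def average_ranks(values):
    """Return 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation (undefined if either series is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)


def spearman(hpl, rtpl):
    """Spearman correlation = Pearson correlation of the ranks."""
    return pearson(average_ranks(hpl), average_ranks(rtpl))


def ks_statistic(hpl, rtpl):
    """Two-sample KS statistic: largest gap between the empirical CDFs."""
    a, b = sorted(hpl), sorted(rtpl)
    return max(
        abs(bisect_right(a, p) / len(a) - bisect_right(b, p) / len(b))
        for p in a + b
    )


def pla_zone(hpl, rtpl):
    """Assumed thresholds; check them against the regulation before use."""
    rho, ks = spearman(hpl, rtpl), ks_statistic(hpl, rtpl)
    if rho < 0.70 or ks > 0.12:
        return "red"
    if rho > 0.80 and ks < 0.09:
        return "green"
    return "amber"
```

In practice the inputs would be a desk's daily HPL and RTPL over the observation window. As noted below, computing the statistics is the easy part; explaining why a desk lands in the amber or red zone is where the effort goes.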

There are several difficulties with PLA:

- No existing systems: As the test has never before been a regulatory requirement, many banks do not have suitable existing systems and processes which can be leveraged to identify the causes of PLA failures. Although the KS and Spearman tests are easy to implement, isolating the causes of PLA failures can be difficult.

- Risk factor mapping: Banks often do not have accurate and reliable mapping between the risk factors in the FO and Risk models. Remediation of the inaccurate mapping can often be a slow and manual process, making it extremely difficult to identify the risk factors which are causing the PLA failure.

- Data inconsistency: As the data feeds between Risk and FO models can be different, there can be a large number of potential causes of P&L differences. Even small differences in data granularity, convexity capture or even holiday calendars can cause misalignments which may result in PLA failures.

- Hedged portfolios: Well-hedged portfolios often find it more challenging to pass the PLA test. When a portfolio is hedged, its total P&L is reduced, so the same absolute difference between HPL and RTPL becomes a larger relative error than in an unhedged portfolio, potentially causing PLA failures. You can read more about this topic in our other blog post, ‘To Hedge or Not to Hedge: Navigating the Catch-22 of FRTB’s PLA Test’.

Issues with the RFET

The RFET ensures that all risk factors in the internal model have a minimum level of liquidity and enough market data to be modelled accurately. Liquidity is measured by the number of real price observations recorded in the past 12 months. Any risk factors that do not meet the minimum liquidity standards outlined in FRTB are known as non-modellable risk factors (NMRFs). Just as desks that fail the PLA test may have to fall back on the SA, NMRFs must be capitalised using the more conservative stressed expected shortfall (SES) calculation, leading to higher capital requirements. Research shows that NMRFs can account for over 30% of capital requirements, making them one of the most punitive drivers of increased capital within the IMA. The impact of NMRFs is often considered to be disproportionately large and also unpredictable.
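The observation count can be illustrated with a short Python sketch. The pass criteria used here are assumptions, since this article does not state them: at least 100 real price observations in the past 12 months, or at least 24 observations with no 90-day period containing fewer than four. That is our understanding of the BCBS text and should be checked against the applicable rule. The sketch also approximates 12 months as 365 days and counts at most one observation per day. The function name `passes_rfet` is illustrative.

```python
from datetime import date, timedelta


def passes_rfet(observation_dates, as_of, lookback_days=365):
    """Return True if a risk factor meets the assumed modellability criteria."""
    start = as_of - timedelta(days=lookback_days - 1)
    # Keep observations inside the lookback window, at most one per day.
    days = sorted({d for d in observation_dates if start <= d <= as_of})

    # Assumed criterion 1: at least 100 observations in the window.
    if len(days) >= 100:
        return True

    # Assumed criterion 2: at least 24 observations ...
    if len(days) < 24:
        return False

    # ... and no 90-day period with fewer than 4 observations.
    span = timedelta(days=89)  # 90 days inclusive of both end dates
    first = start
    while first + span <= as_of:
        in_window = sum(1 for d in days if first <= d <= first + span)
        if in_window < 4:
            return False
        first += timedelta(days=1)
    return True
```

A risk factor observed weekly passes, while one with 30 observations clustered in the most recent month fails, because the earlier 90-day periods are empty. This is why the timing of observations matters as much as their number.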

There are several difficulties with the RFET:

- Wide scope: The RFET requires all risk factors to be collected across multiple desks and systems. Mapping instruments to risk factors can be a complicated and lengthy process. Consequently, implementing and operationalising the RFET can be difficult.

- Diversification benefit: Modellable risk factors are capitalised using the expected shortfall (ES), which allows for diversification benefits. However, NMRFs are capitalised using the stressed expected shortfall (SES), which does not provide the same benefits, resulting in higher capital requirements.

- Proxy development: Although proxies can be used to overcome a lack of data, developing them can be time-consuming and require considerable effort. Determining proxies requires exploratory work which often has uncertain outcomes. Furthermore, all proxies need to be validated and justified to the regulator.

- Vendor data: It can be difficult for banks to weigh the cost of purchasing external data to increase the number of real price observations against the capital cost of having more NMRFs. Ultimately, the result of the RFET is based on a bank’s access to real price observation data. Although two banks may have identical exposures and risk, they may have completely different capital requirements because of differences in their access to the right data.

The interconnectedness of both tests

Despite their individual difficulties, there are a number of similarities between PLA and the RFET which can be leveraged to ensure efficient implementation of the IMA:

- Although PLA is performed at the desk-level, the underlying risk factors are the same as those which are used for the RFET.

- Both tests potentially impact the ES model as the PLA/RFET outcomes may instigate modifications to the model in order to improve the results. For example, any changes in data source to increase the liquidity of NMRFs (which is a common way to overcome RFET issues) would require PLA to be rerun.

- Ultimately, if any changes are made to the underlying risk factors, both tests must be performed again.

- Hence, although they are relatively simple tests (the Spearman correlation and KS test for PLA, and a count of real price observations for the RFET), banks must develop a reliable architecture to dynamically change risk factors and efficiently rerun PLA and RFET tests.

Zanders’ recommendation

Because the two tests greatly impact one another, a unified PLA-RFET system allows both to be run together and makes it easier for banks to dynamically modify risk factors and improve results for both tests.

- In order to truly have a unified PLA-RFET system, the PLA results must also be brought down to the risk factor level. This is done by understanding and quantifying which risk factors are causing the discrepancies between RTPL and HPL and causing poor PLA statistics. More information about this can be found in our other blog post ‘FRTB: Profit and Loss Attribution (PLA) Analytics’.

- Once the risk factors causing PLA failures have been identified, a unified approach can prioritise risk factors which, if remediated, improve PLA statistics and also efficiently reduce NMRF SES capitalisation.
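One way to picture the prioritisation step is a simple ranking that blends each risk factor's share of the unexplained P&L with its share of NMRF SES capital. The sketch below is purely illustrative and is not a description of any specific methodology: the `RiskFactor` fields, the equal default weighting and the scoring formula are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass
class RiskFactor:
    name: str
    unexplained_pnl: float   # hypothetical: |HPL - RTPL| attributed to this factor
    nmrf_ses_capital: float  # hypothetical: SES charge if non-modellable, else 0


def prioritise(factors, pla_weight=0.5):
    """Rank risk factors by a blended PLA / NMRF remediation score."""
    # Fall back to 1.0 so an all-zero column does not divide by zero.
    total_pnl = sum(f.unexplained_pnl for f in factors) or 1.0
    total_ses = sum(f.nmrf_ses_capital for f in factors) or 1.0

    def score(f):
        pla_share = f.unexplained_pnl / total_pnl
        ses_share = f.nmrf_ses_capital / total_ses
        return pla_weight * pla_share + (1 - pla_weight) * ses_share

    return sorted(factors, key=score, reverse=True)


factors = [
    RiskFactor("A", unexplained_pnl=10.0, nmrf_ses_capital=0.0),
    RiskFactor("B", unexplained_pnl=5.0, nmrf_ses_capital=8.0),
    RiskFactor("C", unexplained_pnl=1.0, nmrf_ses_capital=2.0),
]
ranking = [f.name for f in prioritise(factors)]
```

In this toy example, factor B ranks first even though factor A explains more of the P&L gap, because remediating B helps both tests at once. That is the effect a unified view is meant to surface.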

Conclusion

While PLA is crucial for IMA approval, it presents numerous operational and technical challenges. Similarly, the RFET introduces additional complexities by enforcing strict liquidity and data standards for risk factors, with failing risk factors subject to harsher capital treatments. The interconnected nature of both tests highlights the need for a cohesive strategy, where adjustments to one test can directly influence outcomes in the other. Ultimately, banks need to invest in robust systems that allow for dynamic adjustments to risk factors and efficient reruns of both tests. A unified PLA-RFET approach can streamline processes, reduce capital penalties, and improve test results by focusing on the underlying risk factors common to both assessments.

For more information about this topic and how Zanders can help you design and implement a unified PLA and RFET system, please contact Dilbagh Kalsi (Partner) or Hardial Kalsi (Manager).

Insights into FX Risk in Business Planning and Analysis

Strengthen strategic decision-making by bridging the FX impact gap. Empower Treasury as a proactive partner in predicting and minimizing global and local FX risks through advanced analytics.

In a world of persistent market and economic volatility, the Corporate Treasury function is increasingly taking on a more strategic role in navigating the uncertainties and driving corporate success.

Even in the most mature organizations, the involvement of the Treasury center in FX risk management often begins with collecting forecasted exposures from subsidiaries. However, to fundamentally enhance the performance of the FX risk management process, it is crucial to understand the nature of these FX exposures and their impacts on the upstream business processes where they originate.

Enabling this requires the optimization of the end-to-end FX hedging lifecycle, from subsidiary financial planning and analysis (FP&A) that identifies the exposure to Treasury hedging. Improvements in the exposure identification process and FX impact analytics necessitate the use of intelligent systems and closer cooperation between Treasury and business functions.

Traditional models

While the primary goal of local business units is to enhance the performance of their respective operations, fluctuating FX rates will always directly impact the overall financial results and, in many cases, obscure the true business performance of the entity. A common strategy to separate business performance from FX impacts is to use constant budgeting and planning rates for management reporting, where the FX impact is nullified. These budgeting and planning rates typically reflect the most likely hedged rates achieved by Treasury, considering the hedging policies and forecasted hedging horizons. However, this strategy can lead to unexpected shocks in financial reporting and obscure the impacts of FX exposure forecasting and hedging performance.

When these shocks occur, conclusions about their causes, such as over- or under-hedging or unrealistic planning rates, can only be drawn through retrospective analysis of the results. Unfortunately, this analysis often comes too late to address the underlying issues.

The most common Treasury tools used to measure the accuracy of business forecasting are Forecast vs. Forecast and Actual vs. Forecast accuracy reporting. These tools help identify recurring trouble areas that may need improvement. However, while these metrics indicate where forecasting accuracy can be improved, they do not easily translate into a quantification of the predicted or actual financial impact required for business planning purposes.

End-to-End FX risk management in a Treasury 4.x environment

Finance transformation projects, paired with system centralization and standardization, may offer an opportunity to create better integration between Treasury and its business partners, bridging the information gap and providing better insight and early analysis of future FX results. Treasury systems data related to hedging performance, together with improved, up-to-date exposure forecasting, can paint a clearer picture of current performance against the plan.

While some principles may remain the same, such as using planning and budgeting rates to isolate the business performance for analysis, the expected FX impacts at a business level can equally be analyzed and accounted for as part of the regular FP&A processes, answering questions such as:

- What is the expected impact of over- or under-hedging on the P&L?

- What is the expected impact from late hedging of exposures?

- What is the expected impact from misaligned budgeting and planning rates compared to the achieved hedging rates?
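The three questions above can be expressed as a simple variance decomposition. The Python sketch below is a minimal illustration under stated assumptions rather than a prescribed method: it considers a single long foreign-currency exposure, quotes all rates as functional currency per unit of foreign currency, converts any unhedged (or over-hedged) amount at the settlement spot rate, and takes the rate that would have been achieved by hedging on time as a known input. All parameter names are hypothetical.

```python
def fx_impact_breakdown(
    actual_exposure,      # foreign-currency amount that actually materialised
    hedged_amount,        # foreign-currency amount hedged (from the forecast)
    budget_rate,          # planning rate used in management reporting
    on_time_hedge_rate,   # assumed rate available had the hedge been placed on time
    achieved_hedge_rate,  # rate actually achieved by Treasury
    settlement_spot,      # spot rate when the exposure settles
):
    """Split the FX variance against plan into three drivers."""
    # Over- or under-hedging: the unhedged difference settles at spot.
    volume = (actual_exposure - hedged_amount) * (settlement_spot - budget_rate)
    # Late hedging: achieved rate versus the rate available on time.
    late = hedged_amount * (achieved_hedge_rate - on_time_hedge_rate)
    # Planning rate misalignment: on-time hedge rate versus budget rate.
    rate = hedged_amount * (on_time_hedge_rate - budget_rate)
    return {
        "over_under_hedging": volume,
        "late_hedging": late,
        "planning_rate_misalignment": rate,
        "total": volume + late + rate,
    }


result = fx_impact_breakdown(
    actual_exposure=1000.0,
    hedged_amount=800.0,
    budget_rate=1.10,
    on_time_hedge_rate=1.09,
    achieved_hedge_rate=1.08,
    settlement_spot=1.05,
)
```

Under these assumptions, the three components add up to the difference between the realised functional-currency amount (hedged amount at the achieved rate plus the remainder at spot) and the planned amount (actual exposure at the budget rate). In the example, that is 1,074 against 1,100, a shortfall of 26 split into 10 from under-hedging, 8 from late hedging and 8 from the planning rate.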

The Zanders Whitepaper, "Treasury 4.x – The Age of Productivity, Performance, and Steering," outlines the enablers for Treasury to fulfill its strategic potential, identifying Productivity, Performance, and Steering as key areas of focus.

In the area of Performance, the benefits of enhanced insights and up-to-date metrics for forecasting the P&L impacts of FX are clear. Early identification of expected FX impacts in the FP&A processes provides both time and opportunity to respond to risks sooner. Improved insights into the causes of FX impacts offer direction on where issues should be addressed. The outcome should be enhanced predictability of the overall financial results.

Beyond improved Performance, there are further benefits in clearer accountability for the results. Of the three questions above, the first two address timely forecasting accuracy, while the third pertains to the Treasury team's ability to achieve the rates set by the organization. With transparent accountability for the FX impact, Treasury gains an additional tool to steer the organization toward improved budgeting processes and create KPIs to ensure effective strategy implementation. This provides a valuable addition to the commonly used forecast vs. forecast exposure analysis, as the FX impacts resulting from that performance can be easily identified.

Conclusion

Although FP&A processes are crucial for clear strategic decision-making around business operations and financial planning, the FX impact, potentially a significant driver of financial results, is not commonly monitored to the same extent or in the same detail as business operations metrics.

Improving the FX analytics of these processes can largely bridge the information gap between business performance and financial performance. This also allows Treasury to be utilized as a more engaged business partner to the rest of the operations in the prediction and explanation of FX impact, while providing strategic direction on how these impacts can be minimized, both globally and at local operations levels.

Implementing such an end-to-end process may be intimidating, but data and technology improvements embraced in the context of finance transformation projects may open the door to exploring these ideas. With cooperation between Treasury and the business, a true end-to-end FX risk management process may be within reach.

Optimizing Global Payments: The Growing Role of EBICS

Discover the full potential of bank connectivity with EBICS. From strategies for secure and cost-efficient bank connectivity to tips and quick wins and an update on EBICS 3.0, this article provides food for thought for sustainable and secure banking architecture.

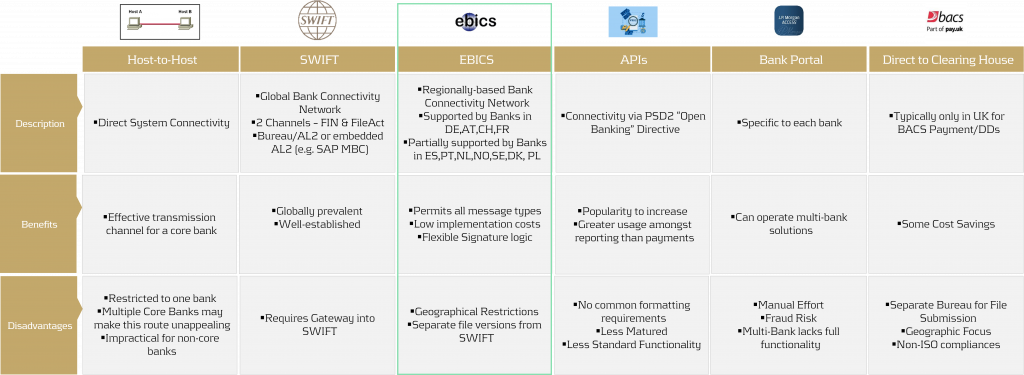

Security in payments is a priority that no corporation can afford to overlook. But how can bank connectivity be designed to be secure, seamless, and cost-effective? What role do local connectivity methods play today, and how sustainable are they? This article provides an overview of various bank connectivity methods, focusing specifically on the Electronic Banking Internet Communication Standard (EBICS). We'll examine how EBICS can be integrated into global bank connectivity strategies, while comparing it to alternative methods. The following section offers a comparison of EBICS with other connectivity solutions.

For a comprehensive overview of bank connectivity methods, including insights from an SAP perspective, we recommend the article Bank connectivity – Making the right choices.

Compared to alternatives, EBICS contracts are cost-effective, and EBICS connectors, along with supporting online banking software, are equally affordable. Whether through standalone solutions provided directly by banks or SAP ERP-integrated systems, EBICS consistently proves to be the most cost-effective option when compared to SWIFT or individual host-to-host connections.

The downside of EBICS? Outside the GSA region (Germany, Switzerland, Austria) and France, there are significant variations and a more diverse range of offerings due to EBICS' regional focus. In this article, we explore potential use cases and opportunities for EBICS, offering insights on how you can optimize your payment connectivity and security.

EBICS at a Glance

EBICS, as a communication standard, comes with three layers of encryption based on Hypertext Transfer Protocol Secure (HTTPS). In addition to a public and a private key, so-called EBICS users are initialized, which can present a significant advantage over alternative connection forms. Unlike Host-to-Host (H2H) and SWIFT, which are pure communication forms, EBICS has an intelligent signature process integrated into its logic, following the signing process logic in the GSA region. EBICS, developed by the German banking industry in 2006, is gaining increasing popularity as a standardized communication protocol between banks and corporates. The reason for this is simple—the unbeatable price-performance ratio achieved through high standardization.

Furthermore, EBICS offers a user-specific signature logic. Primary and secondary signatures can be designated and stored in the EBICS contract as so-called EBICS users. Additionally, deliveries can be carried out with so-called transport users (T-transport signature users).

In practice, the (T) transport signature user is used for tasks such as retrieving account statements, protocols, or sending payments as a file without authorization.

It is worth noting that for the intelligent connectivity of third-party systems or even service providers that create payments on behalf of clients, the T user can be utilized. For example, an HR service provider can send an encrypted payment file using the provided T user to the bank server. The payment file can be viewed and signed separately on the bank server via the relevant treasury or EBICS-compatible banking software.

Furthermore, through EBICS, individual records, and thus personal data in the case of HR payments, can be technically hidden. Only header data, such as the amount and the number of items, is visible to the approver.

Is the signature logic too maintenance-intensive? Fortunately, there is an alternative available to the maintenance of individual users. A so-called Corporate Seal User can be agreed upon with the bank. In this case, the bank issues an EBICS user based on company-related data in the (E) Single signature version. The (E) signature is transmitted directly to the bank for every internally approved payment, which is comparable to connectivity via SWIFT or Host-to-Host.

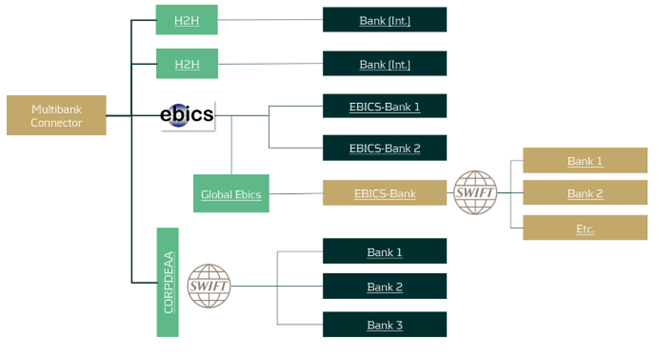

Strategic Adjustments with EBICS

Regional standards like EBICS can be used to connect regional banks and send or receive messages over the bank's internal SWIFT network through a so-called request for forwarding, also known as European Gateway or SWIFT Forwarding. Using this service, it is not necessary to connect every bank directly via Host-to-Host or SWIFT in order to become cost-efficient in your corporate banking.

A SWIFT Forwarding agreement is drafted and signed with your individual bank. Payment files are sent to the bank via a defined order type intended solely for forwarding. The bank acts here as a mere transmitter of the message. Incidentally, the same principle can also be adapted for account statements. Several banks in the GSA region and France proactively market the service as an additional cash management service to their corporate customers. Account statements are centrally collected via the bank's SWIFT network and sent to the corporate via the existing EBICS channel. This procedure saves implementation effort and simplifies maintenance.

Below, we summarize the advantages and disadvantages of integrating SWIFT forwarding via the EBICS channel:

Advantages

- High-maintenance Host-to-Host connections are avoided.

- A dedicated SWIFT connection can be avoided without neglecting the benefits.

Disadvantages

- A bank with a well-developed interbank network is required.

- Transport fees per message may apply.

In general, corporates can take advantage of this specific EBICS setup when dealing with banks that manage a small portion of their transaction volume or when the technical connections with certain banks are more challenging. This approach is especially beneficial for banks that are difficult to access through local connectivity methods and have medium-to-low transaction volumes. However, for banks with high transaction volumes, connectivity via Host-to-Host or even a dedicated SWIFT connection may be more appropriate. Each situation is unique, and we recommend evaluating the best banking connectivity setup on a case-by-case basis to ensure optimal performance and cost-efficiency.

Future of EBICS: Changes Until 11/2025

Since November 2023, banks have been offering EBICS 3.0 as the most recent version. This version becomes binding in the GSA (Germany, Switzerland, Austria) region by approximately November 2025.

Here is a summary of the most important changes:

- Increased Standardization: Local EBICS “flavors” are unified to simplify implementation.

- Enhanced Encryption: Since version 2.5, the minimum encryption level has been 2048 bits. This is continuously increased with the EBICS 3.X version.

- XML Only for EBICS-specifics: Protocols like PTK are migrated to pain.002 HAC.

The new version of EBICS increases the security of the communication standard and makes it more attractive in the EU given its updates. In addition to Germany, Austria, Switzerland, and France, we observe the communication standard is increasingly offered by banks in Spain, Portugal, the Netherlands, as well as in the Nordic countries. Recently, the first banks in Poland have started to offer this communication standard, a rising trend.

In summary, EBICS is a cost-effective and powerful standard that can do much more than just bank connectivity. For companies that mainly use Host-to-Host or SWIFT for bank connectivity within the Eurozone, it may be worthwhile to look at EBICS and consider switching their connectivity method, provided their banking partner offers EBICS.

Uncertainty meets its match

We explore six common challenges facing treasuries today and how Zanders’ Treasury Business Services could help you to ride out the storm.

In brief

- Despite an upturn in the economic outlook, uncertainty remains ingrained into business operations today.

- As a result, most corporate treasuries are experiencing operational challenges that are beyond their control.

- Treasuries that adapt by embedding more resilience and flexibility into their operations will be the ones that forge ahead.

- Zanders created Treasury Business Services to provide a customizable suite of niche expertise and flexible resourcing options for treasuries.

Treasuries today are expected to adapt faster than ever to challenges that are largely beyond their control. Here we outline the six most pressing issues affecting treasuries today that you not only need to be aware of, but also proactively planning for.

The financial headwinds that have weighed down on investment activity, liquidity, and returns in recent times are gradually easing. And while optimism is creeping back into our outlooks, it’s not necessarily an end to uncertainty for treasury teams. That appears to be here to stay, driven by talent shortages, a more transient workforce, an expanding treasury role, large-scale digitalization, the lingering impact of recent global crises, and unexpected opportunities too. Deeply ingrained uncertainty means there are a lot of plates to keep spinning when you’re running a treasury function today. The following six challenges are making this balancing act more arduous for the corporate treasurer, emphasizing the urgent need to build greater resilience and agility into their operations.

1. Peak load scenarios where performance is non-negotiable

There are situations where treasury simply must deliver. Period. Even when it does not have the resources. M&A and IT-related projects are good examples but there are many more. When these peak load scenarios occur, treasuries need to be prepared to handle them in a fast, flexible, and efficient way.

2. Demand for specialist treasury IT knowledge

A 2023 Treasury Technology survey found 53% of respondents were already using a TMS and a further 16% planned to implement within the next two years. It’s undeniable that technology now commands a dominant role in treasury processes, with investment in ERPs, TMSs, payment factories and e-banking portals a priority across the industry. However, the talent to implement and manage these complex IT systems is scarce, and costly to recruit and retain. And even when someone with the right skills and experience is identified and convinced to join, it often becomes apparent that a dedicated full-time employee might be excessive for the requirements of the role. This makes it even more difficult to fill this critical skills gap effectively and cost-efficiently.

3. The paradox of lowering labor costs while delivering more

Treasury has never been a department with a high headcount, but it’s still not immune to company-wide edicts to reduce labor expenditure. In this cost-cutting environment, the best most treasuries can hope for is a cap on their existing headcount. So, while treasuries are increasingly called on to deliver more and faster, they’re required to perform this expanded role without increasing headcount.

4. The evolution from a cost center to a performance-oriented business partner

Like the rest of your company, treasury must show a tangible contribution to the improvement of productivity and performance. This was the subject of our recent white paper – Treasury 4.x, the Age of Productivity, Performance & Steering. There are lots of ways to enable treasury to transition into this new more value-driven role – from introducing more automation, improving methodologies, and increasing use of data, to outsourcing certain activities. But all this comes with additional demands on budget, skills, and resources.

5. The talent pool isn’t sufficient to keep treasuries today afloat

The 2023 Association for Financial Professionals (AFP) Compensation Report suggests it has become increasingly difficult to fill open treasury positions. According to the survey, almost 60% of treasury and finance professionals said their organization was tackling a talent shortage. There are many reasons given for this. A competitive job market is certainly a dominant cause (as stated by 73% of organizations in the survey). But treasury is also facing a dearth of candidates with the necessary skills for their roles (indicated by 47% of organizations in the survey). This suggests that treasury talent shortages stem not only from the general demographic challenges affecting all companies but also from treasury remaining underrepresented in the higher education system.

6. A continually expanding treasury agenda

ESG, increased regulatory demands, the burden of administering digital payments: a constantly shifting treasury landscape not only requires additional resources but also niche skillsets and significant cross-departmental collaboration. In addition, the unprecedented challenges businesses have faced in recent years have placed a spotlight on treasury management as a critical resource for businesses. In 2022, an AFP survey found 35-43% of treasury professionals were reporting a consistent increase in communication between treasury and the CFO. Further to this, a 2023 TIS survey found almost 50% of treasury professionals have become strategic partners with the CFO. This is further fueling an expanded and more complex treasury agenda, creating another pressure on skillsets and resources.

Uncertainty meets its match

These challenges are triggering uncertainty in treasuries. Do you have the resources and skill profiles you need? How can you give your team more bandwidth to deliver a constantly expanding treasury role? Who is going to manage the TMS you’ve just implemented? And what would happen if you unexpectedly lost a member of your team? We created our Treasury Business Services (TBS) solution to support you as you maneuver through uncertainty, enabling you to execute rapid performance improvements when you need them most.

TBS is a special unit of Zanders offering a wide range of niche treasury expertise and flexible resourcing options to treasuries. From running your treasury-IT platform and covering routine back-office tasks, to taking care of highly specialized activities and filling your temporary resource needs – our service is deliberately broad to give you optimal flexibility and more control to shape the support you need.

To find out more, contact Carsten Jäkel.

Calibrating deposit models: Using historical data or forward-looking information?

Historical data is losing its edge. How can banks rely on forward-looking scenarios to future-proof non-maturing deposit models?

After a long period of negative policy rates within Europe, the past two years saw multiple hikes of the overnight rate by central banks in Europe, such as the European Central Bank (ECB), in an effort to combat high inflation. These increases led to tumult in the financial markets and caused banks to adjust the pricing of consumer products to reflect the new circumstances. These developments have given rise to a variety of challenges in modeling non-maturing deposits (NMDs), at a time when accurate and robust models for non-maturing deposits are more important than ever. These models generally consist of multiple building blocks, which together provide a full picture of the expected portfolio behavior. One of these building blocks is the calibration approach for parametrizing the relevant model elements, which is covered in this blog post.

One of the main puzzles risk modelers currently face is the definition of the expected repricing profile of non-maturing deposits. This repricing profile is essential for proper risk management of the portfolio. Moreover, banks need to substantiate modeling choices and subsequent parametrization of the models to both internal and external validation and regulatory bodies. Traditionally, banks used historically observed relationships between behavioral deposit components and their drivers for the parametrization. Because of the significant change in market circumstances, historical data has lost (part of) its forecasting power. As an alternative, many banks are now considering the use of forward-looking scenario analysis instead of, or in addition to, historical data.

The problem with using historical observations

In many European markets, the degree to which customer deposit rates track market rates (repricing) has decreased over the last decade. Repricing first decreased because banks were hesitant to lower rates below zero. Currently, we still observe slower repricing than in past rising interest rate cycles, since interest rate hikes were not directly reflected in deposit rates. The long period of low and even negative interest rates therefore creates a bias in the historical data available for calibration, making the information less representative, especially since the historical data does not cover all parts of the economic cycle. On the other hand, the historical data still contains relevant information on client and pricing behavior, so fully ignoring observed behavior does not seem sensible either.

Therefore, to overcome these issues, Risk and ALM managers should analyze to what extent the historical repricing behavior is still representative for the coming years and whether it aligns with the bank’s current pricing strategy. Here, it could be beneficial for banks to challenge model forecasts with expectations that follow from economic rationale. Given the strategic relevance of the topic, and the impact of the portfolio on the total balance sheet, the bank’s senior management is typically highly involved in this process.

Improving models through forward-looking information

Common sense and an understanding of deposit model dynamics are an integral part of the modeling process. Best practice deposit modeling includes forming a comprehensive set of possible (interest rate) scenarios for the future. To create a proper representation of all possible future market developments, both downward and upward scenarios should be included. The slope of the interest rate scenarios can be adjusted to reflect gradual changes over time, or sudden steepening or flattening of the curve. Pricing experts should be consulted to determine the expected deposit rate developments over time for each of the interest rate scenarios. Deposit model parameters should then be chosen in such a way that the model's estimates provide, on average, the best fit to the scenario analysis.
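The calibration step can be sketched in a few lines of Python. This is a minimal illustration only: it assumes a simple linear pass-through model (deposit rate = alpha + beta x market rate), which is chosen here for simplicity and is not a model prescribed in this article. The scenario names, the function name `calibrate_pass_through` and all rate values are hypothetical. Pooling all scenarios with equal weight in one least-squares fit is one way to obtain parameters that fit the scenario analysis best on average.

```python
from statistics import fmean


def calibrate_pass_through(market_paths, expert_deposit_paths):
    """Fit deposit_rate = alpha + beta * market_rate by ordinary least squares.

    Both arguments are lists of equally long rate paths, one per scenario.
    All scenarios are pooled, so each scenario point carries equal weight.
    """
    r = [x for path in market_paths for x in path]
    d = [y for path in expert_deposit_paths for y in path]
    if len(r) != len(d):
        raise ValueError("market and deposit paths must have the same length")

    r_mean, d_mean = fmean(r), fmean(d)
    var_r = sum((x - r_mean) ** 2 for x in r)
    if var_r == 0:
        raise ValueError("market rates must vary across the scenarios")

    # Closed-form OLS solution for a single regressor plus intercept.
    beta = sum((x - r_mean) * (y - d_mean) for x, y in zip(r, d)) / var_r
    alpha = d_mean - beta * r_mean
    return alpha, beta


# Hypothetical market rate scenarios (in %): upward, downward and flat.
market_scenarios = {
    "up": [2.0, 2.5, 3.0, 3.5],
    "down": [2.0, 1.5, 1.0, 0.5],
    "flat": [2.0, 2.0, 2.0, 2.0],
}
# Hypothetical deposit rates (in %) that pricing experts expect per scenario.
expert_deposit_rates = {
    "up": [0.9, 1.0, 1.2, 1.5],
    "down": [0.9, 0.8, 0.6, 0.4],
    "flat": [0.9, 0.9, 0.9, 0.9],
}

names = list(market_scenarios)
alpha, beta = calibrate_pass_through(
    [market_scenarios[n] for n in names],
    [expert_deposit_rates[n] for n in names],
)
print(f"alpha = {alpha:.3f}, beta (pass-through) = {beta:.3f}")
```

In practice the fitted parameters would be compared with those obtained from historical data, and any material difference discussed with the pricing experts.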

When going through this process in your organization, be aware that the effects of consulting pricing experts go both ways. Risk and ALM managers will improve deposit models by using forward-looking business opinions and the business’ understanding of the market will improve through model forecasts.

Trying to define the most suitable calibration approach for your NMD model?

Would you like to know more about the challenges related to the calibration of NMD models based on historical data? Or would you like a comprehensive overview of the relevant considerations when applying forward-looking information in the calibration process?

Read our whitepaper on this topic: 'A comprehensive overview of deposit modelling concepts'

Supporting Your Treasury Processes with SAP S/4HANA: Cash and Banking First

A webinar by SAP and Zanders explored optimizing treasury processes with SAP S/4HANA, focusing on enhanced cash management, automation, and compliance.

On the 22nd of August, SAP and Zanders hosted a webinar on optimizing your treasury processes with SAP S/4HANA, focusing on how a corporate can benefit from S/4HANA for its cash & banking processes. In this article, we summarize the main topics discussed during this webinar. The speakers came from both SAP, the software supplier of SAP S/4HANA, and Zanders, which provides advisory services in Treasury, Risk and Finance.

The ever-evolving Treasury landscape demands modern solutions to address complex challenges such as real-time visibility, regulatory compliance, and efficient cash management. Recognizing this need, the webinar offered an informative platform to discuss how SAP S/4HANA can be a game-changer for Treasury operations and, specifically, how it can bring efficiency and security to cash & banking processes.

To set the stage, the webinar outlined the pressing issues faced by today's Treasury departments: navigating an increasingly complex regulatory environment, achieving real-time cash visibility, automating repetitive tasks, and managing banking communications efficiently. This introduction underscored the indispensable role that a robust technology platform like SAP S/4HANA can play in overcoming these challenges. The maintenance of consistent bank master data was given as an example of how challenging this can be in a scattered ERP landscape.


SAP S/4HANA: A New Era in Treasury Management

SAP S/4HANA, a next-generation enterprise resource planning (ERP) suite, stands out by offering integrated modules designed to handle various facets of treasury management, thus providing a consolidated view of financial data and enabling a single source of truth.

SAP S/4HANA's Treasury and Risk Management capabilities encompass cash management, financial risk management, payment processing, and liquidity forecasting. These tools are critical for a contemporary Treasury function looking to enhance visibility and control over financial operations.

Streamlined Cash Management

The core of the webinar focused on how SAP S/4HANA revolutionizes cash management. Real-time data analytics and predictive modelling were emphasized as the cornerstones of the platform’s cash management capabilities. The session elaborated on:

- Enhanced Cash Positioning: SAP S/4HANA provides real-time cash positioning, allowing Treasury departments to track cash flows across multiple bank accounts instantly. With the development of the new Fiori app, instant balances can be retrieved directly into the Cash Management Dashboard. This immediate visibility helps in making informed decisions regarding investments or borrowing needs.

- Liquidity Planning and Forecasting: By leveraging historical data and machine learning algorithms, SAP S/4HANA can provide accurate liquidity forecasts. The use of advanced analytics ensures you can anticipate cash shortages and surpluses well ahead of time, thereby optimizing working capital.

Efficient Banking Communications & Payment Processing

Managing communications with multiple banking partners can be a daunting task. SAP S/4HANA automates and streamlines these communications through seamless integration. In addition to this integration, SAP S/4HANA facilitates efficient payment processing by consolidating payment requests and transmitting them to relevant banks through secure channels. This integration not only accelerates transaction execution but also ensures compliance with global payment standards.

Security and Compliance

Data security and compliance with regulatory standards are pivotal in Treasury operations. The experts detailed SAP S/4HANA’s robust security protocols and compliance tools designed to safeguard sensitive financial information. The features highlighted were:

- Data Encryption: End-to-end data encryption ensures that financial data remains secure both in transit and at rest. This is critical for protecting against data breaches and unauthorized access.

- Compliance Monitoring: The platform includes built-in compliance monitoring tools that help organizations adhere to regulatory requirements. Automated compliance checks and audit trails ensure that all Treasury activities are conducted within the legal framework.

S/4HANA sidecar for C&B processes

But how can corporates make use of all these new functionalities in the scattered landscape they often have, and how can such a project be executed efficiently? This is where the S/4HANA sidecar comes in. By integrating with existing ERP systems, the sidecar facilitates centralized bank statement processing, automatic reconciliation, and efficient payment processing. Without disrupting the core functionality in the underlying ERP systems, it supports bank account and cash management, as well as Treasury operations. The sidecar's scalability and enhanced data insights help businesses optimize cash utilization, maintain compliance, and make informed financial decisions, ultimately leading to more streamlined and efficient cash and banking operations. The sidecar allows for a stepping-stone approach that supports an eventual full migration to S/4HANA. This was illustrated by a business case showing how users can now update the posting rules themselves in S/4HANA, supported by AI that runs in the background and suggests improved posting rules.

Conclusion & Next Steps

The webinar concluded with a strong message: SAP S/4HANA provides a transformative solution for Treasury departments striving to enhance their cash and banking processes. By leveraging its comprehensive suite of tools, organizations can achieve greater efficiency, enhanced security, and improved strategic insight into their financial operations.

To explore further how SAP S/4HANA can support your Treasury processes, we encourage you to reach out for personalized consultations. Embrace the future of treasury management with SAP S/4HANA and elevate your cash and banking operations to unprecedented levels of efficiency and control. If you want to further discuss how to make use of SAP S/4HANA or to discuss deployment options and how to get there, please contact Eliane Eysackers.

Why Banks are Shifting to Open-Source Model Software for Financial Risk Management

Model software is essential for financial risk management.

Banks use model software to perform data analytics and statistical modelling and to automate financial processes, making model software essential for financial risk management.

Why banks have recently been moving their model software to open-source¹:

- Volume-dependent costs: Open-source comes with flexible storage space and computation power, so costs scale with the capacity actually used.

- Popularity: Graduates are often trained in open-source software, whereas the pool of skilled professionals in some specific software is decreasing.

- Customization: You can tailor it exactly to your own needs. Nothing more, nothing less.

- Collaboration: Required level of version control and collaboration with other software available within the bank.

- Performance: Customization and flexibility allow for enhanced performance.

Banks often need to reconsider the model software solution they are using; open-source solutions are cost-effective and can be set up in such a way that both internal and external (e.g. regulatory) requirements are satisfied.

How Zanders can support in moving to open-source model software.

Proper implementation of open-source model software is compliant with regulation.

- Replication of historical situations is possible via version control, containerization and release notes within the open-source model software. This allows third parties to replicate historical model outcomes and model development steps.

- Governance setup via open-source ensures that everything is auditable and is released in a governed way; this can be done through release pipelines and by setting roles and responsibilities for software users.

- This ensures that the model goes from development to production only after governed review and approval by the correct stakeholders.

Migration to open-source software is achievable.

- The open-source model software is first implemented including governance (release processes and roles and responsibilities).

- Then all current (data and) models are refactored from the current model software to the new one.

- Only after an extensive period of successful (shadow) testing is the migration to the new model software completed.
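The (shadow) testing step in the list above can be illustrated with a small comparison routine. This is a sketch under stated assumptions, not a description of any particular bank's or Zanders' test framework: the function name `shadow_test`, the output keys and the tolerance values are all hypothetical. It runs on the outputs of the legacy and the refactored model for the same inputs and reports every output that differs beyond a tolerance or is missing on either side.

```python
import math


def shadow_test(legacy_outputs, new_outputs, rel_tol=1e-6, abs_tol=1e-9):
    """Compare two runs of the same model and return all breaks.

    Both arguments map an output name to a numeric value. The result maps
    each breaking name to the pair (legacy value, new value); a value of
    None means the output is missing in that run.
    """
    breaks = {}
    for key in sorted(set(legacy_outputs) | set(new_outputs)):
        old = legacy_outputs.get(key)
        new = new_outputs.get(key)
        missing = old is None or new is None
        if missing or not math.isclose(old, new, rel_tol=rel_tol, abs_tol=abs_tol):
            breaks[key] = (old, new)
    return breaks


# Hypothetical outputs of the same model in the legacy and the new software.
legacy_run = {"pd_retail": 0.0312, "lgd_retail": 0.45, "ead_factor": 1.02}
new_run = {"pd_retail": 0.0312, "lgd_retail": 0.46}

for name, (old, new) in shadow_test(legacy_run, new_run).items():
    print(f"{name}: legacy={old}, new={new}")
```

An empty result over an extended shadow period is one possible acceptance criterion before the legacy software is switched off.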

Zanders has a wealth of experience performing these exercises at several major banks, often in parallel to (or combined with) model change projects. Hence, if you have interest, we will be happy to share some further insights into our latest experiences/solutions in this specific area.

Therefore, we welcome you to reach out to Ward Broeders (Senior Manager).

¹ 80% of Dutch banks are currently using either Python or RStudio as model software.

Unlocking Value in Private Equity: Treasury Optimization as the Strategic Lever in the New Era of Operational Value Creation

In the high-stakes world of private equity, where the pressure to deliver exceptional returns is relentless, the playbook is evolving.

In the high-stakes world of private equity, where the pressure to deliver exceptional returns is relentless, the playbook is evolving. Gone are the days when financial engineering—relying heavily on leveraged buyouts and cost-cutting—was the silver bullet for value creation. Today, the narrative is shifting toward a more sustainable, operationally driven approach, with treasury and finance optimization emerging as pivotal levers in this transformation.

In this article, we blend fictional examples, use cases and other real-world examples to vividly illustrate key concepts and drive our points home.

The Disconnect Between Promised and Delivered Operational Value

Limited Partners (LPs) are becoming more discerning in their investment decisions, increasingly demanding more than just financial returns. They expect General Partners (GPs) to deliver on promises of operational improvements that go beyond mere financial engineering. However, there is often a significant disconnect between the operational value creation promised by GPs and the reality, which frequently relies too heavily on short-term financial tactics.

A report by McKinsey highlights that while 60% of GPs claim to focus on operational improvements, only 40% of LPs feel that these efforts significantly impact portfolio performance. This gap between intention and execution underscores the need for GPs to align their value creation strategies with LP expectations. LPs are particularly focused on consistent investment strategies, strong management teams, and robust operational processes that drive sustainable growth. They view genuine operational value creation as the cornerstone of a repeatable and sustainable investment strategy, offering reassurance that future fund generations will perform consistently.

Treasury: The Unsung Hero of Value Creation

Treasury functions, once seen as mere back-office operations, are increasingly recognized as crucial drivers of value in private equity. These functions—ranging from cash management to financial risk mitigation—are the lifeblood of any portfolio company.

The evolution of treasury functions, now known as Treasury 4.x, has transformed them into pivotal drivers of value. These modernized treasury roles—encompassing advanced cash management and risk mitigation—now align financial operations with broader strategic goals, leveraging technology and data analytics to optimize performance.

Yet, many firms struggle to appreciate just how much inefficiencies in these areas can erode value. Poor liquidity management, fragmented cash operations, and outdated financial processes can strangle a company's ability to invest in growth and hamstring its potential to capitalize on market opportunities.

Consider the example of a mid-sized European manufacturing firm acquired by a private equity investor. Initially, the focus was solely on scaling revenue by entering new markets. However, it soon became apparent that fragmented treasury operations were hemorrhaging resources, particularly due to decentralized cash management systems across multiple jurisdictions.

By centralizing these operations into a single source of truth, such as a treasury management system (TMS), the company was able to cut down on redundant processes, improve visibility and central control over cash, and reduce external borrowing costs and cash-related operational costs by 20%. This freed up capital that was then reinvested into R&D and expansion efforts, positioning the firm to seize new growth opportunities with agility.

Cash Flow Forecasting: The Financial Crystal Ball

In the realm of private equity, where every dollar counts, cash flow forecasting is not just a routine exercise—it’s a strategic imperative. Accurate cash flow forecasting provides a clear window into a company's financial future, offering transparency that is invaluable for both internal decision-makers and external stakeholders, especially Limited Partners (LPs) who demand rigorous insights into their investments.

Take, for example, a mid-market technology firm backed by private equity, poised to launch a groundbreaking product. Initially, the firm’s cash flow forecasts were rudimentary, lacking the sophistication needed to anticipate the working capital needs for the product launch phase. As a result, the company nearly ran into a liquidity crisis that could have delayed the launch.

By overhauling its cash flow forecasting processes and incorporating scenario analysis, the company was able to better anticipate cash needs, secure bridge financing, and ensure a successful product rollout, which ultimately boosted investor confidence.

Navigating Financial Risks in a Volatile World

In today’s unpredictable economic landscape, managing financial risks such as currency fluctuations and interest rate spikes is more critical than ever. As private equity firms increasingly engage in cross-border acquisitions, the exposure to foreign exchange risk has become a significant concern. Similarly, the current high-interest-rate environment complicates debt management, adding layers of complexity to financial operations.

Consider a global consumer goods company within a private equity portfolio, operating in regions with volatile currencies like Brazil or South Africa. Without a robust FX hedging strategy, the company was previously exposed to unpredictable swings in cash flows due to exchange rate fluctuations, which affected its ability to meet debt obligations denominated in foreign currencies.

By implementing a comprehensive FX hedging strategy, including the use of natural hedges, the firm was able to lock in favorable exchange rates, stabilize its cash flows, and protect its margins. This not only ensured financial stability but also allowed the company to reinvest profits into expanding its footprint in emerging markets.

Treasury in M&A: A Crucial Integration Component

Treasury management is often the linchpin in the success or failure of mergers and acquisitions (M&A). The ability to seamlessly integrate treasury operations is essential for realizing the synergies promised by a merger. Failure to do so can lead to significant financial inefficiencies, eroding the anticipated value.

A cautionary tale can be seen in General Electric’s acquisition of Alstom Power, where unforeseen integration challenges led to substantial restructuring costs. The treasury teams faced difficulties in aligning the cash management systems and integrating different financial cultures, which delayed the realization of synergies and led to missed financial targets.

Conversely, in another M&A scenario involving the merger of two mid-sized logistics firms, a pre-emptive focus on treasury integration—such as harmonizing cash pooling arrangements and consolidating banking relationships—enabled the new entity to achieve cost synergies ahead of schedule, saving millions in operational expenses and improving free cash flow.

Streamlined Treasury and Finance: Driving Strategic Value and Returns in Private Equity

Streamlined treasury and finance operations are crucial for maximizing value in private equity. These enhancements go beyond cost savings, improving a company's agility and resilience by ensuring financial resources are available precisely when needed. This empowers portfolio companies to seize growth opportunities while driving cost savings and better resource utilization through operational efficiency. Optimizing cash management and liquidity also enables companies to better navigate market volatility, reducing risks such as poor cash flow forecasting.

Enhanced transparency and real-time data visibility lead to more informed decision-making, aligning with long-term value creation strategies. This not only strengthens investor confidence but also prepares companies for successful exits by making them more attractive to potential buyers. Improved free cash flow directly boosts the money-on-money multiple, enhancing financial outcomes for private equity investors.

Conclusion

In today’s private equity landscape, the strategic importance of treasury and finance optimization is undeniable. The era of relying solely on financial engineering is over. A comprehensive approach that includes robust treasury management and operational efficiency is now essential for driving sustainable growth and maximizing value. By addressing treasury inefficiencies, private equity firms can unlock significant value, ensuring portfolio companies have the financial health to thrive. This approach meets the rising expectations of Limited Partners, setting the stage for long-term success and profitable exits.

If you're interested in delving deeper into the benefits of strategic treasury management for private equity firms, you can contact Job Wolters.

Basel IV and External Credit Ratings

Explore how Basel IV reforms and enhanced due diligence requirements will transform regulatory capital assessments for credit risk, fostering a more resilient and informed financial sector.

The Basel IV reforms, which are set to be implemented on 1 January 2025 via amendments to the EU Capital Requirements Regulation, have introduced changes to the Standardised Approach for credit risk (SA-CR). The Basel framework is implemented in the European Union mainly through the Capital Requirements Regulation (CRR3) and Capital Requirements Directive (CRD6). The CRR3 changes are designed to address shortcomings in the existing prudential standards by, among other things, introducing a framework with greater risk sensitivity and reducing the reliance on external ratings. Banks need to take action to remain compliant with the CRR. Overall, the share of risk-weighted exposure amounts (RWEA) derived through an external credit rating in the EU-27 remains limited, representing less than 10% of the total RWEA under the SA.

Introduction

The Basel Committee on Banking Supervision (BCBS) identified the excessive dependence on external credit ratings as a flaw within the Standardised Approach (SA), observing that firms frequently used these ratings to compute Risk-Weighted Assets (RWAs) without adequately understanding the associated risks of their exposures. To address this issue, regulators have implemented changes aimed at reducing the mechanical reliance on external credit ratings and encouraging firms to use external credit ratings in a more informed manner. The objective is to diminish the chances of underestimating financial risks and thereby build a more resilient financial industry. Overall, the share of RWAs derived through an external credit rating remains limited, and in Europe it represents less than 10% of the total RWAs under the SA.

The concept of due diligence is pivotal in the regulatory framework. It refers to the rigorous process financial institutions are expected to undertake to understand and assess the risks associated with their exposures fully. Regulators promote due diligence to ensure that banks do not solely rely on external assessments, such as credit ratings, but instead conduct their own comprehensive analysis.

Due diligence is a process performed by banks with the aim of understanding the risk profile and characteristics of their counterparties at origination and thereafter on a regular basis (at least annually). This includes assessing the appropriateness of risk weights, especially when using external ratings. The level of due diligence should match the size and complexity of the bank's activities. Banks must evaluate the operating and financial performance of counterparties, using internal credit analysis or third-party analytics as necessary, and regularly access counterparty information. Climate-related financial risks should also be considered, and due diligence must be conducted both at the solo entity level and at the consolidated level.

Banks must establish effective internal policies, processes, systems, and controls to ensure correct risk weight assignment to counterparties. They should be able to prove to supervisors that their due diligence is appropriate. Supervisors are responsible for reviewing these analyses and taking action if due diligence is not properly performed.

Banks should have methodologies to assess credit risk for individual borrowers and at the portfolio level, considering both rated and unrated exposures. They must ensure that risk weights under the Standardised Approach reflect the inherent risk. If a bank identifies that an exposure, especially an unrated one, has higher inherent risk than implied by its assigned risk weight, it should factor this higher risk into its overall capital adequacy evaluation.

Banks need to ensure they have an adequate understanding of their counterparties’ risk profiles and characteristics. The diligent monitoring of counterparties is applicable to all exposures under the SA. Banks would need to take reasonable and adequate steps to assess the operating and financial condition of each counterparty.

Rating System

External credit assessment institutions (ECAIs) are credit rating agencies recognised by national supervisors. ECAIs play a significant role in the SA through the mapping of each of their credit assessments to the corresponding risk weights. Supervisors will be responsible for assigning an eligible ECAI’s credit risk assessments to the risk weights available under the SA. The mapping of credit assessments should reflect the long-term default rate.

Exposures to banks, exposures to securities firms and other financial institutions, and exposures to corporates will be risk-weighted based on the following hierarchy: the External Credit Risk Assessment Approach (ECRA) and the Standardised Credit Risk Assessment Approach (SCRA).

ECRA: Used in jurisdictions allowing external ratings. If an external rating is from an unrecognized or non-nominated ECAI, the exposure is considered unrated. Also, banks must perform due diligence to ensure ratings reflect counterparty creditworthiness and assign higher risk weights if due diligence reveals greater risk than the rating suggests.

SCRA: Used where external ratings are not allowed. Applies to all bank exposures in these jurisdictions and unrated exposures in jurisdictions allowing external ratings. Banks classify exposures into three grades:

- Grade A: Adequate capacity to meet obligations in a timely manner.

- Grade B: Substantial credit risk, such as repayment capacities that are dependent on stable or favourable economic or business conditions.

- Grade C: Higher credit risk, where the counterparty has material default risks and limited margins of safety.
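The choice between ECRA and SCRA for a bank exposure, as described above, can be sketched as a small decision function. This is a simplified illustration only: the function and parameter names are hypothetical, the ECAI names in the example are placeholders, and the sketch ignores all other eligibility conditions in the regulation.

```python
def select_approach(jurisdiction_allows_external_ratings, rating_ecai, nominated_ecais):
    """Return "ECRA" or "SCRA" for a bank exposure.

    rating_ecai is the ECAI that issued the external rating, or None when
    the exposure has no external rating. A rating from an ECAI that the
    bank has not nominated leaves the exposure unrated for this purpose.
    """
    if not jurisdiction_allows_external_ratings:
        # External ratings may not be used at all: SCRA for every exposure.
        return "SCRA"
    if rating_ecai is None or rating_ecai not in nominated_ecais:
        # Unrated exposures fall back to SCRA.
        return "SCRA"
    return "ECRA"


# Hypothetical usage with placeholder ECAI names.
nominated = {"ECAI_A", "ECAI_B"}
print(select_approach(True, "ECAI_A", nominated))   # rated by a nominated ECAI
print(select_approach(True, "ECAI_X", nominated))   # rated by a non-nominated ECAI
print(select_approach(False, "ECAI_A", nominated))  # external ratings not allowed
```

Under SCRA, the bank would then classify the exposure into Grade A, B or C based on its own assessment of the qualitative criteria listed above.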

The CRR Final Agreement includes a new article (Article 495e) that allows competent authorities to permit institutions to use an ECAI credit assessment assuming implicit government support until December 31, 2029, despite the provisions of Article 138, point (g).

In cases where external credit ratings are used for risk-weighting purposes, due diligence should be used to assess whether the risk weight applied is appropriate and prudent.

If the due diligence assessment suggests an exposure has higher risk characteristics than implied by the risk weight assigned to the relevant Credit Quality Step (CQS) of the exposure, the bank would assign a risk weight at least one CQS higher than the step indicated by the counterparty’s external credit rating.
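The adjustment described above can be sketched as follows. This is an illustration under explicit assumptions: the CQS-to-risk-weight table is a placeholder for corporate exposures and should be checked against the applicable regulatory table before any use, and the function name and the `steps` parameter are hypothetical. The sketch applies the minimum adjustment of one step by default and caps the result at the highest available step.

```python
# Placeholder CQS -> risk weight table for corporate exposures.
# Assumption for illustration only; verify against the applicable regulation.
ILLUSTRATIVE_CORPORATE_RW = {1: 0.20, 2: 0.50, 3: 0.75, 4: 1.00, 5: 1.50, 6: 1.50}


def risk_weight_after_due_diligence(cqs, higher_risk_found, steps=1,
                                    table=ILLUSTRATIVE_CORPORATE_RW):
    """Return the risk weight for an externally rated exposure.

    If due diligence finds higher risk than the rating implies, the risk
    weight is taken from a CQS that is `steps` higher (at least one),
    capped at the highest CQS in the table.
    """
    if cqs not in table:
        raise ValueError(f"unknown credit quality step: {cqs}")
    if steps < 1:
        raise ValueError("the adjustment must be at least one step")
    if higher_risk_found:
        cqs = min(cqs + steps, max(table))
    return table[cqs]


# Hypothetical exposure rated CQS 2.
print(risk_weight_after_due_diligence(2, higher_risk_found=False))
print(risk_weight_after_due_diligence(2, higher_risk_found=True))
```

Note that where two adjacent steps carry the same risk weight in the table, a one-step move does not change the capital requirement, which is why the text speaks of "at least" one step.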

Criticisms of this approach are:

- Banks are mandated to use nominated ECAI ratings consistently for all exposures in an asset class. Requiring banks to carry out due diligence on each and every ECAI rating goes against the principle of consistent use of these ratings.

- When banks apply the output floor, ECAI ratings act as a backstop to internal ratings. If the due diligence implies the need to assign a higher risk weight, the output floor could no longer be used consistently across banks to compare capital requirements.

Implementation Challenges

The regulation requires banks to conduct due diligence to ensure a comprehensive understanding, both at origination and on a regular basis (at least annually), of the risk profile and characteristics of their counterparties. The challenges associated with implementing this regulation can be grouped into three primary categories: governance, business processes, and systems & data.

Governance

The existing governance framework must be enhanced to reflect the new responsibilities imposed by the regulation. This involves integrating the due diligence requirements into the overall governance structure, ensuring that accountability and oversight mechanisms are clearly defined. Additionally, it is crucial to establish clear lines of communication and decision-making processes to manage the new regulatory obligations effectively.

Business Process

A new business process for conducting due diligence must be designed and implemented, tailored to the size and complexity of the exposures. This process should address gaps in existing internal thresholds, controls, and policies. It is essential to establish comprehensive procedures that cover the identification, assessment, and monitoring of counterparties' risk profiles. This includes setting clear criteria for due diligence, defining roles and responsibilities, and ensuring that all relevant staff are adequately trained.

Systems & Data

The implementation of the regulation requires access to accurate and comprehensive data necessary for the rating system. Challenges may arise from missing or unavailable data, which are critical for assessing counterparties' risk profiles. Furthermore, reliance on manual solutions may not be feasible given the complexity and volume of data required. Therefore, it is imperative to develop robust data management systems that can capture, store, and analyse the necessary information efficiently. This may involve investing in new technology and infrastructure to automate data collection and analysis processes, ensuring data integrity and consistency.

Overall, addressing these implementation challenges requires a coordinated effort across the organization, with a focus on enhancing governance frameworks, developing comprehensive business processes, and investing in advanced systems and data management solutions.

How can Zanders help?

As a trusted advisor, we have built a track record of implementing CRR3 across a heterogeneous group of financial institutions. This provides us with an overview of how different entities in the industry deal with the different implementation challenges presented above.

Zanders has been engaged to provide project management for these Basel IV implementation projects. By leveraging the expertise of Zanders' subject matter experts, we ensure an efficient and insightful gap analysis tailored to your bank's specific needs. Based on this analysis, combined with our extensive experience, we deliver customized strategic advice to our clients, impacting multiple departments within the bank. Furthermore, as an independent advisor, we always strive to challenge the status quo and align all stakeholders effectively.

In-depth Portfolio Analysis: Our initial step involves conducting a thorough portfolio scan to identify exposures to both currently unrated institutions and those that rely solely on government ratings. This analysis will help in understanding the extent of the challenge and planning the necessary adjustments in your credit risk framework.

Development of Tailored Models: Drawing from our extensive experience and industry benchmarks, Zanders will collaborate with your project team to devise a range of potential solutions. Each solution will be detailed with a clear overview of the required time, effort, potential impact on Risk-Weighted Assets (RWA), and the specific steps needed for implementation. Our approach will ensure that you have all the necessary information to make informed strategic decisions.

Robust Solutions for Achieving Compliance: Our proprietary Credit Risk Suite cloud platform offers banks robust tools to independently assess and monitor the credit quality of corporate and financial exposures (externally rated or not) as well as determine the relevant ECRA and SCRA ratings.

Strategic Decision-Making Support: Zanders will support your Management Team (MT) in the decision-making process by providing expert advice and impact analysis for each proposed solution. This support aims to equip your MT with the insights needed to choose the most appropriate strategy for your institution.

Implementation Guidance: Once a decision has been made, Zanders will guide your institution through the specific actions required to implement the chosen solution effectively. Our team will provide ongoing support and ensure that the implementation is aligned with both regulatory requirements and your institution’s strategic objectives.

Continuous Adaptation and Optimization: In response to the dynamic regulatory landscape and your bank's evolving needs, Zanders remains committed to advising and adjusting strategies as needed. Whether it's through developing an internal rating methodology, imposing new lending restrictions, or reconsidering business relations with unrated institutions, we ensure that your solutions are sustainable and compliant.

Independent and Innovative Thinking: As an independent advisor, Zanders continuously challenges the status quo, pushing for innovative solutions that not only comply with regulatory demands but also enhance your competitive edge. Our independent stance ensures that our advice is unbiased and wholly in your best interest.

By partnering with Zanders, you gain access to a team of dedicated professionals who are committed to ensuring your successful navigation through the regulatory complexities of Basel IV and CRR3. Our expertise and tailored approaches enable your institution to manage and mitigate risks efficiently while aligning with the strategic goals and operational realities of your bank. Reach out to Tim Neijs or Marco Zamboni for further comments or questions.

REFERENCE

[1] BCBS, The Basel Framework, Basel https://www.bis.org/basel_framework

[2] Regulation (EU) No 575/2013

[3] Directive 2013/36/EU

[4] EBA Roadmap on strengthening the prudential framework

[5] EBA REPORT ON RELIANCE ON EXTERNAL CREDIT RATINGS

Implications of CRR3 for the 2025 EU-wide stress test

An overview of how the new CRR3 regulation impacts banks’ capital requirements for credit risk and its implications for the 2025 EU-wide stress test, based on EBA’s findings.

With the introduction of the updated Capital Requirements Regulation (CRR3), which entered into force on 9 July 2024, the European Union's financial landscape is poised for significant changes. The 2025 EU-wide stress test will be a major assessment of the resilience of banks under these new regulations. This article summarizes the estimated impact of CRR3 on banks’ capital requirements for credit risk, based on the results of a monitoring exercise executed by the EBA in 2022. It also comments on the potential impact of CRR3 on the upcoming stress test, specifically from a credit risk perspective, and describes the potential implications for the banking sector.

The CRR3 regulation, which is the implementation of the Basel III reforms (also known as Basel IV) into European law, introduces substantial updates to the existing framework [1], including increased capital requirements, enhanced risk assessment procedures and stricter reporting standards. Focusing on credit risk, the most significant changes include:

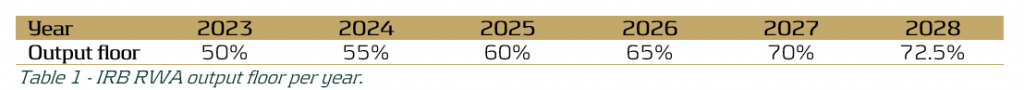

- The phased increase of the output floor on internally modelled capital requirements. From 2028, Risk Weighted Assets (RWA) calculated with internal models cannot fall below 72.5% of the RWA calculated under the Standardised Approach (SA), see Table 1. This floor is applied at consolidated level, i.e. on the combined RWA for credit, market and operational risk.

- A revised SA to enhance robustness and risk sensitivity, via more granular risk weights and the introduction of new asset classes.¹

- Limiting the application of the Advanced Internal Ratings Based (A-IRB) approach to specific asset classes. Additionally, new asset classes have been introduced.²
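The output floor mechanism in the first bullet can be illustrated with a minimal sketch. The function names and the bank figures below are hypothetical and chosen only for illustration; the 72.5% default is the fully phased-in level mentioned above, and the floor is applied to consolidated RWA.

```python
def floored_rwa(rwa_internal: float, rwa_sa: float, floor: float = 0.725) -> float:
    """Total RWA after the output floor: internal-model RWA cannot fall
    below `floor` times the RWA computed under the Standardised Approach."""
    return max(rwa_internal, floor * rwa_sa)


def floor_add_on(rwa_internal: float, rwa_sa: float, floor: float = 0.725) -> float:
    """Additional RWA caused by the floor (zero when the floor does not bind)."""
    return floored_rwa(rwa_internal, rwa_sa, floor) - rwa_internal


# Hypothetical consolidated figures in EUR bn (not taken from the EBA report).
# A model-heavy bank: internal RWA is only 60% of SA RWA, so the floor binds.
model_heavy = floor_add_on(rwa_internal=60.0, rwa_sa=100.0)

# A bank whose internal RWA is already 90% of SA RWA: the floor does not bind.
model_light = floor_add_on(rwa_internal=90.0, rwa_sa=100.0)

print(f"Add-on, model-heavy bank: {model_heavy:.1f}")
print(f"Add-on, model-light bank: {model_light:.1f}")
```

Passing a lower `floor` value for earlier years of the phase-in shows how the add-on grows as the floor rises towards 72.5%.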

After the launch of CRR3 in January 2025, 68 banks from the EU and Norway, including 54 from the Euro area, will participate in the 2025 EU-wide stress test, thus covering 75% of the EU banking sector [2]. In light of this exercise, the EBA recently published its consultative draft of the 2025 EU-wide Stress Test Methodological Note [3], which reflects the regulatory landscape shaped by CRR3. This forward-looking exercise will test the resilience of EU banks to adverse economic conditions within the adjusted regulatory framework, providing essential data for the 2025 Supervisory Review and Evaluation Process (SREP).

The consequences of the updated regulatory framework are an important topic for banks. The changes in the final framework aim to restore credibility in the calculation of RWAs and improve the comparability of banks' capital ratios by aligning definitions and taxonomies between the SA and IRB approaches. To assess the impact of CRR3 on capital requirements, and whether it achieves this aim, the EBA executed a monitoring exercise in 2022 and published the results (refer to the report in [4]).

For this monitoring exercise the EBA used a sample of 157 banks, consisting of 58 Group 1 banks, of which 8 are classified as Global Systemically Important Institutions (G-SIIs), and 99 Group 2 banks. Group 1 banks are defined as banks that have Tier 1 capital in excess of EUR 3 billion and are internationally active. All other banks are labelled as Group 2 banks. In the report the results are separated per group and per risk type.

Looking specifically at the impact on credit risk capital requirements caused by the revised SA and the limitations on the application of IRB, the EBA found that the median increase in current Tier 1 Minimum Required Capital³ (hereafter “MRC”) is approximately 3.2% across all portfolios, i.e. both SA and IRB portfolios. Furthermore, the median impact on current Tier 1 MRC is approximately 2.1% for SA portfolios and 0.5% for IRB portfolios (see [4], page 31). This impact can mainly be attributed to the introduction of new (sub) asset classes with, on average, higher risk weights. The largest increases are expected for ‘equities’, ‘equity investment in funds’ and ‘subordinated debt and capital instruments other than equity’. Under adverse scenarios the impact of more granular risk weights may be magnified, because a larger share of exposures will have lower credit ratings. This may result in an additional impact on RWA.

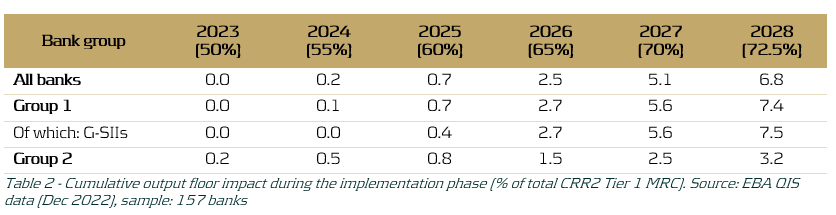

The revised SA results in more risk-sensitive projections of capital requirements over the forecast horizon, due to the more granular risk weights and newly introduced asset classes. This in turn allows banks to identify their risk profile more clearly and provides the EBA with a better overview of the performance of the banking sector as a whole under adverse economic conditions. Additionally, the EBA estimated the impact on RWA of the gradual increase of the output floor shown in Table 1. As shown in Table 2, the gradual elevation of the output floor increasingly affects the MRC throughout the phase-in period (2023-2028).

Table 2 demonstrates that the impact is minimal in the first three years of the phase-in period, but grows significantly in the last three years, with an average estimated 7.5% increase in Tier 1 MRC for G-SIIs in 2028. The larger increase in Tier 1 MRC for Group 1 banks, and G-SIIs in particular, compared to Group 2 banks may be explained by the fact that larger banks more often employ an IRB approach and are thus more heavily impacted by a rising output floor than their smaller counterparts. The expected impact on Group 1 banks is especially interesting in the context of the EU-wide stress test, since only the 68 largest banks in Europe participate in the regulatory stress test. Assuming that banks need to apply the gradually increasing output floor in their projections during the 2025 EU-wide stress test, this could lead to significant increases in capital requirements in the last years of the forecast horizon of the RWA projections. These increases may not be fully attributable to the adverse effects of the provided macroeconomic scenarios.

It is worth noting, however, that the Basel III reforms introduced a transitional cap, which has been adopted in CRR3. This cap limits the increase in total RWAs that results from the output floor during the phase-in period. It is set at 25% of a bank’s RWAs before application of the floor and may be exercised at the discretion of supervisors at national level (see [5]). As a consequence, it may limit the observed increase in RWA during the execution of the 2025 EU-wide stress test.
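The interaction between the output floor and the transitional cap can be sketched as follows. The sketch follows the reading of the Basel III text in [5], under which the floor-driven increase in RWA is limited to 25% of the bank's RWA before application of the floor. This cap base, the function name and all figures are assumptions for illustration; the applicable national implementation should be checked before relying on it.

```python
def capped_floored_rwa(
    rwa_pre_floor: float,
    rwa_sa: float,
    floor: float = 0.725,
    cap: float = 0.25,
) -> float:
    """RWA after the output floor, with the floor-driven increase limited
    to `cap` times the pre-floor RWA (assumed reading of the cap)."""
    floored = max(rwa_pre_floor, floor * rwa_sa)   # output floor
    ceiling = (1.0 + cap) * rwa_pre_floor          # transitional cap
    return min(floored, ceiling)


# Hypothetical figures in EUR bn (not taken from the EBA report).
# Pre-floor RWA of 50 against SA RWA of 100: the floor alone would lift
# RWA to 72.5, but the cap limits the result to 1.25 * 50 = 62.5.
cap_binds = capped_floored_rwa(rwa_pre_floor=50.0, rwa_sa=100.0)

# Pre-floor RWA of 60: the floor lifts RWA to 72.5, below the ceiling of 75,
# so the cap has no effect.
cap_idle = capped_floored_rwa(rwa_pre_floor=60.0, rwa_sa=100.0)

print(f"Cap binds: {cap_binds:.1f}")
print(f"Cap idle:  {cap_idle:.1f}")
```

In this sketch the cap only matters for banks whose internal-model RWA lies far below the floored SA level, which is consistent with the observation that the floor weighs most heavily on model-heavy banks.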

In conclusion, the implementation of CRR3 and its adoption into the 2025 EU-wide stress test methodology may have a significant impact on the stress test results, mainly due to the gradual increase in the output floor but also because of changes in the SA and IRB approaches. However, this effect may be partly mitigated by the transitional 25% cap on the RWA increase caused by the output floor. Additionally, the 2025 EU-wide stress test will provide a comprehensive view of the impact of CRR3, including the closer alignment between the SA and the IRB approaches, on the development of capital requirements in the banking sector under adverse conditions.

References:

[1] final_report_on_amendments_to_the_its_on_supervisory_reporting-crr3_crd6.pdf (europa.eu)

[2] The EBA starts dialogue with the banking industry on 2025 EU-Wide stress test methodology | European Banking Authority (europa.eu)

[3] 2025 EU-wide stress test - Methodological Note.pdf (europa.eu)

[4] Basel III monitoring report as of December 2022.pdf (europa.eu)

[5] Basel III: Finalising post-crisis reforms (bis.org)

Footnotes:

¹ This includes the addition of the ‘Subordinated debt exposures’ asset class, as well as an additional branch of specialized lending exposures within the corporates asset class. Furthermore, a more detailed breakdown of exposures secured by mortgages on immovable property and acquisition, development and construction financing has been introduced.

² For detailed information on the added asset classes and the limited application of IRB, refer to paragraph 25 of the report in [1].

³ Tier 1 capital refers to the core capital held in a bank's reserves. It includes high-quality capital, predominantly in the form of shares and retained earnings that can absorb losses. The Tier 1 MRC is the minimum capital required to satisfy the regulatory Tier 1 capital ratio (ratio of a bank's core capital to its total RWA) determined by Basel and is an important metric the EBA uses to measure a bank’s health.