How BCBS 239’s RDARR Principles Can Strengthen Risk Data Aggregation and Reporting in Financial Institutions

This blog explores how financial institutions can enhance their risk data aggregation and reporting by aligning with BCBS 239’s RDARR principles and the ECB’s supervisory expectations.

The ECB Banking Supervision has identified deficiencies in effective risk data aggregation and risk reporting (RDARR) as a key vulnerability in its planning of supervisory priorities for the 2023-25 cycle and has developed a comprehensive, targeted supervisory strategy for the upcoming years.

Banks are expected to step up their efforts to improve their risk data aggregation and risk reporting capabilities, as the risk data architectures and supporting IT infrastructures of most financial institutions remain insufficient. RDARR principles are therefore expected to become increasingly important during internal model investigations and on-site inspections by the ECB.

In May 2024, the ECB published the Guide on effective risk data aggregation and risk reporting to ensure effective processes are in place to identify, manage, monitor and report the risks the institutions are or might be exposed to. With it, the ECB details its minimum supervisory expectations for a set of priority topics that have been identified as necessary preconditions for effective RDARR.

The ECB identifies seven priority areas that it considers important prerequisites for robust governance arrangements and for effective processes to identify, monitor and report risks. The scope of application of these principles covers reporting, key internal models and other important models (e.g., IFRS 9):

Relevance of BCBS 239

RDARR represents the implementation of the principles set out in BCBS 239, which the Basel Committee on Banking Supervision (BCBS) published in 2013. Compliance with BCBS 239 is essential for meeting regulatory requirements, mitigating risks and enabling data-driven decision-making; non-compliance can result in significant financial penalties, reputational damage and increased scrutiny from regulators. By setting robust standards for risk data aggregation and reporting, BCBS 239 strengthens financial stability, and its principles encourage institutions to adopt data-driven practices that promote resilience, transparency and efficiency. Challenges such as legacy infrastructure, data quality and evolving risks persist, but banks can overcome them through strategic investment in governance, technology and a data-driven culture that builds end-to-end data transparency.

Zanders’ view on supervisory planning

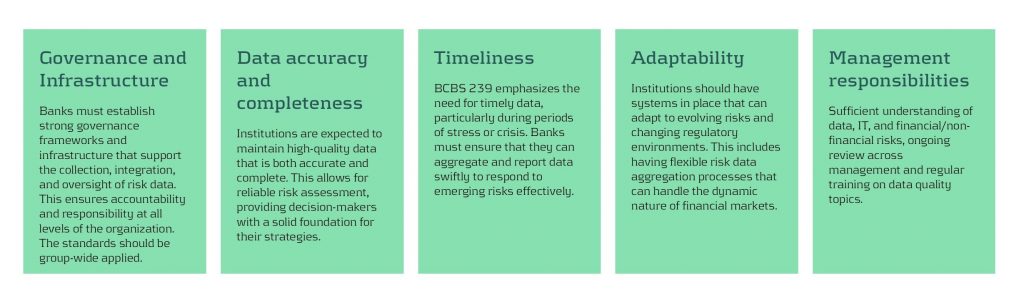

We believe the following 5 topics of the RDARR principles are of major importance for financial institutions:

Establishing an effective program to review and address these topics, considering the nature, size, scale and complexity of each financial institution, will facilitate alignment with the ECB’s expectations.

Zanders’ experience with RDARR implementation

Data is more than a technical database; it is a fundamental component of an organization’s strategic framework. Data-driven organizations are defined not solely by their technological solutions, but by the data culture across the entire organization. At Zanders, we have assisted clients in developing data strategies aligned with RDARR principles and supported the implementation of future-proof data utilization, including the integration of advanced tools such as AI.

One critical observation is that organizations must urgently address key questions regarding their data: What governance structures are currently in place? Are roles and responsibilities within this governance framework clearly defined? Is the governance being effectively implemented as planned? What training, guidance, and support do employees require? Are data definitions and requirements consistently aligned across all stakeholders? When undertaking such an extensive program, institutions must carefully consider whether a top-down or bottom-up approach will be most effective. In the case of RDARR, success necessitates a comprehensive, dual-directional approach that fosters change across all levels.

If you are unsure about your compliance with BCBS 239 and RDARR requirements, contact us today to ensure alignment with best practices.

Converging on resilience: Integrating CCR, XVA, and real-time risk management

In a world where the Fundamental Review of the Trading Book (FRTB) commands much attention, it’s easy for counterparty credit risk (CCR) to slip under the radar.

However, CCR remains an essential element of banking risk management, particularly as it converges with valuation adjustments. This convergence reflects growing regulatory expectations, which were further amplified by recent cases such as Archegos. Furthermore, regulatory focus seems to be shifting, particularly in the U.S., away from the Internal Model Method (IMM) and toward standardised approaches. This article provides strategic insights for senior executives navigating the evolving CCR framework and its regulatory landscape.

Evolving trends in CCR and XVA

Counterparty credit risk (CCR) management has evolved significantly, with banks now integrating it closely with valuation adjustments (XVA), particularly the Credit Valuation Adjustment (CVA), Funding Valuation Adjustment (FVA) and Capital Valuation Adjustment (KVA), to fully account for risks and costs in trade pricing. This integration of XVA into CCR has been driven by the desire for more accurate pricing and capital decisions that reflect the true risk profile of the underlying instruments and positions.

In addition, recent years have seen a marked increase in the use of collateral and initial margin to mitigate CCR. While essential for managing credit exposures, this shifts part of the risk profile into contingent market and liquidity risks, which in turn calls for real-time monitoring and enhanced data capabilities that capture both the credit and the liquidity dimensions of CCR. It also introduces additional risks and modelling challenges around wrong-way risk and clearing counterparty risk.

As banks continue to invest in advanced XVA models and supporting technologies, senior executives must ensure that systems are equipped to adapt to these new risk characteristics, as well as to meet growing regulatory scrutiny around collateral management and liquidity resilience.

The Internal Model Method (IMM) vs. SA-CCR

For calculating CCR exposures, IMM and the Standardised Approach for Counterparty Credit Risk (SA-CCR) offer divergent paths. On the one hand, IMM allows banks to tailor models to specific risks, potentially leading to capital efficiencies. SA-CCR, on the other hand, offers a standardised approach that is straightforward yet conservative. Regulatory trends indicate a shift toward SA-CCR, especially in the U.S., where reliance on IMM is diminishing.
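To make the contrast concrete, the top level of the SA-CCR exposure formula, EAD = alpha × (RC + PFE), can be sketched as below. This is a minimal sketch, assuming a single unmargined netting set and an aggregate add-on that has already been computed; the asset-class add-on formulas and the treatment of margined netting sets are omitted, and the function names are hypothetical. Only the supervisory alpha of 1.4 and the 5% floor on the PFE multiplier are taken from the standard.

```python
import math

ALPHA = 1.4   # supervisory alpha in EAD = alpha * (RC + PFE)
FLOOR = 0.05  # floor on the PFE multiplier


def pfe_multiplier(mtm: float, collateral: float, addon: float) -> float:
    """Scale PFE down when the netting set is out of the money or over-collateralised; capped at 1."""
    if addon <= 0.0:
        return 1.0
    x = (mtm - collateral) / (2.0 * (1.0 - FLOOR) * addon)
    if x >= 0.0:
        return 1.0  # FLOOR + (1 - FLOOR) * exp(x) >= 1 here, so the cap applies
    return FLOOR + (1.0 - FLOOR) * math.exp(x)


def sa_ccr_ead(mtm: float, collateral: float, addon: float) -> float:
    """Exposure at default for one unmargined netting set (simplified)."""
    replacement_cost = max(mtm - collateral, 0.0)
    pfe = pfe_multiplier(mtm, collateral, addon) * addon
    return ALPHA * (replacement_cost + pfe)


# Hypothetical netting sets: one in the money, one out of the money
print(sa_ccr_ead(mtm=10.0, collateral=0.0, addon=5.0))    # alpha * (10 + 5)
print(sa_ccr_ead(mtm=-20.0, collateral=0.0, addon=10.0))  # RC = 0, PFE scaled down
```

Every input is formulaic, with no internal simulation of future exposures, which is what makes the approach straightforward to implement but typically more conservative than a well-calibrated internal model.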

As banks shift towards SA-CCR for regulatory capital and increasingly use IMM for internal purposes, senior leaders may need to re-evaluate whether separate calibrations for CVA and IMM are warranted, or whether CVA data can also inform IMM processes.

Regulatory focus on CCR: Real-time monitoring, stress testing, and resilience

Real-time monitoring and stress testing are taking centre stage following increased regulatory focus on resilience. Evolving guidelines, such as those from the Bank for International Settlements (BIS), emphasise a need for efficiency and convergence between trading and risk management systems. This means that banks must incorporate real-time risk data and dynamic monitoring to proactively manage CCR exposures and respond to changes in a timely manner.

CVA hedging and regulatory treatment under IMM

CVA hedging aims to mitigate counterparty credit spread volatility, which affects portfolio credit risk. However, current regulations limit offsetting CVA hedges against CCR exposures under IMM. This regulatory separation of capital for CVA and CCR leads to some inefficiencies, as institutions can’t fully leverage hedges to reduce overall exposure.
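Why hedging matters is easier to see from the standard discretised unilateral CVA formula, CVA ≈ (1 − R) Σ EE(t_i) · DF(t_i) · PD(t_{i−1}, t_i): CVA moves directly with the counterparty's credit spread. The sketch below assumes a flat credit spread converted into a hazard rate with the "credit triangle" approximation λ ≈ s / (1 − R), a flat discount rate, and independence between exposure and default (so no wrong-way risk). The expected exposure profile is a hypothetical input that would normally come from an exposure simulation, and the function names and default parameters are illustrative.

```python
import math


def cva(times, expected_exposure, spread, recovery=0.4, rate=0.02):
    """Unilateral CVA on a time grid (in years), flat spread and flat discount rate."""
    lgd = 1.0 - recovery
    hazard = spread / lgd  # credit-triangle approximation
    total, prev_t = 0.0, 0.0
    for t, ee in zip(times, expected_exposure):
        # probability of default in the interval (prev_t, t]
        marginal_pd = math.exp(-hazard * prev_t) - math.exp(-hazard * t)
        total += lgd * ee * math.exp(-rate * t) * marginal_pd
        prev_t = t
    return total


def cs01(times, expected_exposure, spread, bump=1e-4, **kwargs):
    """Change in CVA for a 1bp parallel widening of the counterparty spread."""
    return (cva(times, expected_exposure, spread + bump, **kwargs)
            - cva(times, expected_exposure, spread, **kwargs))


# Hypothetical quarterly exposure profile of an amortising 2-year trade
grid = [0.25 * k for k in range(1, 9)]
ee_profile = [100.0 * (1 - t / 2.5) for t in grid]
print(cva(grid, ee_profile, spread=0.01), cs01(grid, ee_profile, spread=0.01))
```

A positive CS01 means the CVA rises when the counterparty's spread widens; this is the sensitivity a credit hedge such as a CDS would offset.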

Ongoing BIS discussions suggest potential reforms for recognising CVA hedges within CCR frameworks, offering a chance for more dynamic risk management. Additionally, banks are exploring CCR capital management through LGD reductions using third-party financial guarantees, potentially allowing for more efficient capital use. For executives, tracking these regulatory developments could reveal opportunities for more comprehensive and capital-efficient approaches to CCR.

Leveraging advanced analytics and data integration for CCR

Emerging technologies in data analytics, artificial intelligence (AI), and scenario analysis are revolutionising CCR. Real-time data analytics provide insights into counterparty exposures but typically come at significant computational costs: high-performance computing can help mitigate this, and, if coupled with AI, enable predictive modelling and early warning systems. For senior leaders, integrating data from risk, finance, and treasury can optimise CCR insights and streamline decision-making, making risk management more responsive and aligned with compliance.

By leveraging advanced analytics, banks can respond proactively to potential CCR threats, particularly in scenarios where early intervention is critical. These technologies equip executives with the tools to not only mitigate CCR but also enhance overall risk and capital management strategies.

Strategic considerations for senior executives: Capital efficiency and resilience

Balancing capital efficiency with resilience requires careful alignment of CCR and XVA frameworks with governance and strategy. To meet both regulatory requirements and competitive pressures, executives should foster collaboration across risk, finance, and treasury functions. This alignment will enhance capital allocation, pricing strategies, and overall governance structures.

For banks facing capital constraints, third-party optimisation can be a viable strategy to manage the demands of SA-CCR. Executives should also consider refining data integration and analytics capabilities to support efficient, resilient risk management that is adaptable to regulatory shifts.

Conclusion

As counterparty credit risk re-emerges as a focal point for financial institutions, its integration with XVA, and the shifting emphasis from IMM to SA-CCR, underscore the need for proactive CCR management. For senior risk executives, adapting to this complex landscape requires striking a balance between resilience and efficiency. Embracing real-time monitoring, advanced analytics, and strategic cross-functional collaboration is crucial to building CCR frameworks that withstand regulatory scrutiny and position banks competitively.

In a financial landscape that is increasingly interconnected and volatile, an agile and resilient approach to CCR will serve as a foundation for long-term stability. At Zanders, we have significant experience implementing advanced analytics for CCR. By investing in robust CCR frameworks and staying attuned to evolving regulatory expectations, senior executives can prepare their institutions for the future of CCR and beyond, thereby avoiding being left behind.

Calibrating deposit models: Using historical data or forward-looking information?

Historical data is losing its edge. How can banks rely on forward-looking scenarios to future-proof non-maturing deposit models?

After a long period of negative policy rates in Europe, the past two years saw multiple hikes of the overnight rate by central banks in Europe, such as the European Central Bank (ECB), in an effort to combat high inflation. These increases caused turmoil in the financial markets and led banks to adjust the pricing of consumer products to the new circumstances. These developments have given rise to a variety of challenges in modeling non-maturing deposits (NMDs), while accurate and robust NMD models are now more important than ever. These models generally consist of multiple building blocks, which together provide a full picture of the expected portfolio behavior. One of these building blocks, covered in this blog post, is the calibration approach used to parametrize the relevant model elements.

One of the main puzzles risk modelers currently face is the definition of the expected repricing profile of non-maturing deposits. This repricing profile is essential for proper risk management of the portfolio. Moreover, banks need to substantiate their modeling choices and the resulting parametrization of the models to internal and external validators as well as to regulators. Traditionally, banks used historically observed relationships between behavioral deposit components and their drivers for the parametrization. Because of the significant change in market circumstances, historical data has lost (part of) its forecasting power. As an alternative, many banks are now considering the use of forward-looking scenario analysis instead of, or in addition to, historical data.

The problem with using historical observations

In many European markets, the degree to which customer deposit rates track market rates (repricing) has decreased over the last decade. Repricing first decreased because banks were hesitant to lower deposit rates below zero. Currently, we still observe slower repricing than in past rising-rate cycles, since interest rate hikes were not directly passed on to deposit rates. The long period of low and even negative interest rates therefore biases the historical data available for calibration and makes it less representative, especially since that data does not cover all phases of the economic cycle. On the other hand, the historical data still contains relevant information on client and pricing behavior, so fully ignoring observed behavior does not seem sensible either.

To overcome these issues, Risk and ALM managers should therefore analyze to what extent historical repricing behavior is still representative of the coming years and whether it aligns with the bank’s current pricing strategy. Here, it could be beneficial for banks to challenge model forecasts with expectations derived from economic rationale. Given the strategic relevance of the topic and the impact of the portfolio on the total balance sheet, the bank’s senior management is typically highly involved in this process.

Improving models through forward looking information

Common sense and an understanding of deposit model dynamics are an integral part of the modeling process. Best-practice deposit modeling includes forming a comprehensive set of possible (interest rate) scenarios for the future. To represent the full range of possible market developments, both downward and upward scenarios should be included. The slope of the interest rate scenarios can be adjusted to reflect gradual changes over time, or a sudden steepening or flattening of the curve. Pricing experts should be consulted to determine the expected deposit rate development over time for each of the interest rate scenarios. Deposit model parameters should then be chosen such that the model’s estimates provide, on average, the best fit to this scenario analysis.
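As a minimal sketch of that last step, assume the deposit model is a simple linear pass-through, deposit_rate = a + b × market_rate, and that pricing experts have supplied a projected deposit rate for each point of each interest rate scenario. The parameters can then be chosen by least squares over all scenario points pooled together; because every scenario path below has the same length, each scenario carries equal weight. The model form, the function name and all scenario figures are hypothetical; real NMD models usually add features such as floors, lags or asymmetric pass-through in rising and falling markets.

```python
def fit_pass_through(scenarios):
    """Pooled least-squares fit of deposit_rate = a + b * market_rate.

    `scenarios` maps a scenario name to a list of (market_rate, expert_deposit_rate) pairs.
    Returns (a, b), where b is the repricing (pass-through) coefficient.
    """
    points = [pair for path in scenarios.values() for pair in path]
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    s_xx = sum((x - mean_x) ** 2 for x, _ in points)
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    b = s_xy / s_xx
    a = mean_y - b * mean_x
    return a, b


# Hypothetical yearly market rates per scenario, with the pricing experts' deposit-rate views
scenarios = {
    "up":   [(0.03, 0.010), (0.04, 0.015), (0.05, 0.020)],
    "base": [(0.03, 0.010), (0.03, 0.010), (0.03, 0.010)],
    "down": [(0.02, 0.005), (0.01, 0.000), (0.00, 0.000)],
}
a, b = fit_pass_through(scenarios)
print(f"intercept={a:.4f}, pass-through={b:.2f}")
```

Comparing the fitted pass-through with one estimated on historical data is one way to make the challenge between observed behavior and forward-looking expert views explicit.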

When going through this process in your organization, be aware that the effects of consulting pricing experts go both ways. Risk and ALM managers will improve deposit models by using forward-looking business opinions and the business’ understanding of the market will improve through model forecasts.

Trying to define the most suitable calibration approach for your NMD model?

Would you like to know more about the challenges related to the calibration of NMD models based on historical data? Or would you like a comprehensive overview of the relevant considerations when applying forward-looking information in the calibration process?

Read our whitepaper on this topic: 'A comprehensive overview of deposit modelling concepts'

Surviving Prepayments: A Comparative Look at Prepayment Modelling Techniques

We investigate different model options for prepayments, among which survival analysis

In brief

- Prepayment modelling can help institutions successfully prepare for and navigate a rise in prepayments due to changes in the financial landscape.

- Two important prepayment modelling types are highlighted and compared: logistic regression vs Cox Proportional Hazard.

- Although the Cox Proportional Hazard model is theoretically preferred under specific conditions, logistic regression is preferred in practice in many scenarios.

The borrowers' option to prepay on their loan induces uncertainty for lenders. How can lenders protect themselves against this uncertainty? Various prepayment modelling approaches can be selected, with option risk and survival analyses being the main alternatives under discussion.

Prepayment options in financial products spell danger for institutions. They inject uncertainty into mortgage portfolios and threaten fixed-rate products with volatile cashflows. To safeguard against losses and stabilize income, institutions must master precise prepayment modelling.

This article delves into the nuances and options regarding the modelling of mortgage prepayments (a cornerstone of Asset Liability Management (ALM)) with a specific focus on survival models.

Understanding the influences on prepayment dynamics

Prepayments are triggered by a range of factors – everything from refinancing opportunities to life changes, such as selling a house due to divorce or moving. These motivations can be grouped into three overarching categories: refinancing factors, macroeconomic factors, and loan-specific factors.

- Refinancing factors

This encompasses key financial drivers (such as interest rates, mortgage rates and penalties) and loan-specific information (including interest rate reset dates and the interest rate differential for the customer). Additionally, the historical momentum of rates and the steepness of the yield curve play crucial roles in shaping refinancing motivations.

- Macroeconomic factors

The overall state of the economy and the conditions of the housing market are pivotal influences on a borrower's inclination to exercise prepayment options. Furthermore, seasonality adds another layer of variability, with prepayments being notably higher in certain months, for example in December, when clients have additional funds from year-end bonuses and holiday budgets.

- Loan-specific factors

The age of the mortgage, the type of mortgage and the nature of the property all contribute to prepayment behavior. The seasoning effect, where the probability of prepayment increases with the age of the mortgage, stands out as a paramount factor.

These factors intricately weave together, shaping the landscape in which customers make decisions regarding prepayments. Prepayment modelling plays a vital role in helping institutions to predict the impact of these factors on prepayment behavior.

The evolution of prepayment modelling

Research on prepayment modelling originated in the 1980s and initially centered around option-theoretic models that assume rational customer behavior. Over time, empirical models that cater for customer irrationality have emerged and gained prominence. These models aim to capture the more nuanced behavior of customers by explaining the relationship between prepayment rates and various other factors. In this article, we highlight two important types of prepayment models: logistic regression and Cox Proportional Hazard (Survival Model).

Logistic regression

Logistic regression (or its close relative, the probit model) is widely employed in prepayment analysis. This is largely because it accommodates the binary dependent variable that indicates whether a prepayment event occurs, and because it is flexible: the model can incorporate mortgage-specific and overall economic factors as regressors, and it can handle time-varying factors and a mix of continuous and categorical variables.

Once the logistic regression model is fitted to historical data, its application involves inputting the characteristics of a new mortgage and relevant economic factors. The model’s output provides the probability of the mortgage undergoing prepayment. This approach is already prevalent in banking practices, and frequently employed in areas such as default modeling and credit scoring. Consequently, it’s favored by many practitioners for prepayment modeling.
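A minimal sketch of that fit-then-predict workflow is shown below, using plain gradient descent on the log-likelihood instead of a statistics package. The single regressor (a hypothetical refinancing incentive in percentage points, i.e. contract rate minus current mortgage rate) and the eight observations are made up for illustration; a real model would be fitted on a loan-month panel with many more regressors, typically with an established statistics library.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_prob(w, x):
    """Probability that a loan with characteristics x prepays in the period."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))


def fit_logistic(X, y, lr=0.1, n_iter=5000):
    """Maximum-likelihood logistic regression via batch gradient descent.

    Returns weights [intercept, beta_1, ..., beta_k].
    """
    k = len(X[0])
    w = [0.0] * (k + 1)
    for _ in range(n_iter):
        grad = [0.0] * (k + 1)
        for xi, yi in zip(X, y):
            err = predict_prob(w, xi) - yi  # gradient of the negative log-likelihood
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad)]
    return w


# Hypothetical observations: refinancing incentive (pp) -> prepaid (1) or not (0)
X = [[-1.0], [0.0], [0.5], [1.0], [1.5], [2.0], [2.0], [3.0]]
y = [0, 0, 0, 1, 0, 1, 0, 1]
w = fit_logistic(X, y)
print(predict_prob(w, [0.0]), predict_prob(w, [2.5]))
```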

Despite its widespread use, the model has drawbacks. While its familiarity in banking makes it simple to implement, it lacks the interpretability of the Proportional Hazard model discussed below. Furthermore, in terms of robustness, a minor drawback is that any month-on-month change in results can be caused by numerous factors, which all affect each other.

Cox Proportional Hazard (Survival model)

The Cox Proportional Hazard (PH) model, developed by Sir David Cox in 1972, is one of the most popular models in survival analysis. It consists of two core parts:

- Survival time. With the Cox PH model, the variable of interest is the time to event. As the model stems from medical sciences, this event is typically defined as death. The time variable is referred to as survival time because it’s the time a subject has survived over some follow-up period.

- Hazard rate. The hazard rate characterizes the distribution of the survival time: it describes the likelihood of the event occurring in the next small time interval, given that the event has not occurred beforehand. The hazard rate is modelled as the product of a baseline hazard (the time development of the hazard rate of an average patient) and a multiplier (the effect of patient-specific variables, such as age and gender). An important property of the model is that the baseline hazard is an unspecified function: no parametric form has to be assumed for it.

To explain how this works in the context of prepayment modelling for mortgages:

- The event of interest is the prepayment of a mortgage.

- The hazard rate is the probability of a prepayment occurring in the next month, given that the mortgage has not been prepaid beforehand. Since the model estimates hazard rates of individual mortgages, it’s modelled using loan-level data.

- The baseline hazard is the typical prepayment behavior of a mortgage over time and captures the seasoning effect of the mortgage.

- The multiplier of the hazard rate is based on mortgage-specific variables, such as the interest rate differential and seasonality.
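Put into a formula, the model for mortgage i in month t reads h_i(t) = h_0(t) · exp(β · x_i(t)). The sketch below evaluates that structure month by month and turns the hazards into a survival (non-prepayment) curve via S(t) = Π (1 − h(s)). It assumes the baseline hazard and coefficients are already known; the values used (a baseline that rises over the first 24 months to mimic seasoning, and coefficients for the rate incentive and a December dummy) are hypothetical. Estimating β from loan-level data via the partial likelihood, which is what lets the Cox model leave h_0(t) unspecified, would be left to a survival-analysis library (for example lifelines' CoxPHFitter in Python). Treating the monthly hazard as a probability is a discrete-time approximation that holds when hazards are small.

```python
import math


def hazard(baseline: float, beta, x) -> float:
    """Proportional hazards: h(t | x) = h0(t) * exp(beta . x), never negative."""
    return baseline * math.exp(sum(b * xi for b, xi in zip(beta, x)))


def survival_curve(baselines, beta, covariates):
    """Probability that the mortgage has not been fully prepaid after each month."""
    s, curve = 1.0, []
    for h0, x in zip(baselines, covariates):
        h = min(hazard(h0, beta, x), 1.0)  # monthly probability approximation, capped at 1
        s *= 1.0 - h
        curve.append(s)
    return curve


months = range(1, 61)
# Hypothetical baseline: rises over the first 24 months (seasoning), then flat
baseline = [0.002 + 0.003 * min(m, 24) / 24 for m in months]
beta = [0.5, 0.3]  # hypothetical coefficients: rate incentive (pp), December dummy


def covariates_for(incentive):
    # assumes origination in January, so every 12th month is a December
    return [(incentive, 1.0 if m % 12 == 0 else 0.0) for m in months]


low = survival_curve(baseline, beta, covariates_for(0.0))
high = survival_curve(baseline, beta, covariates_for(2.0))
print(f"5-year survival: {low[-1]:.3f} (no incentive) vs {high[-1]:.3f} (2pp incentive)")
```

The hazard ratio between two mortgages, exp(β · (x_1 − x_2)), does not depend on h_0(t); this is the "proportional" in proportional hazards and is what makes the coefficients directly interpretable.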

For full prepayments, where the mortgage is terminated after the event, the Cox PH model applies in its primary form. However, partial prepayments (where the event can recur) require an extended version, known as the recurrent event PH model. As a result, when using the Cox PH model, full and partial prepayments should be modelled separately, using slightly different models.

The attractiveness of the Cox PH model is due to several features:

- The interpretability of the model. The model makes it possible to quantify the influence of various factors on the likelihood of prepayment in an intuitive way.

- The flexibility of the model. The model offers the flexibility to handle time-varying factors and a mix of continuous and categorical variables, as well as the ability to incorporate recurrent events.

- The multiplier means the hazard rate can’t be negative. Because the multiplier is the exponential of a weighted sum of the mortgage-specific variables, estimated hazard rates are always non-negative.

Despite the advantages listed above presenting a compelling theoretical case for using the Cox PH model, it faces limited adoption in practical prepayment modelling by banks. This is primarily due to its perceived complexity and unfamiliarity. In addition, when loan-level data is unavailable, the Cox PH model is no longer an option for prepayment modeling.

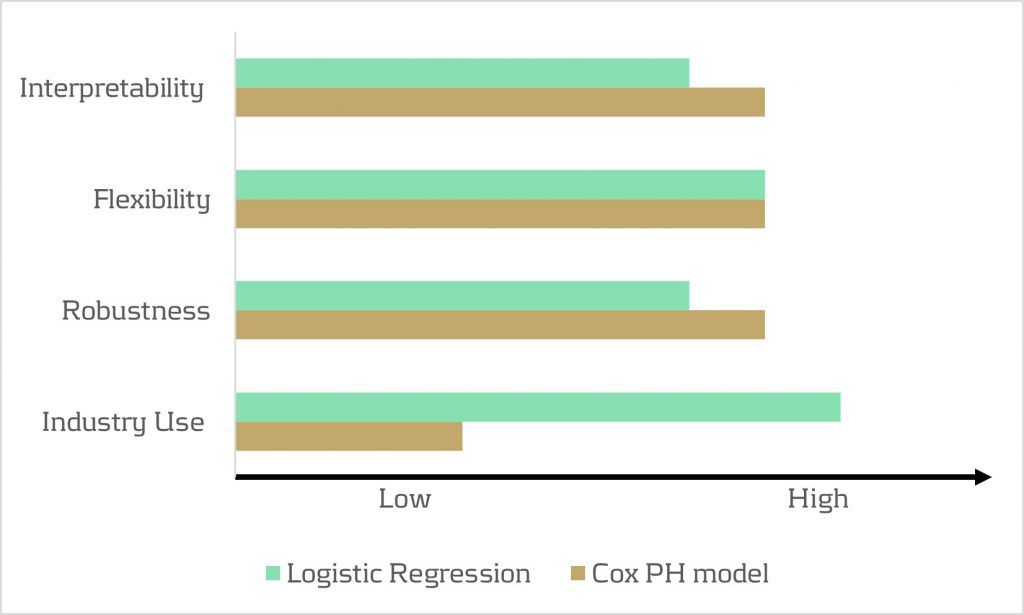

Logistic regression vs Cox Proportional Hazard

In scenarios with individual survival time data and censored observations, the Cox PH model is theoretically preferred over logistic regression. This preference arises because the Cox PH model leverages this additional information, whereas logistic regression focuses solely on binary outcomes, disregarding survival time and censoring.

However, practical considerations also come into play. Research shows that in certain cases, particularly when hazard rates are low, the logistic regression model closely approximates the results of the Cox PH model. Given that prepayment rates in the Netherlands are around 3-10% and the associated hazard rates therefore tend to be low, the performance gap between logistic regression and the Cox PH model is minimal in practice for this application. In addition, the need to build separate PH models for full and partial prepayments places an additional burden on ALM teams.
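One way to see why the gap is small: in discrete time (e.g. loan-months), a proportional hazards model corresponds to a complementary log-log link, p = 1 − exp(−exp(η)), whereas logistic regression uses p = exp(η) / (1 + exp(η)). For small probabilities both are approximately exp(η). The sketch below compares the two links for the same linear predictor η at a few illustrative probability levels. If the 3-10% figures above are read as annual rates, they correspond to monthly prepayment probabilities below 1%, the region where the two links nearly coincide.

```python
import math


def logit_prob(eta: float) -> float:
    """Logistic regression link."""
    return 1.0 / (1.0 + math.exp(-eta))


def cloglog_prob(eta: float) -> float:
    """Complementary log-log link, i.e. a discrete-time proportional hazards model."""
    return 1.0 - math.exp(-math.exp(eta))


for level in (0.002, 0.008, 0.05, 0.30):
    eta = math.log(level)  # choose eta so that exp(eta) equals the illustrative level
    p_log, p_cll = logit_prob(eta), cloglog_prob(eta)
    print(f"exp(eta)={level:.3f}  logit={p_log:.5f}  cloglog={p_cll:.5f}  "
          f"rel. diff={abs(p_log - p_cll) / p_cll:.2%}")
```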

In conclusion, when faced with the absence of loan-level data, the logistic regression model emerges as a pragmatic choice for prepayment modeling. Despite the theoretical preference for the Cox PH model under specific conditions, the real-world performance similarities, coupled with the familiarity and simplicity of logistic regression, provide a practical advantage in many scenarios.

How can Zanders support?

Zanders is a thought leader on IRRBB-related topics. We enable banks to achieve both regulatory compliance and strategic risk goals by offering support from strategy to implementation. This includes risk identification, formulating a risk strategy, setting up an IRRBB governance and framework, and policy or risk appetite statements. Moreover, we have an extensive track record in IRRBB and behavioral models such as prepayment models, hedging strategies, and calculating risk metrics, both from model development and model validation perspectives.

Are you interested in IRRBB-related topics such as prepayments? Contact Jaap Karelse, Erik Vijlbrief, Petra van Meel (Netherlands, Belgium and Nordic countries) or Martijn Wycisk (DACH region) for more information.

Treasury 4.x – The age of productivity, performance and steering


This article highlights key points mentioned in our whitepaper: Treasury 4.x - The age of productivity, performance and steering. You can download the full whitepaper here.

Summary: Resilience amid uncertainty

Tectonic geopolitical shifts leading to fragile supply chains, inflation, volatility in financial markets and the adaptation of business models; fundamental demographic changes leading to capacity and skill shortages in relevant labor markets: a perpetual stream of disruption is pushing businesses to their limits, exposing vulnerabilities in operations, challenging productivity and causing damaging financial consequences. Never has there been a greater need for CFOs to call on their corporate treasury to help steer their business through persistent market and economic volatility. This makes it ever more urgent to advance the role of treasury so it can fulfil this broadened mandate. This is where Treasury 4.x steps in.

Productivity. Performance. Steering.

Treasury deserves a well-recognized place at the CFO's table - not at the edge, but right in the middle. Treasury 4.x recognizes the measurable impact treasury has in navigating uncertainty and driving corporate success. It also outlines what needs to happen to enable treasury to fulfil this strategic potential, focusing on three key areas:

1. Increasing productivity: Personnel, capital, and data – these three factors of production are the source of sizeable opportunities to drive up efficiency, escape an endless spiral of cost-cutting programs and maintain necessary budgets. This can be achieved by investing in highly efficient, IT-supported decision-making processes and further amplified with analytics and AI. Another option is outsourcing activities that require highly specialized expert knowledge but don’t need to be constantly available. It’s also possible to reduce the personnel factor of production through substitution with the data factor of production (in this context knowledge) and the optimization of the capital factor of production. We explain this in detail in Chapter 4 – Unlocking the power of productivity.

2. Performance enhancements: Currency and commodity price risk management, corporate financing, interest rate risk management, cash and liquidity management and, an old classic, working capital management – it’s possible to make improvements across almost all treasury processes to achieve enhanced financial results. Working capital management is of particular importance as it’s synonymous with the focus on cash and therefore, the continuous optimization of processes which are driving liquidity. We unpick each of these performance elements in Chapter 5 – The quest for peak performance.

3. Steering success: Ideologically, the door has opened for the treasurer into the CFO’s room. But many uncertainties remain around how this role and relationship will work in practice, with persistent questions around the nature and scope of the function’s involvement in corporate management and decision-making. In this document, we outline the case for making treasury’s contribution to decision-making parameters available at an early stage, before investment and financing decisions are made. The concept of Enterprise Liquidity Performance Management (ELPM) provides a more holistic approach to liquidity management and long-needed orientation. It recognizes and accounts for cross-functional dependencies and how these impact the balance sheet, income statement and cash flow. Also, the topic of company ratings offers further opportunities for treasury involvement and value-add through the optimization of both tactical and strategic measures in processes such as financing, cash management, financial risk management and working capital management. These are the core subjects we debate in Chapter 6 – The definition of successful steering.

The foundations for a more strategic treasury have been in place for years as part of a concept named Treasury 4.0. But now, as businesses continue to face challenges and uncertainty, it’s time to pick up the pace of change, and to do this, corporate treasury requires a new roadmap.

ISO 20022 XML – An Opportunity to Accelerate and Elevate Receivables Reconciliation


Whether a corporate operates through a decentralized model, a shared service center or even a global business services model, identifying which invoices a customer has paid (and, in some cases, the more basic question of who has actually paid) creates a drag on operational efficiency. Given the increased focus on working capital efficiency, accelerating cash application will improve DSO (Days Sales Outstanding), a key contributor to working capital. As industry adoption of ISO 20022 XML continues to build momentum, Zanders experts Eliane Eysackers and Mark Sutton provide valuable insights into why the latest industry-adopted version of XML, from the 2019 ISO standards maintenance release, presents a real opportunity to drive operational and financial efficiencies in the reconciliation domain.
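For reference, one common definition of DSO is receivables outstanding divided by revenue over a period, multiplied by the number of days in that period (definitions vary between companies). The sketch below, with hypothetical figures, shows the mechanism behind the claim: cash that customers have paid but that has not yet been applied to invoices still sits in open receivables, so applying it faster lowers reported DSO.

```python
from decimal import Decimal


def dso(receivables: Decimal, revenue: Decimal, days: int) -> Decimal:
    """Days Sales Outstanding = receivables / revenue * days in the period."""
    return receivables * days / revenue


# Hypothetical quarter: 4.5m revenue and 1.2m open receivables,
# of which 150k has already been paid by customers but is still unapplied.
revenue = Decimal("4500000")
open_ar = Decimal("1200000")
unapplied_cash = Decimal("150000")

print(dso(open_ar, revenue, 90))                   # before cash application
print(dso(open_ar - unapplied_cash, revenue, 90))  # after applying the received cash
```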

A quick recap on the current A/R reconciliation challenges

Whilst the objective will always be 100% straight-through reconciliation (STR), the account reconciliation challenges fall into four distinct areas:

1. Data Quality

- Partial payment of invoices.

- Single consolidated payment covering multiple invoices.

- Truncated information during end-to-end payment processing.

- Separate remittance information (typically PDF advice via email).

2. In-country Payment Practices and Payment Methods

- The information that the in-country clearing systems are able to carry.

- Different local clearing systems – not all countries offer a direct debit capability.

- Local market practice around preferred collection methods (for example the Boleto in Brazil).

- ‘Culture’ – some countries are less comfortable with the concept of direct debit collections and want full control to remain with the customer when it comes to making a payment.

3. Statement File Format

- Limitations associated with some statement reporting formats: for example, the Swift MT940 has approximately 20 data fields, compared with almost 1,600 XML tags in the ISO XML camt.053 bank statement.

- Partner bank capability limitations in terms of the supported statement formats and how the actual bank statements are generated. For example, some banks still create a camt.053 statement using the MT940 as the data source. This means the corporate effectively receives an MT940 in XML format!

- Market practice: most companies have historically used the Swift MT940 bank statement for reconciliation purposes, but this legacy ‘Swift MT first’ mindset is now being challenged by the broader industry migration to ISO 20022 XML messaging.

4. Technology & Operations

- System limitations on the corporate side that prevent the ERP or TMS from consuming a camt.053 bank statement.

- Limited system-level capabilities for rules-based auto-matching logic.

- Dependency on limited IT resources, and budget pressures for customization.

- No globally standardized system landscape and operational processes.

How can ISO 20022 XML bank statements help accelerate and elevate reconciliation performance?

At a high level, the benefits of ISO 20022 XML financial statement messaging boil down to the richness of data that the ISO 20022 XML messages can support. The data structure is very rich, so each data point should have its own unique XML field. This covers not only more structured information on the actual payment remittance details, but also enhanced data that enables a higher degree of STR, in addition to the opportunity for improved reporting, analysis and, importantly, risk management.
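To make the "one data point, one XML field" idea concrete, the Python sketch below reads a camt.053 entry with the standard-library ElementTree parser and extracts the booked amount, the end-to-end reference and the structured invoice references. The sample message is a hypothetical, heavily trimmed fragment (it is not schema-valid), and the element paths used (`Ntry`, `TxDtls`, `RmtInf/Strd`, `RfrdDocInf/Nb`, `RfrdDocAmt/RmtdAmt`) should be checked against each bank's implementation guide before being relied on.

```python
import xml.etree.ElementTree as ET
from decimal import Decimal

# Namespace of the camt.053.001.08 message version discussed in this article.
NS = {"c": "urn:iso:std:iso:20022:tech:xsd:camt.053.001.08"}

# Hypothetical, heavily trimmed fragment: real statements carry many more
# mandatory elements, so this sample is NOT schema-valid.
SAMPLE = """<Document xmlns="urn:iso:std:iso:20022:tech:xsd:camt.053.001.08">
<BkToCstmrStmt><Stmt><Ntry>
  <Amt Ccy="EUR">1500.00</Amt>
  <CdtDbtInd>CRDT</CdtDbtInd>
  <NtryDtls><TxDtls>
    <Refs><EndToEndId>E2E-0001</EndToEndId></Refs>
    <RmtInf>
      <Strd>
        <RfrdDocInf><Nb>INV-1001</Nb></RfrdDocInf>
        <RfrdDocAmt><RmtdAmt Ccy="EUR">1000.00</RmtdAmt></RfrdDocAmt>
      </Strd>
      <Strd>
        <RfrdDocInf><Nb>INV-1002</Nb></RfrdDocInf>
        <RfrdDocAmt><RmtdAmt Ccy="EUR">500.00</RmtdAmt></RfrdDocAmt>
      </Strd>
    </RmtInf>
  </TxDtls></NtryDtls>
</Ntry></Stmt></BkToCstmrStmt>
</Document>"""


def parse_entries(xml_text):
    """Return one dict per transaction with amount, reference and invoice lines."""
    root = ET.fromstring(xml_text)
    results = []
    for ntry in root.iterfind(".//c:Ntry", NS):
        amt = ntry.find("c:Amt", NS)  # entry-level booked amount
        for tx in ntry.iterfind("c:NtryDtls/c:TxDtls", NS):
            # Each structured remittance block carries one invoice reference and amount.
            invoices = [
                (strd.findtext("c:RfrdDocInf/c:Nb", namespaces=NS),
                 Decimal(strd.findtext("c:RfrdDocAmt/c:RmtdAmt", namespaces=NS)))
                for strd in tx.iterfind("c:RmtInf/c:Strd", NS)
            ]
            results.append({
                "amount": Decimal(amt.text),
                "currency": amt.get("Ccy"),
                "credit": ntry.findtext("c:CdtDbtInd", namespaces=NS) == "CRDT",
                "end_to_end_id": tx.findtext("c:Refs/c:EndToEndId", namespaces=NS),
                "invoices": invoices,
            })
    return results
```

Because every value sits in its own tagged element, no free-text parsing of the kind often needed for MT940 narrative fields is required here.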

Enhanced Data

- Structured remittance information covering invoice numbers, amounts and dates provides the opportunity to automate and accelerate the cash application process, removing the friction around manual reconciliations and reducing exceptions through improved end-to-end data quality.

- Additionally, the latest camt.053 bank statement supports a series of key references, from the originator-generated end-to-end reference to the Swift GPI reference and the partner bank reference.

- Richer FX-related data covering source and target currencies, as well as applied FX rates and associated contract IDs. These values can be mapped into the ERP/TMS system to automatically calculate any realised gain/loss on the transaction, which removes the need for manual reconciliation.

- Fees and charges are reported separately, combined with a rich and very granular BTC (Bank Transaction Code) list, which allows for automated posting to the correct internal G/L account.

- Enhanced related-party information, which is essential when dealing with organizations that operate an OBO (on-behalf-of) model. This additional transparency ensures the ultimate party is visible, which accelerates auto-matching.

- The intraday statement (camt.052) delivers this enhanced data earlier, enabling both the automation and acceleration of reconciliation processes and thereby reducing manual effort. Treasury will see reduced credit risk exposure through the accelerated clearance of payments, allowing the company to release goods from warehouses sooner. This improves customer satisfaction and also optimizes inventory management. Furthermore, intraday updates enable efficient management of cash positions and forecasts, leading to better overall liquidity management.
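As an illustration of how structured remittance data can drive auto-matching, including the partial and consolidated payments listed under Data Quality above, here is a minimal Python sketch. The function name, the ledger dictionary and the exception rules are hypothetical choices rather than a prescribed matching logic; an ERP or TMS would typically apply richer rules and tolerances.

```python
from decimal import Decimal


def match_remittance(entry_amount, remittance, open_items):
    """Apply structured remittance lines to open A/R items (hypothetical rules).

    entry_amount: booked amount of the statement entry.
    remittance:   list of (invoice_number, remitted_amount) pairs from the statement.
    open_items:   hypothetical ledger view, invoice_number -> outstanding amount.
    Returns (cleared, partially_paid, exceptions).
    """
    total = sum((amount for _, amount in remittance), Decimal("0"))
    if total != entry_amount:
        # Remittance detail does not add up to the cash received: manual review.
        return [], {}, list(remittance)

    cleared, partially_paid, exceptions = [], {}, []
    for invoice, amount in remittance:
        outstanding = open_items.get(invoice)
        if outstanding is None or amount > outstanding:
            exceptions.append((invoice, amount))  # unknown invoice or overpayment
        elif amount == outstanding:
            cleared.append(invoice)  # invoice fully settled
        else:
            partially_paid[invoice] = outstanding - amount  # remaining balance
    return cleared, partially_paid, exceptions
```

A single consolidated payment covering several invoices is handled naturally here, because each structured remittance line is matched on its own invoice number.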

Enhanced Risk Management

- Fully structured information will support more effective and efficient compliance, risk management and regulatory processes, at a time of increasing regulatory requirements. The inclusion of the LEI (Legal Entity Identifier) helps identify the parent entity. Unique transaction IDs enable auto-matching with the original hedging contract ID, as well as with credit facilities (letters of credit/bank guarantees).
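The FX data and contract-ID matching described above can be combined in a small calculation: match the statement’s FX contract ID against the TMS, then compute the realised gain or loss against the rate at which the receivable was booked. This is a sketch under stated assumptions: the rate is assumed to be quoted as functional-currency units per one unit of the invoice currency, and the TMS lookup is a hypothetical dictionary rather than any real system interface.

```python
from dataclasses import dataclass
from decimal import Decimal, ROUND_HALF_UP


@dataclass
class StatementFx:
    """FX details taken from a statement entry (hypothetical field mapping)."""
    contract_id: str        # FX contract ID reported on the statement
    source_amount: Decimal  # amount in the invoice (source) currency
    applied_rate: Decimal   # ASSUMED: functional-currency units per 1 source unit


def reconcile_fx(fx, tms_contracts, booking_rate):
    """Match the FX deal to the TMS and compute the realised gain (+) or loss (-).

    tms_contracts: hypothetical TMS view, contract_id -> agreed rate.
    booking_rate:  rate at which the receivable was originally booked.
    """
    agreed_rate = tms_contracts.get(fx.contract_id)
    matched = agreed_rate is not None and agreed_rate == fx.applied_rate
    result = fx.source_amount * (fx.applied_rate - booking_rate)
    return matched, result.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
```

For example, 10,000 units of invoice currency booked at 1.0800 and settled at 1.0850 yield a realised gain of 50.00 in the functional currency.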

In Summary

The ISO 20022 camt.053 bank statement and camt.052 intraday statement provide a clear opportunity to redefine best-in-class reconciliation processes. Whilst camt.053.001.02 has been around since 2009, corporate adoption has not matched the scale of the associated pain.001.001.03 payment message. This is down to a combination of bank and system capabilities, but it is also worth pointing out that the above benefits have not materialised because of the heavy use of unstructured data within the camt.053.001.02 message version.

The new camt.053.001.08 statement message contains enhanced structured data options which, when combined with the CGI-MP (Common Global Implementation – Market Practice) Group implementation guidelines, provide a much greater opportunity to accelerate and elevate the reconciliation process. This is linked to the recommended, more prescriptive approach of a ‘structured data first’ model within the banking community.
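Because camt.053.001.02 and camt.053.001.08 differ in how much structured data they carry, one practical step could be to inventory which message version each bank actually delivers. The sketch below, a hypothetical helper rather than part of any standard tooling, reads the version from the root element’s namespace, which follows the ISO 20022 pattern `urn:iso:std:iso:20022:tech:xsd:<message>.<variant>.<version>`.

```python
import re
import xml.etree.ElementTree as ET

# Matches '{urn:iso:std:iso:20022:tech:xsd:camt.053.001.08}' at the start of a tag.
_NS = re.compile(r"\{urn:iso:std:iso:20022:tech:xsd:([a-z]{4}\.\d{3})\.(\d{3})\.(\d{2})\}")


def message_version(xml_text):
    """Return (message_id, version), e.g. ('camt.053', 8), from the root namespace."""
    root = ET.fromstring(xml_text)
    # ElementTree stores the namespace inside the tag: '{namespace}Document'.
    match = _NS.match(root.tag)
    if match is None:
        raise ValueError(f"not an ISO 20022 document: {root.tag!r}")
    message_id, _variant, version = match.groups()
    return message_id, int(version)
```

Run over a sample of statement files per bank, a helper like this would show quickly which partners still deliver the older, largely unstructured version.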

Finally, linked to the current Swift MT-MX migration, there is now agreement that up to 9,000 characters can be provided as payment remittance information. These 9,000 characters must be contained within the structured remittance information block, subject to bilateral agreement within the cross-border messaging space. Considering the corporate digital transformation agenda, data, and specifically structured data, will be the fuel needed to truly harness the power of artificial intelligence (AI) and machine learning (ML) technology. It’s important to recognize that ISO 20022 XML will be an enabler, helping to deliver on these technologies’ potential for both predictive and prescriptive analytics. This will be a real game-changer for corporate treasury, not only addressing a number of existing and longstanding pain points but also redefining what is possible.

ISO 20022 XML V09 – Is it time for Corporate Treasury to Review the Cash Management Relationship with Banks?


The corporate treasury agenda continues to focus on cash visibility, liquidity centralization, bank/bank account rationalization and, finally, digitization to enable greater operational and financial efficiencies. Digital transformation within corporate treasury is a must-have, not a nice-to-have, and with technology continuing to evolve, the potential opportunities to both accelerate and elevate performance have just been taken to the next level with ISO 20022 becoming the global language of payments. In this sixth article in the ISO 20022 XML series, Zanders experts Fernando Almansa, Eliane Eysackers and Mark Sutton provide some valuable insights into why this latest global industry move should now motivate corporate treasury to consider a cash management RFP (request for proposal) for its banking services.

Why Me and Why Now?

These are both highly relevant questions that corporate treasury should be considering in 2024, given the broader global payments industry migration to ISO 20022 XML messaging. This goes beyond the Swift MT-MX migration in the interbank space, as an increasing number of in-country RTGS (real-time gross settlement) clearing systems are also adopting ISO 20022 XML messaging. Swift currently estimates that by 2025, 80% of domestic high-value RTGS clearing volumes will be ISO 20022-based, with all reserve currencies either live or having declared a live date. As more local market infrastructures migrate to XML messaging, there is the potential to provide richer and more structured information about the payment, to accelerate and elevate compliance and reconciliation processes, and to achieve a simpler, more standardized strategic cash management operating model.

So to help determine if this really applies to you, the following questions should be considered around existing process friction points:

- Is your current multi-banking cash management architecture simplified and standardised?

- Is your accounts receivable process fully automated?

- Are your FX gain/loss calculations fully automated?

- Have you fully automated the G/L account posting?

- Do you have a standard ‘harmonized’ payments message that you send to all your banking partners?

If the answer is yes to all of the above, then you are already following a best-in-class multi-banking cash management model. But if the answer to any of them is no, then it is worth reading the rest of this article, as we now have a paradigm shift in the global payments landscape that presents a real opportunity to redefine best in class.

What is different about XML V09?

Whilst structurally the XML V09 message only contains a handful of additional data points compared with the earlier XML V03 message released back in 2009, the key difference is the changing mindset of the CGI-MP (Common Global Implementation – Market Practice) Group¹, which is recommending a more prescriptive approach to the banking community around adoption of its published implementation guidelines. The original objective of the CGI-MP was to remove the friction that existed in the multi-banking space as a result of the complexity, inefficiency and cost of corporates having to support proprietary bank formats. The adoption of ISO 20022 provided the opportunity to simplify and standardize the multi-banking environment, with the added benefit of a more portable messaging structure. However, even with the work of the CGI-MP group, which produced and published implementation guidelines back in 2009, the corporate community has encountered a significant number of challenges in adopting this global financial messaging standard.

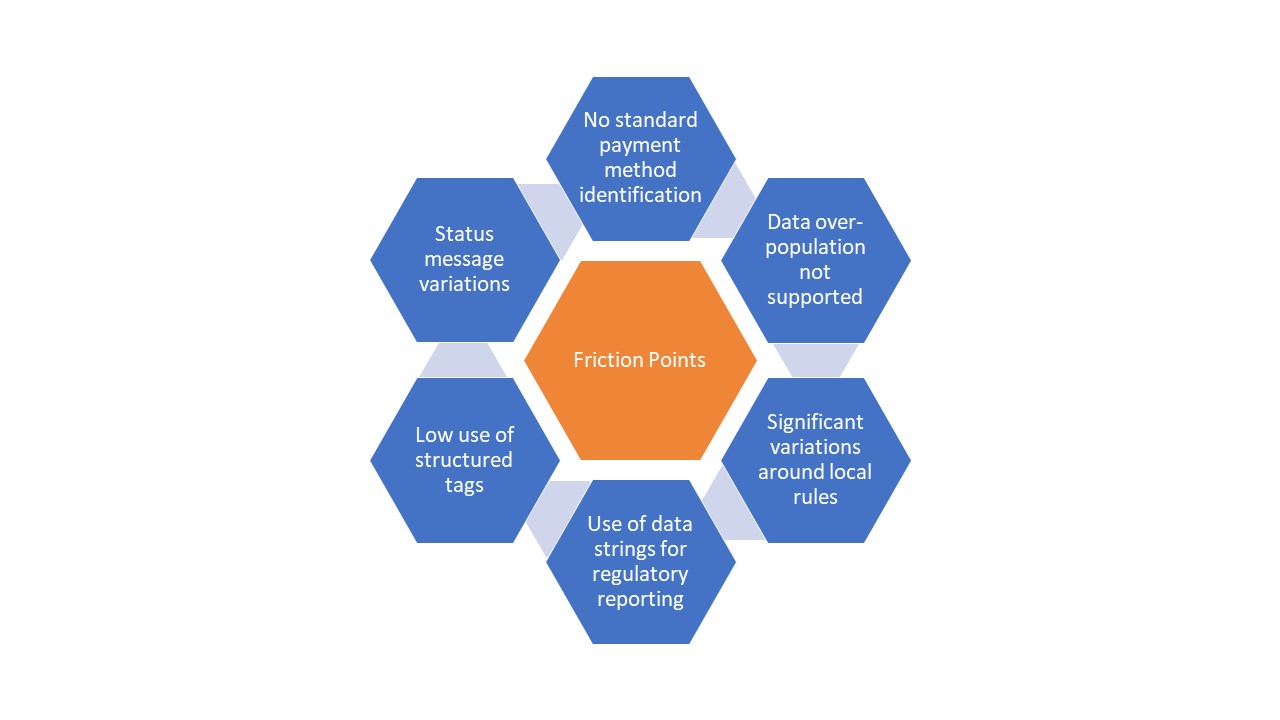

The key friction points are highlighted below:

Diagram 1: Key friction points encountered by the corporate community in adopting XML V03

The highlighted friction points have left the corporate community with a sub-optimal cash management architecture. Banks’ implementations of this standard diverged significantly across a number of aspects, from non-standard payment method codes and payment batching logic to proprietary requirements around regulatory reporting and customer identification. All of this translated into additional complexity, inefficiency and cost on the corporate side.

However, XML V09 offers a real opportunity to simplify, standardise, accelerate and elevate cash management performance, provided the banking community embraces the CGI-MP’s recommended ‘more prescriptive approach’, which will help deliver a win-win situation. This is about more than just a globally standardised payment message: it is about the end-to-end cash management processes, with a ‘structured data first’ mindset that will allow the corporate community to truly harness the power of technology.

What are the objectives of the RFP?

The RFP or RFI (request for information) process will provide the opportunity to understand the current mindset of your existing core cash management banking partners. Are they viewing the MT-MX migration as just a compliance exercise? Do they recognize the importance and benefits to the corporate community of embracing the recently published CGI-MP guidelines? Are they going to follow a structured data first model when it comes to statement reporting? A clearer view of how your current cash management banks are thinking about this important global change will help corporate treasury make a more informed decision on potential future strategic cash management banking partners. More broadly, the RFP will provide an opportunity to ensure your core cash management banks have a strong strategic fit with your business across dimensions such as credit commitment, relationship support for your company and the industry in which you operate, access to senior management and ease of doing business. Furthermore, you will be in a better position to simplify and standardize your banking providers through bank account rationalization, combined with the removal of non-core partner banks from your day-to-day banking operations.

In Summary

The Swift MT-MX migration and global industry adoption of ISO 20022 XML should be viewed as more than just a simple compliance change. This is about the opportunity to redefine a best in class cash management model that delivers operational and financial efficiencies and provides the foundation to truly harness the power of technology.

- Common Global Implementation–Market Practice (CGI-MP) provides a forum for financial institutions and non-financial institutions to progress various corporate-to-bank implementation topics on the use of ISO 20022 messages, and other related activities, in the payments domain. ↩︎

Navigating intercompany financing in 2024


In January 2022, the OECD incorporated Chapter X into the latest edition of its Transfer Pricing Guidelines, a pivotal step in regulating financial transactions globally. This addition aimed to set a global standard for the transfer pricing of intercompany financial transactions, an area long scrutinized for its potential for profit shifting and tax avoidance. Since then, various jurisdictions have explicitly incorporated these principles and provided further guidance in this area. Notably, in the last year we saw new guidance in South Africa, Germany and the United Arab Emirates (UAE), while the Swiss and American tax authorities provided further clarification on this topic. In this article, we take you through the most important updates for the coming years.

Finding the right comparable

The arm’s length principle established in the OECD Transfer Pricing Guidelines stipulates that the price applied in any intra-group transaction should be the price that would have been agreed had the parties been independent.¹ This principle applies equally to financial transactions: every intra-group loan, guarantee and cash pool should be priced in a manner that would be reasonable for independent market participants. Chapter X of the OECD Guidelines provided, for the first time, detailed guidance on applying the arm’s length principle to financial transactions. Since its publication, achieving arm’s length pricing for financial transactions has become a significant regulatory challenge for many multinational corporations. At the same time, higher interest rates have encouraged tax authorities to pay closer attention to the topic, supported by the guidance in Chapter X.

To determine the arm’s length price of an intra-group financial transaction, the most common methodology is to search for comparable market transactions that share the characteristics of the internal transaction under analysis, for example in terms of credit risk, maturity, currency or the presence of embedded options. In the case of financial transactions, these comparable market transactions are often loans, traded bonds or publicly available deposit facilities. Using these comparable observations, the appropriate price of a transaction is estimated as compensation for the risk taken by either party. The risk-adjusted rate of return incorporates the impact of the borrower’s credit rating, any security present, the time to maturity of the transaction and any other features deemed relevant. This methodology has been explicitly incorporated in many publications, including the guidance from the South African Revenue Service (SARS)² and the Administrative Principles 2023 from the German Federal Ministry of Finance.³
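The comparables search can be sketched in a few lines of Python: filter market observations on the characteristics named above (here rating, currency and maturity), then summarise their spreads as a range. Using the interquartile range is an assumption of this sketch, a common convention rather than a requirement stated in the guidance cited; the `Comparable` fields, the two-year maturity tolerance and the four-observation minimum are likewise hypothetical.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class Comparable:
    rating: str
    currency: str
    maturity_years: float
    spread_bps: float  # credit spread over the relevant base rate


def arm_length_range(comparables, rating, currency, maturity_years, tolerance_years=2.0):
    """Interquartile range of spreads for comparables similar to the tested loan."""
    spreads = [
        c.spread_bps
        for c in comparables
        if c.rating == rating
        and c.currency == currency
        and abs(c.maturity_years - maturity_years) <= tolerance_years
    ]
    if len(spreads) < 4:
        raise ValueError("too few comparables for a meaningful range")
    # Quartile cut points: lower quartile, median, upper quartile.
    lower, median, upper = quantiles(spreads, n=4, method="inclusive")
    return lower, median, upper
```

The resulting spread range would then be added to the relevant base rate to arrive at an interest rate range for the intra-group loan.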

The recently published Corporate Tax Guide of the UAE also implements OECD Chapter X, but does not explicitly mention a preference for market instruments. Instead, the tax guide prefers the use of “comparable debt instruments” without offering examples of appropriate instruments. This nuance requires taxpayers to describe and defend their selection of instruments for each type of transaction. Although the regulation allows for comparability adjustments for differences in terms and conditions, the added complexity poses an additional challenge for many taxpayers.

Cash pooling structures are a special case of financial transaction for transfer pricing purposes. Due to the multilateral nature of cash pools, a single benchmark study might be insufficient. OECD Chapter X introduced the principle of synergy allocation in a cash pool, where the benefits of the pool are shared between its leader and its participants based on the functions performed and risks assumed. This synergy allocation approach is also found in the recent SARS guidance, but not in the German Administrative Principles. Instead, the German authorities suggest a cost-plus compensation for a cash pool leader with limited risks and functions. Surprisingly, the new German Administrative Principles do not describe approaches for complex cash pooling structures such as an in-house bank.
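To illustrate how a synergy allocation might work, the sketch below combines the two approaches mentioned above in one hypothetical scheme: the pool leader first receives a cost-plus fee, and the remaining synergy is shared among participants in proportion to the absolute size of their balances. Neither the allocation key nor the mark-up is taken from the OECD, SARS or German guidance; they are placeholders for whatever a functional analysis supports.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def allocate_synergy(total_synergy, leader_costs, markup, balances):
    """Split a cash pool's synergy between the leader and participants (hypothetical key).

    total_synergy: pool benefit versus stand-alone banking, in currency units.
    leader_costs, markup: leader remunerated cost-plus (assumed low-risk leader).
    balances: participant -> average balance (positive = deposit, negative = borrowing).
    """
    leader_fee = (leader_costs * (1 + markup)).quantize(CENT, rounding=ROUND_HALF_UP)
    residual = total_synergy - leader_fee
    if residual < 0:
        raise ValueError("leader fee exceeds total synergy")
    # Depositors and borrowers both contribute to the netting benefit, so weight by size.
    weights = {p: abs(b) for p, b in balances.items()}
    total_weight = sum(weights.values())
    shares = {
        p: (residual * w / total_weight).quantize(CENT, rounding=ROUND_HALF_UP)
        for p, w in weights.items()
    }
    return leader_fee, shares
```

Because each share is rounded to cents, the participant shares may differ from the residual by a few cents in general; a production version would need an explicit rule for that remainder.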


Moving towards new credit rating analyses

Before pricing an intra-group financial transaction, it is paramount to determine the credit risk attached to the transaction under analysis. This can be a challenging exercise, as the borrowing entity is rarely a stand-alone entity with public debt outstanding or a public credit rating. As a result, corporates typically rely on a top-down or bottom-up rating analysis to estimate the appropriate credit risk in a transaction. In a top-down analysis, the credit rating is largely based on the strength of the group: the subsidiary’s credit rating is derived by downgrading the group rating by one or two notches. In the alternative bottom-up analysis, the stand-alone creditworthiness of the borrower is first assessed from its financial statements. The stand-alone credit rating is then adjusted towards the group’s credit rating, based on the level of support that the subsidiary can derive from the group.
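The two rating approaches can be expressed as notch arithmetic on a rating scale. In this minimal sketch, the S&P-style scale, the one-notch default for the top-down approach and the cap at the group rating in the bottom-up approach are all assumptions for illustration; in practice, the number of support notches follows from the group support assessment described next.

```python
# Illustrative S&P-style long-term scale, best to worst (assumed for this sketch).
SCALE = ["AAA", "AA+", "AA", "AA-", "A+", "A", "A-", "BBB+", "BBB", "BBB-",
         "BB+", "BB", "BB-", "B+", "B", "B-", "CCC+", "CCC", "CCC-"]


def notch(rating, notches):
    """Move a rating by `notches` steps; positive = downgrade, clamped to the scale."""
    idx = SCALE.index(rating) + notches
    return SCALE[min(max(idx, 0), len(SCALE) - 1)]


def top_down(group_rating, notches_down=1):
    """Subsidiary rating derived by downgrading the group rating."""
    return notch(group_rating, notches_down)


def bottom_up(standalone_rating, group_rating, support_notches):
    """Uplift the stand-alone rating for group support, capped at the group rating."""
    uplifted = SCALE.index(standalone_rating) - support_notches
    return SCALE[max(uplifted, SCALE.index(group_rating))]
```

For a group rated A-, the top-down approach with two notches gives BBB, while a subsidiary with a stand-alone BB rating and three notches of implicit support also lands at BBB under the bottom-up approach; the two methods converge only when the support assessment happens to justify it.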

The group support assessment is an important consideration in the credit rating assessment of subsidiaries. Although explicit guarantees or formal support between an entity and the group are often absent, it should still be assessed whether the entity benefits from its association with the group, i.e. implicit group support. Authorities in the United States, Switzerland and Germany have provided more insight into their views on the role of implicit group support, all of them recognizing it as a significant factor that needs to be considered in the credit rating analysis. For instance, the American Internal Revenue Service emphasized the impact of passive association of an entity with the group in the memorandum issued in December 2023.⁴

The Swiss tax authorities also stressed the importance of implicit support for rating analyses in the Q&A released in February 2024.⁵ In this guidance, the authorities not only emphasized the importance of factoring in implicit group support, but also expressed a preference for the bottom-up approach. This contrasts with the top-down approach followed by many multinationals in the past, which are now encouraged to adopt a more comprehensive method aligned with the bottom-up approach.


Standardization for success

Although the standards set by the OECD have been explicitly adopted by numerous jurisdictions, the additional guidance further develops the requirements in complex transfer pricing areas. Navigating such a complex and demanding environment amid rising interest rates is a challenge for many multinational corporations. Perhaps the best advice is found in the German publication: its Administrative Principles stress that the transfer price should be determined before the transaction is completed, and express a preference for a standardized methodology. To get a head start, it is important to put in place an easy-to-execute process for intra-group financial transactions, with comprehensive transfer pricing documentation.

Despite the complexity of the topic, such a standardized method will always be easier to defend. One thing is certain: the details of transfer pricing studies for financial transactions, such as the analysis of ratings and the debt market, will remain on every transfer pricing and tax manager’s agenda for 2024.


- Chapter X, transfer pricing guidance on financial transactions, was published in February 2020 and incorporated in the 2022 edition of the OECD TP Guidelines. ↩︎

- Interpretation Note 127, issued on 17 January 2023 by the South African Revenue Service. ↩︎

- Administrative Principles on Transfer Pricing issued by the German Ministry of Finance, published on 6 June 2023. ↩︎

- Memorandum (AM 2023-008) issued on 19 December 2023 by the US Internal Revenue Service (IRS) Deputy Associate Chief Counsel on Effect of Group membership on Financial Transactions under Section 482 and Treas. Reg. § 1.482-2(a). ↩︎

- Practical Q&A Guidance published on 23 February 2024 by the Swiss Federal Tax Authorities. ↩︎

European Commission accepts NII SOT while EBA published its roadmap for IRRBB


The European Commission (EC) has approved the regulatory technical standards (RTS) that include the specification of the Net Interest Income (NII) Supervisory Outlier Test (SOT). The SOT limit for the decrease in NII is set at 5% of Tier 1 capital. Since the three-month scrutiny period has ended, the final RTS are expected to be published soon; they will enter into force 20 days after publication. Approval of the NII SOT took longer than expected, partly due to heavy pushback from the banking sector. The SOT, and the fact that some banks rely heavily on it for their internal limit framework, is also one of the key topics on the interest rate risk in the banking book (IRRBB) heatmap published by the European Banking Authority (EBA), which details its scrutiny plans for implementing IRRBB standards across the EU. In the short to medium term (2024 to mid-2025), the focus is on:

- The EBA has noted that some banks use the NII SOT as an internal limit without identifying other internal limits. The EBA will explore the development of complementary indicators useful for SREP purposes and supervisory stress testing.

- The different practices on behavioral modelling of NMDs reported by the institutions.

- The variety of hedging strategies that institutions have implemented.

- Contributing to the Dynamic Risk Management project of the International Accounting Standards Board (IASB), which will replace the macro hedge accounting standard.

For the medium to long term (beyond mid-2025), the EBA mentions it will monitor the five-year cap on NMDs and the CSRBB definition used by banks. The EBA makes no mention of the consultation started by the Basel Committee on Banking Supervision on the newly calibrated interest rate scenario methodology and levels. In the coming weeks, Zanders will publish a series of articles on the IASB’s Dynamic Risk Management project and its implications for banks. Please contact us if you have any questions on this topic or others, such as NMD modelling or the internal limit framework and risk appetite statements.
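The NII SOT described above reduces to a simple comparison once the scenario results are available. The sketch below assumes the NII projections under each prescribed interest rate shock have already been computed according to the RTS; it only checks whether the largest NII decrease exceeds 5% of Tier 1 capital. The function and scenario names are hypothetical.

```python
def nii_sot(base_nii, scenario_nii, tier1_capital, limit=0.05):
    """Return (worst NII decrease as a share of Tier 1 capital, breach flag).

    base_nii:      NII under the baseline scenario.
    scenario_nii:  scenario name -> projected NII under that shock (computed elsewhere).
    tier1_capital: Tier 1 capital in the same currency units.
    limit:         SOT threshold, 5% of Tier 1 capital per the approved RTS.
    """
    # A negative value means NII increases in every scenario.
    worst_decrease = max(base_nii - nii for nii in scenario_nii.values())
    ratio = worst_decrease / tier1_capital
    return ratio, ratio > limit
```

With a baseline NII of 500, a parallel-down result of 430 and Tier 1 capital of 1,000, the worst decrease is 7% of Tier 1 and the test flags an outlier; this is also why a bank using the SOT as its only internal limit sees just one number, which the EBA considers insufficient.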

The EBA publishes its roadmap for the implementation of Basel III and starts the first consultations


The European Banking Authority (EBA) published its roadmap on the Banking Package, which implements the final Basel III reforms in the European Union. This roadmap develops over four phases, and it is expected to be completed as follows:

- Phase 1: Covers 32 mandates in the areas of credit, market and operational risk, which predominantly result from the transition to Basel III. In addition, this first phase will also see the first mandates under the Capital Requirements Directive (CRD) in the area of ESG.

- Phase 2: This phase will further progress in covering Capital Requirements Regulation (CRR) mandates related to credit, operational and market risk. Furthermore, a considerable number of CRD mandates related to high EU standards in terms of governance and access to the single market with regard to third-country branches will be developed in this phase.

- Phase 3: This phase includes most of the remaining mandates related to regulatory products, as well as a number of reports that will consider further perspectives and initial monitoring of the implementation of the banking regulation.

- Phase 4: In this last phase, a number of products, mostly consisting of reports, will be developed, providing information on the implementation progress, results and challenges.

In addition, some mandates are ongoing and recurring; they are not part of any of the four phases but will be made operational at the date of implementation in 2025. As part of phase 1, the EBA has published multiple consultation papers, which form the first step in the implementation of the Banking Package. The three main consultation papers published are:

- A public consultation on two draft ITS amending Pillar 3 disclosure requirements and supervisory reporting requirements. The suggested amendments on the reporting obligations cover a wide range of topics such as the output floor, standardized and internal ratings-based models (IRB) for credit risk, the three new approaches for own funds requirements for CVA risk and the (simplified) standardized approach for market risk.

- A public consultation launched by the EBA on the Regulatory Technical Standards (RTS) determining the conditions for an instrument with residual risk to be classified as a hedge. This consultation, on the standardized approach under the FRTB framework, focuses on the residual risk add-on (RRAO). Introduced by the Capital Requirements Regulation (CRR3), the RRAO framework allows exemptions for instruments hedging residual risks. The proposed RTS outline criteria for identifying hedges, distinguishing between non-sensitivity-based method risk factors and other reasons for RRAO charges.

- A public consultation on two draft Implementing Technical Standards (ITS) amending Pillar 3 disclosures and supervisory reporting requirements for operational risk. These revisions align with the new Capital Requirements Regulation (CRR3) and aim to consolidate reporting and disclosure requirements for operational risk and broader CRR3 changes. These consultation papers should be read in conjunction with the consultation papers on the new framework for the business indicator for operational risk, published at the same time.
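For context on the RRAO consultation, the add-on itself is simple arithmetic under the Basel standardized approach: 1.0% of gross notional for instruments with an exotic underlying and 0.1% for instruments bearing other residual risks. The sketch below applies those weights; the `exempt_as_hedge` flag is a hypothetical stand-in for whatever hedge criteria the final RTS set out, which is precisely what the consultation is about.

```python
from dataclasses import dataclass
from decimal import Decimal

EXOTIC_RW = Decimal("0.01")   # 1.0% for instruments with an exotic underlying
OTHER_RW = Decimal("0.001")   # 0.1% for instruments bearing other residual risks


@dataclass
class Position:
    gross_notional: Decimal
    exotic_underlying: bool = False
    other_residual_risk: bool = False
    exempt_as_hedge: bool = False  # hypothetical flag for the RTS hedge criteria


def rrao(positions):
    """Residual risk add-on: risk-weighted sum of gross notionals of non-exempt positions."""
    total = Decimal("0")
    for p in positions:
        if p.exempt_as_hedge:
            continue  # qualifies as a hedge under the (assumed) RTS criteria
        if p.exotic_underlying:
            total += p.gross_notional * EXOTIC_RW
        elif p.other_residual_risk:
            total += p.gross_notional * OTHER_RW
    return total
```

Whether a position carries the hedge exemption can therefore move the capital charge directly, which explains why the criteria distinguishing genuine hedges from other RRAO-bearing instruments matter to banks.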