One of the main goals of the European Banking Authority (EBA) is to promote the convergence of supervisory practices across the European Union. To further this convergence, the EBA annually outlines key areas of supervisory attention in its European Supervisory Examination Programme (ESEP). On 19 October 2023, the EBA announced the primary focus points for 2024.

Liquidity and funding risk

While European banks generally have sufficient liquidity, there are potential challenges on the horizon. Recent events, including bank failures in the United States and the issues with Credit Suisse, have underscored the importance for banks to have a framework in place that allows a quick response to market volatility and changes in liquidity.

Several factors contribute to these challenges, such as the end of funding programs (i.e. quantitative easing and the TLTRO program), changes in market interest rates, and evolving depositor behavior. The EBA stresses that this is not just about meeting regulatory requirements: banks are urged to manage liquidity proactively, maintain reasonable liquidity buffers beyond regulatory mandates, diversify their funding sources, and adapt to changing market dynamics.

The EBA also stresses that the role of social media in relation to the financial markets should not be underestimated. Banks are encouraged to incorporate social media sentiment into their stress-testing frameworks and develop strategies to counter the impact of negative social media news on deposit withdrawals or market funding stability.

Supervisory authorities should assess institutions’ liquidity risk, funding profiles, and their readiness to deal with wholesale/retail counterparties and funding concentrations. They should also scrutinize banks’ internal liquidity adequacy assessment processes (ILAAP) and their ability to sell securities under different market conditions. Relevant to the increased scrutiny of liquidity and funding risk are the revised technical standards on supervisory reporting on liquidity, published in the summer of 2023 (EBA, June 2023).

Interest rate risk and hedging

The transition from an era of persistently low or even negative interest rates to a period of rising rates and persistent inflation is a major concern for banks in 2024. While the initial impact on net interest margins may be positive, banks face challenges in managing interest rate risk effectively.

Supervisors are tasked with assessing whether banks have suitable organizational frameworks for managing interest rate risk. This includes examining responsibilities at the management level and ensuring that senior management is implementing effective interest rate risk strategies. Moreover, supervisors should evaluate how changes in interest rates may impact an institution’s Net Interest Income (NII) and Economic Value of Equity (EVE). This involves examining assumptions about customers’ behavior, particularly in the context of deposit funding in the digital age.
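
To make the two measures concrete, the following is a minimal sketch, not a regulatory calculation. It assumes a flat discount rate, a parallel +200 bp shock, and a hypothetical `deposit_beta` parameter that captures the behavioural assumption of how much of a rate change is passed on to depositors. All figures and names are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class CashFlow:
    amount: float      # positive = asset inflow, negative = liability outflow
    time_years: float  # time until the cash flow falls due


def present_value(cash_flows, rate):
    """Discount all cash flows at one flat annual rate (a simplification)."""
    return sum(cf.amount / (1.0 + rate) ** cf.time_years for cf in cash_flows)


def delta_eve(cash_flows, base_rate, shock):
    """Change in economic value of equity under a parallel rate shock."""
    return present_value(cash_flows, base_rate + shock) - present_value(cash_flows, base_rate)


def delta_nii(rate_sensitive_assets, rate_sensitive_liabilities, shock, deposit_beta=1.0):
    """Approximate one-year change in net interest income.

    deposit_beta is the assumed share of the rate change passed on to
    depositors (a behavioural assumption, hypothetical here).
    """
    return (rate_sensitive_assets - rate_sensitive_liabilities * deposit_beta) * shock


# Hypothetical EVE example: a 5-year fixed-rate asset funded by 1-year deposits.
book = [CashFlow(110.0, 5.0), CashFlow(-100.0, 1.0)]
eve_change = delta_eve(book, base_rate=0.02, shock=0.02)

# Hypothetical NII example with separate figures: floating-rate assets of 80,
# repricing deposits of 100, and 40% pass-through to deposit rates.
nii_change = delta_nii(80.0, 100.0, shock=0.02, deposit_beta=0.4)
```

In this sketch the same rate rise lowers EVE, because the long-dated asset loses more value than the short-dated liability, while NII rises as long as deposit rates lag. A higher assumed `deposit_beta` reduces or reverses the NII gain, which is why the behavioural assumptions deserve scrutiny.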

Interest rate risk and liquidity and funding risk are closely linked, and supervisors are encouraged to consider these links in their assessments. The new guidelines on IRRBB and CSRBB (EBA, July 2023) emphasize this interconnectedness. They underscore the need for financial institutions and regulatory bodies alike to adopt a holistic approach, recognizing that addressing one risk may have cascading effects on others.

The EBA has announced a data collection scheme for the IRRBB data of financial institutions, highlighting the priority the EBA gives to IRRBB. The data collection exercise is based on the newly published implementing technical standards (ITS) for IRRBB and has a March 2024 deadline. The collection of the IRRBB data will only apply to those institutions that are already reporting IRRBB to the EBA in the context of the QIS exercise.

Recovery operationalization

Recent financial market events (such as the Credit Suisse and SVB cases) have underscored the importance of being prepared for swift and effective crisis responses. Recovery plans, which banks are required to have in place, must be kept up to date and must include credible options to restore financial soundness in a timely manner.

Supervisors play a vital role in assessing the adequacy and severity of scenarios in recovery plans. These scenarios must be sufficiently severe to trigger the full range of available recovery options, allowing institutions to demonstrate their capacity to restore business and financial viability in a crisis.

Moreover, the Overall Recovery Capacity (ORC) is a key outcome of recovery planning, providing an indication of the institution’s ability to restore its financial position following a significant downturn. It’s crucial for supervisors to review the adequacy and quality of the ORC, with a focus on liquidity recovery capacity.

To ensure the effectiveness of recovery plans, supervisors should also encourage banks to perform dry-run exercises and assess the suitability of communication arrangements, including faster communication tools like social media.

A strategic imperative

Beyond these key focus areas, the EBA also emphasizes the ongoing relevance of issues such as asset quality, cyber risk, and data security. These challenges remain important in the supervisory landscape, although they are not the main priorities in the coming year.

Thus, in light of the EBA’s regulatory priorities for 2024, it is imperative for all financial institutions across the European Union to proactively engage in ensuring the stability and resilience of the banking sector. From a liquidity perspective, it is vital to actively manage your financial institution’s liquidity and anticipate the ripples of market volatility. Moreover, incorporating social media sentiment into your stress-testing frameworks can add vital information. The ability to navigate funding challenges is not just a regulatory requirement; it’s a strategic imperative.

The shift to the current high interest rate environment warrants an assessment of a bank’s organizational readiness for this change. Make sure that your senior management is not only aware of the need for effective interest rate risk strategies but also adept at implementing them. Moreover, scrutinize the impact of changing interest rates on your NII and EVE.

How can Zanders support?

Zanders is a thought leader in the management and modeling of IRRBB. We enable financial institutions to meet their strategic risk goals while achieving regulatory compliance, by offering support from strategy to implementation. In light of the aforementioned regulatory priorities of the EBA, we can support and guide you through these changes in the world of IRRBB with agility and foresight.

Are you interested in IRRBB-related topics? Contact Jaap Karelse, Erik Vijlbrief (Netherlands, Belgium and Nordic countries) or Martijn Wycisk (DACH region) for more information.

EBA, July 2023. Guidelines on IRRBB and CSRBB.

EBA, June 2023. Implementing Technical Standards on supervisory reporting amendments with regards to COREP, asset encumbrance and G-SIIs.

EBA, September 2023. Work Program 2024.

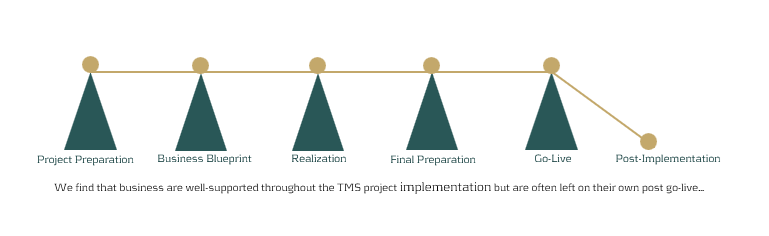

Businesses across the globe invest tens of thousands of dollars in implementing treasury management systems to achieve the accuracy, automation, speed, and reporting they require.

But what happens after implementation, when the project team has packed up and handed over the reins to the employees and support staff?

The first months after a system implementation can be some of the most challenging to a business and its people. Learning a new system is like learning any new skill – it requires time and effort to become familiar with the new ways of working, and to be completely comfortable again performing tasks. Previous processes, even if they were not the most efficient, were no doubt second nature to system users and many would have been experts in working their way through what needed to be done to get accurate results. New, improved processes can initially take longer as the user learns how to step through the unfamiliar system. This is a normal part of adopting a new landscape and can be expected. However, employee frustration is often high during this period, as more mental effort is required to perform day-to-day tasks and avoid errors. And when mistakes are made, it often takes more time to resolve them because the process for doing so is unfamiliar.

High-risk period for the company

With an SAP system, the complexity is often great, given the flexibility and available options that it offers. New users of SAP Treasury Management Software may take on average around 12 to 18 months to feel comfortable enough to perform their day-to-day operations with minimal errors. This can be a high-risk period for the company, both in terms of staff retention and in terms of the mistakes made. Staff morale can dip due to the changes, frustrations and steep learning curve, and errors can be difficult to work through and correct.

In-house support staff are often also still learning the new technology and are generally not able to provide the quick turnaround times required for efficient error management right from the start. When the issue is a critical one, the cost of a slow support cycle can be high, and business reputation may even be at stake.

While the benefits of a new implementation are absolutely worthwhile, businesses need to ensure that they do not underestimate the challenges that arise during the months after a system go-live.

Experts to reduce risks

What we have seen is that especially during the critical post-implementation period – and even long afterward – companies can benefit and reduce risks by having experts at their disposal to offer support, and even additional training. This provides a level of relief to staff as they know that they can reach out to someone who has the knowledge needed to move forward and help them resolve errors effectively.

Noticing these challenges regularly across our clients has led Zanders to set up a dedicated support desk. Our Treasury Technology Support (TTS) service can meet your needs and help reduce the risks faced. While we have a large number of highly skilled SAP professionals as part of the Zanders group, we are not just SAP experts. We have a wide pool of treasury experts with both functional and technical knowledge. This is important because it means we are able to offer support across your entire treasury system landscape. So whether it be your business's inbound services, the multitude of interfaces that you run, the SAP processes that take place, or the delivery of messages and payments to third parties and customers, the Zanders TTS team can help you. We don’t just offer vendor support; we are ready to support and resolve whatever the issue is, at any point in your treasury landscape.

As the leading independent treasury consultancy globally, we can fill the gaps where your company demands it and help to mitigate that key person risk. If you are experiencing these challenges or can see how these risks may impact your business that is already in the midst of a treasury system implementation, contact Warren Epstein for a chat about how we can work together to ensure the long-term success of your system investment.

In early 2023, SAP launched its Digital Currency Hub as a pilot to explore the future of cross-border transactions using crypto or digital currencies.

In this article, we explore this stablecoin payments trial and examine the advantages of digital currencies and how they could help tackle the hurdles of international transactions.

Cross-border payment challenges

While cross-border payments form an essential part of our globalized economy today, they have their own set of challenges. For example, cross-border payments often involve various intermediaries, such as banks and payment processors, which can result in significantly higher costs compared to domestic payments. The involvement of multiple parties and regulations can lead to longer processing times, often combined with a lack of transparency, making it difficult to track the progress of a transaction. This can lead to uncertainty and potential disputes, especially when dealing with unfamiliar payment systems or intermediaries. Last but not least, organizations must ensure they meet the different regulations and compliance requirements set by different countries, as failure to comply can result in penalties or delays in payment processing.

Advantages of digital currencies

Digital currencies have gained significant interest in recent years and are being rapidly adopted, both globally and nationally. The impact of digital currencies on treasury is no longer a question of ‘if’ but ‘when’, so it is important for treasurers to be prepared. While we address the latest developments, risks and opportunities in a separate article, we will now focus on the role digital currencies can play in cross-border transactions.

The notorious volatility of traditional cryptocurrencies, which makes them less practical in a business context, has mostly been addressed with the introduction of stablecoins and central bank digital currencies. These offer a relatively stable and safe alternative to fiat currencies and bring some significant benefits.

These digital currencies can eliminate the need for intermediaries such as banks for payment processing. By leveraging blockchain technology, they facilitate direct host-to-host transactions, with the benefits of lower transaction fees and near-instantaneous settlement across borders. Transactions are stored in a distributed ledger, which provides a transparent and immutable record and can be leveraged for real-time tracking and auditing of cross-border transactions. Users can have increased visibility into the status and progress of their transactions, reducing disputes and enhancing trust. At a more advanced level, compliance measures such as KYC, KYS or AML can be directly integrated to ensure regulatory compliance.

SAP Digital Currency Hub

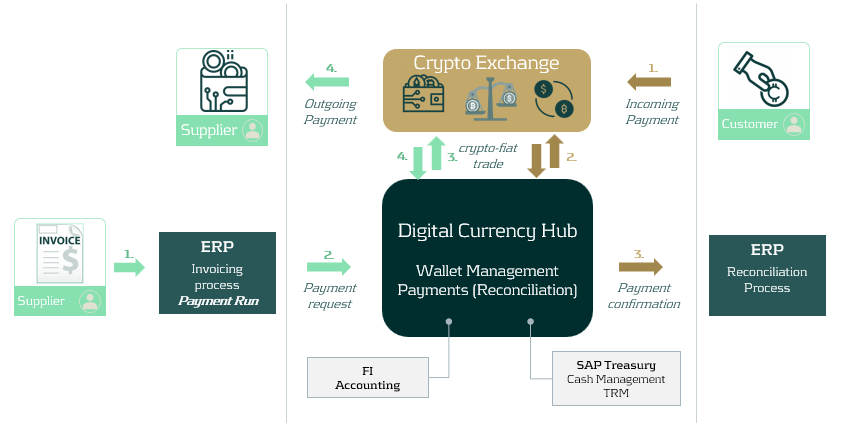

Earlier this year, SAP launched its Digital Currency Hub as a pilot to further explore the future of cross-border transactions using crypto or digital currencies. The Digital Currency Hub enables the integration of digital currencies to settle transactions with customers and suppliers. Below we provide a conceptual example of how this can work.

- Received invoices are recorded into the ERP and a payment run is executed.

- The payment request is sent to SAP Digital Currency Hub, which processes the payment and creates an outgoing payment instruction. The payment can also be entered directly in SAP Digital Currency Hub.

- The payment instruction is sent to a crypto exchange, instructing to transfer funds to the wallet of the supplier.

- The funds are received in the supplier’s wallet and the transaction is confirmed back to SAP Digital Currency Hub.

In a second example, we have a customer paying crypto to our wallet:

- The customer pays funds towards our preferred wallet address. Alternatively, a dedicated wallet per customer can be set up to facilitate reconciliation.

- Confirmation of the transaction is sent to SAP Digital Currency Hub. Alternatively, a request for payment can also be sent.

- A confirmation of the transaction is sent to the ERP where the open AR item is managed and reconciled. This can be in the form of a digital bank statement or via the use of an off-chain reference field.
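
The reconciliation step in the second example can be sketched as follows. This is a minimal illustration of the matching logic only, not the SAP Digital Currency Hub interface: the class names, fields, and the `reconcile` function are hypothetical. It assumes an incoming transfer is matched to an open AR item either by an off-chain reference or by a wallet address dedicated to one customer.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Optional


@dataclass
class OpenItem:
    invoice_id: str
    receiving_wallet: str  # wallet address dedicated to this customer (assumed)
    amount: Decimal
    cleared: bool = False


@dataclass
class IncomingTransfer:
    to_wallet: str
    amount: Decimal
    reference: Optional[str] = None  # off-chain reference, if the payer supplied one


def reconcile(transfer, open_items):
    """Clear and return the first open item matching the transfer, else None."""
    for item in open_items:
        if item.cleared or item.amount != transfer.amount:
            continue
        matches_reference = transfer.reference == item.invoice_id
        matches_wallet = transfer.to_wallet == item.receiving_wallet
        if matches_reference or matches_wallet:
            item.cleared = True
            return item
    return None


open_items = [
    OpenItem("INV-1", "wallet-A", Decimal("100.00")),
    OpenItem("INV-2", "wallet-B", Decimal("250.00")),
]
by_wallet = reconcile(IncomingTransfer("wallet-B", Decimal("250.00")), open_items)
by_reference = reconcile(
    IncomingTransfer("shared-wallet", Decimal("100.00"), reference="INV-1"), open_items
)
unmatched = reconcile(IncomingTransfer("wallet-X", Decimal("5.00")), open_items)
```

A dedicated wallet per customer makes the match possible even without a reference, which is the reconciliation benefit mentioned above. With a single shared wallet, the off-chain reference has to carry the link to the invoice.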

Management of the wallet(s) can be done via custodial services or self-management. There are a few security aspects to consider, on which we recently published an interesting article for those keen to learn more.

Although this is still on the roadmap, SAP Digital Currency Hub could be linked to the more traditional treasury modules such as Cash and Liquidity Management or Treasury and Risk Management. This would allow digital currency payments to be integrated into other treasury activities such as cash management, forecasting or financial risk management.

Conclusion

With the introduction of SAP Digital Currency Hub, there is a valid solution for addressing the current pain points in cross-border transactions. Although the product is still in a pilot phase and further integration with the rest of the ERP and treasury landscape needs to be built, its outlook is promising as it intends to make cross-border payments more streamlined and transparent.

In SAP Treasury, business partners represent counterparties with whom a corporation engages in treasury transactions, including banks, financial institutions, and internal subsidiaries.

Additionally, business partners are essential in SAP for recording information related to securities issues, such as shares and funds.

The SAP Treasury Business Partner (BP) serves as a fundamental treasury master data object, used for managing relationships with both external and internal counterparties across a variety of financial transactions, including FX, MM, derivatives, and securities. The BP master data encompasses crucial details such as names, addresses, contact information, bank details, country codes, credit ratings, settlement information, authorizations, withholding tax specifics, and more.

Treasury BPs are integral and mandatory components within other SAP Treasury objects, including financial instruments, cash management, in-house cash, and risk analysis. As a result, the proper design and accurate creation of BPs are pivotal to the successful implementation of SAP Treasury functionality. The creation of BPs represents a critical step in the project implementation plan.

Therefore, we aim to highlight key specifics for professionally designing BPs and maintaining them within the SAP Treasury system. The following section will outline the key focus areas where consultants need to align with business users to ensure the smooth and seamless creation and maintenance of BPs.

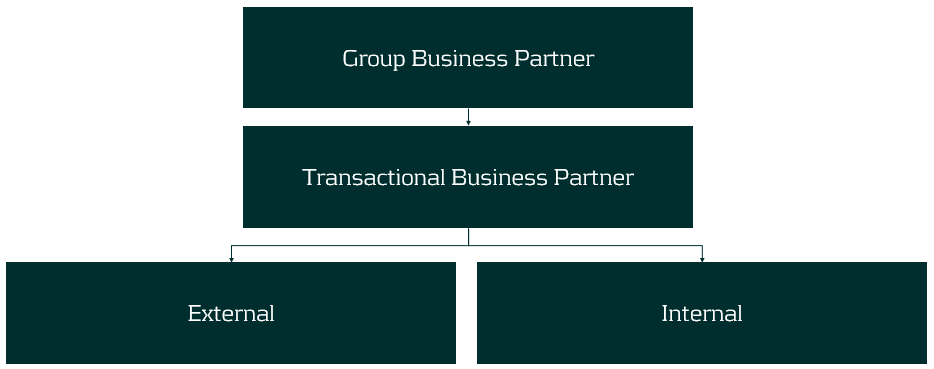

Structure of the BPs:

The structure of BPs may vary depending on a corporation's specific requirements. Below is the most common structure of treasury BPs:

Group BP – represents a parent company, such as the headquarters of a bank group or corporate entity. Typically, this level of BP is not directly involved in trading processes, meaning no deals are created with this BP. Instead, these BPs are used for: a. reflecting credit ratings, b. limiting utilization in the credit risk analyzer, c. reporting purposes, etc.

Transactional BP – represents a direct counterparty used for booking deals. Transactional BPs can be divided into two types:

- External BPs – represent banks, financial institutions, and security issuers.

- Internal BPs – represent subsidiaries of a company.

Naming convention of BPs

It is important to define a naming convention for the different types of BPs, and once defined, it is recommended to adhere to the blueprint design to maintain the integrity of the data in SAP.

Group BP ID: Should have a meaningful ID that most business users can understand. Ideally, the IDs should be of the same length. For example: ABN AMRO Group = ABNAMR or ABNGRP, Citibank Group = CITGRP or CITIBNK.

External BP ID: Should also have a meaningful ID, with the addition of the counterparty's location. For example: ABN AMRO Amsterdam – ABNAMS, Citibank London – CITLON, etc.

Internal BP ID: The main recommendation here is to align the BP ID with the company code number. For example, if the company code of the subsidiary is 1111, then its BP ID should be 1111. However, it is not always possible to follow this simple rule due to the complexity of the ERP and SAP Treasury landscape. Nonetheless, this simple rule can help both business and IT teams find straightforward solutions in SAP Treasury.

The length of the BP IDs should be consistent within each BP type.
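
A convention like this is easy to check automatically before BPs are loaded. The sketch below is a hypothetical helper, not an SAP function: it assumes the BP IDs are available as plain strings grouped by BP type, and it checks only the two rules stated above (consistent length within each type, and internal BP ID equal to the company code).

```python
def check_bp_id_lengths(bp_ids_by_type):
    """Return findings for BP types whose IDs differ in length (empty = OK)."""
    findings = []
    for bp_type, ids in bp_ids_by_type.items():
        lengths = sorted({len(bp_id) for bp_id in ids})
        if len(lengths) > 1:
            findings.append(f"{bp_type}: inconsistent ID lengths {lengths}")
    return findings


def check_internal_bp_ids(company_code_by_bp_id):
    """Return internal BP IDs that do not equal their company code."""
    return [
        bp_id
        for bp_id, company_code in company_code_by_bp_id.items()
        if bp_id != company_code
    ]


# Illustrative data only.
consistent = check_bp_id_lengths({
    "group": ["ABNGRP", "CITGRP"],
    "external": ["ABNAMS", "CITLON"],
    "internal": ["1111", "2222"],
})
inconsistent = check_bp_id_lengths({"group": ["ABNGRP", "CITIBNK"]})
mismatched = check_internal_bp_ids({"1111": "1111", "SUB02": "2222"})
```

Where the ERP landscape makes the company code rule impossible to follow, the second check could be replaced by a lookup against an agreed mapping table.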

Maintenance of Treasury BPs

1. BP Creation:

Business partners are created in SAP using the t-code BP. During the creation process, various details are entered to establish the master data record. This includes basic information such as name, address, contact details, as well as specific financial data such as bank account information, settlement instructions, WHT, authorizations, credit rating, tax residency country, etc.

Consider implementing an automated tool for creating Treasury BPs. We recommend leveraging tools such as the SAP Migration Cockpit or SAP scripting. At Zanders, we have a pre-developed solution to create complex Treasury BPs, which covers both SAP ECC and the most recent version of SAP S/4HANA.

2. BP Amendment:

Regular updates to BP master data are crucial to ensure accuracy. Changes in addresses, contact information, or payment details should be promptly recorded in SAP.

3. BP Release:

Treasury BPs must be validated before use. This validation is carried out in SAP through a release workflow procedure. We highly recommend activating such a release for the creation and amendment of BPs, and nominating a person to release a BP who is not authorized to create/amend a BP.

BP amendments are often carried out by the Back Office or Master Data team, while BP release is handled by a Middle Office officer.
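
The segregation of duties behind the release step can be illustrated with a short sketch. It does not represent the SAP release workflow itself: the `BusinessPartner` class, the `release_bp` function, and the user names are hypothetical, and the only rules modelled are that the releaser must be authorized and must differ from the person who created or last amended the BP.

```python
from dataclasses import dataclass
from typing import Optional


class ReleaseError(Exception):
    """Raised when a release would violate the four-eyes principle."""


@dataclass
class BusinessPartner:
    bp_id: str
    changed_by: str                    # user who created or last amended the BP
    released_by: Optional[str] = None  # None means not yet released

    @property
    def released(self):
        return self.released_by is not None


def release_bp(bp, releaser, authorized_releasers):
    """Release a BP if the releaser is authorized and did not change it."""
    if releaser not in authorized_releasers:
        raise ReleaseError(f"{releaser} is not authorized to release BPs")
    if releaser == bp.changed_by:
        raise ReleaseError("creator/amender may not release their own change")
    bp.released_by = releaser
    return bp


bp = BusinessPartner(bp_id="CITLON", changed_by="backoffice_user")
release_bp(bp, "middleoffice_user", authorized_releasers={"middleoffice_user"})
```

Any later amendment would reset `released_by` in a fuller model, so that each change passes through the release step again.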

4. BP Hierarchies:

Business partners can be linked through relationships, such as the link between a Group BP and its Transactional BPs described above. The system allows these relationships to be maintained, ensuring that accurate links are established between the various entities involved in financial transactions.

5. Alignment:

During the Treasury BP design phase, it is important to consider that BPs will be used by other teams in the form of Vendors, Customers, or Employees. SAP AP/AR/HR teams may apply different conditions to a BP, which can have an impact on Treasury functions. For instance, the HR team may require bank details of employees to be hidden, and this requirement should be reflected in the Treasury BP roles. Additionally, clearing Treasury identification types or making AP/AR reconciliation GL accounts mandatory for Treasury roles could also be necessary.

Transparent and effective communication, as well as clear data ownership, are essential in defining the design of the BPs.

Conclusion

The design and implementation of BPs require expertise and close alignment with treasury business users to meet all requirements and consider other SAP streams.

At Zanders, we have a strong team of experienced SAP consultants who can assist you in designing BP master data and in developing tools to create and amend BPs that meet strict treasury segregation of duties and the client's IT rules and procedures.

As the SWIFT MT-MX migration gains momentum, we are now starting to see more questions from the corporate community around the potential impact of this industry migration.

But the adoption of ISO 20022 XML messaging goes beyond SWIFT’s adoption in the interbank financial messaging space – SWIFT are currently estimating that by 2025, 80% of the RTGS (real time gross settlement) volumes will be ISO 20022 based with all reserve currencies either live or having declared a live date. What this means is that ISO 20022 XML is becoming the global language of payments. In this fourth article in the ISO 20022 series, Zanders experts Eliane Eysackers and Mark Sutton provide some valuable insights around what the version 9 payment message offers the corporate community in terms of richer functionality.

A quick recap on the ISO maintenance process

So, XML version 9. What we are referencing is the pain.001.001.09 customer credit transfer initiation message from the ISO 2019 annual maintenance release. At this point, some readers will be thinking that they currently use XML version 3 and that we are now talking about XML version 9. The logical question is whether version 9 is the latest message. It is not: we expect version 12 to be released in 2024. So whilst ISO has an annual maintenance release process, the financial industry and all the associated key stakeholders will be aligning on the XML version 9 message from the ISO 2019 maintenance release. This version is expected to replace XML version 3 as the de facto standard in the corporate-to-bank financial messaging space.

What new functionality is available with the version 9 payment message?

Comparing the current XML version 3 with the latest XML version 9 industry standard, there are a number of new tags/features which make the message design more relevant to the current digital transformation of the payments ecosystem. We look at the main changes below:

- Proxy: A new field has been introduced to support a proxy, or tokenisation as it's sometimes called. The relevance of this field is primarily linked to the new faster payment rails and open banking models, where consumers want to provide a mobile phone number or email address to mask the real bank account details and facilitate the payment transfer. Proxies are becoming more widely used across Asia, with India's Unified Payments Interface (UPI) instant payment scheme being the first clearing system to adopt this logic. With the rise of instant clearing systems across the world, we are starting to see a much greater use of proxies, with countries like Australia (NPP), Indonesia (BI-FAST), Malaysia (DuitNow), Singapore (FAST) and Thailand (PromptPay) all adopting this feature.

- The Legal Entity Identifier (LEI): This is a 20-character, alpha-numeric code developed by the ISO. It connects to key reference information that enables clear and unique identification of legal entities participating in financial transactions. Each LEI contains information about an entity’s ownership structure and thus answers the questions of ‘who is who’ and ‘who owns whom’. Simply put, the publicly available LEI data pool can be regarded as a global directory, which greatly enhances transparency in the global marketplace. The first country to require the LEI as part of the payment data is India, but the expectation is that more local clearing systems will require this identifier from a compliance perspective.

- Unique End-to-end Transaction Reference (commonly known as a UETR): This is a 36-character string, formatted as a universally unique identifier (UUID), featured in all payment instruction messages carried over the SWIFT network. UETRs are designed to act as a single source of truth for a payment and provide complete transparency for all parties in a payment chain, as well as enable functionality from SWIFT gpi (global payments innovation) [1], such as the payment Tracker.

- Gender neutral term: This new field has been added as a name prefix.

- Requested Execution Date: The requested execution date now includes a date and time option to provide some additional flexibility.

- Structured Address Block: The structured address block has been updated to include the Building Name.
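
Two of the identifiers above have a well-defined format that can be checked or generated in a few lines. The sketch below is illustrative and uses only the Python standard library: it validates the two LEI check digits using the ISO 7064 MOD 97-10 scheme on which the LEI standard relies, and generates a UETR as a version 4 UUID. The 18-character LEI base in the example is made up for demonstration and does not identify a real entity.

```python
import uuid


def _to_digits(text):
    """Map 0-9 to themselves and A-Z to 10-35, as used by MOD 97-10."""
    return "".join(str(int(ch, 36)) for ch in text.upper())


def lei_check_digits(base18):
    """Compute the two check digits for an 18-character LEI base."""
    remainder = int(_to_digits(base18 + "00")) % 97
    return f"{98 - remainder:02d}"


def is_valid_lei(lei):
    """Check length, character set, and the MOD 97-10 checksum of an LEI."""
    if len(lei) != 20 or not (lei.isascii() and lei.isalnum()):
        return False
    return int(_to_digits(lei)) % 97 == 1


def new_uetr():
    """Generate a UETR: a version 4 UUID rendered as a 36-character string."""
    return str(uuid.uuid4())


# Made-up LEI base, completed with computed check digits.
base = "5493001234567890AB"
example_lei = base + lei_check_digits(base)
# Corrupt the final check digit to show that the checksum catches it.
tampered_lei = example_lei[:-1] + str((int(example_lei[-1]) + 1) % 10)
example_uetr = new_uetr()
```

A format check of this kind only confirms that an LEI is well-formed. Whether it belongs to the intended legal entity still has to be verified against the public LEI data pool.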

In Summary

Whilst there is no requirement for the corporate community to migrate onto the XML version 9 message, corporate treasury should now have the SWIFT ISO 20022 XML migration on their own radar in addition to understanding the broader global market infrastructure adoption of ISO 20022. This will ensure corporate treasury can make timely and informed decisions around any future migration plan.

Notes:

1. SWIFT gpi is a set of standards and rules that enable banks to offer faster, more transparent, and more reliable cross-border payments to their customers.

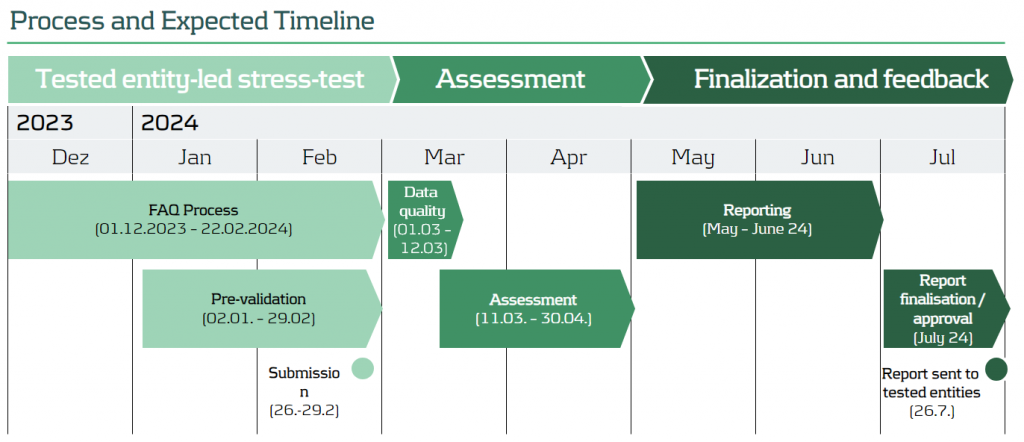

The European Central Bank (ECB) is charting new territory in the realm of financial security with a groundbreaking thematic stress test slated for 2024.

In the stress test methodology, participating banks are required to evaluate the impact of a cyber attack. They must communicate their response and recovery efforts by completing a questionnaire and submitting pertinent documentation. Banks undergoing enhanced assessment are further mandated to conduct and report the results of IT recovery tests specific to the scenario. The reporting of the cyber incident is to be done using the template outlined in the SSM Cyber-incident reporting framework.

Assessing Digital Fortitude: Scope and Objectives

The ECB's decision to conduct a thematic stress test on cyber resilience in 2024 holds profound significance. The primary objective is to assess the digital operational resilience of 109 Significant Institutions, contemplating the impact of a severe but plausible cybersecurity event. This initiative seeks to uncover potential weaknesses within the systems and derive strategic remediation actions. Notably, 28 banks will undergo an enhanced assessment, heightening the scrutiny on their cyber resilience capabilities. The outcomes are poised to reverberate across the financial landscape, influencing the 2024 SREP OpRisk Score and shaping qualitative requirements.

General Overview and Scope

- The Supervisory Board of the ECB has decided to conduct a thematic stress test on "cyber resilience" in 2024.

- Main objective is to assess the digital operational resilience in case of a severe but plausible cybersecurity event, to identify potential weaknesses and derive remediation actions.

- Participants will be 109 Significant Institutions (28 banks will be in scope of an enhanced assessment).

- The outcome will have an impact on the 2024 SREP OpRisk Score and qualitative requirements.

Navigating the Evaluation: Stress Test Methodology

Participating banks find themselves at the epicenter of this evaluative process. They are tasked with assessing the impact of a simulated cyber attack and meticulously reporting their response and recovery efforts. This involves answering a comprehensive questionnaire and providing relevant documentation as evidence. For those under enhanced assessment, an additional layer of complexity is introduced – the execution and reporting of IT recovery tests tailored to the specific scenario. The cyber incident reporting follows a structured template outlined in the SSM Cyber-incident reporting framework.

Stress Test Methodology

- Participating banks have to assess the impact of the cyber-attack and report their response and recovery by answering the questionnaire and providing relevant documentation as evidence.

- Banks under the enhanced assessment are additionally requested to execute and provide results of IT recovery tests tailored to the specific scenario.

- The cyber incident has to be reported by using the template of the SSM Cyber-incident reporting framework.

Setting the Stage: Scenario Unveiled

The stress test unfolds with a meticulously crafted hypothetical scenario. Envision a landscape where all preventive measures against a cyber attack have either been bypassed or failed. The core of this simulation involves a cyber-attack causing a loss of integrity in the databases supporting a bank's main core banking system. Banks must validate their selection of the affected core banking system with the Joint Supervisory Team (JST). The final scenario details will be communicated on 2 January 2024, adding a real-time element to this strategic evaluation.

Scenario

- The stress test will consist of a hypothetical scenario that assumes that all preventive measures have been bypassed or have failed.

- The cyber-attack will cause a loss of integrity of the database(s) that support the bank’s main core banking system.

- The banks have to validate the selection of the affected core banking system with the JST.

- The final scenario will be communicated on 2 January 2024.

Partnering for Success: Zanders' Service Offering

In the complex terrain of the Cyber Resilience Stress Test, Zanders stands as a reliable partner. Armed with deep knowledge in Non-Financial Risk, we navigate the intricacies of the upcoming stress test seamlessly. Our support spans the entire exercise, from administrative aspects to performing assessments that determine the impact of the cyber attack on key financial ratios as requested by supervisory authorities. This service offering underscores our commitment to fortifying financial institutions against evolving cyber threats.

Zanders Service Offering

- Our deep knowledge in Non-Financial Risk enables us to navigate smoothly through the complexity of the upcoming Cyber Resilience Stress Test.

- We support participating banks during the whole exercise of the upcoming Stress Test.

- Our services cover the full range of required activities, from administrative aspects to performing assessments that determine the impact of the cyber-attack on the key financial ratios requested by the supervisory authority.

The Global Risks Report 2023 by the World Economic Forum investigates the potential hazards for humanity in the next decade.

In this report, biodiversity loss ranks as the fourth most pressing concern after climate change adaptation, mitigation failure, and natural disasters. For financial institutions (FIs), it is therefore a relevant risk that should be taken into account. So, how should FIs implement biodiversity risk in their risk management framework?

Despite an increasing awareness of the importance of biodiversity, human activities continue to significantly alter the ecosystems we depend on. The present rate of species extinction is 10 to 100 times higher than the average observed over the past 10 million years, according to the Partnership for Biodiversity Accounting Financials[i]. The Intergovernmental Science-Policy Platform on Biodiversity and Ecosystem Services (IPBES) reports that 75% of ecosystems have been modified by human actions, with 20% of terrestrial biomass lost, 25% under threat, and a projection of 1 million species facing extinction unless immediate action is taken. Resilience theory and the planetary boundaries framework state that once a certain critical threshold is surpassed, the rate of change enters an exponential trajectory, leading to irreversible changes. As noted in a report by De Nederlandsche Bank (DNB), we are already close to that threshold[ii].

We will now explain biodiversity as a concept, why it is a significant risk for financial institutions (FIs), and how to start thinking about implementing biodiversity risk in a financial institution’s risk management framework.

What is biodiversity?

The Convention on Biological Diversity (CBD) defines biodiversity as “the variability among living organisms from all sources including, i.a., terrestrial, marine and other aquatic ecosystems and the ecological complexes of which they are part.”[iii] Humans rely on ecosystems directly and indirectly as they provide us with resources, protection and services such as cleaning our air and water.

Biodiversity both affects and is affected by climate change. For example, ecosystems such as tropical forests and peatlands consist of a diverse wildlife and act as carbon sinks that reduce the pace of climate change. At the same time, ecosystems are threatened by the accelerating change caused by human-induced global warming. The IPBES and Intergovernmental Panel on Climate Change (IPCC), in their first-ever collaboration, state that “biodiversity loss and climate change are both driven by human economic activities and mutually reinforce each other. Neither will be successfully resolved unless both are tackled together.”[iv]

Why is it relevant for financial institutions?

While financial institutions’ own operations do not materially impact biodiversity, they do have an impact on biodiversity through their financing. ASN Bank, for instance, calculated that the net biodiversity impact of its financed exposure is equivalent to around 516 square kilometres of lost biodiversity, which is roughly equal to the size of the island of Ibiza in Spain[v]. The FIs’ impact on biodiversity also leads to opportunities. The Institute Financing Nature (IFN) report estimates that the financing gap for biodiversity is close to $700 billion annually[vi]. This emphasizes the importance of directing substantial financial resources towards biodiversity-positive initiatives.

At the same time, biodiversity loss also poses risks to financial institutions.

The global economy depends heavily on biodiversity, and the increased globalization and interconnectedness of the financial system magnify and exacerbate the effects of biodiversity losses, which can result in significant financial losses. For example, approximately USD 44 trillion of global GDP is highly or moderately dependent on nature (World Economic Forum, 2020). Specifically for financial institutions, the DNB estimated that Dutch FIs alone have EUR 510 billion of exposure to companies that are highly or very highly dependent on one or more ecosystem services[vii]. Furthermore, the 2010 World Economic Forum report estimates worldwide economic damage from biodiversity loss at around USD 2 to 4.5 trillion annually. This is remarkably high when compared to the global financial damage of USD 1.7 trillion per year from greenhouse gas emissions (based on 2008 data), which demonstrates that institutions should not focus their attention solely on the effects of climate change when assessing climate & environmental risks[viii].

Examples of financial impact

Similarly to climate risk, biodiversity risk is expected to materialize through the traditional risk types a financial institution faces. To illustrate how biodiversity loss can affect individual financial institutions, we provide an example of the potential impact of physical biodiversity risk on, respectively, the credit risk and market risk of an institution:

Credit risk:

Failing ecosystem services can lead to disruptions of production, reducing the profits of counterparties. As a result, there is an increase in credit risk of these counterparties. For example, these disruptions can materialize in the following ways:

- A total of 75% of global food crops rely on animals for their pollination. For the agricultural sector, deterioration or loss of pollinating species may result in significant crop yield reduction.

- Marine ecosystems are a natural defence against natural hazards. Wetlands prevented USD 650 million worth of damages during Superstorm Sandy in 2012 (OECD, 2019), while the material damage of Hurricane Katrina would have been USD 150 billion less if the wetlands had not been lost.

Market risk:

The market value of investments of a financial institution can suffer from the interconnectedness of the global economy and concentration of production when a climate event happens. For example:

- A 2011 flood in Thailand impacted an area where most of the world's hard drives are manufactured. This led to a 20%-40% rise in global prices of the product[ix]. The importance of local ecosystems for these types of products exposes the dependency of investors as well as society as a whole.

Core part of the European Green Deal

The examples above are physical biodiversity risk examples. In addition to physical risk, biodiversity loss can also lead to transition risk – changes in the regulatory environment could imply less viable business models and an increase in costs, which will potentially affect the profitability and risk profile of financial institutions. While physical risk can be argued to materialize in a more distant future, transition risk is a more pressing concern as new measures have been released, for example by the European Commission, to transition to more sustainable and biodiversity friendly practices. These measures are included in the EU biodiversity strategy for 2030 and the EU’s Nature restoration law.

The EU’s biodiversity strategy for 2030 is a core part of the European Green Deal. It is a comprehensive, ambitious, and long-term plan that focuses on protecting valuable or vulnerable ecosystems, restoring damaged ecosystems, financing transformation projects, and introducing accountability for nature-damaging activities. The strategy aims to put Europe's biodiversity on a path to recovery by 2030, and contains specific actions and commitments. The EU biodiversity strategy covers various aspects such as:

- Legal protection of an additional 4% of land area and 19% of sea area (up to a total of 30% for both)

- Strict protection of 9% of sea and 7% of land area (up to a total of 10% for both)

- Reduction of fertilizer use by at least 20%

- Setting measures for sustainable harvesting of marine resources

A major step towards enforcement of the strategy is the approval of the Nature restoration law by the EU in July 2023, which will become the first continent-wide comprehensive law on biodiversity and ecosystems. The law is likely to impact the agricultural sector, as the bill allows for 30% of all former peatlands that are currently exploited for agriculture to be restored or partially shifted to other uses by 2030. By 2050, this should be at least 70%. These regulatory actions are expected to have a positive impact on biodiversity in the EU. However, a swift implementation may increase transition risk for companies that are affected by the regulation.

The ECB Guide on climate-related and environmental risks explicitly states that biodiversity loss is one of the risk drivers for financial institutions[x]. Furthermore, the ECB Guide requires financial institutions to assess both physical and transition risks stemming from biodiversity loss. In addition, the EBA Report on the Management and Supervision of ESG Risk for Credit Institutions and Investment Firms repeatedly refers to biodiversity when discussing physical and transition risks[xi].

Moreover, the topic ‘biodiversity and ecosystems’ is also covered by the Corporate Sustainability Reporting Directive (CSRD), which requires companies within its scope to disclose on several sustainability related matters using a double materiality perspective.[1] Biodiversity and ecosystems is one of five environmental sustainability matters covered by CSRD. At a minimum, financial institutions in scope of CSRD must perform a materiality assessment of impacts, risks and opportunities stemming from biodiversity and ecosystems. Furthermore, when biodiversity is assessed to be material, either from financial or impact materiality perspective, the institution is subject to granular biodiversity-related disclosure requirements covering, among others, topics such as business strategy, policies, actions, targets, and metrics.

Where to start?

In line with regulatory requirements, financial institutions should already be integrating biodiversity into their risk management practices. Zanders recognizes the challenges associated with biodiversity-related risk management, such as data availability and multidimensionality. Therefore, Zanders suggests initiating this process with the following two steps. The complexity of the methodologies can increase over time as the institution’s, the regulator’s and the market’s knowledge of biodiversity-related risks matures.

- Perform materiality assessment using the double materiality concept. This means that financial institutions should measure and analyze biodiversity-related financial materiality through the identification of risks and opportunities. Institutions should also assess their impacts on biodiversity, for example, through calculation of their biodiversity footprint. This can start with classifying exposures’ impact and dependency on biodiversity based on a sector-level analysis.

- Integrate biodiversity-related risk considerations into their business strategy and risk management frameworks. From a business perspective, if material, financial institutions are expected to integrate biodiversity in their business strategy, and set policies and targets to manage the risks. Such actions could be engagement with clients to promote their sustainability practices, allocation of financing to ‘biodiversity-friendly’ projects, and/or development of biodiversity-specific products. Moreover, institutions are expected to adjust their risk appetites to account for biodiversity-related risks and opportunities, and to establish KRIs along with limits and thresholds. Embedding material ESG risks in the risk appetite frameworks should include a description of how risk indicators and limits are allocated within the banking group, business lines and branches.
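To illustrate the sector-level classification mentioned in the first step, the sketch below flags exposures in sectors with a high dependency or a high impact on biodiversity. It is a minimal illustration only: the sector scores, the threshold, the portfolio and all function names are hypothetical placeholders, not values from any published methodology or data provider.

```python
# Hypothetical sector-level scores on a 1 (very low) to 5 (very high) scale.
# In practice these would come from a dedicated data source or expert judgment.
SECTOR_SCORES = {
    "agriculture": {"dependency": 5, "impact": 5},
    "mining": {"dependency": 3, "impact": 5},
    "real_estate": {"dependency": 2, "impact": 3},
    "software": {"dependency": 1, "impact": 1},
}

HIGH_THRESHOLD = 4  # assumed cut-off for a "high" score


def screen_portfolio(exposures):
    """Aggregate exposure (EUR m) flagged as high dependency and/or high impact."""
    totals = {"total": 0.0, "high_dependency": 0.0, "high_impact": 0.0}
    for sector, amount in exposures:
        scores = SECTOR_SCORES[sector]
        totals["total"] += amount
        if scores["dependency"] >= HIGH_THRESHOLD:
            totals["high_dependency"] += amount  # financial materiality lens
        if scores["impact"] >= HIGH_THRESHOLD:
            totals["high_impact"] += amount  # impact materiality lens
    return totals


# Hypothetical loan book: (sector, exposure in EUR million)
portfolio = [
    ("agriculture", 120.0),
    ("mining", 80.0),
    ("real_estate", 300.0),
    ("software", 50.0),
]

result = screen_portfolio(portfolio)
dependency_share = result["high_dependency"] / result["total"]
impact_share = result["high_impact"] / result["total"]
print(f"High-dependency share of exposure: {dependency_share:.1%}")
print(f"High-impact share of exposure:     {impact_share:.1%}")
```

Keeping the dependency and impact flags separate mirrors the two lenses of double materiality. A more mature approach would replace the sector averages with counterparty-level or location-level data.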

Considering the potential impact of biodiversity loss on financial institutions, it is crucial for them to extend their focus beyond climate change and also start assessing and managing biodiversity risks. Zanders can support financial institutions in measuring biodiversity-related risks and taking first steps in integrating these risks into risk frameworks. Curious to hear more on this? Please reach out to Marije Wiersma, Iryna Fedenko, or Jaap Gerrits.

[1] CSRD applies to large EU companies, including banks and insurance firms. The first companies subject to CSRD must disclose according to the requirements in the European Sustainability Reporting Standards (ESRS) from 2025 (over financial year 2024), and by the reporting year 2029, the majority of European companies will be subject to publishing the CSRD reports. The sustainability report should be a publicly available statement with information on the sustainability-matters that the company considers material. This statement needs to be audited with limited assurance.

[i] PBAF. (2023). Dependencies - Partnership for Biodiversity Accounting Financials (PBAF)

[ii] De Nederlandsche Bank. (2020). Indebted to nature - Exploring biodiversity risks for the Dutch Financial Sector.

[iii] CBD. (2005). Handbook of the convention on biological diversity

[iv] IPBES. (2021). Tackling Biodiversity & Climate Crises Together & Their Combined Social Impacts

[v] ASN Bank (2022). ASN Bank Biodiversity Footprint

[vi] Paulson Institute. (2021). Financing nature: Closing the Global Biodiversity

[vii] De Nederlandsche Bank. (2020). Indebted to nature - Exploring biodiversity risks for the Dutch Financial Sector

[viii] PwC for World Economic Forum. (2010). Biodiversity and business risk

[ix] All the examples related to credit and market risk are presented in the report by De Nederlandsche Bank. (2020). Biodiversity Opportunities and Risks for the Financial Sector

[x] ECB. (2020). Guide on climate-related and environmental risks.

[xi] EBA. (2021). EBA Report on Management and Supervision of ESG Risk for Credit Institutions and Investment Firms

Current carbon offset processes are opaque and rely on centralized players; blockchain technology can provide improvements by assuring transparency and decentralization.

Carbon offset processes are currently dominated by private actors providing legitimacy for the market. The two largest of these, Verra and Gold Standard, provide auditing services, carbon registries and a marketplace to sell carbon offsets, making them ubiquitous in the whole process. Due to this opacity and centralisation, the business models of the existing companies have been criticised regarding their validity and their actual benefit for climate action. By buying an offset in the traditional manner, the buyer must place trust in these players and their business models. Alternative solutions that would enhance the transparency of the process as well as provide decentralised marketplaces are thus called for.

The conventional process

Carbon offsets are certificates or credits that represent a reduction or removal of greenhouse gas emissions from the atmosphere. Offset markets work by having companies and organizations voluntarily pay for carbon offsetting projects. Reasons for partaking in voluntary carbon markets vary from increased awareness of corporate responsibility to a belief that emissions legislation is inevitable, and it is thus better to partake earlier.

Some industries also face prohibitively expensive barriers to lowering their emissions, or simply can’t reduce them because of the nature of their business. These industries can instead benefit from carbon offsets, as they manage to lower overall carbon emissions while still staying in business. Environmental organisations run climate-friendly projects and offer certificate-based investments for companies or individuals, who can thereby reduce their own carbon footprint. By purchasing such certificates, they invest in these projects and their actual or future reduction of emissions. However, on a global scale, it is not enough to simply lower our carbon footprint to negate the effects of climate change. Emissions would in practice have to be negative for even a target of 1.5 degrees Celsius of warming to be met. Carbon credits can help here as well, as they offer a way of removing carbon from the atmosphere. In the current process, companies looking to take part in the offsetting market will at some point run into the aforementioned behemoths and therefore an opaque form of purchasing carbon offsets.

The blockchain approach

A blockchain is a secure and decentralised database or ledger which is shared among the nodes of a computer network. Therefore, this technology can offer a valid contribution to addressing the opacity and centralisation of the traditional procedure. The intention of the first blockchain approaches was the distribution of digital information in a shared ledger that is agreed on jointly and updated in a transparent manner. The information is recorded in blocks and added to the chain irreversibly, thus preventing the alteration, deletion and irregular inclusion of data.

In recent years, the tokenization of (physical) assets, that is, the creation of a digital version that is stored on the blockchain, has gained more interest. By utilizing blockchain technology, asset ownership can be tokenized, which enables fractional ownership, reduces intermediaries, and provides a secure and transparent ledger. This not only increases liquidity but also expands access to previously illiquid assets (like carbon offsets). The blockchain ledger allows for real-time settlement of transactions, increasing efficiency and reducing the risk of fraud. Additionally, tokens can be programmed to include certain rules and restrictions, such as limiting the number of tokens that can be issued or specifying how they can be traded, which can provide greater transparency and control over the asset.

Blockchain-based carbon offset process

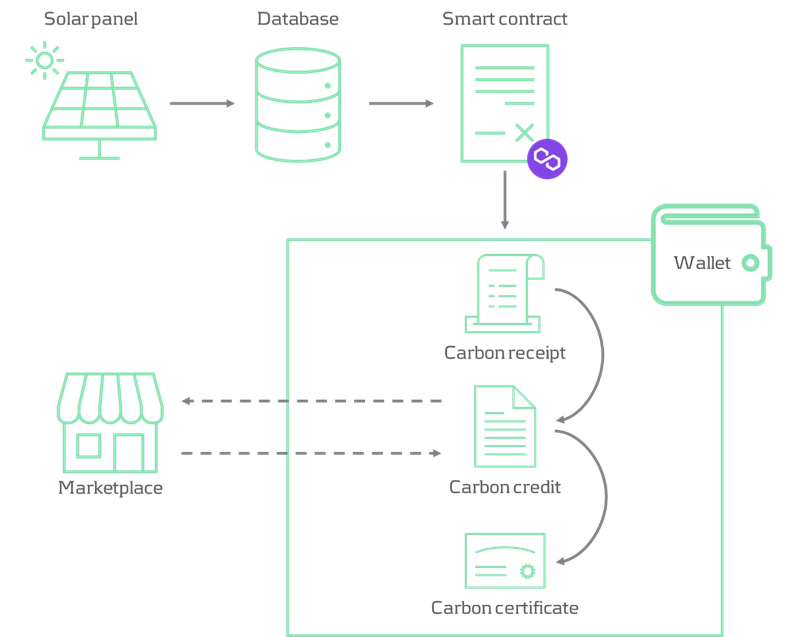

The tokenisation process for carbon credits begins with the identification of a project that either captures or helps to avoid carbon creation. In this example, the focus is on carbon avoidance through solar panels. The generation of solar electricity is considered an offset, as alternative energy use would emit carbon dioxide, whereas solar power does not.

The solar panels provide information regarding their electricity generation, from which a figure is derived that represents the amount of carbon avoided and is fed into a smart contract. A smart contract is a self-executing application that exists on the blockchain and performs actions based on its underlying code. In the blockchain-based carbon offset process, smart contracts convert the different tokens and send them to the owner’s wallet. The tokens used within the process are compliant with the ERC-721 Non-Fungible Token (NFT) standard, which represents a unique token that is distinguishable from others and cannot be exchanged for other units of the same asset. A practical example is a work of art that, even if replicated, is always slightly different.

In the first stage of the process, the owner claims a carbon receipt, based on the amount of carbon avoided by the solar panel. Thereby the aggregated amount of carbon avoided (also stored in a database just for replication purposes) is sent to the smart contract, which issues a carbon receipt of the corresponding figure to the owner. Carbon receipts can further be exchanged for a uniform amount of carbon credits (e.g. 5 kg, 10 kg, 15 kg) by interacting with the second smart contract. Carbon credits are designed to be traded on the decentralised marketplace, where the price is determined by the supply and demand of its participants. Ultimately, carbon credits can be exchanged for carbon certificates indicating the certificate owner and the amount of carbon offset. Comparable with a university diploma, carbon certificates are tied to the address of the owner that initiated the exchange and are therefore non-tradable. Figure 1 illustrates the process of the described blockchain-based carbon offset solution:

Figure 1: Process flow of a blockchain-based carbon offset solution
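The three stages described above (receipt, credit, certificate) can be sketched as a small in-memory simulation. This is plain Python rather than smart contract code, and it is not the code of the proof of concept: the class, method and account names are hypothetical and only serve to make the token life cycle explicit.

```python
from dataclasses import dataclass, field
from itertools import count

_ids = count(1)  # simple generator of unique token ids


@dataclass
class Token:
    kind: str  # "receipt", "credit" or "certificate"
    owner: str
    kg: int
    tradable: bool = True
    token_id: int = field(default_factory=lambda: next(_ids))


class CarbonLedger:
    """Toy stand-in for the smart contracts (all names are hypothetical)."""

    def __init__(self):
        self.tokens = {}

    def _mint(self, kind, owner, kg, tradable=True):
        token = Token(kind, owner, kg, tradable)
        self.tokens[token.token_id] = token
        return token

    def _burn(self, token, kind, owner):
        if token.kind != kind or token.owner != owner:
            raise ValueError("wrong token type or caller is not the owner")
        del self.tokens[token.token_id]

    def claim_receipt(self, owner, kg_avoided):
        # Stage 1: the carbon avoided by the solar panel becomes a receipt.
        return self._mint("receipt", owner, kg_avoided)

    def receipt_to_credits(self, owner, receipt, unit_kg):
        # Stage 2: exchange a receipt for credits of a uniform size.
        if receipt.kg % unit_kg:
            raise ValueError("receipt is not a multiple of the credit size")
        self._burn(receipt, "receipt", owner)
        return [self._mint("credit", owner, unit_kg)
                for _ in range(receipt.kg // unit_kg)]

    def transfer(self, token, sender, recipient):
        # Marketplace trade; certificates are bound to their owner.
        if token.owner != sender or not token.tradable:
            raise ValueError("transfer not allowed")
        token.owner = recipient

    def credit_to_certificate(self, owner, credit):
        # Stage 3: retire a credit into a non-tradable certificate.
        self._burn(credit, "credit", owner)
        return self._mint("certificate", owner, credit.kg, tradable=False)


ledger = CarbonLedger()
receipt = ledger.claim_receipt("producer", 20)
credits = ledger.receipt_to_credits("producer", receipt, unit_kg=5)
ledger.transfer(credits[0], "producer", "buyer")
certificate = ledger.credit_to_certificate("buyer", credits[0])
print(len(credits), certificate.owner, certificate.kg, certificate.tradable)
```

Burning the input token before minting the output token ensures that the same amount of avoided carbon cannot be converted twice. On a real chain that guarantee would have to be enforced by the contract logic itself.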

Conclusion

The outlined blockchain-based carbon offset process was developed by Zanders’ blockchain team in a proof of concept (PoC). It was designed as an approach to reduce dependence on central players and as a transparent method of issuing carbon credits. The smart contracts that the platform interacts with are implemented on the Mumbai test network of the public Polygon blockchain, which allows for fast transaction processing and minimal fees. The PoC is up and running, tokenizing the carbon savings generated by the photovoltaic system of one of our colleagues, and can be showcased in a demo. However, there are some clear optimisations to the process that should be considered for a larger-scale (commercial) setup.

If you're interested in exploring the concept and benefits of a blockchain-based carbon offset process involving decentralised issuance and exchange of digital assets, or if you would like to see a demo, you can contact Robert Richter or Justus Schleicher.

Take-aways for bank risk management, supervision and regulation

In early October, the Basel Committee on Banking Supervision (BCBS) published a report[1] on the 2023 banking turmoil that involved the failure of several US banks as well as Credit Suisse. The report draws lessons for banking regulation and supervision which may ultimately lead to changes in banking regulation as well as supervisory practices. In this article we summarize the main findings of the report[2]. Based on the report’s assessment, the most material consequences for banks, in our view, could be in the following areas:

- Reparameterization of the LCR calculation and/or introduction of additional liquidity metrics

- Inclusion of assets accounted for at amortized cost at their fair value in the determination of regulatory capital

- Implementation of extended disclosure requirements for a bank's interest rate exposure and liquidity position

- More intensive supervision of smaller banks, especially those experiencing fast growth and concentration in specific client segments

- Application of the full Basel III Accord and the Basel IRRBB framework to a larger group of banks

Bank failures and underlying causes

The BCBS report first describes in some detail the events that led to the failure of each of the following banks in the spring of 2023:

- Silicon Valley Bank (SVB)

- Signature Bank of New York (SBNY)

- First Republic Bank (FRC)

- Credit Suisse (CS)

While each failure involved various bank-specific factors, the BCBS report highlights common features (with the relevant banks indicated in brackets).

- Long-term unsustainable business models (all), in part due to remuneration incentives for short-term profits

- Governance and risk management did not keep up with fast growth in recent years (SVB, SBNY, FRC)

- Ineffective oversight of risks by the board and management (all)

- Overreliance on uninsured customer deposits, which are more likely to be withdrawn in a stress situation (SVB, SBNY, FRC)

- Unprecedented speed of deposit withdrawals through online banking (all)

- Investment of short-term deposits in long-term assets without adequate interest-rate hedges (SVB, FRC)

- Failure to assess whether designated assets qualified as eligible collateral for borrowing at the central bank (SVB, SBNY)

- Client concentration risk in specific sectors and on both asset and liability side of the balance sheet (SVB, SBNY, FRC)

- Too much leniency by supervisors to address supervisory findings (SVB, SBNY, CS)

- Incomplete implementation of the Basel Framework: SVB, SBNY and FRC were not subject to the liquidity coverage ratio (LCR) of the Basel III Accord or to the BCBS standard on interest rate risk in the banking book (IRRBB)

Of the four failed banks, only Credit Suisse was subject to the LCR requirements of the Basel III Accord, in relation to which the BCBS report includes the following observations:

- A substantial part of the available high quality liquid assets (HQLA) at CS was needed for purposes other than covering deposit outflows under stress, in contrast to the assumptions made in the LCR calculation

- The bank hesitated to make use of the LCR buffer and to access emergency liquidity so as to avoid negative signalling to the market

Although not part of the BCBS report, these observations could lead to modifications to the LCR regulation in the future.

Lessons for supervision

With respect to supervisory practices, the BCBS report identifies various lessons learned and raises a few questions, divided into four main areas:

1. Banks’ business models

- Importance of forward-looking assessment of a bank’s capital and liquidity adequacy because accounting measures (on which regulatory capital and liquidity measures are based) mostly are not forward-looking in nature

- A focus on a bank’s risk-adjusted profitability

- Proactive engagement with ‘outlier banks’, e.g., banks that experienced fast growth and have concentrated funding sources or exposures

- Consideration of the impact of changes in the external environment, such as market conditions (including interest rates) and regulatory changes (including implementation of Basel III)

2. Banks’ governance and risk management

- Board composition, relevant experience and independent challenge of management

- Independence and empowerment of risk management and internal audit functions

- Establishment of an enterprise-wide risk culture and its embedding in corporate and business processes.

- Senior management remuneration incentives

3. Liquidity supervision

- Do the existing metrics (LCR, NSFR) and supervisory review suffice to identify the start of material liquidity outflows?

- Should the monitoring frequency of metrics be increased (e.g., weekly for business as usual and daily or even intra-day in times of stress)?

- Monitoring of concentration risks (clients as well as funding sources)

- Are sources of liquidity transferable within the legal entity structure and freely available in times of stress?

- Testing of contingency funding plans

4. Supervisory judgment

- Supplement rules-based regulation with supervisory judgment in order to intervene pro-actively when identifying risks that could threaten the bank’s safety and soundness. However, the report acknowledges that a supervisor may not be able to enforce (pre-emptive) action as long as an institution satisfies all minimum requirements. This will also depend on local legislative and regulatory frameworks

Lessons for regulation

In addition, the BCBS report identifies various potential enhancements to the design and implementation of bank regulation in four main areas:

1. Liquidity standards

- Consideration of daily operational and intra-day liquidity requirements in the LCR, based on the observation that a material part of the HQLA of CS was used for this purpose but this is not taken into account in the determination of the LCR

- Recalibration of deposit outflows in the calculation of LCR and NSFR, based on the observation that actual outflow rates at the failed banks significantly exceeded assumed outflows in the LCR and NSFR calculations

- Introduction of additional liquidity metrics such as a 5-day forward liquidity position, survival period and/or non-risk based liquidity metrics that do not rely on run-off assumptions (similar to the role of the leverage ratio in the capital framework)
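A small numerical sketch can show why the first two points matter for the reported ratio. The LCR is the stock of HQLA divided by net cash outflows over a 30-day stress horizon, with inflows capped at 75% of outflows. All balance sheet figures, the recalibrated run-off rate and the amount of HQLA tied up for intra-day needs are hypothetical assumptions chosen for illustration, not numbers from the BCBS report.

```python
def lcr(hqla, outflows, inflows):
    """Liquidity coverage ratio: HQLA over 30-day net cash outflows.

    Inflows are capped at 75% of outflows, as in the Basel III LCR standard.
    """
    net_outflows = outflows - min(inflows, 0.75 * outflows)
    return hqla / net_outflows


def deposit_outflows(balances, runoff_rates):
    """Sum of balance times assumed 30-day run-off rate per deposit category."""
    return sum(balances[name] * runoff_rates[name] for name in balances)


# Hypothetical balance sheet (EUR bn)
hqla = 30.0
inflows = 5.0
deposits = {"insured_retail": 100.0, "uninsured_corporate": 60.0}

# Illustrative run-off assumptions per category
base_rates = {"insured_retail": 0.05, "uninsured_corporate": 0.40}
# Assumed recalibration: higher run-off on uninsured deposits
recalibrated_rates = {"insured_retail": 0.05, "uninsured_corporate": 0.60}
# Assumed amount of HQLA needed for daily operational and intra-day liquidity
intraday_need = 6.0

base = lcr(hqla, deposit_outflows(deposits, base_rates), inflows)
recalibrated = lcr(hqla, deposit_outflows(deposits, recalibrated_rates), inflows)
net_of_intraday = lcr(hqla - intraday_need,
                      deposit_outflows(deposits, base_rates), inflows)

print(f"Base LCR:                   {base:.0%}")
print(f"With recalibrated run-off:  {recalibrated:.0%}")
print(f"With HQLA net of intra-day: {net_of_intraday:.0%}")
```

In this stylised example a bank that comfortably meets the 100% requirement under the base assumptions falls below it once run-off rates are recalibrated, and only just meets it once the HQLA committed to intra-day needs is excluded.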

2. IRRBB

- Implementation of the Basel standard on IRRBB, which did not apply to the US banks, could have made the interest rate risk exposures transparent and initiated timely action by management or regulatory intervention.

- More granular disclosure, covering for example positions with and without hedging, contractual maturities of banking book positions and modeling assumptions

3. Definition of regulatory capital

- Reflect unrealised gains and losses on assets that are accounted for at amortised cost (AC) in regulatory capital, analogous to the treatment of assets that are classified as available-for-sale (AFS). This is supported by the observation that unrealised losses on fixed-income assets held at amortised cost, resulting from the sharp rise in interest rates, were an important driver of the failure of several US banks when these assets were sold to create liquidity and unrealised losses turned into realised losses. The BCBS report includes the following considerations in this respect:

- If AC assets can be repo-ed to create liquidity instead of being sold, then there is no negative impact on the financial statement

- Treating unrealised gains and losses on AC assets in the same way as AFS assets will create additional volatility in earnings and capital

- The determination of HQLA in the LCR regulation requires that assets are measured at no more than market value. However, this does not prevent the negative capital impact described above

- Reconsideration of the role, definition and transparency of additional Tier-1 (AT1) instruments, considering the discussion following the write-off of AT1 instruments as part of the take-over of CS by UBS
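To make the amortised-cost consideration above concrete, the following sketch estimates the unrealised loss on a fixed-rate bond portfolio after a rise in market yields and shows what the capital ratio would look like if that loss were deducted from regulatory capital. The portfolio, the yields, the capital and the risk-weighted assets are hypothetical, and the calculation deliberately ignores tax effects, hedges and any change in risk-weighted assets.

```python
def bond_price(face, coupon_rate, market_yield, years):
    """Present value of an annual-coupon bullet bond."""
    coupon = face * coupon_rate
    pv_coupons = sum(coupon / (1 + market_yield) ** t
                     for t in range(1, years + 1))
    pv_face = face / (1 + market_yield) ** years
    return pv_coupons + pv_face


# Hypothetical bank (figures in USD bn)
face = 50.0          # bond portfolio bought at par, carried at amortised cost
cet1_capital = 15.0
rwa = 120.0

# Bonds pay a 2% coupon; market yields have risen to 5% with 5 years remaining
fair_value = bond_price(face, coupon_rate=0.02, market_yield=0.05, years=5)
unrealised_loss = face - fair_value

reported_ratio = cet1_capital / rwa
adjusted_ratio = (cet1_capital - unrealised_loss) / rwa

print(f"Fair value of portfolio: {fair_value:.2f}")
print(f"Unrealised loss:         {unrealised_loss:.2f}")
print(f"Reported CET1 ratio:     {reported_ratio:.1%}")
print(f"Ratio net of the loss:   {adjusted_ratio:.1%}")
```

The gap between the two ratios is the amount of capital that disappears if the bonds have to be sold rather than repo-ed, which is the mechanism the report describes for the failed US banks.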

4. Application of the Basel framework

- Broadening the application of the full Basel III framework beyond internationally active banks and/or developing complementary approaches to identify risks at domestic banks that could pose a threat to cross-border financial stability. The events in the spring of this year have demonstrated that distress at relatively small banks that are not subject to the (full) Basel III regulation can trigger broader and cross-border systemic concerns and contagion effects.

- Prudent application of the ‘proportionality’ principle to domestic banks, based on the observation that financial distress at such banks can have cross-border financial stability effects

- Harmonization of approaches that aim to ensure that sufficient capital and liquidity are available at individual legal entity level within banking groups

Conclusion

The BCBS report identifies common shortcomings in bank risk management practices and governance at the four banks that failed during the 2023 banking turmoil and summarizes key take-aways for bank supervision and regulation.

The identified shortcomings in bank risk management include gaps in the management of traditional banking risks (interest rate, liquidity and concentration risks), failure to appreciate the interrelation between individual risks, unsustainable business models driven by short-term incentives at the expense of appropriate risk management, poor risk culture, ineffective senior management and board oversight as well as a failure to adequately respond to supervisory feedback and recommendations.

Key take-aways for effective supervision include enforcing prompt action by banks in response to supervisory findings, actively monitoring and assessing potential implications of structural changes to the banking system, and maintaining effective cross-border supervisory cooperation.

Key lessons for regulatory standards include the importance of full and consistent implementation of Basel standards as well as potential enhancements of the Basel III liquidity standards, the regulatory treatment of interest rate risk in the banking book, the treatment of assets that are accounted for at amortised cost within regulatory capital and the role of additional Tier-1 capital instruments.

The BCBS report is intended as a starting point for discussion among banking regulators and supervisors about possible changes to banking regulation and supervisory practices. For those interested in engaging in discussions related to the insights and recommendations in the BCBS report, please feel free to contact Pieter Klaassen.

[1] Report on the 2023 banking turmoil (bis.org) (accessed on October 19, 2023)

[2] Although recognized as relevant in relation to the banking turmoil, the BCBS report explicitly excludes from its consideration the role and design of deposit guarantee schemes, the effectiveness of resolution arrangements, the use and design of central bank lending facilities and FX swap lines, and public support measures in banking crises.

In March 2023, SWIFT took a major step in its MT/MX migration journey. How does this impact your activities in SAP?

SWIFT now supports the exchange of ISO 20022 XML (MX) messages via the so-called FINplus network. In parallel, legacy MT format messages continue to be exchanged over the ‘regular’ FIN network. The MT flow for message categories 1 (customer payments), 2 (FI transfers) and 9 (statements) through the FIN network will be decommissioned in November 2025.

As such, between March 2023 and November 2025, financial institutions need to be able to receive and process MX messages through FINplus on the inbound side, and can choose to send either MX or MT messages on the outbound side. After that period, only MX will be allowed for these message categories.
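The coexistence rule above can be sketched as a small date check. This is an illustration only: the function names are hypothetical, the boundaries are kept at month level because the text gives no exact cut-over days, and treating November 2025 itself as still inside the coexistence window is an assumption.

```python
from datetime import date

# Month-level boundaries taken from the text (exact days are not stated there).
COEXISTENCE_START = (2023, 3)   # MX exchange over FINplus becomes available
COEXISTENCE_END = (2025, 11)    # MT categories 1, 2 and 9 leave the FIN network


def outbound_formats(on: date) -> set:
    """Formats an institution may send for category 1, 2 and 9 messages."""
    year_month = (on.year, on.month)
    if year_month < COEXISTENCE_START:
        return {"MT"}
    if year_month <= COEXISTENCE_END:  # assumption: November 2025 still coexists
        return {"MT", "MX"}
    return {"MX"}


def must_receive_mx(on: date) -> bool:
    """Inbound MX processing is required from the start of coexistence onwards."""
    return (on.year, on.month) >= COEXISTENCE_START


if __name__ == "__main__":
    for day in (date(2023, 1, 15), date(2024, 6, 1), date(2026, 1, 1)):
        print(day, sorted(outbound_formats(day)), must_receive_mx(day))
```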

CBPR+ and HVPS+

Another important aspect of the MX migration is the development of the CBPR+ and HVPS+ specifications within the ISO 20022 XML standard. These specifications dictate how an XML message should be populated in terms of data and field requirements for Cross Border Payments (CBPR+) and Domestic High Value Payments (HVPS+). Note that HVPS+ refers to domestic RTGS clearing systems, and a number of countries are in the process of making their domestic clearing systems natively ISO 20022 XML-compliant.

Impact for Corporates

As of today, there should be no immediate need for corporates to change. However, it is advised to start assessing impact and to start planning for change if needed. We give you some cases to consider:

- A corporate currently exchanges, for example, MT101/MT103/MT210 messages with its house bank via the SWIFT FIN network to make cross-border payments, using e.g. a SWIFT Service Bureau or an Alliance connection. This flow will cease to work after November 2025. If it is relevant to your company, it needs to be replaced.

- Another case is where, for example, an MT101 is exchanged with a house bank as a file over the FileAct network. Whether this flow continues to work after 2025 depends purely on the house bank’s capabilities; the bank could offer a service that remaps your MT message into an MX message. This needs to be checked with the house banks.

- The MT940 message flow from the house bank via FIN also requires replacement.

- With respect to the MT940 file flow from the house bank via FileAct, we expect little impact as we think most banks will continue supporting the MT940 format exchange as files. We do recommend checking with your house bank to be sure.

- High Value Payments for Domestic Japanese Yen using Zengin format; the BOJ-NET RTGS clearing system has already completed the migration to the ISO 20022 XML standard. Check with your house bank when the legacy payment format will become unsupported and take action accordingly.

These were just some examples and should not be considered an exhaustive list.
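As a starting point for an impact assessment, the FIN and FileAct cases above could be captured in a simple inventory check. The sketch below is hypothetical: the `Flow` class, the `assess` function and the outcome labels are illustrative names, and the rules only encode the cases described in this article.

```python
from dataclasses import dataclass

# MT categories whose FIN flow is decommissioned (first digit of the MT number).
FIN_SUNSET_CATEGORIES = {"1", "2", "9"}


@dataclass(frozen=True)
class Flow:
    message_type: str  # e.g. "MT101" or "MT940"
    channel: str       # "FIN" or "FileAct"


def assess(flow: Flow) -> str:
    """Classify a bank connectivity flow using the cases discussed above."""
    if not flow.message_type.startswith("MT"):
        return "outside the cases discussed"
    category = flow.message_type[2:3]
    if category not in FIN_SUNSET_CATEGORIES:
        return "outside the cases discussed"
    if flow.channel == "FIN":
        return "replace before FIN sunset"
    if flow.channel == "FileAct":
        return "confirm with house bank"
    return "outside the cases discussed"


if __name__ == "__main__":
    inventory = [Flow("MT101", "FIN"), Flow("MT101", "FileAct"),
                 Flow("MT940", "FIN"), Flow("MT940", "FileAct")]
    for flow in inventory:
        print(flow.message_type, flow.channel, "->", assess(flow))
```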

In addition, moving to the ISO20022 XML standard can also provide some softer benefits. We discussed this in a previously published article.

Impact on your SAP implementation

So you have determined that the MT/MX migration has impact and that remediating actions need to be taken. What does that look like in SAP?

First of all, it is very important to onboard the bank to support you with your change. Most typically, the bank needs to prepare its systems to be able to receive a new payment file format from your end. It is good practice to first test the payment file formatting and receive feedback from the bank’s implementation manager before going live with it.

On the incoming side, it is advisable to first request a number of production bank statements in e.g. the new CAMT.053 format, which can be analyzed and loaded in your test system. This will form a good basis for understanding the changes needed in bank statement posting logic in the SAP system.

PAYMENTS

In general, there are two ways of generating payment files in SAP. The classical one is via a payment method linked to a Payment Medium Workbench (PMW) format and a Data Medium Exchange (DME) tree. This payment method is then linked to your open items which can be processed with the payment run. The payment run then outputs the files as determined in your DME tree.

In this scenario, the idea is to simply set up a new payment method and link it to the desired PMW/DME output, such as pain.001.001.03. These formats have long been pre-delivered in standard SAP, in both ECC and S/4. It may be necessary to make minor mapping corrections to meet country- or bank-specific data requirements. Under most circumstances this can be achieved by a functional consultant using DME configuration. Once the payment method is fully configured, it can be linked to your customer and vendor master records or your treasury business partners, for example.
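To give a feel for the target format, the sketch below builds a heavily trimmed pain.001.001.03 credit transfer initiation outside SAP. It is not how a DME tree works and is not schema-complete; the function name, the input dictionary keys and the sample account data are illustrative assumptions, and banks typically require additional fields.

```python
import xml.etree.ElementTree as ET
from decimal import Decimal

NS = "urn:iso:std:iso:20022:tech:xsd:pain.001.001.03"


def _add(parent, tag, text=None, **attrs):
    """Append a namespaced child element and return it."""
    element = ET.SubElement(parent, f"{{{NS}}}{tag}", attrs)
    if text is not None:
        element.text = text
    return element


def build_pain001(msg_id, created, debtor, debtor_iban, debtor_bic,
                  execution_date, payments):
    """Return a minimal pain.001.001.03 document as a string (illustrative)."""
    ET.register_namespace("", NS)  # serialize with a default namespace
    document = ET.Element(f"{{{NS}}}Document")
    initiation = _add(document, "CstmrCdtTrfInitn")

    header = _add(initiation, "GrpHdr")
    _add(header, "MsgId", msg_id)
    _add(header, "CreDtTm", created)
    _add(header, "NbOfTxs", str(len(payments)))
    _add(header, "CtrlSum", str(sum(p["amount"] for p in payments)))
    _add(_add(header, "InitgPty"), "Nm", debtor)

    batch = _add(initiation, "PmtInf")
    _add(batch, "PmtInfId", msg_id + "-1")
    _add(batch, "PmtMtd", "TRF")
    _add(batch, "ReqdExctnDt", execution_date)
    _add(_add(batch, "Dbtr"), "Nm", debtor)
    _add(_add(_add(batch, "DbtrAcct"), "Id"), "IBAN", debtor_iban)
    _add(_add(_add(batch, "DbtrAgt"), "FinInstnId"), "BIC", debtor_bic)

    for p in payments:
        tx = _add(batch, "CdtTrfTxInf")
        _add(_add(tx, "PmtId"), "EndToEndId", p["end_to_end_id"])
        _add(_add(tx, "Amt"), "InstdAmt", str(p["amount"]), Ccy=p["currency"])
        _add(_add(tx, "Cdtr"), "Nm", p["creditor"])
        _add(_add(_add(tx, "CdtrAcct"), "Id"), "IBAN", p["creditor_iban"])
        _add(_add(tx, "RmtInf"), "Ustrd", p["remittance"])

    return ET.tostring(document, encoding="unicode")


if __name__ == "__main__":
    # Sample data only; names and account numbers are placeholders.
    print(build_pain001(
        "MSG-0001", "2023-10-19T09:00:00", "Example Corp BV",
        "NL91ABNA0417164300", "ABNANL2A", "2023-10-20",
        [{"end_to_end_id": "INV-1001", "amount": Decimal("100.50"),
          "currency": "EUR", "creditor": "Supplier GmbH",
          "creditor_iban": "DE89370400440532013000",
          "remittance": "Invoice 1001"}]))
```

Compared with an MT101, each piece of data sits in its own named element, which is what makes bank-specific mapping corrections relatively contained.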

The newer method of generating payment files is via the Advanced Payment Management (SAP APM) module. SAP APM facilitates centralizing payments for your whole group in a so-called payment factory. It is only available in S/4 and is promoted by SAP as the new way of implementing payment factories.

Here it is a matter of linking the new output format to your applicable scenario or ‘payment route’.

BANK STATEMENTS

Classical MT940 bank statements are read by SAP using ABAP logic. The code interprets the information that is stored in the file and saves parts of it to internal database tables. The stored internal data is then interpreted a second time to determine how the posting and clearing of open items will take place.
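The two-pass idea can be illustrated outside SAP with a short Python sketch. This is not SAP's ABAP logic: the function names, the sample statement and the simplified pattern for the `:61:` statement line are assumptions for illustration, and real MT940 files contain more variations than handled here.

```python
import re
from datetime import datetime
from decimal import Decimal

SAMPLE = """\
:20:STMT0001
:25:NL91ABNA0417164300
:28C:00001/001
:60F:C231018EUR1000,00
:61:2310191019C250,00NTRFINV-1001//BANKREF1
:86:Payment invoice 1001
:62F:C231019EUR1250,00
"""

TAG_LINE = re.compile(r"^:(\d{2}[A-Z]?):(.*)$")
# Simplified :61: layout: value date, optional entry date, debit/credit mark,
# optional funds code, amount, transaction type, reference, optional bank ref.
STATEMENT_LINE = re.compile(
    r"^(?P<value_date>\d{6})(?P<entry_date>\d{4})?(?P<mark>R?[CD])"
    r"(?P<funds>[A-Z])?(?P<amount>\d+,\d*)(?P<type>[A-Z][A-Z0-9]{3})"
    r"(?P<ref>.*?)(?://(?P<bank_ref>.*))?$")


def read_fields(text):
    """Pass 1: store the raw file as (tag, value) pairs."""
    fields = []
    for line in text.strip().splitlines():
        match = TAG_LINE.match(line)
        if match:
            fields.append([match.group(1), match.group(2)])
        elif fields:  # continuation line belongs to the previous tag
            fields[-1][1] += "\n" + line
    return [tuple(field) for field in fields]


def interpret(fields):
    """Pass 2: interpret the stored fields into transactions."""
    transactions, previous_tag = [], None
    for tag, value in fields:
        if tag == "61":
            match = STATEMENT_LINE.match(value.split("\n", 1)[0])
            if match:
                sign = 1 if match["mark"] in ("C", "RD") else -1
                transactions.append({
                    "value_date": datetime.strptime(
                        match["value_date"], "%y%m%d").date(),
                    "amount": sign * Decimal(match["amount"].replace(",", ".")),
                    "type": match["type"],
                    "reference": match["ref"],
                    "note": "",
                })
        elif tag == "86" and previous_tag == "61" and transactions:
            transactions[-1]["note"] = value
        previous_tag = tag
    return transactions


if __name__ == "__main__":
    for transaction in interpret(read_fields(SAMPLE)):
        print(transaction)
```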

Processing of CAMT.053 works a bit differently: the data from the file is interpreted by a so-called XSLT transformation. This XSLT transformation is a configurable mapping in which a CAMT.053 field maps into an internal database table field. SAP has a standard XSLT transformation package that is fairly capable for most use cases. However, certain pieces of useful information in the CAMT.053 may be ignored by SAP. In that case, the XSLT transformation can be adjusted to ensure the data is picked up and made available for further interpretation by the system.
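The mapping principle can be illustrated with a small Python analogy. This is not SAP's XSLT: it uses path expressions from the standard library instead, the target field names are hypothetical, and the statement is a trimmed fragment rather than a schema-valid camt.053.001.02 file. The extended mapping shows how an extra field (here the end-to-end ID) could be picked up by adding one mapping entry.

```python
import xml.etree.ElementTree as ET

NS = {"c": "urn:iso:std:iso:20022:tech:xsd:camt.053.001.02"}

SAMPLE = """<Document xmlns="urn:iso:std:iso:20022:tech:xsd:camt.053.001.02">
  <BkToCstmrStmt><Stmt>
    <Ntry>
      <Amt Ccy="EUR">250.00</Amt>
      <CdtDbtInd>CRDT</CdtDbtInd>
      <BookgDt><Dt>2023-10-19</Dt></BookgDt>
      <BkTxCd><Domn><Cd>PMNT</Cd>
        <Fmly><Cd>RCDT</Cd><SubFmlyCd>ESCT</SubFmlyCd></Fmly>
      </Domn></BkTxCd>
      <NtryDtls><TxDtls><Refs>
        <EndToEndId>INV-1001</EndToEndId>
      </Refs></TxDtls></NtryDtls>
    </Ntry>
  </Stmt></BkToCstmrStmt>
</Document>"""

# Hypothetical internal field name -> path inside each <Ntry> element.
BASE_MAPPING = {
    "amount": "c:Amt",
    "direction": "c:CdtDbtInd",
    "booking_date": "c:BookgDt/c:Dt",
    "btc_domain": "c:BkTxCd/c:Domn/c:Cd",
}

# The adjustment: one extra entry makes a previously ignored field available.
EXTENDED_MAPPING = {
    **BASE_MAPPING,
    "end_to_end_id": "c:NtryDtls/c:TxDtls/c:Refs/c:EndToEndId",
}


def map_entries(xml_text, mapping):
    """Apply a field mapping to every statement entry in the document."""
    root = ET.fromstring(xml_text)
    return [
        {field: entry.findtext(path, default=None, namespaces=NS)
         for field, path in mapping.items()}
        for entry in root.iterfind(".//c:Ntry", NS)
    ]


if __name__ == "__main__":
    print(map_entries(SAMPLE, BASE_MAPPING))
    print(map_entries(SAMPLE, EXTENDED_MAPPING))
```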

Another fact to be aware of is the difference in Bank Transaction Codes (BTC) between MT940 and CAMT.053. The level of granularity may differ, and the naming convention is different. BTCs are the main differentiator in SAP to control posting logic.
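The difference in granularity can be made concrete with a lookup sketch. The MT940 side uses a single four-character transaction type, whereas the CAMT.053 side uses a domain, family and sub-family. The posting rule identifiers (`Z001` and so on) and the fallback from specific to generic codes are illustrative assumptions, not SAP configuration.

```python
# Hypothetical posting rules keyed by MT940 transaction type code.
MT940_RULES = {
    "NTRF": "Z001",  # transfer
    "NDDT": "Z002",  # direct debit
    "NCHG": "Z003",  # charges
}

# Hypothetical posting rules keyed by CAMT.053 (domain, family, sub-family).
# None acts as a wildcard for a less specific match.
CAMT_RULES = {
    ("PMNT", "RCDT", "ESCT"): "Z001",  # received SEPA credit transfer
    ("PMNT", "RDDT", "ESDD"): "Z002",  # received SEPA direct debit
    ("PMNT", "RCDT", None): "Z010",    # any other received credit transfer
    ("PMNT", None, None): "Z099",      # any other payment
}


def posting_rule_mt940(transaction_type):
    """Look up the posting rule for an MT940 transaction type, if any."""
    return MT940_RULES.get(transaction_type)


def posting_rule_camt(domain, family, sub_family):
    """Try the most specific code first, then fall back to broader ones."""
    candidates = (
        (domain, family, sub_family),
        (domain, family, None),
        (domain, None, None),
    )
    for key in candidates:
        if key in CAMT_RULES:
            return CAMT_RULES[key]
    return None


if __name__ == "__main__":
    print(posting_rule_mt940("NTRF"))
    print(posting_rule_camt("PMNT", "RCDT", "ESCT"))
    print(posting_rule_camt("PMNT", "RCDT", "XBCT"))
```

When migrating, each MT940 code in use has to be matched to the CAMT.053 codes the bank actually delivers, which is why analyzing production statements first is useful.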

SAP Incoming File Mapping Engine (IFME)

SAP has also put forward a module called Incoming File Mapping Engine (IFME). It serves as a ‘remapper’ of one output format to another output format. As an example, if your current payment method outputs an MT101, the remapper can take the pieces of information from the MT101 and save them in a pain.001 XML file.

Although there may be some fringe scenarios for this solution, we do not recommend such an approach, as MT101 is generally weaker in terms of data structure and content than XML. Mapping it into some other format will not solve the problems that MT101 has in general. It is much better to generate the appropriate format directly from the internal SAP data to ensure maximum richness and structure. Remapping should therefore only be considered as a last resort or as a temporary solution.