Emergence of Artificial Intelligence and Machine Learning

The rise of ChatGPT has brought generative artificial intelligence (GenAI) into the mainstream, accelerating adoption across industries ranging from healthcare to banking. (Gen)AI is being adopted faster than earlier technological advances, putting pressure on individuals and companies to adapt their ways of working. While GenAI uses data to create new content, traditional AI is typically designed to perform specific tasks such as making predictions or classifications. Both approaches are built on complex machine learning (ML) models which, when applied correctly, can be highly effective even on a stand-alone basis.

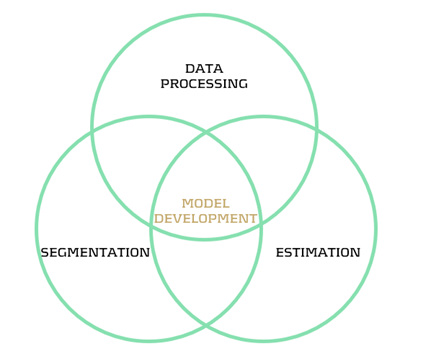

Figure 1: Model development steps

Though ML techniques are known for their accuracy, a major challenge lies in their complexity and limited interpretability. Unlocking the full potential of ML requires not only technical expertise, but also deep domain knowledge. Asset and Liability Management (ALM) departments can benefit from ML, for example in the area of behavioral modeling. In this article, we explore the application of ML in prepayment modeling (data processing, segmentation, estimation) based on research conducted at a Dutch bank. The findings demonstrate how ML can improve the accuracy of prepayment models, leading to better cashflow forecasts and, consequently, a more accurate hedge. By building ML capabilities in this context, ALM teams can play a key role in shaping the future of behavioral modeling throughout the whole model development process.

Prepayment Modeling and Machine Learning

Prepayment risk is a critical concern for financial institutions, particularly in the mortgage sector, where borrowers have the option to repay (part of) their loans earlier than contractually agreed. While prepayments can be beneficial for borrowers (allowing them to refinance at lower interest rates or reduce their debt obligations), they present several challenges for financial institutions. Uncertainty in prepayment behavior makes it harder to predict the duration mismatch and to size the corresponding interest rate hedge.

Effective prepayment modeling, which means accurately forecasting borrower behavior, is crucial for financial institutions seeking to manage interest rate risks. Improved forecasting enables institutions to better anticipate cash flow fluctuations and implement more robust hedging strategies. To facilitate the modeling, data is segmented based on similar prepayment characteristics. This segmentation is often based on expert judgment and extensive data analysis, accounting for factors like loan age, interest rate, type, and borrower characteristics. Each segment is then analyzed through tailored prepayment models, such as logistic regression or survival models [1].

ML techniques offer significant potential to enhance segmentation and estimation in prepayment modeling. In rapidly changing interest rate environments, traditional models often struggle to accurately capture borrower behavior that deviates from conventional financial logic. In contrast, ML models can detect complex, non-linear patterns and adapt to changing behaviour, improving predictive accuracy by uncovering hidden relationships. Investigating such relationships becomes particularly relevant when borrower actions undermine traditional assumptions, as was the case in early 2021, when interest rates began to rise but prepayment rates did not decline immediately.

Real-world application

In collaboration with a Dutch bank, we conducted research on the application of ML in prepayment modeling within the Dutch mortgage market. The applications include data processing, segmentation, and estimation, followed by an interpretation of the results using ML-specific interpretability metrics. Despite being constrained by limited computational power, the ML-based approaches outperformed the traditional methods, demonstrating superior predictive accuracy and a stronger ability to capture complex patterns in the data. The specific applications are highlighted below.

Data processing

One of the first steps in model development is ensuring that the data is fit for use. An ML technique commonly applied for outlier detection is the DBSCAN algorithm. This clustering method relies on the concept of distance to identify groups of observations, flagging those that do not fit well into any cluster as potential outliers. Because the user sets its parameters (the neighborhood radius and the minimum number of points that form a dense region), DBSCAN can be tuned to detect outliers across a wide range of datasets.
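As a minimal illustration of this idea, the sketch below implements the core DBSCAN logic in plain Python and flags points that belong to no cluster. The data points, the two standardized features and the parameter values (`eps`, `min_samples`) are hypothetical and chosen for illustration only; they are not taken from the research, and a production setup would typically rely on an optimized library implementation.

```python
import math

def dbscan(points, eps, min_samples):
    """Return one label per point: a cluster id (0, 1, ...) or -1 for noise."""
    n = len(points)
    # Neighborhood of each point: all points within distance eps (itself included)
    neighbors = [
        [j for j in range(n) if math.dist(points[i], points[j]) <= eps]
        for i in range(n)
    ]
    labels = [None] * n  # None means "not visited yet"
    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        if len(neighbors[i]) < min_samples:
            labels[i] = -1  # provisional noise; may still become a border point
            continue
        cluster += 1  # i is a core point: start a new cluster and expand it
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reachable from a core point is a border point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            if len(neighbors[j]) >= min_samples:
                queue.extend(neighbors[j])  # j is itself a core point: keep expanding
    return labels

# Hypothetical standardized loan features, e.g. (loan age, rate incentive)
points = [(1.0, 1.0), (1.1, 0.9), (0.9, 1.1), (1.0, 1.2),
          (5.0, 5.0), (5.1, 4.9), (4.9, 5.1), (5.0, 5.2),
          (9.0, 0.5)]
labels = dbscan(points, eps=0.5, min_samples=3)
outliers = [p for p, label in zip(points, labels) if label == -1]
print(labels)
print(outliers)
```

In this toy dataset the last observation sits far from both dense groups, so it receives the noise label and would be passed on for review as a potential outlier.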

Another example is an isolation forest algorithm. It detects outliers by randomly splitting the data and measuring how quickly a point becomes isolated. Outliers tend to be separated faster, since they share fewer similarities with the rest of the data. The model assigns an anomaly score based on how few splits were needed to isolate each point, where fewer splits suggest a higher likelihood of being an outlier. The isolation forest method is computationally efficient, performs well with large datasets, and does not require labelled data.
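The isolation principle can be sketched in a few lines of plain Python. This is a simplified illustration, not a full isolation forest: it simulates the path of each point through random splits on the complete dataset and averages the number of splits, whereas library implementations build trees on subsamples and convert path lengths into a normalized anomaly score. The data, the seeds and the function names are assumptions made for this example.

```python
import random

def path_length(point, data, rng, max_depth=20):
    """Number of random splits needed to isolate `point` from `data`."""
    current, depth = data, 0
    while len(current) > 1 and depth < max_depth:
        f = rng.randrange(len(point))            # pick a random feature
        lo = min(row[f] for row in current)
        hi = max(row[f] for row in current)
        split = rng.uniform(lo, hi)              # pick a random split value
        # Keep only the side of the split that contains the point
        if point[f] < split:
            current = [row for row in current if row[f] < split]
        else:
            current = [row for row in current if row[f] >= split]
        depth += 1
    return depth

def average_path_lengths(data, n_trees=100, seed=0):
    """Average isolation depth per point; shorter means more anomalous."""
    rng = random.Random(seed)
    return [
        sum(path_length(p, data, rng) for _ in range(n_trees)) / n_trees
        for p in data
    ]

# Hypothetical data: 50 ordinary observations plus one unusual one
gen = random.Random(42)
data = [(gen.gauss(0, 1), gen.gauss(0, 1)) for _ in range(50)]
data.append((8.0, 8.0))

scores = average_path_lengths(data)
most_anomalous = min(range(len(data)), key=lambda i: scores[i])
print(data[most_anomalous], round(scores[most_anomalous], 2))
```

The unusual observation is separated after only a few splits on average, while ordinary observations need many more, which is the signal the anomaly score is built on.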

Segmentation

Following the data processing step, where outliers are identified, evaluated, and treated appropriately, the next phase in model development involves analyzing the dataset to define economically meaningful segments. ML-based clustering techniques are well-suited for deriving segments from data. It is important to note that mortgage data is generally high-dimensional and contains a large number of observations. As a result, clustering techniques must be carefully selected to ensure they can handle high-volume data efficiently within reasonable timelines. Two effective techniques for this purpose are K-means clustering and decision trees.

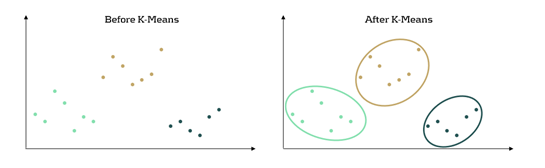

K-means clustering is an ML algorithm used to partition data into distinct segments based on similarity. Data points that are close to each other in a multi-dimensional space are grouped together, as illustrated in Figure 2. In the context of mortgage portfolio segmentation, K-means enables the grouping of loans with similar characteristics, making the segmentation process data-driven rather than based on predefined rules.

Figure 2: K-means concept in a 2-dimensional space. Before clustering, the dataset appears as a single unstructured whole; K-means reveals three distinct segments in the data
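The grouping step can be sketched as follows. This is a minimal plain-Python version of the standard K-means iteration (assign each point to its nearest centroid, then move each centroid to the mean of its members). The nine data points, the two standardized features and the fixed starting centroids are hypothetical; practical implementations usually choose starting centroids randomly or with a dedicated initialization scheme.

```python
import math

def kmeans(points, centroids, n_iter=20):
    """Plain K-means: returns (labels, centroids) after convergence or n_iter."""
    labels = []
    for _ in range(n_iter):
        # Assignment step: each point joins the segment of its nearest centroid
        labels = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members
        new_centroids = []
        for k in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                new_centroids.append(
                    tuple(sum(coord) / len(members) for coord in zip(*members))
                )
            else:
                new_centroids.append(centroids[k])  # keep an empty segment's centroid
        if new_centroids == centroids:
            break  # converged: assignments will not change any more
        centroids = new_centroids
    return labels, centroids

# Hypothetical standardized loan features, e.g. (loan age, rate incentive)
points = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3),
          (5.0, 5.0), (5.2, 4.9), (4.9, 5.3),
          (0.0, 5.0), (0.3, 5.2), (0.1, 4.8)]
start = [points[0], points[3], points[6]]  # fixed start for reproducibility

labels, centroids = kmeans(points, start)
print(labels)
```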

Another ML technique useful for segmentation is the decision tree. This method involves splitting the dataset based on certain variables in a way that optimizes a predefined objective. A key advantage of tree-based methods is their interpretability: it is easy to see which variables drive the splits and to assess whether those splits make economic sense. Variable importance measures, like Information Gain, help interpret the decision tree by showing how much each split reduces the entropy (uncertainty). Lower entropy means the data is more organized, allowing for clearer and more meaningful segments to be created.
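To make the entropy argument concrete, the sketch below computes the Information Gain of a candidate split by hand. The prepayment flags and the two candidate splits are made-up numbers for illustration only.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(parent, left, right):
    """Entropy of the parent minus the size-weighted entropy of the children."""
    n = len(parent)
    weighted = len(left) / n * entropy(left) + len(right) / n * entropy(right)
    return entropy(parent) - weighted

# Hypothetical prepayment flags for eight loans (1 = prepaid, 0 = not prepaid)
parent = [1, 1, 1, 1, 0, 0, 0, 0]

# A weak split leaves both child segments mixed
weak_gain = information_gain(parent, [1, 1, 1, 0], [1, 0, 0, 0])

# A perfect split produces two pure child segments
perfect_gain = information_gain(parent, [1, 1, 1, 1], [0, 0, 0, 0])

print(round(weak_gain, 4), round(perfect_gain, 4))
```

The perfect split removes all uncertainty and gains the full bit of parent entropy, while the weak split gains only a fraction of it, so a tree optimizing Information Gain would prefer the former.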

Estimation

Once the segments are defined, the final step involves applying prediction models to each segment. The segments resulting from ML models can be used in traditional estimation models such as logistic regression or a survival model, but ML-based estimation models can also be used. An example of such an ML estimation technique is XGBoost. The method combines many small decision trees, each of which learns from the errors of the previous ones, so that predictions improve step by step. In our research, applying this estimation method in combination with ML-based segments outperformed traditional methods on the dataset used.
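The principle of small trees learning from previous errors can be illustrated with a bare-bones gradient boosting loop. Note that this is plain gradient boosting with one-split trees and squared error, written in pure Python; it is not XGBoost itself, which adds regularization and many other refinements. The feature values, prepayment rates and learning rate are hypothetical.

```python
def fit_stump(x, residuals):
    """Fit the best one-split regression tree (stump) to the residuals."""
    best = None
    for threshold in sorted(set(x))[:-1]:  # every split leaves both sides non-empty
        left = [r for xi, r in zip(x, residuals) if xi <= threshold]
        right = [r for xi, r in zip(x, residuals) if xi > threshold]
        lmean, rmean = sum(left) / len(left), sum(right) / len(right)
        sse = (sum((r - lmean) ** 2 for r in left)
               + sum((r - rmean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, lmean, rmean)
    _, threshold, lmean, rmean = best
    return lambda xi: lmean if xi <= threshold else rmean

def boost(x, y, n_trees, learning_rate=0.5):
    """Each new stump is fitted to the errors left by the current ensemble."""
    base = sum(y) / len(y)
    pred = [base] * len(y)
    trees = []
    for _ in range(n_trees):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        tree = fit_stump(x, residuals)
        trees.append(tree)
        pred = [pi + learning_rate * tree(xi) for xi, pi in zip(x, pred)]
    return lambda xi: base + learning_rate * sum(t(xi) for t in trees)

def mse(model, x, y):
    """Mean squared error of the model on the given data."""
    return sum((yi - model(xi)) ** 2 for xi, yi in zip(x, y)) / len(y)

# Hypothetical segment data: rate incentive (in %) vs. observed prepayment rate
x = [-2, -1, 0, 1, 2, 3]
y = [0.02, 0.02, 0.03, 0.06, 0.10, 0.11]

errors = [mse(boost(x, y, n), x, y) for n in (0, 1, 10)]
print(errors)
```

The in-sample error falls as trees are added. In practice the number of trees and the learning rate would be tuned on out-of-sample data to avoid overfitting.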

Interpretability

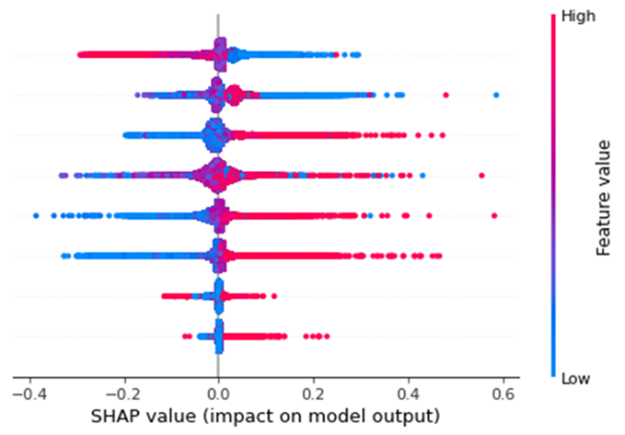

Though the techniques show added value, a significant drawback of using ML models for both segmentation and estimation is their tendency to be perceived as black boxes. A lack of transparency can be problematic for financial institutions, where interpretability is crucial to ensure compliance with regulatory and internal requirements. The SHapley Additive exPlanations (SHAP) method provides an insightful way of explaining predictions made by ML models. It attributes each prediction to the individual features and, by averaging these contributions across many predictions, shows which features are most important for the model and how they affect the model outcome. This makes it a powerful tool for explaining complex models, enhancing interpretability and enabling practical use in regulated industries and decision-making processes.

Figure 3 presents an illustrative SHAP plot, showing how different features (variables) influence the ML model’s prepayment rate predictions on a per-observation basis. These features are given on the y-axis, in this case 8 features. Each dot represents a prepayment observation, with its position on the x-axis indicating the SHAP value. This value indicates the impact of that feature on the predicted prepayment rate. Positive values on the x-axis indicate that the feature increased the prediction, while negative values show a decreasing effect. For example, the feature on the bottom indicates that high feature values have a positive effect on the prepayment prediction. This type of plot helps identify the key drivers of prepayment estimates within the model as it can be seen that the bottom two features have the smallest impact on the model output. It also supports stakeholder communication of model results, offering an additional layer of evaluation beyond the conventional in-sample and out-of-sample performance metrics.

Figure 3: Illustrative SHAP plot
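For intuition on where the values in such a plot come from, the sketch below computes exact Shapley values for a single prediction of a tiny, made-up model by enumerating all feature coalitions. The toy model, the observation and the all-zero baseline are assumptions for illustration. SHAP libraries use faster algorithms and typically represent "missing" features with a background dataset rather than a single baseline point.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values of each feature for the prediction model(x)."""
    n = len(x)

    def value(coalition):
        # Features in the coalition take the observed value, the others the baseline
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                gain = value(set(subset) | {i}) - value(set(subset))
                total += weight * gain  # weighted marginal contribution of feature i
        phi.append(total)
    return phi

def toy_model(z):
    """Hypothetical prepayment-rate model with one interaction term."""
    incentive, age, season = z
    return 0.03 + 0.02 * incentive + 0.01 * age + 0.005 * incentive * season

x = [2.0, 1.0, 1.0]          # the observation to explain
baseline = [0.0, 0.0, 0.0]   # reference point representing "feature absent"

phi = shapley_values(toy_model, x, baseline)
print([round(v, 4) for v in phi])
```

The contributions add up to the difference between the prediction for the observation and the prediction at the baseline, which is the additivity property that makes the dots in a SHAP plot directly comparable across features.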

Conclusion

ML techniques can improve prepayment modeling throughout various stages of the model development process, specifically in data processing, segmentation and estimation. By unlocking the full potential of ML in these areas, future cashflows can be estimated more precisely, resulting in a more accurate hedge. However, the trade-off between interpretability and accuracy remains an important consideration. Traditional methods offer high transparency and ease of implementation, which is particularly valuable in a heavily regulated financial sector, whereas ML models can be considered a black box. Explainability techniques such as SHAP help bridge this gap, providing financial institutions with insights into ML model decisions and supporting compliance with internal and regulatory expectations for model transparency.

In the coming years, (Gen)AI and ML are expected to continue expanding their presence across the financial industry. This creates a growing need to explore opportunities for enhancing model performance, interpretability, and decision-making using these technologies. Beyond prepayment modeling, (Gen)AI and ML techniques are increasingly being applied in areas such as credit risk modeling, fraud detection, stress testing, and treasury analytics.

Zanders has extensive experience in applying advanced analytics across a wide range of financial domains, e.g.:

- Development of a standardized GenAI validation policy for foundational models (i.e., large, general-purpose AI models), ensuring responsible, explainable, and compliant use of GenAI technologies across the organization.

- Application of ML to distinguish between stable and non-stable portions of deposit balances, supporting improved behavioural assumptions for liquidity and interest rate risk management.

- Use of ML in credit risk to monitor the performance and stability of the production Probability of Default (PD) model, enabling early detection of model drift or degradation.

- Deployment of ML to enhance the efficiency and effectiveness of Financial Crime Prevention, including anomaly detection, transaction monitoring, and prioritization of investigative efforts.

Please contact Erik Vijlbrief or Siska van Hees for more information.

Citations

1. See “Surviving Prepayments: A Comparative Look at Prepayment Modeling Techniques” (Zanders) for a comparison between both types of models.

With new EU rules on instant payments taking effect in October 2025, corporates must navigate the practical challenge of applying payee verification to file-based payment processes.

The EU instant payments regulation comes into force on 5 October 2025. Importantly from a corporate perspective, it includes a verification of payee (VoP) requirement that obliges the originating banking partner (Payment Service Provider) to validate one of the following data options with the beneficiary bank:

- IBAN and Beneficiary Name

- IBAN and Identification (for example LEI or VAT number)

This beneficiary verification must be carried out as part of the payment initiation process and be completed before the payment can be reviewed and authorized by the corporate and finally processed by the originating banking partner. Whilst beneficiary verification works well in the instant payments world, where only individual payment transactions are processed, a material challenge arises where a corporate operates a bulk/batch (file-based) payment model.

Many large corporates will pay vendor invoices (commercial payments) on a weekly or fortnightly basis and salaries on a monthly basis. Their ERP (Enterprise Resource Planning) system will complete a payment run and generate a file of pre-approved payment transactions, which is sent via a secure connection to their banking partners. Typically, this automated file-based transmission contains pre-authorized transactions, as contractually agreed with the banking partners. The banking partners will complete file syntax validation and a more detailed payment validation before processing the payment through the relevant clearing system.

If we focus on euro-denominated electronic payments, these must be fully compliant with the new EU regulation from 5 October. This means the banking partners will need to verify the beneficiary information before the payment process can continue. The banking partner will therefore send an individual VoP check for each payment instruction contained within the payment file (batch of euro transactions), and the beneficiary bank will return one of the following verification statuses:

- Match

- Close Match

- No Match

- Not applicable

The EU regulation then requires the banking partner to make the status available to the corporate, so that the corporate can review the transactions and approve or reject them based on the status returned. This means there may be a ‘pause’ in euro payment processing before the originating partner bank can proceed with the transactions. This ‘pause’ may, however, be waived by contractual agreement, which is referred to as an ‘Opt-Out’. The opt-out is only available to corporates and is covered in more detail below.

Key Considerations for the Corporate Community:

1. Notification of VoP Status: This new EU regulation will include the following status codes, which will apply at a group and individual transaction level.

Group Status

- RCVC: Received Verification Completed

- RVCM: Received Verification Completed With Mismatches

Transaction Status

- RCVC: Received Verification Completed

- RVNA: Received Verification Completed Not Applicable

- RVNM: Received Verification Completed No Match

- RVMC: Received Verification Completed Match Closely

These new status codes have been designed to be provided in the ISO 20022 XML payment status message (pain.002.001.XX). However, a key question the corporate community needs to ask its banking partners concerns the flexibility they can offer in communicating these VoP status codes. Does the corporate workflow already support the ISO XML payment status message and, if so, can these new status codes be supported? At this stage, corporate preference might be skewed towards using a bank portal to access these new status codes, so this is an important area for discussion.

2. Time to complete the VoP Check: The regulation includes a 5-second rule for a VoP request on a single payment transaction, which covers the following activities:

- the originating banking partner identifies the beneficiary bank based on the IBAN received,

- the originating banking partner sends the request to the beneficiary bank,

- the beneficiary bank checks whether the information received is associated with the IBAN received,

- the beneficiary bank returns the result,

- the originating bank returns the result to the initiator.

If we now consider the timing for a file of payments, the overall calculation becomes more challenging, as originating banks are expected to execute multiple VoP requests in parallel. The EU regulation requires the originating banking partner to carry out the VoP check as soon as an individual transaction is ‘unpacked’ from the associated batch of transactions. A very rough estimate at this stage is that a file containing 100,000 transactions will take around 4 minutes to complete the full VoP process. The important point is that the corporate community will need to test the timings based on their specific euro payment logic to determine whether existing file processing times need to be adjusted to respect agreed cut-off times and reduce the risk of late payments.
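A back-of-the-envelope calculation shows what the rough estimate implies. The sketch below treats the 5 seconds as the time each check takes and assumes checks run in equal-sized parallel waves. Both are simplifying assumptions, and the parallelism figure of 2,100 is hypothetical, chosen only because it reproduces the 4-minute estimate.

```python
def vop_duration_seconds(n_transactions, seconds_per_check, parallel_checks):
    """Rough elapsed time if checks run in equal-sized parallel waves."""
    waves = -(-n_transactions // parallel_checks)  # ceiling division
    return waves * seconds_per_check

n_transactions = 100_000
seconds_per_check = 5        # assumption: every check uses the full 5 seconds
target_seconds = 4 * 60      # the rough 4-minute estimate

# Throughput and concurrency implied by the estimate
checks_per_second = n_transactions / target_seconds
implied_parallelism = checks_per_second * seconds_per_check

# Elapsed time for a hypothetical bank running 2,100 checks at a time
duration = vop_duration_seconds(n_transactions, seconds_per_check, 2_100)

print(round(checks_per_second, 1), round(implied_parallelism), duration)
```

Under these assumptions, a corporate could rerun the same arithmetic with its own file sizes to judge how much earlier a payment file might need to be submitted to stay within an agreed cut-off time.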

3. Corporate Action on VoP Status: This will be another area for discussion between the corporate and its banking partners, but the current options include:

- Authorize Regardless: Pre-authorize all payments, including those with mismatches.

- Review and Authorize: Review mismatches and provide explicit authorization for processing.

- Reject: Reject mismatched payments or the entire batch.

The corporate discussion should also cover how the review and approval can be undertaken. Given that the October deadline is fast approaching, the current expectation is that banking portals will be used to support this function. This needs to be discussed, including whether exceptions can be rejected individually or whether the whole file of transactions will need to be rejected. Whilst the corporate position is very clear that an exceptions-only approach is required, it is still unclear whether banks can support this flexibility.
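To make the options tangible, the sketch below applies each of them to a small batch of returned transaction statuses. It is purely illustrative: the policy names, the payment identifiers, the rule that a batch with any exception receives the group status RVCM, and the choice to treat ‘No Match’ (RVNM) and ‘Match Closely’ (RVMC) as the exceptions are all assumptions, not requirements taken from the regulation or from any bank's implementation.

```python
# Assumption: these two transaction statuses count as exceptions (mismatches)
MISMATCH_CODES = {"RVNM", "RVMC"}

def group_status(statuses):
    """Assumed derivation of the group status from the transaction statuses."""
    return "RVCM" if any(s in MISMATCH_CODES for s in statuses) else "RCVC"

def triage(batch, policy):
    """Split a batch (payment id -> status code) into release, review and reject."""
    exceptions = [pid for pid, code in batch.items() if code in MISMATCH_CODES]
    clean = [pid for pid in batch if pid not in exceptions]
    if policy == "authorize_regardless":
        return {"release": list(batch), "review": [], "reject": []}
    if policy == "review_and_authorize":
        return {"release": clean, "review": exceptions, "reject": []}
    if policy == "reject_mismatches":
        return {"release": clean, "review": [], "reject": exceptions}
    if policy == "reject_batch":
        if exceptions:  # a single exception rejects the whole file
            return {"release": [], "review": [], "reject": list(batch)}
        return {"release": list(batch), "review": [], "reject": []}
    raise ValueError(f"unknown policy: {policy}")

# Hypothetical statuses returned for a file of four euro payments
batch = {"P1": "RCVC", "P2": "RVMC", "P3": "RVNM", "P4": "RVNA"}

print(group_status(batch.values()))
for policy in ("authorize_regardless", "review_and_authorize",
               "reject_mismatches", "reject_batch"):
    print(policy, triage(batch, policy))
```

The difference between the last two policies is the exceptions-only question raised above: rejecting two payments versus rejecting all four.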

Understanding the ‘Opt-Out’ Option:

Whilst the EU regulation does include an ‘opt-out’ option, meaning a VoP check will not be performed by the originating banking partners on euro transactions, this option currently only applies to bulk/batch payments and not to a file containing a single payment transaction. Whilst using a bank portal to initiate individual transactions has been suggested as a possible workaround, there is increasing corporate resistance to using bank portals given the automated, secure file-based processing that is now in place across many corporates.

The opt-out option needs to be contractually agreed with the relevant partner banks, so the corporate community will need to discuss this point in addition to understanding the options available for files containing single transactions, which will still be subject to the VoP verification requirement.

In conclusion

Whilst the industry continues to discuss the file-based VoP model, the CGI-MP (Common Global Implementation Market Practice) group, which is an industry collaboration, is now recommending that corporates opt out at this stage due to the various workflow challenges that currently exist. However, corporate and partner bank discussions will still be required around files containing single payment transactions.

There is no doubt VoP provides benefits in terms of mitigating the risk of fraudulent and misdirected payments, but the current EU design introduces material logistical challenges that require further broader discussion at an industry level.

We investigate different model options for prepayments, including survival analysis.

In brief

- Prepayment modeling can help institutions successfully prepare for and navigate a rise in prepayments due to changes in the financial landscape.

- Two important prepayment modeling types are highlighted and compared: logistic regression vs Cox Proportional Hazard.

- Although the Cox Proportional Hazard model is theoretically preferred under specific conditions, logistic regression is preferred in practice under many scenarios.

The borrowers' option to prepay on their loan induces uncertainty for lenders. How can lenders protect themselves against this uncertainty? Various prepayment modeling approaches can be selected, with option risk and survival analyses being the main alternatives under discussion.

Prepayment options in financial products spell danger for institutions. They inject uncertainty into mortgage portfolios and threaten fixed-rate products with volatile cashflows. To safeguard against losses and stabilize income, institutions must master precise prepayment modeling.

This article delves into the nuances and options regarding the modeling of mortgage prepayments (a cornerstone of Asset Liability Management (ALM)) with a specific focus on survival models.

Understanding the influences on prepayment dynamics

Prepayments are triggered by a range of factors – everything from refinancing opportunities to life changes, such as selling a house due to divorce or moving. These motivations can be grouped into three overarching categories: refinancing factors, macroeconomic factors, and loan-specific factors.

- Refinancing factors. This encompasses key financial drivers (such as interest rates, mortgage rates and penalties) and loan-specific information (including interest rate reset dates and the interest rate differential for the customer). Additionally, the historical momentum of rates and the steepness of the yield curve play crucial roles in shaping refinancing motivations.

- Macroeconomic factors. The overall state of the economy and the conditions of the housing market strongly influence a borrower's inclination to exercise prepayment options. Furthermore, seasonality adds another layer of variability, with prepayments being notably higher in certain months, for example in December, when clients have additional funds due to year-end bonuses and holiday budgets.

- Loan-specific factors. The age of the mortgage, type of mortgage, and the nature of the property all contribute to prepayment behavior. The seasoning effect, where the probability of prepayment increases with the age of the mortgage, stands out as a paramount factor.

These factors intricately weave together, shaping the landscape in which customers make decisions regarding prepayments. Prepayment modeling plays a vital role in helping institutions to predict the impact of these factors on prepayment behavior.

The evolution of prepayment modeling

Research on prepayment modeling originated in the 1980s and initially centered around option-theoretic models that assume rational customer behavior. Over time, empirical models that cater for customer irrationality have emerged and gained prominence. These models aim to capture the more nuanced behavior of customers by explaining the relationship between prepayment rates and various other factors. In this article, we highlight two important types of prepayment models: logistic regression and Cox Proportional Hazard (Survival Model).

Logistic regression

Logistic regression, specifically its logit or probit variant, is widely employed in prepayment analysis. This is largely because it caters for the binary nature of the dependent variable, which indicates whether a prepayment event occurs, and because it is flexible: the model can incorporate mortgage-specific and overall economic factors as regressors and can handle time-varying factors and a mix of continuous and categorical variables.

Once the logistic regression model is fitted to historical data, its application involves inputting the characteristics of a new mortgage and relevant economic factors. The model’s output provides the probability of the mortgage undergoing prepayment. This approach is already prevalent in banking practices, and frequently employed in areas such as default modeling and credit scoring. Consequently, it’s favored by many practitioners for prepayment modeling.
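For the logit variant, the fitted model can be written as follows. The notation is introduced here for illustration and is not taken from the article: $x_{i,t}$ is the vector of mortgage-specific and economic factors for mortgage $i$ in period $t$, $y_{i,t}$ equals 1 if the mortgage is prepaid in that period, and $\beta_0$ and $\beta$ are the estimated coefficients.

$$
P\left(y_{i,t} = 1 \mid x_{i,t}\right) = \frac{1}{1 + \exp\left(-\left(\beta_0 + \beta^\top x_{i,t}\right)\right)}
$$

Applying the model to a new mortgage then amounts to inserting its characteristics into $x_{i,t}$ and evaluating the right-hand side, which always yields a value between 0 and 1.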

Despite its widespread use, the model has drawbacks. While its familiarity in banking scenarios offers simplicity in implementation, it lacks the interpretability characteristic of the Proportional Hazard model discussed below. Furthermore, in terms of robustness, a minor drawback is that any month-on-month change in results can be caused by numerous factors, which all affect each other.

Cox Proportional Hazard (Survival model)

The Cox Proportional Hazard (PH) model, developed by Sir David Cox in 1972, is one of the most popular models in survival analysis. It consists of two core parts:

- Survival time. With the Cox PH model, the variable of interest is the time to event. As the model stems from medical sciences, this event is typically defined as death. The time variable is referred to as survival time because it’s the time a subject has survived over some follow-up period.

- Hazard rate. This characterizes the distribution of the survival time and is used to predict the probability of the event occurring in the next small time interval, given that the event has not occurred beforehand. This hazard rate is modelled based on the baseline hazard (the time development of the hazard rate of an average patient) and a multiplier (the effect of patient-specific variables, such as age and gender). An important property of the model is that the baseline hazard is an unspecified function.

To explain how this works in the context of prepayment modeling for mortgages:

- The event of interest is the prepayment of a mortgage.

- The hazard rate is the probability of a prepayment occurring in the next month, given that the mortgage has not been prepaid beforehand. Since the model estimates hazard rates of individual mortgages, it’s modelled using loan-level data.

- The baseline hazard is the typical prepayment behavior of a mortgage over time and captures the seasoning effect of the mortgage.

- The multiplier of the hazard rate is based on mortgage-specific variables, such as the interest rate differential and seasonality.
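In formula form, and using notation that is assumed here for illustration, the hazard rate of mortgage $i$ at mortgage age $t$ combines the two components as

$$
h_i(t) = h_0(t)\,\exp\left(\beta^\top x_i(t)\right)
$$

where $h_0(t)$ is the baseline hazard, $x_i(t)$ contains the mortgage-specific variables and $\exp(\beta^\top x_i(t))$ is the multiplier. A one-unit increase in variable $k$ multiplies the hazard by $\exp(\beta_k)$, irrespective of the shape of $h_0(t)$.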

For full prepayments, where the mortgage is terminated after the event, the Cox PH model applies in its primary format. However, partial prepayments (where the event is recurring) require an extended version, known as the recurrent event PH model. As a result, when using the Cox PH model, the modeling of partial and full prepayments should be conducted separately, using slightly different models.

The attractiveness of the Cox PH model is due to several features:

- The interpretability of the model. The model makes it possible to quantify the influence of various factors on the likelihood of prepayment in an intuitive way.

- The flexibility of the model. The model offers the flexibility to handle time-varying factors and a mix of continuous and categorical variables, as well as the ability to incorporate recurrent events.

- The non-negativity of the hazard rate. Because the mortgage-specific variables enter the multiplier through an exponential function, the estimated hazard rates cannot be negative.

Despite the advantages listed above presenting a compelling theoretical case for using the Cox PH model, it faces limited adoption in practical prepayment modeling by banks. This is primarily due to its perceived complexity and unfamiliarity. In addition, when loan-level data is unavailable, the Cox PH model is no longer an option for prepayment modeling.

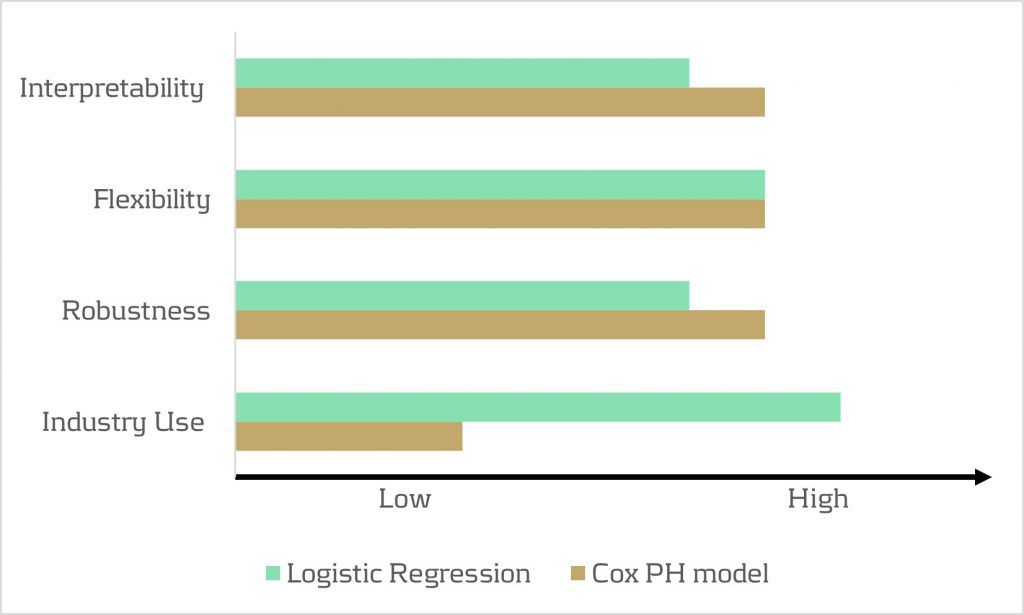

Logistic regression vs Cox Proportional Hazard

In scenarios with individual survival time data and censored observations, the Cox PH model is theoretically preferred over logistic regression. This preference arises because the Cox PH model leverages this additional information, whereas logistic regression focuses solely on binary outcomes, disregarding survival time and censoring.

However, practical considerations also come into play. Research shows that in certain cases, the logistic regression model closely approximates the results of the Cox PH model, particularly when hazard rates are low. Given that prepayment rates in the Netherlands are around 3-10% and the associated hazard rates tend to be low, the performance gap between logistic regression and the Cox PH model is minimal in practice for this application. In addition, the need to create separate PH models for full and partial prepayments places an additional burden on ALM teams.
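A small numerical sketch illustrates why the two models nearly coincide at low hazard rates. It compares how a monthly prepayment probability changes when a risk factor doubles the hazard (the proportional hazards view) with how it changes when the same factor doubles the odds (the logit view). The factor of 2 is arbitrary, and reading the 3-10% as annual prepayment rates is an assumption made here to derive monthly figures.

```python
def monthly_rate(annual_rate):
    """Constant monthly rate that compounds to the given annual rate."""
    return 1 - (1 - annual_rate) ** (1 / 12)

def scaled_by_hazard_ratio(p, ratio):
    """Event probability when the hazard is multiplied by `ratio` (PH view)."""
    return 1 - (1 - p) ** ratio

def scaled_by_odds_ratio(p, ratio):
    """Event probability when the odds are multiplied by `ratio` (logit view)."""
    odds = ratio * p / (1 - p)
    return odds / (1 + odds)

def gap(p, ratio=2):
    """Absolute difference between the two views for a baseline probability p."""
    return abs(scaled_by_hazard_ratio(p, ratio) - scaled_by_odds_ratio(p, ratio))

for annual in (0.03, 0.10):
    p = monthly_rate(annual)
    print(f"annual {annual:.0%}: monthly {p:.5f}, gap {gap(p):.6f}")

low_gap = gap(monthly_rate(0.10))  # low-hazard case
high_gap = gap(0.30)               # a high event probability, for contrast
print(f"gap at p = 0.30: {high_gap:.4f}")
```

At monthly probabilities below one percent the two views differ only in the fifth decimal place, whereas at a 30% event probability they differ by several percentage points, which is consistent with the small performance gap described above.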

In conclusion, when faced with the absence of loan-level data, the logistic regression model emerges as a pragmatic choice for prepayment modeling. Despite the theoretical preference for the Cox PH model under specific conditions, the real-world performance similarities, coupled with the familiarity and simplicity of logistic regression, provide a practical advantage in many scenarios.

How can Zanders support?

Zanders is a thought leader on IRRBB-related topics. We enable banks to achieve both regulatory compliance and strategic risk goals by offering support from strategy to implementation. This includes risk identification, formulating a risk strategy, setting up an IRRBB governance and framework, and policy or risk appetite statements. Moreover, we have an extensive track record in IRRBB and behavioral models such as prepayment models, hedging strategies, and calculating risk metrics, both from model development and model validation perspectives.

Are you interested in IRRBB-related topics such as prepayments? Contact Jaap Karelse, Erik Vijlbrief, Petra van Meel (Netherlands, Belgium and Nordic countries) or Martijn Wycisk (DACH region) for more information.