Implications of CRR3 for the 2025 EU-wide stress test

An overview of how the new CRR3 regulation impacts banks’ capital requirements for credit risk and its implications for the 2025 EU-wide stress test, based on EBA’s findings.

With the introduction of the updated Capital Requirements Regulation (CRR3), which entered into force on 9 July 2024, the European Union's financial landscape is poised for significant change. The 2025 EU-wide stress test will be a major assessment of banks' resilience under these new rules. This article summarizes the estimated impact of CRR3 on banks’ capital requirements for credit risk, based on the results of a monitoring exercise carried out by the EBA in 2022. It then discusses the potential impact of CRR3 on the upcoming stress test, specifically from a credit risk perspective, and the implications for the banking sector.

The CRR3 regulation, which transposes the final Basel III reforms (also known as Basel IV) into European law, introduces substantial updates to the existing framework [1], including higher capital requirements, enhanced risk assessment procedures and stricter reporting standards. For credit risk, the most significant changes are:

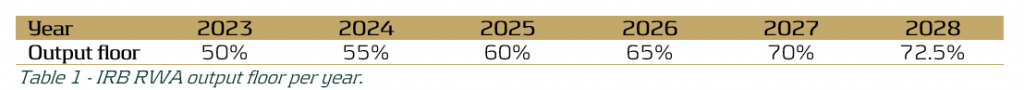

- The phased-in output floor on internally modelled capital requirements, which limits the benefit of internal models so that, by 2028, risk-weighted assets (RWA) cannot fall below 72.5% of the RWA calculated under the Standardised Approach (SA) (see Table 1). The floor is applied at the consolidated level, i.e. to the combined RWA for credit, market and operational risk.

- A revised SA to enhance robustness and risk sensitivity, via more granular risk weights and the introduction of new asset classes.¹

- Limits on the use of the Advanced Internal Ratings Based (A-IRB) approach for specific asset classes, alongside the introduction of new asset classes.²
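As a minimal sketch of how the output floor bites, assuming total RWA figures (credit, market and operational risk combined) are already available at the consolidated level: floored RWA is simply the larger of the modelled RWA and the floor factor times the SA RWA. The phase-in percentages below follow the BCBS calendar (50% in 2023 rising to 72.5% in 2028) and are an assumption chosen to match the 2028 end date above; `FLOOR_SCHEDULE`, `floor_factor` and `floored_rwa` are hypothetical names.

```python
# Output-floor factor as a fraction of standardised-approach RWA.
# Assumption: BCBS phase-in calendar (50% in 2023 rising to 72.5% in 2028).
FLOOR_SCHEDULE = {2023: 0.50, 2024: 0.55, 2025: 0.60, 2026: 0.65, 2027: 0.70, 2028: 0.725}


def floor_factor(year: int) -> float:
    """Return the output-floor factor for a year (fully phased in after 2028)."""
    first, last = min(FLOOR_SCHEDULE), max(FLOOR_SCHEDULE)
    if year < first:
        raise ValueError(f"no output-floor factor defined before {first} in this sketch")
    return FLOOR_SCHEDULE[min(year, last)]


def floored_rwa(rwa_internal: float, rwa_sa: float, year: int) -> float:
    """Total RWA after the floor: modelled RWA, but never below factor x SA RWA.

    rwa_internal: total RWA using internal models where permitted (SA elsewhere).
    rwa_sa: total RWA recalculated entirely under the standardised approaches.
    """
    return max(rwa_internal, floor_factor(year) * rwa_sa)


# Hypothetical bank whose modelled RWA equal 60% of its SA RWA.
for y in range(2023, 2029):
    print(y, round(floored_rwa(60.0, 100.0, y), 2))
```

For this hypothetical bank the floor only starts to lift total RWA above the modelled figure from 2026, which mirrors the back-loaded impact discussed below.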

Following the application of CRR3 from 1 January 2025, 68 banks from the EU and Norway, including 54 from the euro area, will participate in the 2025 EU-wide stress test, covering 75% of the EU banking sector [2]. In preparation for this exercise, the EBA recently published its consultative draft of the 2025 EU-wide Stress Test Methodological Note [3], which reflects the regulatory landscape shaped by CRR3. This forward-looking exercise will test the resilience of EU banks to adverse economic conditions within the adjusted regulatory framework, providing essential input for the 2025 Supervisory Review and Evaluation Process (SREP).

The consequences of the updated regulatory framework are an important topic for banks. The changes in the final framework aim to restore credibility in the calculation of RWA and improve the comparability of banks' capital ratios by aligning definitions and taxonomies between the SA and IRB approaches. To quantify the impact of the new rules on capital requirements, and to assess whether this aim is achieved, the EBA carried out a monitoring exercise in 2022 and published the results (see the report in [4]).

For this monitoring exercise the EBA used a sample of 157 banks: 58 Group 1 banks (large, internationally active banks), of which 8 are Global Systemically Important Institutions (G-SIIs), and 99 Group 2 banks. Group 1 banks are defined as banks that have Tier 1 capital in excess of EUR 3 billion and are internationally active; all other banks are classified as Group 2. The report presents the results per group and per risk type.

Looking specifically at the impact of the revised SA and the limits on the use of IRB on credit risk capital requirements, the EBA found a median increase in current Tier 1 Minimum Required Capital³ (hereafter “MRC”) of approximately 3.2% across all portfolios, i.e. SA and IRB portfolios combined. The median impact on current Tier 1 MRC is approximately 2.1% for SA portfolios and 0.5% for IRB portfolios (see [4], page 31). This impact is mainly attributable to the introduction of new (sub-)asset classes with, on average, higher risk weights. The largest increases are expected for ‘equities’, ‘equity investment in funds’ and ‘subordinated debt and capital instruments other than equity’. Under adverse scenarios, the effect of more granular risk weights may be magnified, as a larger share of exposures carries lower credit ratings and therefore higher risk weights, resulting in an additional increase in RWA.

The revised SA produces more risk-sensitive projections of capital requirements over the forecast horizon, owing to the more granular risk weights and newly introduced asset classes. This in turn allows banks to identify their risk profile more clearly and gives the EBA a better overview of how the banking sector as a whole performs under adverse economic conditions. The EBA also estimated the impact on RWA of the gradual increase of the output floor shown in Table 1. As Table 2 shows, the rising output floor increasingly affects the MRC throughout the phase-in period (2023-2028).

Table 2 shows that the impact is minimal in the first three years of the phase-in period but grows significantly in the last three years, with an average estimated increase in Tier 1 MRC of 7.5% for G-SIIs in 2028. The larger increase for Group 1 banks, and G-SIIs in particular, compared with Group 2 banks may be explained by the fact that larger banks more often use an IRB approach and are therefore more heavily affected by the rising output floor than their smaller counterparts. The expected impact on Group 1 banks is especially relevant for the EU-wide stress test, since only 68 of the largest banks in Europe participate in it. If banks must apply the phased-in output floor in their projections for the 2025 EU-wide stress test, this could lead to significant increases in capital requirements in the final years of the RWA projection horizon. These increases would then not be fully attributable to the adverse effects of the prescribed macroeconomic scenarios.
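To see how part of a projected RWA increase can stem from the floor phase-in rather than from the scenario, one can recompute each projection year twice: once with the floor frozen at its starting-point factor and once with that year's phased-in factor. The sketch below does this under stated assumptions: the projections and floor factors are made up, the split is a simple sequential one (scenario first, then floor), and `floored` and `split_rwa_change` are hypothetical names rather than anything prescribed by the EBA methodology.

```python
from typing import Dict, List, Tuple


def floored(rwa_internal: float, rwa_sa: float, factor: float) -> float:
    """Total RWA after applying an output floor of `factor` x SA RWA."""
    return max(rwa_internal, factor * rwa_sa)


def split_rwa_change(
    projection: Dict[int, Tuple[float, float]],
    floor_factors: Dict[int, float],
    start_year: int,
) -> List[Tuple[int, float, float]]:
    """Split the change in floored RWA versus the starting point into two parts.

    Returns (year, scenario_part, floor_phase_in_part) for each projection year:
    - scenario_part: change with the floor frozen at its start-year factor;
    - floor_phase_in_part: extra RWA from moving to that year's phased-in factor.
    """
    base_factor = floor_factors[start_year]
    base = floored(*projection[start_year], base_factor)
    result = []
    for year in sorted(projection):
        if year == start_year:
            continue
        internal, sa = projection[year]
        static = floored(internal, sa, base_factor)           # floor frozen
        phased = floored(internal, sa, floor_factors[year])   # floor phased in
        result.append((year, static - base, phased - static))
    return result


# Hypothetical adverse-scenario projection: (modelled RWA, SA RWA) per year, EUR bn.
projection = {2024: (60.0, 100.0), 2025: (63.0, 104.0), 2026: (65.0, 107.0), 2027: (66.0, 109.0)}
# Illustrative floor factors only; substitute the applicable phase-in schedule.
factors = {2024: 0.50, 2025: 0.55, 2026: 0.60, 2027: 0.65}

for year, scenario_part, floor_part in split_rwa_change(projection, factors, 2024):
    print(year, round(scenario_part, 2), round(floor_part, 2))
```

In this made-up example the floor adds nothing in the first two projection years but roughly 4.85bn in the final year, on top of a 6bn scenario-driven increase.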

Note, however, that the Basel III reforms introduced, and CRR3 adopted, a transitional cap that limits the increase in total RWA resulting from the output floor during its phase-in period. The cap is set at 25% of a bank’s RWA before application of the floor and may be applied at the discretion of national supervisors (see [5]). This may limit the observed increase in RWA during the 2025 EU-wide stress test.

In conclusion, the implementation of CRR3 and its adoption into the 2025 EU-wide stress test methodology may have a significant impact on the stress test results, mainly due to the gradual increase of the output floor but also because of changes to the SA and IRB approaches. This effect may, however, be partly mitigated by the transitional 25% cap on the RWA increase resulting from the output floor. In addition, the 2025 EU-wide stress test will provide a comprehensive view of how CRR3, including the closer alignment between the SA and IRB approaches, affects the development of capital requirements in the banking sector under adverse conditions.

References:

- [1] final_report_on_amendments_to_the_its_on_supervisory_reporting-crr3_crd6.pdf (europa.eu)

- [2] The EBA starts dialogue with the banking industry on 2025 EU-Wide stress test methodology | European Banking Authority (europa.eu)

- [3] 2025 EU-wide stress test - Methodological Note.pdf (europa.eu)

- [4] Basel III monitoring report as of December 2022.pdf (europa.eu)

- [5] Basel III: Finalising post-crisis reforms (bis.org)

¹ This includes the addition of the ‘Subordinated debt exposures’ asset class, as well as an additional branch of specialized lending exposures within the corporates asset class. Furthermore, a more detailed breakdown has been introduced for exposures secured by mortgages on immovable property and for acquisition, development and construction financing.

² For detailed information on the added asset classes and the limited application of IRB, refer to paragraph 25 of the report in [1].

³ Tier 1 capital is a bank's core capital: high-quality capital that can absorb losses, predominantly in the form of shares and retained earnings. The Tier 1 MRC is the minimum capital needed to satisfy the regulatory Tier 1 capital ratio (the ratio of a bank's Tier 1 capital to its total RWA) set by the Basel framework, and is an important metric the EBA uses to measure a bank’s health.

Challenges to Treasury’s role in commodity risk management

Defining the challenges that prevent increased Treasury participation in the commodity risk management strategy and operations and proposing a framework to address these challenges.

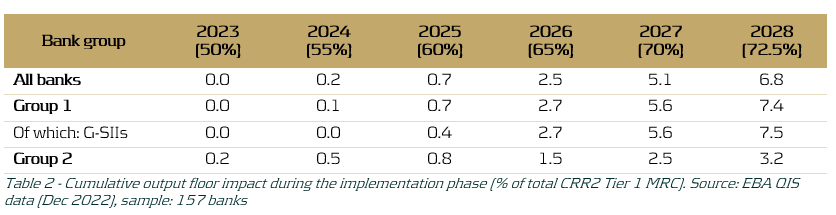

The heightened fluctuations in the commodity and energy markets in 2021 and 2022 brought Treasury's role in managing these risks into sharper focus. Although commodity prices stabilized in 2023 and have remained steady in 2024, ongoing economic uncertainty and the geopolitical landscape ensure that these risks continue to command attention. Building a commodity risk framework that is in line with the organization’s objectives and its specific exposure to different commodity risks is a key Treasury function, but it must align with an overarching, holistic risk management approach.

Figure 1: Commodity index prices (Source: IMF Primary Commodity Price Research)

Traditionally, when treasury has been involved in commodity risk management, the focus has been on executing commodity derivative hedges. However, this has rarely translated into a commodity risk management framework that is fully integrated into a corporate's treasury operations and strategy, particularly in comparison with the common frameworks applied to FX and interest rate risk.

On the surface, it seems curious that corporates would have strict guidelines for hedging FX transaction risk while applying less stringent guidelines to material commodity positions, especially given the common expectation that a company's risk-bearing capacity and risk appetite should not differ by exposure type.

In reality, however, commodity risk management for corporates is far more diverse than the management of other market risks: the business case, ownership of tasks and hedging strategy all bring new challenges to the treasury environment. To overcome these challenges, we first need to understand them better.

Risk management framework

To first identify all the challenges, we need to analyze a typical market risk management framework, encompassing the identification, monitoring and mitigation aspects, in order to find the complexities specifically related to commodity risk management.

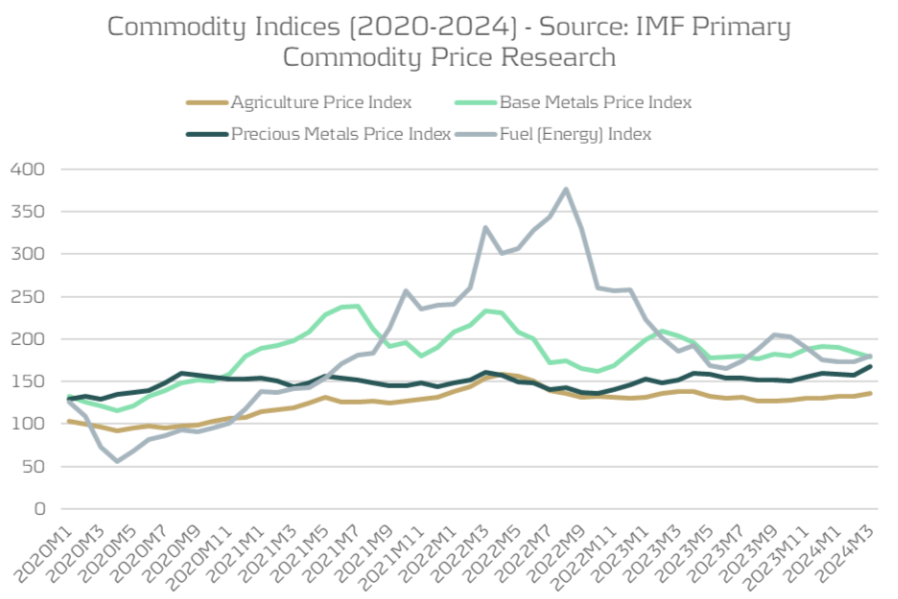

Figure 2: Zanders’ Commodity Risk Management Framework.

In the typical framework that Zanders advocates, the first step is always to gain understanding through:

- Identification: Establish the commodity exposure profile by identifying all possible sources of commodity exposure, classifying their likelihood and impact on the organization, and then prioritizing them accordingly.

- Measurement: Risk quantification and measurement refers to the quantitative analysis of the exposure profile: assessing the probability of market events and quantifying the potential impact of commodity price movements on financial parameters, using common techniques such as sensitivity analysis, scenario analysis or simulation analysis (e.g. cash flow at risk or value at risk).

- Strategy & Policy: With a clear understanding of the existing risk profile, the objectives of the risk management framework can be defined, giving special consideration to the specific goals of procurement teams when formulating the strategy and policy. The hedging strategy can then be developed in alignment with the established financial risk management objectives.

- Process & Execution: This phase directly follows the development of the hedging strategy, defining the toolbox for hedging and clearly allocating roles and responsibilities.

- Monitoring & Reporting: All activities should be supported by consistent monitoring and reporting, exception handling capabilities, and risk assessments shared across departments.
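To make the Measurement step more concrete, the sketch below quantifies a hypothetical annual commodity purchase in two of the ways listed above: a simple sensitivity (cost impact of a 10% price move) and a Monte Carlo cash flow at risk (CFaR). All inputs are made up, `price_sensitivity` and `cash_flow_at_risk` are hypothetical names, and the driftless lognormal price model is a deliberate simplification for illustration, not a recommended methodology.

```python
import numpy as np


def price_sensitivity(volume: float, price: float, shock: float) -> float:
    """Change in purchase cost for a relative price shock (0.10 = +10%)."""
    return volume * price * shock


def cash_flow_at_risk(volume: float, price: float, annual_vol: float,
                      horizon_years: float, confidence: float = 0.95,
                      n_sims: int = 100_000, seed: int = 42) -> float:
    """Monte Carlo CFaR for a commodity purchase exposure.

    Returns the increase in purchase cost, relative to the expected cost, that is
    not exceeded with probability `confidence`. Assumes (for illustration only) a
    driftless lognormal price at the horizon.
    """
    rng = np.random.default_rng(seed)
    sigma = annual_vol * np.sqrt(horizon_years)
    z = rng.standard_normal(n_sims)
    simulated_price = price * np.exp(-0.5 * sigma**2 + sigma * z)  # mean equals `price`
    cost = volume * simulated_price
    return float(np.quantile(cost, confidence) - cost.mean())


# Hypothetical exposure: 10,000 t at 2,000 per t, 25% annual volatility, 1-year horizon.
print(price_sensitivity(10_000, 2_000, 0.10))
print(round(cash_flow_at_risk(10_000, 2_000, 0.25, 1.0)))
```

The sensitivity answers "what if prices move by X?", while the CFaR attaches a probability to the adverse outcome, which is what allows it to be compared against the risk appetite set in the Strategy & Policy step.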

Next, we discuss each of these areas in depth and consider how teams with different skillsets can be combined to give organizations a best-practice approach to commodity risk management.

Exposure identification & measurement is crucial

A recent Zanders survey and subsequent whitepaper revealed that the primary challenge most corporations face in risk management processes is data visibility and risk identification. Furthermore, identifying commodity risks is significantly more nuanced compared to understanding more straightforward risks such as counterparty or interest rate exposures.

While the same categorization of exposures into transaction and economic risk applies to commodities (see boxout), there are additional layers of categorization to consider, especially for transaction risk.

Transaction and economic risks both affect a company's cash flows. Transaction risk relates to future, known cash flows, while economic risk relates to future but unknown cash flows.

Direct exposures: Certain risks may be viewed as direct exposures, where the commodity serves as a necessary input within the manufacturing supply chain, making it crucial for operations. In this scenario, financial pricing is not the only consideration for hedging, but also securing the delivery of the commodity itself to avoid any disruption to operations. While the financial risk component of this scenario sits nicely within the treasury scope of expertise, the physical or delivery component requires the expertise of the procurement team. Cross-departmental cooperation is therefore vital.

Indirect exposures: These exposures may be more closely aligned to FX exposures, in that the risk is purely financial and no physical delivery aspects need to be considered. They commonly arise either explicitly, through indexation in the pricing conditions agreed with suppliers, or implicitly, through an understanding that the supplier may adjust a fixed price based on market conditions.


Indirect exposures may give treasury teams a little more operational independence in making hedging decisions, particularly with strong systems support for capturing and reporting on commodity indexation in contracts, and with analysis of how fixed-price contracts correlate with market movements. However, as with any market risk, it is important to maintain the relationship with procurement teams to ensure that the exposure identification and the assumptions used remain valid.
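One simple way to analyse how fixed-price contracts move with the market is to regress period-on-period changes in contract prices on changes in the relevant commodity index; the slope can be read as an implicit pass-through ratio, i.e. an estimate of the indirect exposure. The sketch below is a minimal illustration using ordinary least squares on hypothetical quarterly data; `pass_through` is a hypothetical name, and real data would also call for attention to lags, renewal dates and the small number of observations.

```python
import numpy as np


def pass_through(index_prices, contract_prices):
    """Estimate pass-through beta and correlation from period-on-period % changes.

    A beta of ~0.6 would suggest that a 10% index move tends to come with a ~6%
    adjustment in supplier prices, i.e. an indirect commodity exposure.
    """
    idx = np.asarray(index_prices, dtype=float)
    con = np.asarray(contract_prices, dtype=float)
    d_idx = np.diff(idx) / idx[:-1]   # relative changes in the commodity index
    d_con = np.diff(con) / con[:-1]   # relative changes in contract prices
    beta, _intercept = np.polyfit(d_idx, d_con, 1)   # OLS slope and intercept
    corr = np.corrcoef(d_idx, d_con)[0, 1]
    return float(beta), float(corr)


# Hypothetical quarterly data: supplier prices move by half of each index move.
index_returns = np.array([0.10, -0.05, 0.08, -0.02, 0.04])
index_prices = 100.0 * np.concatenate(([1.0], np.cumprod(1 + index_returns)))
contract_prices = 50.0 * np.concatenate(([1.0], np.cumprod(1 + 0.5 * index_returns)))
beta, corr = pass_through(index_prices, contract_prices)
print(f"pass-through beta = {beta:.2f}, correlation = {corr:.2f}")
```

A stable, material beta supports treating the contracts as an indirect exposure that treasury can hedge financially; a low or unstable correlation is a signal to revisit the assumptions with procurement.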

Only once an accurate understanding of the nature and characteristics of the underlying exposures has been achieved can the hedging objectives be defined, leading to the creation of the strategy and policy element in the framework.

Strategy & Policy

While the financial objectives of financial risk management, such as ‘predictability’ and ‘stability’, apply equally to commodity risk management, additional non-financial objectives may need to be considered for commodities, such as ensuring delivery of commodity materials.

In addition, as commodity risk is normally incurred as a core element of operational processes, the objective of the hedging policy may be more closely aligned with creating stability at a product or component level and incorporated into product costing processes. By contrast, for FX the impact on operations is usually a lower priority, and financial objectives at group or business unit level are the central focus.

The exposure identification for each commodity type may reveal vastly different characteristics, and consequently the strategic objectives of hedging may differ by commodity and even at component level. This will require unique knowledge in each area, further confirming that a partnership approach with procurement teams is needed to adequately create effective strategy and policy guidelines.

Process and Execution

Once a strategy is in place, the toolbox of hedging instruments available to the organization must be defined. For commodities, this is not limited to financial derivatives executed by treasury and offsetting natural hedges. Strategic initiatives to reduce the volume of commodity exposure through manufacturing processes, and negotiations with suppliers to fix commodity prices in contracts, are only a small sample of the additional tools that should be explored.

Both treasury and procurement expertise are required throughout the Process and Execution steps of commodity risk management. This makes it challenging to define roles and responsibilities that allocate resources to the tasks where treasury and procurement subject matter experts can best apply their knowledge.

As best practice, Treasury should be recognized as the financial market risk experts, ideally positioned to thoroughly comprehend the impact of commodity market movements on financial performance. The Treasury function should manage risk within a comprehensive, holistic risk framework through the execution of offsetting financial derivatives. Treasurers can use the same skillset and knowledge that they already use to manage FX and IR risks.

Procurement teams, on the other hand, will always have a better understanding of the true nature of commodity exposures, as well as of suppliers’ appetite and willingness to support an organization’s hedging objectives. Beyond their understanding of the exposure, procurement teams may also face the largest impact from commodity price movements. Importantly, sourcing and delivering the underlying commodities and ensuring sufficient raw material stock for business operations would also remain the responsibility of procurement, whereas treasury will always focus on price risk.

Clearly both stakeholders have a role to play, and neither has an obvious claim to be the sole owner of tasks, operating in isolation from the other. For simplicity, some corporates draw a clear line between procurement and treasury processes, often with procurement as the dominant driver. In this common workaround, Treasury is often used only to execute derivative hedges, leaving exposure identification, impact analysis and strategic decision-making with the procurement team. This separation limits treasury's potential to add value in its area of expertise, as well as its ability to innovate and create an improved end-to-end process. Operating in isolation also separates commodity risk from the broader, holistic risk framework that treasury may be trying to achieve for the organization.

One alternative to allocating tasks departmentally and distinctly would be to find a bridge between the stakeholders in the form of a specialized commodity and procurement risk team with treasury and procurement acting together in partnership. Through this specialized team, procurement objectives and exposure analysis may be combined with treasury risk management knowledge to ensure the most appropriate resources perform each task in-line with the objectives. This may not always be possible with the available resources, but variations of this blended approach are possible with less intrusive changes to the organizational structure.

Conclusion

With treasury trends pointing towards adopting a holistic view of risk management, together with a backdrop of global economic uncertainty and geopolitical instability, it may be time to face the challenges limiting Treasury’s role in commodity risk management and set up a framework that addresses these challenges. Treasury’s closer involvement should best utilize the talent in an organization, gain transparency to the exposures and risk profile in times of uncertainty and enable agile end-to-end decision-making with improved coordination between teams.

These advantages carry substantial potential value in fortifying commodity risk management practices to uphold operational stability across diverse commodity market conditions.

Component VaR: Automating VaR Attribution

Learn more about automating VaR attribution with Component VaR (CVaR), and how it can enhance efficiency and accuracy by replacing traditional, labor-intensive manual processes.

VaR has been one of the most widely used risk measures in banks for decades. However, because VaR is not additive, explaining the causes of changes in VaR has always been challenging. VaR attribution techniques seek to identify the individual contributions of specific positions, assets or risk factors to portfolio-level VaR. For larger portfolios with many risk factors, the process can be complicated, time-consuming and computationally expensive.

In this article, we discuss some of the challenges with VaR attribution and describe some of the common methods for successful and efficient attribution. We also share insights into our own approach to performing VaR attribution, alongside our experience with delivering successful attribution frameworks for our clients.

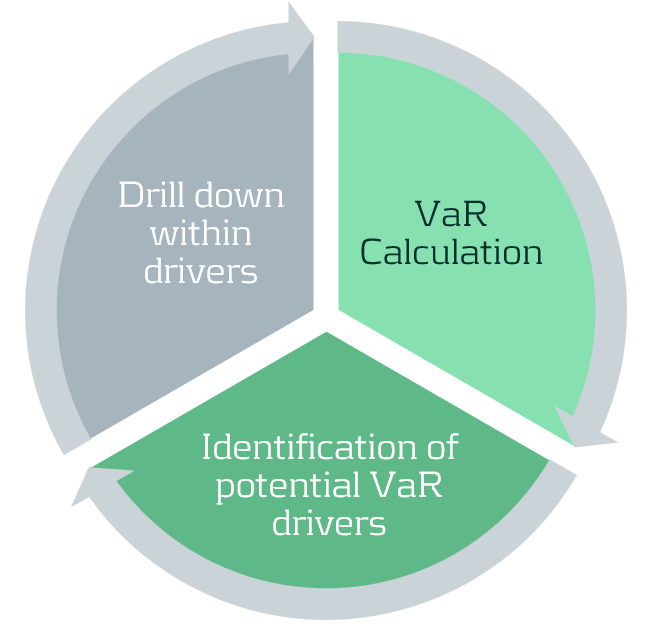

The challenge of VaR attribution

Providing attribution for changes in VaR due to day-on-day market moves and model changes is a common task for risk teams. The typical attribution approach is a manual investigation, drilling down into the data to isolate and identify VaR drivers. However, this can be a complex and time-consuming process, with thousands of potential factors that need to be analysed. This, compounded by the non-additive nature of VaR, can lead to attributions that may be incorrect or incomplete.

How is a manual explain performed?

The manual explain is a common approach to attributing changes in VaR, often carried out through inefficient ad-hoc analyses. It is an iterative approach in which VaR is recomputed at increasingly fine granularity until the VaR drivers can be isolated. This iterative drill-down is inefficient in both computation and time, often taking many iterations and hours of processing to isolate VaR drivers with sufficient granularity.

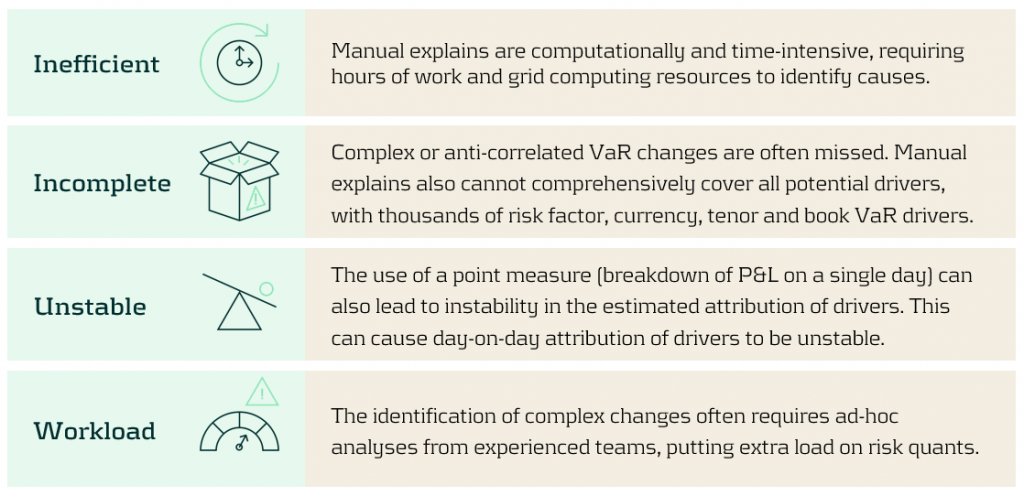

What problems are there with manual VaR explains?

There are several problems teams face when conducting manual VaR explains:

Our approach to performing VaR attribution

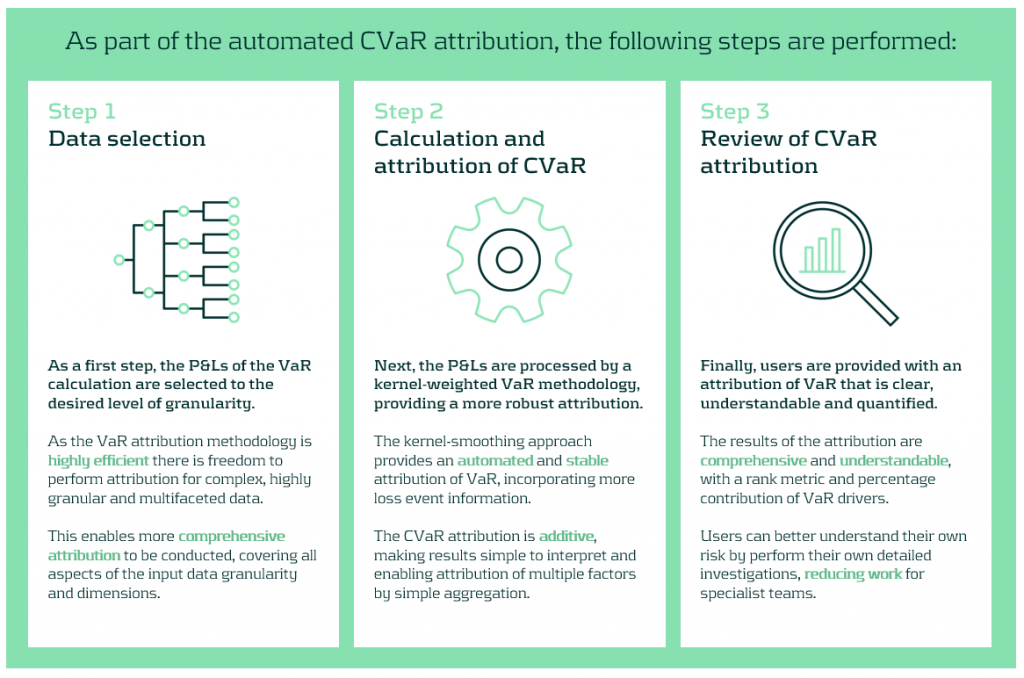

We propose an automated attribution approach to improve the efficiency and coverage of VaR explains. Our component VaR (CVaR) explain approach replaces the iterative and manual explain process with an automated process with three main steps.

First, risk P&Ls are selected at the desired level of granularity for attribution, which can cover a large number of dimensions at high granularity. Next, the data are analysed by a kernel-smoothed algorithm, which increases the stability of the results and automates the attribution of VaR. Finally, users receive a comprehensive set of attribution results, enabling them to investigate their risk and to identify and quantify their core VaR drivers.
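The kernel-smoothing step can be illustrated with a generic Euler-allocation sketch for historical-simulation VaR: each component's contribution is estimated as its kernel-weighted average loss in scenarios whose portfolio loss lies close to the VaR level, so the contributions add up exactly to a smoothed portfolio VaR. This is a textbook variant written for illustration, not necessarily the algorithm Zanders uses; the Gaussian kernel, the rule-of-thumb bandwidth, the desk names and the function name `component_var` are all assumptions.

```python
from typing import Optional, Tuple

import numpy as np


def component_var(pnl: np.ndarray, alpha: float = 0.99,
                  bandwidth: Optional[float] = None) -> Tuple[float, np.ndarray]:
    """Kernel-smoothed component VaR for historical-simulation risk P&L.

    pnl: array (n_scenarios, n_components) of risk P&L, e.g. per desk or risk factor.
    Returns (smoothed_var, contributions); contributions sum to smoothed_var.
    Losses are reported as positive numbers.
    """
    losses = -pnl                              # component losses per scenario
    total_loss = losses.sum(axis=1)            # portfolio loss per scenario
    var_hs = np.quantile(total_loss, alpha)    # plain historical-simulation VaR
    if bandwidth is None:
        # Silverman's rule of thumb (an assumption; tune in practice).
        bandwidth = 1.06 * total_loss.std(ddof=1) * len(total_loss) ** -0.2
    # Gaussian kernel weights: scenarios near the VaR level get the most weight.
    weights = np.exp(-0.5 * ((total_loss - var_hs) / bandwidth) ** 2)
    weights /= weights.sum()
    contributions = weights @ losses           # E[component loss | portfolio loss ~ VaR]
    return float(contributions.sum()), contributions


rng = np.random.default_rng(0)
pnl = rng.standard_normal((500, 3)) * np.array([1.0, 2.0, 0.5])  # hypothetical risk P&L
var, contrib = component_var(pnl)
for name, c in zip(["desk_A", "desk_B", "desk_C"], contrib):
    print(f"{name}: {c:.2f} ({c / var:.0%} of VaR)")
```

Smoothing over neighbouring scenarios, rather than reading contributions off the single VaR scenario, is what makes the attribution more stable from day to day; the bandwidth controls that trade-off between stability and locality.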

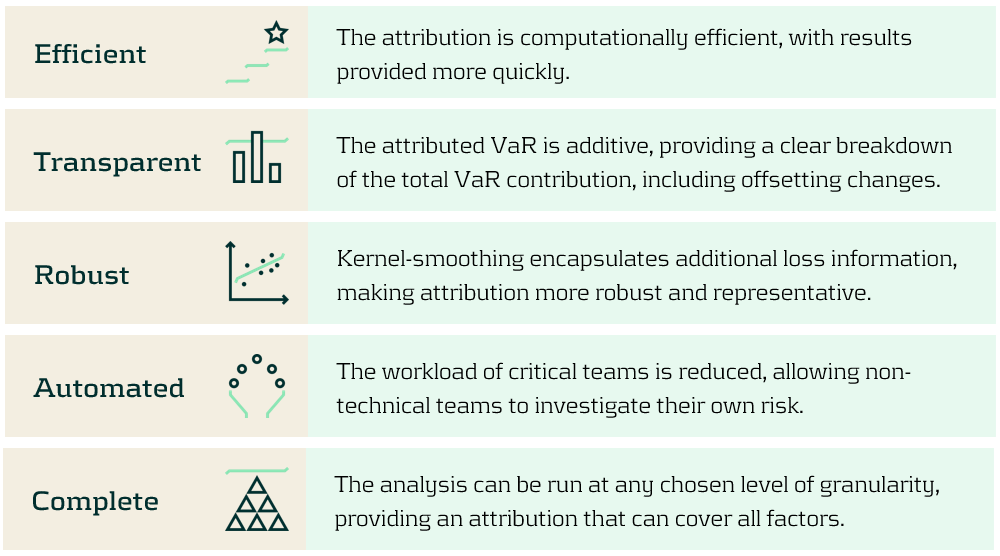

Benefits of the CVaR attribution methodology

CVaR attribution empowers users, enabling them to conduct their own VaR attribution analyses. This accelerates attribution for VaR and other percentile-based models, reducing the workload of specialised teams. The benefits our automated CVaR attribution methodology provides are presented below:

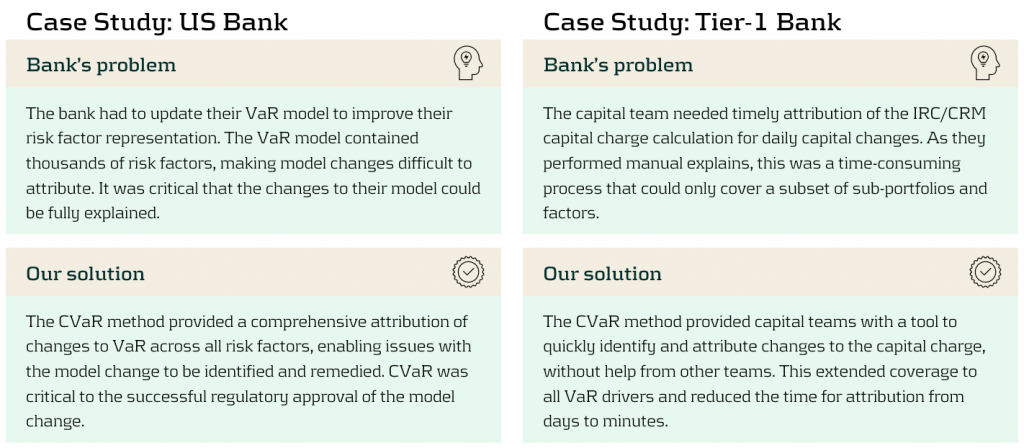

Zanders’ experiences with CVaR

We have implemented CVaR attribution for several large and complex VaR models, greatly improving efficiency and speed.

Conclusion

Although VaR attribution offers vital insights into the origins and drivers of risk, it is often complex and computationally demanding, particularly for large portfolios. Traditional manual methods are both time-consuming and inefficient. To address these challenges, we have proposed an automated CVaR attribution approach, which enhances efficiency, reduces workload, and provides timely and detailed risk driver insights. Our case studies demonstrate clear improvements in the coverage of VaR drivers and overall speed, enabling better risk management.

For more information on this topic, contact Dilbagh Kalsi (Partner) or Mark Baber (Senior Manager).

Regulatory exemptions during extreme market stresses: EBA publishes final RTS on extraordinary circumstances for continuing the use of internal models

Covid-19 exposed flaws in banks’ risk models, prompting regulatory exemptions, while new EBA technical standards aim to help identify and manage future extreme market stresses.

The Covid-19 pandemic triggered unprecedented market volatility, causing widespread failures in banks' internal risk models. These backtesting failures threatened to increase capital requirements and restrict the use of advanced models. To avoid a potentially dangerous feedback loop arising from reduced market liquidity, regulators responded by granting temporary exemptions for certain pandemic-related model exceptions. To respond faster to future crises and to limit unwarranted increases in banks’ capital requirements, more recent regulation explicitly addresses when and how similar exemptions may be granted.

Although the FRTB regulation briefly refers to such situations of market stress, in which exemptions from backtesting and profit and loss attribution (PLA) requirements may be granted, it provides very little explanation of how banks can demonstrate to regulators that such a scenario has occurred. On 28th June, the EBA published its final draft regulatory technical standards on extraordinary circumstances for continuing the use of internal models for market risk. These standards set out the EBA’s view on these exemptions and provide guidance on which indicators can be used to identify periods of extreme market stress.

Background and the BCBS

In the Basel III standards, the Basel Committee on Banking Supervision (BCBS) briefly comment on rare occasions of cross-border financial market stress or regime shifts (hereafter called extreme stresses) where, due to exceptional circumstances, banks may fail backtesting and the PLA test. In addition to backtesting overages, banks often see an increasing mismatch between Front Office and Risk P&L during periods of extreme stress, causing trading desks to fail PLA.

The BCBS comment that one potential supervisory response could be to allow the failing desks to continue using the internal models approach (IMA), but only if the bank's models are updated to adequately handle the extreme stresses. The BCBS make it clear that regulators will only consider the most extraordinary and systemic circumstances. The regulation does not, however, give any indication of what analysis banks can provide as evidence of the extreme stresses causing the backtesting or PLA failures.

The EBA’s standards

The EBA’s conditions for extraordinary circumstances, based on the BCBS regulation, provide some more guidance. Similar to the BCBS, the EBA’s main conditions are that a significant cross-border financial market stress has been observed or a major regime shift has taken place. They also agree that such scenarios would lead to poor outcomes of backtesting or PLA that do not relate to deficiencies in the internal model itself.

To assess whether the above conditions have been met, the EBA will consider the following criteria:

- Analysis of volatility indices (such as the VIX and the VSTOXX), and indicators of realised volatilities, which are deemed to be appropriate to capture the extreme stresses,

- Review of the above volatility analysis to check whether the observed levels are comparable to, or more extreme than, those observed during COVID-19 or the global financial crisis,

- Assessment of the speed at which the extreme stresses took place,

- Analysis of correlations and correlation indicators, which adequately capture the extreme stresses, and whether a significant and sudden change of them occurred,

- Analysis of how statistical characteristics during the period of extreme stresses differ from those during the reference period used for the calibration of the VaR model.
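As an illustration of the kind of analysis these criteria describe, the sketch below computes two simple indicators from daily returns: annualised realised volatility of a recent window relative to a benchmark stress period (for example, a Covid-19 window), and the change in average pairwise correlation versus the model's reference period. The window lengths, the equally weighted basket and the function names are hypothetical choices, and the outputs are meant as evidence for expert judgement rather than an automatic trigger.

```python
import numpy as np


def realised_vol(returns: np.ndarray, periods_per_year: int = 252) -> float:
    """Annualised realised volatility of a 1-D array of daily returns."""
    return float(np.std(returns, ddof=1) * np.sqrt(periods_per_year))


def avg_pairwise_corr(returns: np.ndarray) -> float:
    """Average off-diagonal correlation of a (n_days, n_assets) return matrix."""
    c = np.corrcoef(returns, rowvar=False)
    n = c.shape[0]
    return float((c.sum() - n) / (n * (n - 1)))


def stress_indicators(recent: np.ndarray, benchmark_stress: np.ndarray,
                      reference: np.ndarray) -> dict:
    """Compare a recent window with a benchmark stress period and a reference period.

    All inputs are (n_days, n_assets) daily return matrices; volatility is
    measured on an equally weighted basket of the assets (an assumption).
    """
    vol_recent = realised_vol(recent.mean(axis=1))
    vol_benchmark = realised_vol(benchmark_stress.mean(axis=1))
    return {
        "vol_vs_benchmark": vol_recent / vol_benchmark,   # ~1 or above: as extreme as benchmark
        "corr_change_vs_reference": avg_pairwise_corr(recent) - avg_pairwise_corr(reference),
    }


# Hypothetical data: calm, uncorrelated reference period vs correlated high-vol windows.
rng = np.random.default_rng(1)
reference = 0.01 * rng.standard_normal((250, 4))
recent = 0.03 * (0.8 * rng.standard_normal((60, 1)) + 0.6 * rng.standard_normal((60, 4)))
benchmark = 0.03 * (0.8 * rng.standard_normal((60, 1)) + 0.6 * rng.standard_normal((60, 4)))
print(stress_indicators(recent, benchmark, reference))
```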

The granularity of the criteria

The EBA make it clear that the standards do not provide an exhaustive list of suitable indicators to automatically trigger the recognition of extreme stresses. This is because they believe that each case of extreme stress is unique and could not be captured universally by a small set of prescribed indicators.

They note that defining a very specific set of indicators could lead banks to develop automated or quasi-automated triggering mechanisms for extreme stresses. Applied across many market scenarios, such mechanisms could produce a large number of unnecessary triggers because of the specificity of the prescribed indicators. The EBA therefore advise that the analysis should take a more general approach that considers the uniqueness of each extreme stress scenario.

Responses to questions

The publication also summarises responses to the original Consultation Paper EBA/CP/2023/19. The responses discuss several different indicators or factors, on top of the suggested volatility indices, that could be used to identify the extreme stresses:

- The responses highlight the importance of correlation indicators. This is because stress periods are characterised by dislocations in the market, which can show increased correlations and heightened systemic risk.

- They also mention the use of liquidity indicators, such as jumps in risk-free rates (RFRs) or overnight index swap (OIS) indicators. These liquidity indicators could be used to identify regime shifts by benchmarking against situations of significant cross-border market stress (for example, a liquidity crisis).

- Unusual deviations in the markets may also be strong indicators of extreme stresses. For example, there could be a rapid widening of spreads between emerging and developed markets triggered by a regional debt crisis. Unusual deviations between cash and derivatives markets, or large differences between futures/forward and spot prices, could also indicate extreme stresses.

- They suggest that restrictions on trading or delivery of financial instruments or commodities may be indicative of extreme stresses, for example the restrictions affecting the Russian ruble as a result of the Russia-Ukraine war.

- Finally, the responses highlighted that an unusual amount of backtesting overages, for example more than 2 in a month, could also be a useful indicator.
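The backtesting-overage indicator from the last response is straightforward to monitor. A minimal sketch, assuming daily P&L and VaR series aligned by date (VaR as a positive number), a 21-business-day window as a proxy for one month and the "more than 2" threshold quoted above; `rolling_overages` and `flag_unusual` are hypothetical names.

```python
import numpy as np


def rolling_overages(pnl: np.ndarray, var: np.ndarray, window: int = 21) -> np.ndarray:
    """Number of backtesting overages in each trailing window of `window` days.

    An overage is a day on which the loss (-pnl) exceeds the VaR figure.
    Element t counts overages on days t-window+1 .. t; early days use a shorter window.
    """
    exceed = (-np.asarray(pnl) > np.asarray(var)).astype(int)
    cum = np.concatenate(([0], np.cumsum(exceed)))   # cum[k] = overages on days 0..k-1
    t = np.arange(1, len(exceed) + 1)
    start = np.maximum(t - window, 0)
    return cum[t] - cum[start]


def flag_unusual(pnl, var, window: int = 21, threshold: int = 2) -> np.ndarray:
    """True on days where overages in the trailing window exceed `threshold`."""
    return rolling_overages(pnl, var, window) > threshold


# Hypothetical 30-day history: VaR of 1.0 every day, losses of 2.0 on four days.
pnl = np.zeros(30)
var = np.ones(30)
pnl[[5, 10, 15, 20]] = -2.0
print(np.flatnonzero(flag_unusual(pnl, var)))
```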

Zanders recommends

It’s important that banks are prepared for potential extreme stress scenarios in the future. To achieve this, we recommend the following:

- Develop a holistic set of indicators and metrics that capture signs of potential extreme stresses,

- Use early warning signals to preempt potential upcoming periods of stress,

- Benchmark the indicators and metrics against what was observed during the global financial crisis and Covid-19,

- Create suitable reporting frameworks to ensure the knowledge gathered from the above points is shared with relevant teams, supporting early remediation of issues.

Conclusion

During periods of extreme stress such as Covid-19 and the global financial crisis, banks’ internal models can fail, not because of modelling issues but because of systemic market issues. Under FRTB, the BCBS recognises this and may allow exemptions in these rare situations. The EBA’s recently published technical standards provide clearer guidance on which indicators can be used to identify such periods of extreme stress. Although they do not lay out a prescriptive and definitive set of indicators, the technical standards provide a starting point for banks to develop suitable monitoring frameworks.

For more information on this topic, contact Dilbagh Kalsi (Partner) or Hardial Kalsi (Manager).

The Ridge Backtest Metric: Backtesting Expected Shortfall

Explore how ridge backtesting addresses the intricate challenges of Expected Shortfall (ES) backtesting, offering a robust and insightful approach for modern risk management.

Challenges with backtesting Expected Shortfall

Recent regulations are increasingly moving toward the use of Expected Shortfall (ES) as a measure to capture risk. Although ES fixes many issues with VaR, there are challenges when it comes to backtesting.

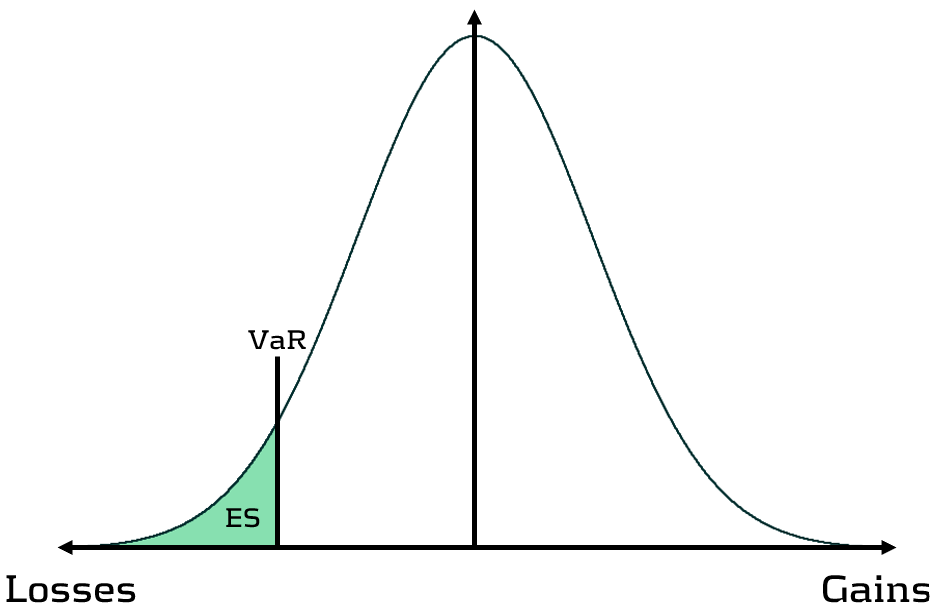

Although VaR has been widely used for decades, its shortcomings have prompted the switch to ES. Firstly, as a percentile measure, VaR does not adequately capture tail risk. VaR gives the loss that is not expected to be exceeded over a given time period at a specific confidence level, whereas ES gives the average of all potential losses greater than VaR (see figure 1). Consequently, unlike VaR, ES reflects the range of tail scenarios beyond the VaR threshold. Secondly, VaR is not sub-additive in general. ES, however, is sub-additive, which makes it better at accounting for diversification and performing attribution. As such, more recent regulation, such as FRTB, is replacing VaR with ES as a risk measure.

Figure 1: Comparison of VaR and ES
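The difference between the two measures can be shown with a minimal historical-simulation sketch. It assumes losses are positive numbers, takes VaR as the empirical quantile at the confidence level and ES as the average of the sample losses strictly above that VaR, as described above; the function name and sample data are illustrative only, and production implementations use more careful quantile and interpolation conventions.

```python
import math


def historical_var_es(losses, alpha=0.99):
    """Historical-simulation VaR and ES at confidence level alpha.

    losses: sample of portfolio losses (positive = loss).
    VaR is the empirical alpha-quantile; ES is the average of the sample
    losses strictly greater than that VaR.
    """
    ordered = sorted(losses)
    k = math.ceil(alpha * len(ordered)) - 1  # index of the empirical alpha-quantile
    var = ordered[k]
    tail = [x for x in ordered if x > var]
    es = sum(tail) / len(tail) if tail else var  # no loss beyond VaR: fall back to VaR
    return var, es


# Two illustrative samples that differ only in the size of the worst loss.
var, es = historical_var_es(range(1, 101), alpha=0.99)
var2, es2 = historical_var_es(list(range(1, 100)) + [1000], alpha=0.99)
```

Both samples give the same VaR (99), but ES rises from 100 to 1000 when the worst loss grows, which is exactly the tail information VaR ignores.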

Elicitability is often regarded as a necessary mathematical condition for backtestability. As ES, unlike VaR, is not elicitable, ES backtesting methods have been a topic of debate for over a decade. Backtesting and validating ES estimates is problematic: how can a daily ES estimate, which summarises the tail of a predicted loss distribution, be compared with a realised loss, which is a single draw from that distribution? Many existing attempts at backtesting have relied on approximations of ES, which inevitably introduces error into the calculations.
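A statistic is elicitable when some scoring function exists whose expected value is minimised by the correct forecast. For VaR, which is a quantile, that function is the quantile (pinball) loss; no such scoring function exists for ES on its own. The sketch below illustrates the VaR case: among candidate forecasts, the one with the lowest average pinball score against a loss sample is the empirical quantile. Function names and data are illustrative, and losses are assumed to be positive numbers.

```python
def pinball_loss(q, losses, alpha):
    """Average quantile (pinball) score of a VaR forecast q against realised losses."""
    return sum((float(x <= q) - alpha) * (q - x) for x in losses) / len(losses)


def best_forecast(candidates, losses, alpha):
    """Candidate forecast with the lowest average pinball score."""
    return min(candidates, key=lambda q: pinball_loss(q, losses, alpha))


losses = list(range(1, 101))
# Confidence level chosen so that the minimiser is unique on this sample.
best = best_forecast(range(1, 101), losses, alpha=0.955)
```

The minimiser is 96, the empirical 95.5% quantile of the sample. Scoring an ES forecast in the same way is not possible, which is why ES backtests need a different construction.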

The three main issues with ES backtesting can be summarised as follows:

- Transparency: Without reliable techniques for backtesting ES, banks lack transparency into the performance of their models. This is particularly problematic for regulatory compliance, such as FRTB.

- Sensitivity: Existing VaR and ES backtesting techniques are not sensitive to the magnitude of the overages. Instead, these techniques, such as the Traffic Light Test (TLT), only consider the frequency of overages that occur.

- Stability: As ES is conditional on VaR, any errors in VaR calculation lead to errors in ES. Many existing ES backtesting methodologies are highly sensitive to errors in the underlying VaR calculations.
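The frequency-only nature of the TLT can be seen in a short sketch. It uses the Basel traffic light zones for 99% VaR over 250 trading days (green for 0 to 4 overages, amber for 5 to 9, red for 10 or more); the function names and the two loss series are hypothetical.

```python
def count_overages(losses, var_estimates):
    """Number of days on which the realised loss (positive = loss) exceeds VaR."""
    return sum(1 for x, v in zip(losses, var_estimates) if x > v)


def traffic_light_zone(overages):
    """Basel traffic light zone for 99% VaR backtested over 250 trading days."""
    if overages <= 4:
        return "green"
    if overages <= 9:
        return "amber"  # called the yellow zone in the original Basel framework
    return "red"


# Two hypothetical 250-day loss series with overages on the same days; in the
# second, each overage exceeds VaR by three times as much.
var_est = [10.0] * 250
small = [5.0] * 244 + [11.0] * 6
large = [5.0] * 244 + [13.0] * 6
zones = (traffic_light_zone(count_overages(small, var_est)),
         traffic_light_zone(count_overages(large, var_est)))
```

Both series land in the amber zone, even though the second one has much larger tail losses.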

Ridge Backtesting: A solution to ES backtesting

One often-cited solution to the ES backtesting problem is the ridge backtesting approach. This method allows non-elicitable statistics, such as ES, to be backtested in a manner that is stable with regard to errors in the underlying VaR estimates. Unlike traditional VaR backtesting methods, it is also sensitive to the magnitude of the overages and not just their frequency.

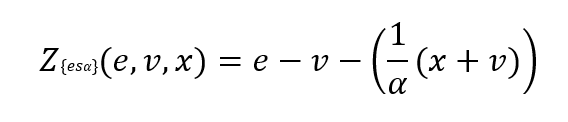

The ridge backtesting test statistic is defined as:

where 𝑣 is the VaR estimation, 𝑒 is the expected shortfall prediction, 𝑥 is the portfolio loss and 𝛼 is the confidence level for the VaR estimation.
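For reference, one common way to write the quantity behind a ridge-type ES backtest with these symbols is sketched below. It assumes 𝑥 is a loss (positive values are losses), 𝛼 is the confidence level (for example 0.975 for FRTB ES) and 𝑇 is the number of days in the backtesting window; sign conventions and normalisation differ between presentations, so this may not match the exact form used in the original figure.

$$
\begin{aligned}
d_t &= e_t - v_t - \frac{1}{1-\alpha}\max(x_t - v_t,\,0),\\
Z_{\mathrm{abs}} &= \frac{1}{T}\sum_{t=1}^{T} d_t, \qquad Z_{\mathrm{rel}} = \frac{1}{T}\sum_{t=1}^{T}\frac{d_t}{e_t}.
\end{aligned}
$$

For a continuous loss distribution, $\mathrm{ES}_\alpha = \mathrm{VaR}_\alpha + \frac{1}{1-\alpha}\,\mathbb{E}\big[(X-\mathrm{VaR}_\alpha)^+\big]$, so $\mathbb{E}[d_t] = 0$ when $v_t$ and $e_t$ are correct; a negative statistic points to under-predicted ES and a positive one to over-prediction. The stability with respect to VaR errors follows from

$$
\frac{\partial}{\partial v}\,\mathbb{E}\Big[e - v - \tfrac{1}{1-\alpha}(X-v)^+\Big] = -1 + \frac{\mathbb{P}(X>v)}{1-\alpha} = 0 \quad \text{at } v = \mathrm{VaR}_\alpha,
$$

so small errors in $v$ affect the expected statistic only at second order (the "ridge").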

The value of the ridge backtesting test statistic indicates whether the model is over- or under-predicting ES. The technique also allows for two types of backtesting: absolute and relative. Absolute backtesting is denominated in monetary terms and describes the absolute error between predicted and realised ES. Relative backtesting is dimensionless and describes the relative error between predicted and realised ES, which is particularly useful when comparing the ES of multiple portfolios. The ridge backtesting result can be mapped to the existing Basel TLT RAG zones, enabling efficient integration into existing risk frameworks.
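A minimal sketch of the absolute and relative statistics is given below. It assumes the per-day quantity d = e − v − max(x − v, 0)/(1 − α), with x a loss (positive = loss), v the VaR estimate, e the ES prediction and α the confidence level, averaged over the window; dividing d by e for the relative version is an assumed normalisation. The mapping to RAG zones is not shown, because the thresholds are not given here.

```python
def ridge_statistics(losses, var_preds, es_preds, alpha=0.975):
    """Absolute and relative ridge-type ES backtest statistics (sketch).

    Per day: d = e - v - max(x - v, 0) / (1 - alpha).
    Z_abs is the mean of d (in currency units); Z_rel is the mean of d / e
    (dimensionless). Negative values suggest ES is under-predicted.
    """
    if not (len(losses) == len(var_preds) == len(es_preds)) or not losses:
        raise ValueError("inputs must be non-empty and of equal length")
    scale = 1.0 / (1.0 - alpha)
    d = [e - v - scale * max(x - v, 0.0)
         for x, v, e in zip(losses, var_preds, es_preds)]
    z_abs = sum(d) / len(d)
    z_rel = sum(di / e for di, e in zip(d, es_preds)) / len(d)
    return z_abs, z_rel


# Four illustrative days with VaR 1 and ES 2: no overage, then one large overage.
z_abs, z_rel = ridge_statistics([0.0, 0.0, 0.0, 0.0], [1.0] * 4, [2.0] * 4)
z_abs2, z_rel2 = ridge_statistics([0.0, 0.0, 0.0, 2.0], [1.0] * 4, [2.0] * 4)
```

Without overages the statistic is positive (ES looks conservative); a single overage of one unit above VaR at α = 0.975 pulls it well below zero, with the size of the drop driven by the size of the overage.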

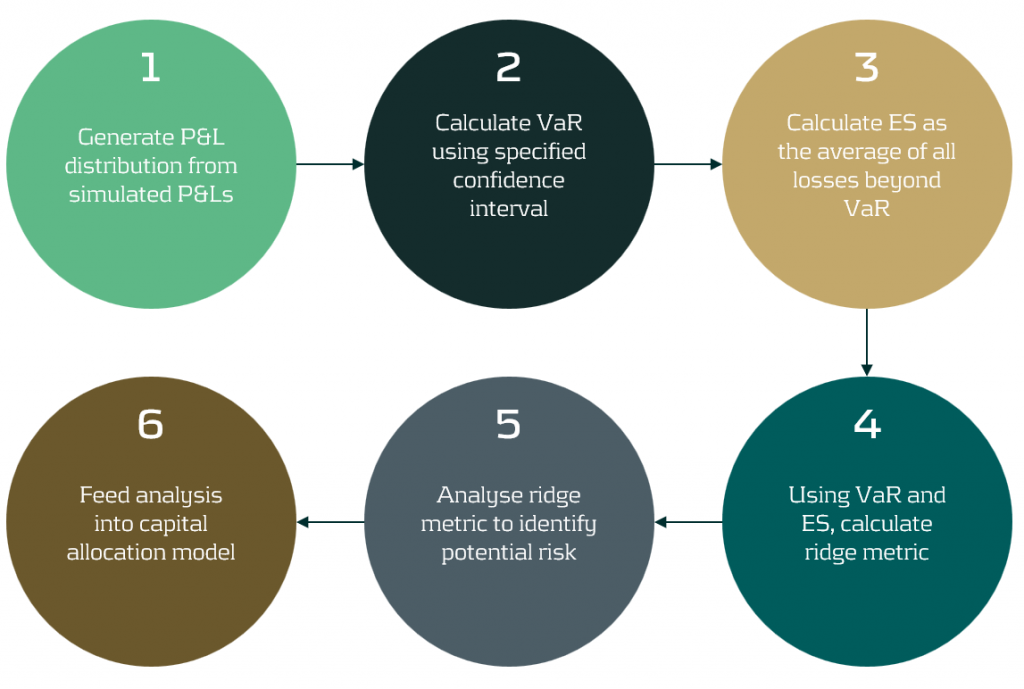

Figure 2: The ridge backtesting methodology

Sensitivity to Overage Magnitude

Unlike VaR backtesting, which does not distinguish between overages of different magnitudes, a major advantage of ES ridge backtesting is that it is sensitive to the size of each overage. This allows for better risk management as it identifies periods with large overages and also periods with high frequency of overages.

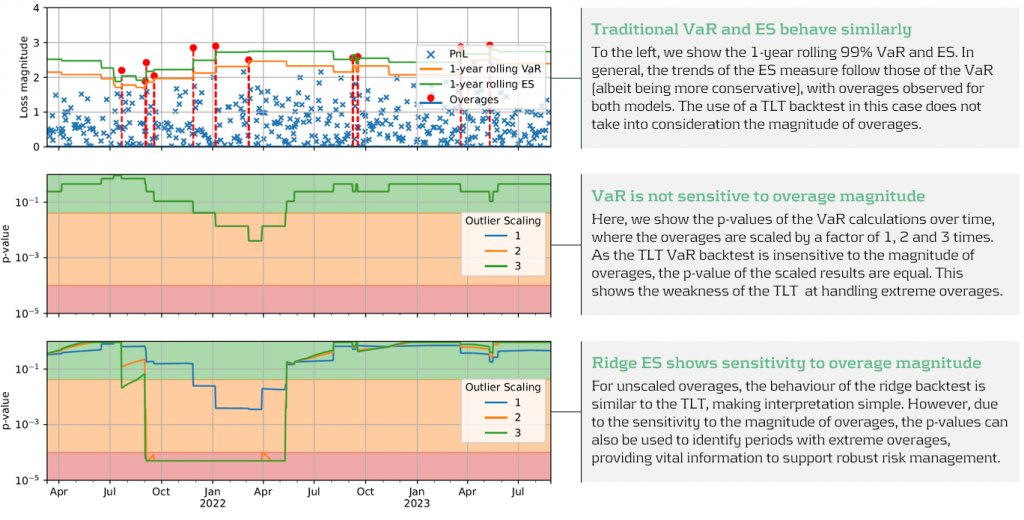

Below, in figure 3, we demonstrate the effectiveness of the ridge backtest by comparing it against a traditional VaR backtest. A scenario was constructed with P&Ls sampled from a Normal distribution, from which a 1-year 99% VaR and ES were computed. The sensitivity of ridge backtesting to overage magnitude is demonstrated by applying a range of scaling factors, increasing the size of overages by factors of 1, 2 and 3. The results show that unlike the traditional TLT, which is sensitive only to overage frequency, the ridge backtesting technique is effective at identifying both the frequency and magnitude of tail events. This enables risk managers to react more quickly to volatile markets, regime changes and mismodelling of their risk models.

Figure 3: Demonstration of ridge backtesting’s sensitivity to overage magnitude.
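A comparable experiment can be sketched in a few lines. This is an illustrative reconstruction, not the code behind Figure 3: losses are drawn from a standard normal distribution over an assumed 250-day window, the model's VaR and ES are the exact standard-normal values at 99%, and each overage x above VaR v is stretched to v + k(x − v) for k = 1, 2, 3. The ridge quantity is taken as d = e − v − max(x − v, 0)/(1 − α) averaged over the window; the function name and seed are hypothetical.

```python
import random
from statistics import NormalDist


def scaled_overage_demo(scales=(1, 2, 3), n_days=250, alpha=0.99, seed=7):
    """Compare overage counts with an absolute ridge statistic as overages grow."""
    nd = NormalDist()
    v = nd.inv_cdf(alpha)            # VaR of a standard normal loss
    e = nd.pdf(v) / (1.0 - alpha)    # ES of a standard normal loss
    rng = random.Random(seed)
    base = [rng.gauss(0.0, 1.0) for _ in range(n_days)]
    results = {}
    for k in scales:
        # Stretch only the overages; all other days are unchanged.
        losses = [v + k * (x - v) if x > v else x for x in base]
        overages = sum(1 for x in losses if x > v)
        d = [e - v - max(x - v, 0.0) / (1.0 - alpha) for x in losses]
        results[k] = (overages, sum(d) / n_days)
    return results


for k, (n_over, z_abs) in scaled_overage_demo().items():
    print(f"scale {k}: {n_over} overages, ridge Z_abs = {z_abs:.3f}")
```

Because stretching keeps every overage above VaR, the overage count (and therefore the traffic light zone) is identical for each scaling factor, while the ridge statistic falls as the overages grow.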

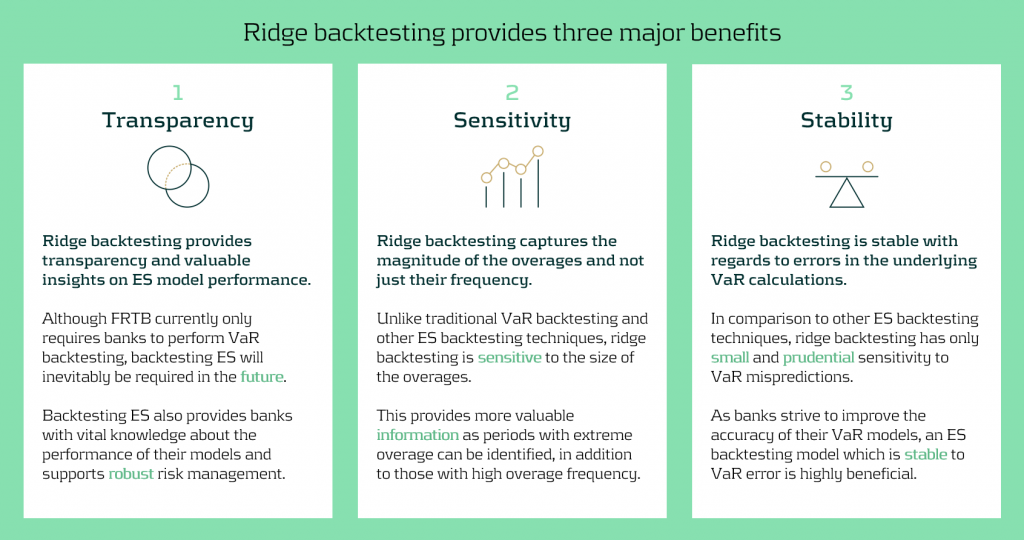

The Benefits of Ridge Backtesting

Rapidly changing regulation and market regimes require banks to enhance their risk management capabilities to be more reactive and robust. In addition to being a robust method for backtesting ES, ridge backtesting provides several other benefits over alternative backtesting techniques, giving banks metrics that are both sensitive and stable.

Despite the introduction of ES as a regulatory requirement for banks choosing the internal models approach (IMA), regulators currently do not require banks to backtest their ES models. This leaves a gap in banks’ risk management frameworks and highlights the need for a reliable ES backtesting technique. Banks are nevertheless implementing ES backtesting methodologies, both to be compliant with future regulation and to strengthen their risk management frameworks and develop a comprehensive understanding of their risk.

Ridge backtesting gives banks transparency into the performance of their ES models and greater reactivity to extreme events. It offers increased sensitivity over existing backtesting methodologies, providing information on both overage frequency and magnitude. The method is also stable with respect to underlying VaR mismodelling.

In figure 4 below, we summarise the three major benefits of ridge backtesting.

Figure 4: The three major benefits of ridge backtesting.

Conclusion

The lack of regulatory control and guidance on backtesting ES is an obvious concern for both regulators and banks. Without backtesting their ES models, banks cannot accurately monitor the reliability of their ES estimates. Although the complexities of backtesting ES have been a topic of ongoing debate, we have shown in this article that ridge backtesting provides a robust and informative solution. As it is sensitive to the magnitude of overages, it offers a clear benefit over traditional VaR TLT backtests, which are only sensitive to overage frequency. Although ES backtesting is not a regulatory requirement, regulators are starting to discuss and recommend it. For example, the PRA, EBA and Fed have all recommended ES backtesting in some of their latest publications. Even though regulation currently requires only VaR backtesting, banks should strive to implement ES backtesting, as it supports better risk management.

For more information on this topic, contact Dilbagh Kalsi (Partner) or Hardial Kalsi (Manager).

Exploring IFRS 9 Best Practices: Insights from Leading European Banks


Across Europe, banks apply different techniques to model their IFRS 9 Expected Credit Losses on a best-estimate basis. This diverse spectrum of modelling techniques raises the question: what can we learn from each other, so that we can all improve our own IFRS 9 frameworks? For this purpose, Zanders hosted a webinar on IFRS 9 on 29 May 2024. The webinar took the form of a panel discussion, led by Martijn de Groot, that explored the differences and similarities between banks across four topics. Each topic was introduced by one of the panelists: Pieter de Boer (ABN AMRO, Netherlands), Tobia Fasciati (UBS, Switzerland), Dimitar Kiryazov (Santander, UK) and Jakob Lavröd (Handelsbanken, Sweden).

The webinar showed that there are significant differences between European banks with regard to current IFRS 9 issues. One example is the lingering effect of the COVID-19 pandemic, which is more prominent in some countries than in others. We also saw that each bank is working on adaptable and resilient models to handle extreme economic scenarios, but that this remains a work in progress. Furthermore, the panel agreed that SICR remains a difficult metric to model and that, therefore, no significant changes to SICR models are to be expected.

Covid-19 and data quality

The first topic covered the COVID-19 period and data quality. The poll revealed that many participants struggle to manage shifts in their IFRS 9 models resulting from COVID-19 developments. Pieter highlighted that many banks, especially in the Netherlands, have to deal with distorted data due to (strong) government support measures. He said this resulted in large shifts in macroeconomic variables but no significant change in the observed default rate. As a result, historical data is not representative of the current economic environment, which distorts the relationship between economic drivers and credit risk. One possible solution is to exclude the COVID-19 period, but this results in a loss of data, while including it has a significant impact on the modelled relationships. He also touched on the inclusion of dummy variables, although exactly how to specify them remains difficult.

Dimitar echoed these concerns, which are also present in the UK. He proposed using the COVID-19 period as an out-of-sample validation to assess model performance without government interventions. He also discussed the boundaries of IFRS 9 models: namely, he questioned whether models remain reliable when inputs move to extreme values outside the range of the data. He added that this also has implications for stress testing, as COVID-19 is a real-life stress scenario, and that other modelling techniques, such as regime-switching models, may need to be considered.

Jakob found the dummy variable approach interesting and also suggested the Kalman filter or a dummy variable that can change over time. He pointed out that we need to determine whether the long-term trend has been disturbed or whether we can converge back to it. He also mentioned the need for a common data pipeline that can also be used for IRB models. Pieter and Tobia agreed, but stressed that this is difficult since IFRS 9 models include macroeconomic variables and are typically more complex than IRB models.

Significant Increase in Credit Risk

The second topic covered the significant increase in credit risk (SICR). Jakob discussed the complexity of assessing SICR and the lack of comprehensive guidance. He stressed the importance of looking at the risk at origination, which indicates how much additional risk can be sustained before a SICR is deemed to have occurred.

Tobia pointed out that SICR is very difficult to calibrate and almost impossible to backtest. Dimitar also touched on the subject and mentioned that SICR remains an accounting concept with significant implications for the P&L. The UK has very little regulation on this subject and only requires banks to have sufficient staging criteria. For these reasons, he does not see the industry converging anytime soon; in his view, it will take regulators to incentivize banks to do so. Dimitar, Jakob and Tobia also touched upon collective SICR, but all agreed this is difficult to do in practice.

Post Model Adjustments

The third topic covered post-model adjustments (PMAs). The poll results implied that most banks still have PMAs in place for their IFRS 9 provisions. Dimitar responded that in the UK, the level of PMAs has mostly reverted to its long-term equilibrium. He stated that regulators are forcing banks to re-evaluate PMAs by requiring them to identify the root cause. In addition, banks are required to have a strategy for when these PMAs are re-evaluated or retired, and for how they are integrated into the model risk management cycle. Dimitar further argued that before COVID-19, PMAs were used solely to account for idiosyncratic risk, but they stayed around for longer than anticipated. They were also used countercyclically, which is unexpected since IFRS 9 estimates are considered to be procyclical. In the UK, banks are now building PMA frameworks, which will most likely evolve over the coming years.

Jakob stressed that PMAs should be applied at parameter level rather than at ECL level to ensure more precise adjustments. He also mentioned that it is important to look at what comes before the modelling, such as the scenario weights. At Handelsbanken, they first look at smaller portfolios, which receive smaller modelling efforts; for the larger portfolios, PMAs tend to play less of a role. Pieter added that PMAs can be used to account for emerging risks, such as climate and environmental risks, that are not yet present in the data. He also stressed that it is difficult to find a balance between auditors, who prefer best-estimate provisions, and the regulator, who prefers higher provisions.

Linking IFRS 9 with Stress Testing Models

The final topic linked IFRS 9 and stress testing. The poll revealed that most participants use the same models for both. Tobia explained that at UBS the IFRS 9 model was incorporated into the stress testing framework early on, and highlighted the flexibility this offers when integrating ECL forecasts into stress testing. Furthermore, he stated that IFRS 9 models can cope with stress, as the main challenge lies in the scenario definition. This contrasts with others who have argued that IFRS 9 models may not work well under stress. Tobia also mentioned that IFRS 9 stress testing and traditional stress testing need aligned assumptions before the two are integrated.

Jakob agreed and talked about the perfect foresight assumption, under which there is no need for additional scenarios and a weight of 100% is simply put on the stressed scenario. He also noted that IFRS 9 requires a non-zero ECL, whereas a highly collateralized portfolio could result in zero ECL. Stress testing can help to find where in the portfolio a loss could arise, and gives valuable insight into the conditions under which you would take a loss.
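The difference between probability-weighted scenarios and the perfect foresight assumption, and the zero-ECL issue for highly collateralized exposures, can be illustrated with a simplified sketch. It computes ECL per scenario as PD × LGD × EAD, with LGD reduced to the uncollateralized share of EAD (collateral at face value, no haircuts, costs or cure rates). These simplifications, the function names, the PDs and the scenario weights are all hypothetical.

```python
def scenario_ecl(pd, ead, collateral):
    """ECL for one scenario as PD x LGD x EAD (simplified).

    LGD is approximated by the uncollateralized share of EAD; collateral is
    taken at face value with no haircuts, costs or cure rates.
    """
    lgd = max(ead - collateral, 0.0) / ead
    return pd * lgd * ead


def probability_weighted_ecl(ecl_by_scenario, weights):
    """Scenario-weighted ECL; weights must sum to one."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("scenario weights must sum to 1")
    return sum(w * ecl_by_scenario[s] for s, w in weights.items())


pds = {"upside": 0.01, "base": 0.02, "downside": 0.05}  # hypothetical PDs
unsecured = {s: scenario_ecl(p, ead=1_000.0, collateral=0.0) for s, p in pds.items()}
secured = {s: scenario_ecl(p, ead=1_000.0, collateral=1_200.0) for s, p in pds.items()}

blended = probability_weighted_ecl(unsecured, {"upside": 0.2, "base": 0.5, "downside": 0.3})
foresight = probability_weighted_ecl(unsecured, {"downside": 1.0})  # 100% on the stressed scenario
secured_foresight = probability_weighted_ecl(secured, {"downside": 1.0})
```

With these inputs the weighted ECL is 27 and the perfect-foresight ECL is 50, while the over-collateralized exposure yields zero ECL even in the stressed scenario, which is the situation where a stress test helps to locate the conditions under which a loss would occur.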

Pieter pointed out that IFRS 9 models differ in the number of macroeconomic variables typically used. When you are stress testing variables that are not present in your IFRS 9 model, this could become very complicated. He stressed that the purpose of both models is different, and therefore integrating both can be challenging. Dimitar said that the range of macroeconomic scenarios considered for IFRS 9 is not so far off from regulatory mandated stress scenarios in terms of severity. However, he agreed with Pieter that there are different types of recessions that you can choose to simulate through your IFRS 9 scenarios versus what a regulator has identified as systemic risk for an industry. He said you need to consider whether you are comfortable relying on your impairment models for that specific scenario.

This topic concluded the webinar on differences and similarities across European countries regarding IFRS 9. We would like to thank the panelists for the interesting discussion and insights, and the more than 100 participants for joining this webinar.

Interested to learn more? Contact Kasper Wijshoff, Michiel Harmsen or Polly Wong for questions on IFRS 9.

Navigating SAP’s GROW and RISE Products: The Impact of Cloud Solutions on Treasury Operations


As organizations continue to adapt to the rapidly changing business landscape, one of the most pivotal shifts is the migration of enterprise resource planning (ERP) systems to the cloud. The evolution of treasury operations is a prime example of how cloud-based solutions are revolutionizing the way businesses manage their financial assets. This article dives into the nuances between SAP’s GROW (public cloud) and RISE (private cloud) products, particularly focusing on their impact on treasury operations.

The "GROW" product targets new clients who want to quickly leverage the public cloud's scalability and standard processes. In contrast, the "RISE" product is designed for existing SAP clients aiming to migrate their current systems efficiently into the private cloud.

Public Cloud vs. Private Cloud

The public cloud, exemplified by SAP's "GROW" package, operates on a shared infrastructure hosted by providers such as SAP, Alibaba, or AWS. Public cloud services are scalable, reliable, and flexible, offering key business applications and storage managed by the cloud service providers. Upgrades are mandatory and occur on a six-month release cycle. All configuration is conducted through SAP Fiori, making this solution particularly appealing to upper mid-market net new customers seeking to operate using industry-standard processes and maintain scalable operations.

In contrast, the private cloud model, exemplified by the “RISE” package, is used exclusively by a single business or organization and must be hosted at SAP or an SAP-approved hyperscaler of their choice. The private cloud offers enhanced control and security, catering to specific business needs with personalized services and infrastructure according to customer preferences. It provides configuration flexibility through both SAP Fiori and the SAP GUI. This solution is mostly preferred by large enterprises, and many customers are moving from ECC to S/4HANA due to its customizability and heightened security.

Key Differences in Cloud Approaches

Distinguishing between public and private cloud methodologies involves examining factors like control, cost, security, scalability, upgrades, configuration & customization, and migration. Each factor plays a crucial role in determining which cloud strategy aligns with an organization's vision for treasury operations.

- Control: The private cloud model emphasizes control, giving organizations exclusive command over security and data configurations. The public cloud is managed by external providers, offering less control but relieving the organization from day-to-day cloud management.

- Cost: Both the public and private cloud operate on a subscription model. However, managing a private cloud infrastructure requires significant upfront investment and a dedicated IT team for ongoing maintenance, updates, and monitoring, making it a time-consuming and resource-intensive option. This makes the public cloud potentially the more cost-effective option for organizations.

- Security: Both GROW and RISE are hosted by SAP or hyperscalers, offering strong security measures. There is no significant difference in security levels between the two models.

- Scalability: The public cloud offers unmatched scalability, allowing businesses to respond quickly to increased demands without the need for physical hardware changes. Private clouds can also be scaled, but this usually requires additional hardware or software and IT support, making them less dynamic.

- Upgrades: The public cloud requires mandatory upgrades every six months, whereas the private cloud allows organizations to dictate the cadence of system updates, such as opting for upgrades every five years or as needed.

- Configuration and Customization: In the public cloud, configuration is more limited, with fewer BAdIs and APIs available and no modifications allowed. The private cloud allows for extensive configuration through IMG and permits SAP code modification, providing greater flexibility and control.

- Migration: The public cloud supports only greenfield implementations, which means only current positions can be migrated, not historical transactions. The private cloud offers migration programs from ECC, allowing historical data to be transferred.

Impact on Treasury Operations

The impact of SAP’s GROW (public cloud) and RISE (private cloud) solutions on treasury operations largely hinges on the degree of tailoring required by an organization’s treasury processes. If your treasury processes require minimal or no tailoring, both public and private cloud options could be suitable. However, if your treasury processes are tailored and structured around specific needs, only the private cloud remains a viable option.

In the private cloud, you can add custom code, modify SAP code, and access a wider range of configuration options, providing greater flexibility and control. In contrast, the public cloud does not allow SAP code modification but does offer limited custom code through cloud BAdIs and extensibility. Additionally, the public cloud emphasizes efficiency and user accessibility through a unified interface (SAP Fiori), simplifying setup with self-service elements and expert oversight. The private cloud, on the other hand, employs a detailed system customization approach (using SAP Fiori & GUI), appealing to companies seeking granular control.

Another important consideration is the mandatory six-monthly upgrade in the public cloud, which requires you to test SAP functionality for each activated scope item affected by an update; this can be demanding. The advantage is that your system always runs on the latest functionality. This is not the case in the private cloud, where you have more control over system updates. With the private cloud, organizations can dictate the cadence of system updates (e.g., opting for yearly upgrades), the type of updates (e.g., focusing on security patches or functional upgrades), and the level of updates (e.g., keeping the system one release behind the latest, which is a common practice).

To accurately assess the impact on your treasury activities, consider the current stage of your company's lifecycle and identify where and when customization is needed for your treasury operations. For example, legacy companies with entrenched processes may find the rigidity of public cloud functionality challenging. In contrast, new companies without established processes can greatly benefit from the pre-delivered set of best practices in the public cloud, providing an excellent starting point to accelerate implementation.

Factors Influencing Choices

Organizations choose between public and private cloud options based on factors like size, compliance, operational complexity, and the degree of entrenched processes. Larger companies may prefer private clouds for enhanced security and customization capabilities. Startups to mid-size enterprises may favor the flexibility and cost-effectiveness of public clouds during rapid growth. Additionally, companies might opt for a hybrid approach, incorporating elements of both cloud models. For instance, a Treasury Sidecar might be deployed on the public cloud to leverage scalability and innovation while maintaining the main ERP system on-premise or on the private cloud for greater control and customization. This hybrid strategy allows organizations to tailor their infrastructure to meet specific operational needs while maximizing the advantages of both cloud environments.

Conclusion

Migrating ERP systems to the cloud can significantly enhance treasury operations, with distinct options available through SAP's public and private cloud solutions. Public clouds offer scalable, cost-effective solutions ideal for mid-market to upper-mid-market enterprises with standard processes or without pre-existing processes. They emphasize efficiency, user accessibility, and mandatory upgrades every six months. In contrast, private clouds provide enhanced control, security, and customization, catering to larger enterprises with specific regulatory needs and the ability to modify SAP code.

Choosing the right cloud model for treasury operations depends on an organization's current and future customization needs. If minimal customization is required, either option could be suitable. However, for customized treasury processes, the private cloud is preferable. The decision should consider the company's lifecycle stage, with public clouds favoring rapid growth and cost efficiency and private clouds offering long-term control and security.

It is also important to note that SAP continues to offer on-premises solutions for organizations that require or prefer traditional deployment methods. This article focuses on cloud solutions, but on-premises remains a viable option for businesses that prioritize complete control over their infrastructure and have the necessary resources to manage it independently.

If you need help thinking through your decision, we at Zanders would be happy to assist you.

Unlocking the Hidden Gems of the SAP Credit Risk Analyzer


While many business and SAP users are familiar with the core functionality of the SAP Credit Risk Analyzer, such as limit management with different limit types and the determination of attributable amounts, several lesser-known SAP standard features can enhance your credit risk management processes.

In this article, we explore these hidden gems, such as Group Business Partners and the management of limit utilization using manual reservations and collateral.

Group Business Partner Use

One of the powerful yet often overlooked features of the SAP Credit Risk Analyzer is the ability to use Group Business Partners (BP). This functionality allows you to manage credit and settlement risk at a bank group level rather than at an individual transactional BP level. By consolidating credit and settlement exposure for related entities under a single group business partner, you gain a holistic view of the risks associated with an entire banking group. This is particularly beneficial for organizations that deal with banking groups globally and allocate a certain amount of credit/settlement exposure to each group. Since credit ratings are often assigned at bank group level, the use of Group BPs can be extended further by including credit ratings, such as those from S&P or Fitch.

Configuration: Define the business partner relationship by selecting the proper relationship category (e.g., Subsidiary of) and setting the Attribute Direction to "Also count transactions from Partner 1 towards Partner 2," where Partner 2 is the group BP.

Master Data: Group BPs can be defined in the SAP Business Partner master data (t-code BP). Ensure that all related local transactional BPs are added in a relationship to the appropriate group business partner, and that the validity period of each BP relationship covers the dates for which exposure should be consolidated. Risk limits are created using the group BP instead of the transactional BP.

Reporting: Limit utilization (t-code TBLB) is consolidated at the group BP level. Detailed utilization lines show the transactional BP, which can be used to build multiple report variants to break down the limit utilization by transactional BP (per country, region, etc.).
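To make the consolidation logic concrete, the following conceptual sketch rolls the exposures of transactional BPs up to their Group BP while keeping a breakdown per transactional BP, similar in spirit to the detailed utilization lines in TBLB. It is plain Python, not SAP code, configuration or an SAP API; the BP names, the data layout and the rule that exposure stays on the transactional BP when the relationship is not valid on the key date are illustrative assumptions.

```python
from collections import defaultdict
from datetime import date


def consolidate_to_groups(exposures, relationships, key_date):
    """Roll transactional-BP exposures up to group BPs (conceptual sketch).

    exposures: list of (transactional_bp, amount)
    relationships: {transactional_bp: (group_bp, valid_from, valid_to)}
    Exposure whose relationship is missing or not valid on key_date stays on
    the transactional BP itself (simplifying assumption).
    """
    totals = defaultdict(float)
    breakdown = defaultdict(lambda: defaultdict(float))
    for bp, amount in exposures:
        rel = relationships.get(bp)
        limit_bp = rel[0] if rel and rel[1] <= key_date <= rel[2] else bp
        totals[limit_bp] += amount
        breakdown[limit_bp][bp] += amount
    return {k: {"total": totals[k], "by_bp": dict(breakdown[k])} for k in totals}


# Hypothetical BPs: the US entity's relationship only becomes valid in 2025.
rels = {
    "BANK_A_DE": ("BANK_A_GROUP", date(2024, 1, 1), date(9999, 12, 31)),
    "BANK_A_UK": ("BANK_A_GROUP", date(2024, 1, 1), date(9999, 12, 31)),
    "BANK_A_US": ("BANK_A_GROUP", date(2025, 1, 1), date(9999, 12, 31)),
}
exposures = [("BANK_A_DE", 5.0), ("BANK_A_UK", 3.0), ("BANK_A_US", 2.0), ("BANK_A_DE", 1.0)]
util = consolidate_to_groups(exposures, rels, key_date=date(2024, 6, 30))
```

In this example the group limit sees 9.0 of exposure, and the 2.0 booked on the US entity is missing from the group view because its relationship is not yet valid, which is why the validity period of the BP relationship matters.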

Having explored the benefits of using Group Business Partners, another feature that offers significant flexibility in managing credit risk is the use of manual reservations and collateral contracts.

Use of Manual Reservations

Manual reservations in the SAP Credit Risk Analyzer provide an additional layer of flexibility in managing limit utilization. This feature allows risk managers to manually add a portion of the credit/settlement utilization for specific purposes or transactions, ensuring that critical operations are not hindered by unexpected credit or settlement exposure. It is often used as a workaround for issues such as market data problems, when SAP is not able to calculate the NPV, or for complex financial instruments not yet supported in the Treasury Risk Management (TRM) or Credit Risk Analyzer (CRA) settings.

Configuration: Apart from basic settings in the limit management, no extra settings are required in SAP standard, making the use of reservations simpler.

Master data: Use transaction codes such as TLR1 to TLR3 to create, change, and display the reservations, and TLR4 to collectively process them. Define the reservation amount, specify the validity period, and assign it to the relevant business partner, transaction, limit product group, portfolio, etc. Prior to saving the reservation, check in which limits your reservation will be reflected to avoid having any idle or misused reservations in SAP.

While manual reservations provide a significant boost to flexibility in limit management, another critical aspect of credit risk management is the handling of collateral.

Collateral

Collateral agreements are a fundamental aspect of credit risk management, providing security against potential defaults. The SAP Credit Risk Analyzer offers functionality for managing collateral agreements, enabling corporates to track and value collateral effectively. This ensures that the collateral provided is sufficient to cover the exposure, thus reducing the risk of loss.

SAP TRM supports two levels of collateral agreements:

- Single-transaction-related collateral

- Collateral agreements

Both levels are used to reduce the risk at the level of attributable amounts, thereby reducing the utilization of limits.

Single-transaction-related collateral: SAP distinguishes three collateral value categories:

- Percentual collateralization

- Collateralization using a collateral amount

- Collateralization using securities

Configuration: Configure collateral types and collateral priorities, define collateral valuation rules, and set up the netting group.

Master Data: Use t-code KLSI01_CFM to create collateral provisions at the appropriate level and value. Then, this provision ID can be added to the financial object.

Reporting: Both manual reservations and collateral agreements are visible in the limit utilization report as stand-alone utilization items.
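A conceptual sketch of how single-transaction collateral and manual reservations could feed into limit utilization is shown below, in plain Python rather than SAP code or configuration. The function names, the order of operations and the simplified rules (percentual collateralization reduces the attributable amount by a percentage; a collateral amount is subtracted and floored at zero) are assumptions for illustration; collateralization using securities and collateral agreements are not modelled.

```python
def net_attributable(amount, collateral=None):
    """Attributable amount after single-transaction collateral (simplified).

    collateral: None, ("percent", p) for percentual collateralization, or
    ("amount", c) for collateralization using a collateral amount.
    """
    if collateral is None:
        return amount
    kind, value = collateral
    if kind == "percent":
        return amount * (1.0 - value / 100.0)
    if kind == "amount":
        return max(amount - value, 0.0)
    raise ValueError(f"unsupported collateral type: {kind}")


def limit_utilization(transactions, reservations=()):
    """Utilization = collateral-adjusted attributable amounts + manual reservations.

    transactions: list of (attributable_amount, collateral) pairs.
    Reservations are kept as separate line items, in the spirit of the
    stand-alone items in the utilization report.
    """
    items = [("transaction", net_attributable(a, c)) for a, c in transactions]
    items += [("reservation", r) for r in reservations]
    return sum(v for _, v in items), items


total, items = limit_utilization(
    [(100.0, None), (200.0, ("percent", 50)), (80.0, ("amount", 100.0))],
    reservations=[25.0],
)
```

Here collateral reduces the three attributable amounts to 100, 100 and 0, and the manual reservation adds 25, giving a utilization of 225.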

By leveraging these advanced features, businesses can significantly enhance their risk management processes.

Conclusion

The SAP Credit Risk Analyzer is a comprehensive tool that offers much more than meets the eye. By leveraging its hidden functionalities, such as Group Business Partner use, manual reservations, and collateral agreements, businesses can significantly enhance their credit risk management processes. These features not only provide greater flexibility and control but also ensure a more holistic and robust approach to managing credit risk. As organizations continue to navigate the complexities of the financial landscape, unlocking the full potential of the SAP Credit Risk Analyzer can be a game-changer in achieving effective risk management.

If you have questions or are keen to see the functionality in our Zanders SAP Demo system, please feel free to contact Aleksei Abakumov or any Zanders SAP consultant.

Default modelling in an age of agility


In brief:

- Prevailing uncertainty in geopolitical, economic and regulatory environments demands a more dynamic approach to default modelling.

- Traditional methods such as logistic regression fail to address the non-linear characteristics of credit risk.

- Score-based models can be cumbersome to calibrate with expert input and can lack the insight of human wisdom.

- Machine learning lacks the interpretability expected in a world where transparency is paramount.

- The Bayesian Gaussian Process Classifier defines lending parameters in a more holistic way, sharpening a bank’s ability to approve creditworthy borrowers and reject proposals from counterparties at high risk of default.

Historically high levels of economic volatility, persistent geopolitical unrest, a fast-evolving regulatory environment: this perpetual stream of disruption is highlighting the limitations and vulnerabilities of many credit risk approaches. In an era of persistent uncertainty, predicting the risk of default is becoming more complex, and banks are increasingly seeking a modelling approach that offers more flexibility, interpretability, and efficiency.

While logistic regression remains the market standard, the evolution of the digital treasury is arming risk managers with a more varied toolkit of methodologies, including those powered by machine learning. This article focuses on the Bayesian Gaussian Process Classifier (GPC) and the merits it offers compared to machine learning, score-based models, and logistic regression.

A non-parametric alternative to logistic regression

The days of approaching credit risk in a linear, one-dimensional fashion are numbered. In today’s fast-paced and uncertain world, to remain resilient to rising credit risk, banks have no choice other than to consider all directions at once. With the GPC approach, the linear combination of explanatory variables is replaced by a function, which is iteratively updated by applying Bayes’ rule (see Bayesian Classification With Gaussian Processes for further detail).
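The contrast can be written compactly as follows. This is the standard textbook formulation of binary Gaussian process classification with a logistic link, given for orientation only; the notation is ours, and the article does not state which link function, kernel or approximation scheme is used in practice.

```latex
\begin{aligned}
\text{Logistic regression:}\quad & P(y_i = 1 \mid \mathbf{x}_i) = \sigma\!\left(\boldsymbol{\beta}^\top \mathbf{x}_i\right),
  \qquad \sigma(z) = \frac{1}{1 + e^{-z}} \\
\text{GPC:}\quad & P(y_i = 1 \mid f) = \sigma\!\left(f(\mathbf{x}_i)\right),
  \qquad f \sim \mathcal{GP}\!\left(0,\; k_{\boldsymbol{\theta}}(\mathbf{x}, \mathbf{x}')\right) \\
\text{Bayes' rule:}\quad & p(\mathbf{f} \mid X, \mathbf{y})
  = \frac{p(\mathbf{y} \mid \mathbf{f})\; p(\mathbf{f} \mid X)}{p(\mathbf{y} \mid X)},
  \qquad p(\mathbf{f} \mid X) = \mathcal{N}(\mathbf{0}, K),\;\; K_{ij} = k_{\boldsymbol{\theta}}(\mathbf{x}_i, \mathbf{x}_j)
\end{aligned}
```

Here \(\mathbf{f} = (f(\mathbf{x}_1), \dots, f(\mathbf{x}_n))\) collects the latent scores of the n borrowers. Because the logistic likelihood is not Gaussian, the posterior has no closed form and is usually approximated, for example with the Laplace approximation or expectation propagation.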

For default modelling, the function is given a Gaussian process prior, under which its values across any set of borrowers follow a multivariate Gaussian distribution; this is what removes the linearity constraint. It allows the GPC to parallel machine learning (ML) methodologies, specifically in its flexibility to incorporate a variety of data types and variables and its capability to capture complex patterns hidden within financial datasets.
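To show the non-linear behaviour in practice, the sketch below fits a binary GPC with a logistic link using the Laplace approximation (the Newton iteration and predictive equations of Rasmussen and Williams, Gaussian Processes for Machine Learning, Algorithms 3.1 and 3.2), written in plain Python so every step is visible. The kernel, its fixed hyperparameters and the toy data are assumptions for illustration, not a Zanders model: defaults sit at both ends of a single hypothetical ratio, a U-shaped pattern that a logistic regression with a linear predictor cannot represent.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def kernel(a, b, lengthscales, variance=1.0):
    # Squared-exponential kernel with one length-scale per feature (ARD)
    d2 = sum(((ai - bi) / l) ** 2 for ai, bi, l in zip(a, b, lengthscales))
    return variance * math.exp(-0.5 * d2)


def cholesky(A):
    # Lower-triangular L with A = L L^T (A symmetric positive definite)
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(A[i][i] - s) if i == j else (A[i][j] - s) / L[j][j]
    return L


def solve_lower(L, b):
    # Forward substitution: solves L x = b
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x


def solve_upper(L, b):
    # Back substitution: solves L^T x = b using the lower-triangular L
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def laplace_terms(K, f):
    # p = sigmoid(f); W = p(1 - p) is the curvature of the negative log-likelihood;
    # B = I + W^1/2 K W^1/2 is well conditioned, so its Cholesky factor is stable
    p = [sigmoid(v) for v in f]
    sw = [math.sqrt(pk * (1.0 - pk)) for pk in p]
    n = len(f)
    B = [[(1.0 if i == j else 0.0) + sw[i] * K[i][j] * sw[j] for j in range(n)] for i in range(n)]
    return p, sw, cholesky(B)


def fit(X, y, lengthscales, variance=1.0, max_iter=100, tol=1e-9):
    """Newton iterations for the posterior mode of the latent f (y in {0, 1})."""
    n = len(X)
    K = [[kernel(X[i], X[j], lengthscales, variance) for j in range(n)] for i in range(n)]
    f = [0.0] * n
    for _ in range(max_iter):
        p, sw, L = laplace_terms(K, f)
        b = [sw[i] ** 2 * f[i] + (y[i] - p[i]) for i in range(n)]
        Kb = [sum(K[i][j] * b[j] for j in range(n)) for i in range(n)]
        c = solve_upper(L, solve_lower(L, [sw[i] * Kb[i] for i in range(n)]))
        a = [b[i] - sw[i] * c[i] for i in range(n)]
        f_new = [sum(K[i][j] * a[j] for j in range(n)) for i in range(n)]
        step = max(abs(u - v) for u, v in zip(f_new, f))
        f = f_new
        if step < tol:
            break
    return {"X": X, "y": y, "K": K, "f": f, "ls": lengthscales, "var": variance}


def predict_pd(model, x):
    """Approximate posterior probability of default at a new point x."""
    p, sw, L = laplace_terms(model["K"], model["f"])
    ks = [kernel(xi, x, model["ls"], model["var"]) for xi in model["X"]]
    mean = sum(k * (yi - pk) for k, yi, pk in zip(ks, model["y"], p))
    v = solve_lower(L, [s * k for s, k in zip(sw, ks)])
    var = kernel(x, x, model["ls"], model["var"]) - sum(vi * vi for vi in v)
    # Probit-style approximation of E[sigmoid(f*)] with f* ~ N(mean, var)
    return sigmoid(mean / math.sqrt(1.0 + math.pi * var / 8.0))


# Hypothetical data: one scaled ratio per borrower, defaults (1) at both extremes
X = [(0.05,), (0.10,), (0.40,), (0.50,), (0.60,), (0.90,), (0.95,)]
y = [1, 1, 0, 0, 0, 1, 1]
model = fit(X, y, lengthscales=(0.15,), variance=4.0)
for x in [(0.02,), (0.50,), (0.97,)]:
    print(x[0], round(predict_pd(model, x), 3))
```

The predicted probability of default is high at both extremes and low in the middle. In a real application the hyperparameters would be estimated from data rather than fixed by hand.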

A model enriched by expert wisdom

Another way GPC resembles machine learning is in how it loosens the rigid assumptions that characterize many traditional approaches, including logistic regression and score-based models. Take, for example, the score-based Corporate Rating Model (CRM) developed by Zanders, our go-to model for assessing the creditworthiness of corporate counterparties. However, calibrating this model and embedding the opinion of Zanders’ corporate rating experts is a time-consuming task. The GPC approach streamlines this process significantly, delivering both cost and time efficiencies. The incorporation of prior beliefs via Bayesian inference permits expert knowledge to be integrated into the model, allowing it to reflect predetermined views on the importance of certain variables. As a result, the efficiency gains achieved through the GPC approach don’t come at the cost of expert wisdom.
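One common way to encode such prior beliefs, shown here as an illustration rather than as the CRM calibration itself, is to place a prior on the kernel hyperparameters and maximize the resulting posterior instead of the marginal likelihood alone. The log-normal prior on each ARD length-scale is our assumption: a small centre for variable d expresses an expert view that the variable matters, and a small spread expresses confidence in that view.

```latex
\hat{\boldsymbol{\theta}}_{\text{MAP}}
  = \arg\max_{\boldsymbol{\theta}}
    \Big[\, \log p(\mathbf{y} \mid X, \boldsymbol{\theta})
          + \sum_{d=1}^{D} \log p(\ell_d) \Big],
\qquad
\log \ell_d \sim \mathcal{N}\!\left(\mu_d,\; s_d^{2}\right)
```

Here \(\boldsymbol{\theta}\) contains the length-scales \(\ell_1, \dots, \ell_D\) (and typically a signal variance), and \(p(\mathbf{y} \mid X, \boldsymbol{\theta})\) is the marginal likelihood, itself approximated when the likelihood is logistic. A short length-scale lets the latent score change quickly along that variable, so a prior pulling \(\ell_d\) down makes variable d more influential unless the data argue otherwise.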

Enabling explainable lending decisions

As well as our go-to CRM, Zanders also houses machine learning approaches to default modelling. Although these generate successful outcomes, machine learning does not explicitly explain the rationale behind a credit decision. In today’s volatile environment, an unexplainable solution can fall short of stakeholder and regulator expectations, as they increasingly want to understand the reasoning behind lending decisions at a forensic level.

Unlike the often ‘black-box’ nature of ML models, with GPC the path to a decision is both transparent and explainable. Firstly, the GPC model’s hyperparameters provide insights into the relevance of each explanatory variable and its interplay with the predicted outcome. In addition, the Bayesian framework sheds light on the uncertainty surrounding each hyperparameter, yielding a posterior distribution that quantifies confidence in these parameter estimates. This adds substantial risk assessment value, in contrast to the point estimates typically produced by score-based models or the deterministic predictions of ML models. In short, an essential advantage of the GPC over other approaches is its ability to generate outcomes that withstand the scrutiny of stakeholders and regulators.
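With an ARD kernel (one length-scale per explanatory variable), the length-scales can be read as relevance measures: a long length-scale means the latent score barely changes along that variable. The sketch below shows this reading; the variable names and length-scale values are hypothetical, not output of a fitted model, and ranking by inverse length-scale is one simple convention that assumes standardized inputs.

```python
import math


def ard_kernel(a, b, lengthscales, variance=1.0):
    # Squared-exponential ARD kernel: each feature has its own length-scale
    d2 = sum(((ai - bi) / l) ** 2 for ai, bi, l in zip(a, b, lengthscales))
    return variance * math.exp(-0.5 * d2)


def relevance_ranking(names, lengthscales):
    # Inverse length-scale as a relevance score (assumes standardized inputs)
    scores = {n: 1.0 / l for n, l in zip(names, lengthscales)}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


names = ["leverage", "interest_coverage", "company_age"]   # hypothetical variables
lengthscales = [0.5, 1.2, 25.0]                             # hypothetical fitted values

print(relevance_ranking(names, lengthscales))

# Shift one variable by one standard deviation at a time: the prior correlation
# between the two borrowers' latent scores drops sharply only for relevant variables
base = [0.0, 0.0, 0.0]
for i, name in enumerate(names):
    shifted = base.copy()
    shifted[i] = 1.0
    print(name, round(ard_kernel(base, shifted, lengthscales), 3))
```

In a full Bayesian treatment each length-scale would come with a posterior distribution rather than a single value, which is what allows the confidence in such a ranking to be quantified.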

A more holistic approach to probability of default modelling

In summary, if risk managers are to tackle the mounting complexity of evaluating probability of default, they need to approach it non-linearly and in a way that’s explainable at every level of the process. This is throwing the spotlight onto more holistic approaches, such as the Gaussian Process Classifier. Using this methodology allows for the incorporation of expert intuition as an additional layer to empirical evidence. It is transparent and accelerates calibration without forsaking performance. This presents an approach that not only incorporates the full complexity of credit risk but also adheres to the demands for model interpretability within the financial sector.

Are you interested in how you could use GPC to enhance your approach to default modelling? Contact Kyle Gartner for more information.

SAP Commodity Management: The Power of an Integrated Solution


Recent periods of commodity price volatility have brought commodity risk management into the spotlight at numerous companies where commodities constitute a substantial component of the final product, but where pricing arrangements prevented a substantial hit to the bottom line during past calm periods.

Commodity risk management is ingrained in the individual steps of the whole value chain, encompassing various business functions with different responsibilities. Purchasing is responsible for negotiating with suppliers; the sales or pricing department negotiates conditions with customers; and Treasury is responsible for negotiating with banks to secure financing and, where needed, hedge the commodity risk in the derivatives market. Controlling should have clarity over the complete value chain flow and make sure the margin is protected. Commodity risk management should be a top item on the CFO's agenda today.

SAP's Solution: A Comprehensive Overview

Each of these functions needs to be supported by adequate information system functionality, and the functions need to be well integrated with one another, bridging the physical supply chain flows with financial risk management.

SAP, as the leading provider of both ERP and treasury and risk management systems, offers numerous functionalities covering the individual parts of the process. The current solution is the result of almost two decades of functional evolution. The first functionalities were released in 2008 on the ECC 6.04 version to support commodity price risk in the metal business. The current portfolio supports industry solutions for agriculture and oil and gas, as well as the metal business, and support for power trading is being considered for the future. In recent S/4HANA releases, many components have been redeveloped to reflect experience from existing client implementations, to better cover the trading and hedging workflow, and to leverage SAP's most recent technological innovations, such as the SAP HANA database and the ABAP RESTful Application Programming Model (RAP).

Functionalities of SAP Commodity Management

Let us take you on a quick journey through the available functionalities.

The SAP Commodity Management solution covers commodity procurement and commodity sales in an end-to-end process, feeding the data for commodity risk positions to support commodity risk management as a dedicated function. In the logistics process, it offers both contracts and orders with commodity pricing components, which can be captured directly through the integrated Commodity Price Engine (CPE). In some commodity markets, products need to be invoiced before the final price is determined based on market prices. For this scenario, provisional and differential invoicing are available in the solution.
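The arithmetic behind provisional and differential invoicing is straightforward: a provisional invoice is issued at a provisional price, and once the final price is fixed a differential invoice settles the difference. The sketch below assumes a simple price-times-quantity valuation with hypothetical figures and names; in the solution the prices would come from the pricing conditions rather than being entered by hand.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Invoice:
    kind: str        # "provisional" or "differential"
    quantity: float  # e.g. tonnes
    price: float     # price per unit used on this invoice
    amount: float    # amount billed; a negative differential means a credit to the buyer


def provisional_invoice(quantity: float, provisional_price: float) -> Invoice:
    return Invoice("provisional", quantity, provisional_price, quantity * provisional_price)


def differential_invoice(prov: Invoice, final_price: float,
                         final_quantity: Optional[float] = None) -> Invoice:
    # Settle the difference between the final value and what was already invoiced;
    # the final quantity may differ from the provisional one (e.g. after weighing)
    qty = prov.quantity if final_quantity is None else final_quantity
    return Invoice("differential", qty, final_price, qty * final_price - prov.amount)


prov = provisional_invoice(100.0, 8_500.0)        # 100 t at a provisional 8,500 USD/t
diff = differential_invoice(prov, 8_320.0, 99.5)  # final price and weighed quantity
print(prov.amount, diff.amount)
```

The two invoices together always add up to the final quantity times the final price.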

The CPE allows users to define complex formulas based on various commodity market prices (futures or spot prices from various quotation sources), currency exchange translation rules, quality and delivery condition surcharges, and rounding rules. The CPE conditions control how the formula result is calculated from the term results, e.g., as a sum, as the highest value, or as provisional versus final term. Compound pricing conditions can be replicated using routines: splitting routines define how the formula quantity is split into multiple terms, while combination routines define how multiple terms are combined to get the final values.
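A CPE-style formula can be imitated in a few lines to show how term results, surcharges and rounding combine. Everything here is a hypothetical sketch, not the CPE's configuration model: the quotation values, the averaging per term, the USD-to-EUR conversion, the fixed surcharge, the equal-quantity splitting routine and the quantity-weighted combination routine are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from decimal import Decimal, ROUND_HALF_UP


@dataclass
class Term:
    quantity: Decimal
    quotes_usd: list[Decimal]   # quotations in the term's averaging period


def split_equal(total_qty: Decimal, quote_periods: list[list[Decimal]]) -> list[Term]:
    # Hypothetical splitting routine: equal quantity per averaging period
    share = total_qty / len(quote_periods)
    return [Term(share, q) for q in quote_periods]


def term_price_eur(term: Term, usd_per_eur: Decimal, surcharge_eur: Decimal) -> Decimal:
    # Average of the quotations, converted to EUR, plus a quality/delivery surcharge
    avg_usd = sum(term.quotes_usd) / len(term.quotes_usd)
    return avg_usd / usd_per_eur + surcharge_eur


def combine_weighted(terms: list[Term], prices: list[Decimal]) -> Decimal:
    # Hypothetical combination routine: quantity-weighted average of the term prices
    total_qty = sum(t.quantity for t in terms)
    return sum(t.quantity * p for t, p in zip(terms, prices)) / total_qty


def final_price(total_qty, quote_periods, usd_per_eur, surcharge_eur) -> Decimal:
    terms = split_equal(total_qty, quote_periods)
    prices = [term_price_eur(t, usd_per_eur, surcharge_eur) for t in terms]
    # Rounding rule: two decimals, half up
    return combine_weighted(terms, prices).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


periods = [
    [Decimal("9000"), Decimal("9100"), Decimal("9200")],   # term 1 quotations (USD/t)
    [Decimal("8800"), Decimal("8900")],                    # term 2 quotations (USD/t)
]
print(final_price(Decimal("100"), periods, Decimal("1.10"), Decimal("15")))
```

Decimal arithmetic is used so that the rounding rule is applied to an exact intermediate result rather than to binary floating-point noise.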

Pricing conditions from active contracts and orders for the physical delivery of commodities constitute the physical exposure position. Whether in procurement, in a dedicated commodity risk management department, or in the treasury department, real-time recognition and management of the company’s commodity risk positions rely on accurate and reliable data sources and evaluation functionality. This is provided by the SAP Commodity Risk Management solution. Leveraging the mature functionalities and components of the Treasury and Risk Management module, it allows paper trades to be managed to hedge the determined physical commodity risk position. Both listed and OTC commodity derivatives are supported. In the OTC area, swaps, forwards, and options, including Asian variants with average pricing periods, are well covered. These instruments fully integrate into the front office, back office, and accounting functionalities of the existing mature treasury module, allowing for integrated and seamless processing. Positions in paper deals can be included in the existing Credit Risk Analyzer for counterparty risk limit evaluation, as well as in the Market Risk Analyzer for complex market risk calculations and simulations.
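Conceptually, the commodity risk position nets the quantities from physical contracts and orders against the paper deals entered to hedge them. The sketch below aggregates such a position per commodity and delivery month; the record layout and the sign convention (purchases and bought derivatives long, sales and sold derivatives short) are our assumptions, not SAP's data model.

```python
from collections import defaultdict

# Hypothetical records: (source, commodity, delivery month, signed quantity in tonnes)
physical = [
    ("purchase_contract", "COPPER", "2025-03", 500.0),
    ("sales_order", "COPPER", "2025-03", -200.0),
    ("purchase_contract", "COPPER", "2025-04", 300.0),
]
paper = [
    ("commodity_swap", "COPPER", "2025-03", -250.0),  # sold swap hedging the net long position
    ("future", "COPPER", "2025-04", -300.0),
]


def commodity_position(physical, paper):
    pos = defaultdict(lambda: {"physical": 0.0, "paper": 0.0})
    for _, commodity, month, qty in physical:
        pos[(commodity, month)]["physical"] += qty
    for _, commodity, month, qty in paper:
        pos[(commodity, month)]["paper"] += qty
    # "open" is the part of the physical exposure not offset by paper deals
    return {k: {**v, "open": v["physical"] + v["paper"]} for k, v in sorted(pos.items())}


for key, row in commodity_position(physical, paper).items():
    print(key, row)
```

March is left with 50 t unhedged, while April is fully covered by the futures position.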

Managing Commodity Exposures

Physical commodity exposure and paper deals are bundled together via the harmonized commodity master data Derivative Contract Specification (DCS), representing individual commodities traded on specific exchanges or spot markets. It allows for translating the volume information of the physical commodity to traded paper contracts and price quotation sources.
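The translation a DCS enables can be illustrated with a unit conversion and a contract size: the physical quantity is converted into the traded unit and divided by the lot size to obtain the number of paper contracts. The `DerivativeContractSpec` record below is a hypothetical stand-in for the master data, and the exchange name, quotation source and 25-tonne lot are illustrative values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DerivativeContractSpec:
    # Hypothetical stand-in for DCS master data
    commodity: str
    exchange: str
    contract_size: float   # quantity per lot, in the traded unit
    traded_unit: str
    quote_source: str


# Conversion factors to metric tonnes (assumed subset)
TO_TONNES = {"t": 1.0, "kg": 0.001, "lb": 0.00045359237}


def lots_for_exposure(quantity: float, unit: str, dcs: DerivativeContractSpec) -> tuple[int, float]:
    """Whole lots covering a physical quantity (rounded down), plus the unhedged residual."""
    qty_traded = quantity * TO_TONNES[unit] / TO_TONNES[dcs.traded_unit]
    lots = int(qty_traded // dcs.contract_size)
    residual = qty_traded - lots * dcs.contract_size
    return lots, residual


copper = DerivativeContractSpec("Copper", "Example Exchange", 25.0, "t", "EXAMPLE_CU_OFFICIAL")
print(lots_for_exposure(1_010_000.0, "kg", copper))   # physical volume captured in kg
```

Rounding down avoids over-hedging; whether a company rounds down, up or to the nearest lot is a policy choice.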

In companies with extensive derivative positions, broker statement reconciliation can be automated with the recently introduced SAP Broker Reconciliation for Commodity Derivatives. This cloud-based solution is natively integrated with the SAP back end to retrieve the derivative positions. It automatically imports electronic broker statements and automates the reconciliation process, so that deviations can be investigated and resolved with less human intervention.
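At its core, the reconciliation compares positions per contract and expiry between the internal books and the broker statement and reports deviations for investigation. The following is a minimal sketch of that comparison with hypothetical keys and quantities; it says nothing about how SAP Broker Reconciliation for Commodity Derivatives is implemented or which statement formats it reads.

```python
def reconcile(internal: dict, broker: dict, tolerance: float = 0.0) -> list[tuple]:
    """Return (key, internal_qty, broker_qty, difference) for every mismatch."""
    breaks = []
    for key in sorted(set(internal) | set(broker)):
        ours, theirs = internal.get(key, 0.0), broker.get(key, 0.0)
        if abs(ours - theirs) > tolerance:
            breaks.append((key, ours, theirs, theirs - ours))
    return breaks


# Positions in lots, keyed by (contract, expiry); values are hypothetical
internal = {("CU", "2025-03"): 40, ("CU", "2025-04"): 12, ("AL", "2025-03"): -5}
broker = {("CU", "2025-03"): 40, ("CU", "2025-04"): 10, ("ZN", "2025-03"): 3}
for b in reconcile(internal, broker):
    print(b)
```

Positions missing on either side are treated as zero, so a deal booked only internally or only at the broker also shows up as a break.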

To support centralized hedging with listed derivatives, the Derivative Order and Trade execution component has been introduced. It supports a workflow in which an internal organizational unit raises a Commodity Order request, which in turn is reviewed and then fully or partially fulfilled by the trader in the external market.
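The request-and-fill workflow can be modelled as a small state machine: an order request is reviewed, then filled in one or more executions until the requested quantity is covered. The class and status values below are hypothetical labels for the illustration, not the component's actual statuses.

```python
from dataclasses import dataclass, field


@dataclass
class CommodityOrder:
    requester: str
    lots: int
    status: str = "requested"   # requested -> approved -> partially_filled -> filled
    fills: list = field(default_factory=list)

    def approve(self) -> None:
        if self.status != "requested":
            raise ValueError("only requested orders can be approved")
        self.status = "approved"

    def fill(self, lots: int, price: float) -> None:
        # The trader executes part or all of the open quantity in the external market
        if self.status not in ("approved", "partially_filled"):
            raise ValueError("order must be approved before execution")
        if lots <= 0 or lots > self.open_lots:
            raise ValueError("fill must be positive and within the open quantity")
        self.fills.append((lots, price))
        self.status = "filled" if self.open_lots == 0 else "partially_filled"

    @property
    def open_lots(self) -> int:
        return self.lots - sum(q for q, _ in self.fills)

    @property
    def average_price(self) -> float:
        done = sum(q for q, _ in self.fills)
        if done == 0:
            raise ValueError("no executions yet")
        return sum(q * p for q, p in self.fills) / done


order = CommodityOrder("Plant NL01", 10)   # hypothetical requesting unit
order.approve()
order.fill(6, 9_120.0)
order.fill(4, 9_135.0)
print(order.status, order.open_lots, order.average_price)
```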

Innovations in SAP Commodity Management

Significant innovations were released in the S/4HANA 2022 version.

The Commodity Hedge Cockpit supports the trader view and hedging workflow.

In the area of OTC derivatives (namely commodity swaps and commodity forwards), the internal trading and hedging workflow can be supported by Commodity Price Risk Hedge Accounting. It allows for separating various hedging programs through Commodity Hedging areas and defining various Commodity Hedge books. Within the Hedge books, Hedge specifications allow for the definition of rules for concluding financial trades to hedge commodity price exposures, e.g., by defining delivery period rules, hedge quotas, and rules for order utilization sequence. Individual trade orders are defined within the Hedge specification. Intercompany (on behalf of) trading is supported by the automatic creation of intercompany mirror deals, if applicable.
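A hedge specification with a hedge quota boils down to a simple rule per delivery period: the target hedge is the quota times the exposure, and the order quantity is the gap to what is already hedged. The function below is a sketch under that assumption; the quota, the rounding to whole lots and the figures are illustrative, and real specifications can carry further rules such as an order utilization sequence.

```python
import math


def hedge_orders(exposure_by_period: dict, hedged_by_period: dict,
                 quota: float, lot_size: float) -> dict:
    """Lots to trade per delivery period to bring the hedge up to quota * exposure.

    Exposure and hedges are absolute quantities in the same unit; orders are
    rounded down to whole lots so the hedge never exceeds the quota.
    Over-hedged periods get zero orders (unwinding is out of scope here).
    """
    orders = {}
    for period, exposure in sorted(exposure_by_period.items()):
        target = quota * exposure
        gap = target - hedged_by_period.get(period, 0.0)
        orders[period] = max(math.floor(gap / lot_size), 0)
    return orders


exposure = {"2025-03": 1_000.0, "2025-04": 800.0, "2025-05": 600.0}  # tonnes
hedged = {"2025-03": 500.0, "2025-04": 700.0}
print(hedge_orders(exposure, hedged, quota=0.75, lot_size=25.0))
```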

Settings under the hedge book allow for automatically designating cash flow hedge relationships in accordance with IFRS 9 principles, documenting the hedge relationships, running effectiveness checks, using valuation functions, and generating hedge accounting entries. All these functions are integrated into the existing hedge accounting functionalities for FX risk available in SAP Treasury and Risk Management.
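For cash flow hedges, IFRS 9 (paragraph 6.5.11) adjusts the cash flow hedge reserve to the lower, in absolute amounts, of the cumulative gain or loss on the hedging instrument and the cumulative change in present value of the hedged expected cash flows since inception; the remaining gain or loss on the instrument is ineffectiveness recognized in profit or loss. The sketch below applies this "lower of" rule to hypothetical cumulative values. It ignores reclassifications out of the reserve, assumes the two changes move in opposite directions, and is not the calculation logic of SAP's hedge accounting.

```python
def cash_flow_hedge_split(cum_instrument: float, cum_hedged_item: float) -> tuple[float, float]:
    """Split the cumulative gain (+) or loss (-) on the hedging instrument into the
    amount held in the cash flow hedge reserve (OCI) and the ineffective part (P&L).

    cum_hedged_item is the cumulative change in present value of the hedged expected
    cash flows; only its magnitude is used ("lower of" in absolute amounts).
    """
    reserve_abs = min(abs(cum_instrument), abs(cum_hedged_item))
    reserve = reserve_abs if cum_instrument >= 0 else -reserve_abs
    ineffective = cum_instrument - reserve
    return reserve, ineffective


# Over-hedge: instrument gained 120, hedged cash flows lost 100 in present value
print(cash_flow_hedge_split(120.0, -100.0))
# Under-hedge: instrument gained 90, hedged cash flows lost 100, nothing goes to P&L
print(cash_flow_hedge_split(90.0, -100.0))
```

The asymmetry is intentional in the standard: an over-hedge produces ineffectiveness in profit or loss, while an under-hedge does not.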

The underlying physical commodity exposure can be uploaded as planned data reflecting the planned demand or supply from supply chain functions. The resulting commodity exposure can be further managed (revised, rejected, released), or additional commodity exposure data can be manually entered. If the physical commodity exposure leads to FX exposure, it can be handed over to the Treasury team via the automated creation of Raw exposures in Exposure Management 2.0.
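The hand-over to Treasury rests on a simple derivation: when a commodity is priced in a currency other than the entity's functional currency, the planned quantity times the expected price gives a foreign-currency cash flow. The sketch below derives such raw FX exposure records; the field names, the use of an expected (for example forward) price and the sign convention are assumptions, not the Exposure Management 2.0 data model.

```python
from dataclasses import dataclass


@dataclass
class CommodityExposure:
    company: str
    commodity: str
    period: str
    quantity: float        # positive = planned purchase, negative = planned sale
    price: float           # expected price per unit (assumption, e.g. a forward price)
    price_currency: str


@dataclass
class RawFxExposure:
    company: str
    currency: str
    period: str
    amount: float          # negative = outflow in that currency


def derive_fx_exposures(items, functional_currency: str) -> list[RawFxExposure]:
    out = []
    for e in items:
        if e.price_currency == functional_currency:
            continue   # no FX risk when priced in the functional currency
        # A planned purchase (positive quantity) creates a foreign-currency outflow
        out.append(RawFxExposure(e.company, e.price_currency, e.period, -e.quantity * e.price))
    return out


planned = [
    CommodityExposure("DE01", "COPPER", "2025-03", 200.0, 9_000.0, "USD"),
    CommodityExposure("DE01", "COPPER", "2025-04", -50.0, 9_050.0, "USD"),
    CommodityExposure("DE01", "GAS", "2025-03", 1_000.0, 40.0, "EUR"),
]
for fx in derive_fx_exposures(planned, "EUR"):
    print(fx)
```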

Modelled deals allow for capturing hypothetical deals with no impact on financial accounting. They allow for evaluating commodity price risk for use cases like exposure impact from production forecasts, mark-to-intent for an inventory position (time, location, product), and capturing inter-strategy or late/backdated deals.
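The distinction modelled deals make can be captured with a single flag: they count when the risk position is evaluated but are excluded from anything that produces accounting postings. The sketch below uses a hypothetical deal record to show both views side by side; names and quantities are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Deal:
    deal_id: str
    commodity: str
    quantity: float      # signed: long positive, short negative
    modelled: bool = False


def risk_position(deals, commodity, include_modelled=True):
    return sum(d.quantity for d in deals
               if d.commodity == commodity and (include_modelled or not d.modelled))


def deals_for_posting(deals):
    # Modelled deals are hypothetical and never reach financial accounting
    return [d for d in deals if not d.modelled]


deals = [
    Deal("SW-100", "COPPER", -250.0),
    Deal("INV-MTI", "COPPER", 400.0, modelled=True),   # mark-to-intent inventory position
    Deal("FC-2025", "COPPER", 150.0, modelled=True),   # production forecast impact
]
print(risk_position(deals, "COPPER"), risk_position(deals, "COPPER", include_modelled=False))
print([d.deal_id for d in deals_for_posting(deals)])
```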

Even though a separate team is usually responsible for commodity risk management (front office), bundling the back office and accounting operations under an integrated middle and back office team can substantially streamline daily operations.