…in times of crises.

Much has been written about COVID-19, the related shutdowns, their financial impact and the high levels of disruption they caused. Although the direct effects of the virus have probably subsided, corporates are still facing its direct and indirect consequences today.

As the economy attempts to return to business as usual, corporates' approach to working capital management will require more planning, forecasting and targeted measures.

The aftermath of a global shock and its impact on supply can be catastrophic for certain industries. The pandemic exposed the risks of highly concentrated supplier bases, as demonstrated by rapidly shifting supply and demand in the semiconductor industry, which caused global shortages and delays in many related supply chains, and by the ongoing lockdowns in China.

Reviewing supply management strategies

In addition to the ongoing U.S.-China trade war, which reignited under the Trump administration, the war in Ukraine and the associated sanctions and embargoes have further exposed the vulnerability of global supply chains. Structural shortages in commodity markets, especially energy, are increasing inflationary pressures. These effects are rapidly building downstream in many supply chains, as the rising producer price indices show, and can be felt by anyone who has recently visited a supermarket. Rising prices further increase uncertainty, which affects interest rates and the availability of funds.

It is unlikely that global supply chains will unclog significantly any time soon. Many corporates are reviewing their supply management strategies and practices: the longer a supply chain, the higher the risk of disruption. Western economies have become overly reliant on goods produced and sourced in Asia, and with rising geopolitical tensions we have already seen countries and multinationals shift their sourcing to mitigate these risks. A prominent example is Intel's investment in semiconductor plants in Germany.

Increased requirement

From a working capital perspective, a higher interest rate environment calls for more efficient working capital performance: corporates want to offset the higher cost of borrowing by improving their cash flows and reducing their capital requirements. Borrowing is becoming more expensive for both short- and long-term loans, and because many lines of credit are repriced monthly, higher interest rates hit corporates almost immediately.

Higher interest rates raise businesses' cost of capital and negatively affect cash flow. Even if a corporate is not highly leveraged, its upstream and downstream operational credit, namely accounts receivable and accounts payable, affects its liquidity. With higher inflation, sales figures rise even at constant quantities, which means a higher trade receivables balance. Higher purchase prices have a similar effect on the total inventory on the company's balance sheet. For companies with positive net working capital, where accounts receivable plus inventory exceed accounts payable, the extra investment needed in current assets translates into a higher working capital financing requirement.
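To make the inflation effect concrete, the sketch below estimates receivables, inventory and payables from annual flows and days outstanding, and compares net working capital before and after a uniform price increase. The figures, the 365-day convention, the assumption that purchases equal cost of goods sold and the assumption that all prices rise by the same percentage are illustrative choices, not data from this article.

```python
from dataclasses import dataclass


@dataclass
class TradeProfile:
    """Annual flows and payment/stock days for a simplified (hypothetical) company."""
    sales: float   # annual revenue
    cogs: float    # annual cost of goods sold (purchases assumed equal to COGS)
    dso: float     # days sales outstanding
    dio: float     # days inventory outstanding
    dpo: float     # days payables outstanding


def net_working_capital(p: TradeProfile, days_in_year: int = 365) -> float:
    """Receivables + inventory - payables, each estimated from flows and days."""
    receivables = p.sales * p.dso / days_in_year
    inventory = p.cogs * p.dio / days_in_year
    payables = p.cogs * p.dpo / days_in_year
    return receivables + inventory - payables


def with_inflation(p: TradeProfile, inflation: float) -> TradeProfile:
    """Same volumes and payment terms, but all prices scaled by (1 + inflation)."""
    factor = 1 + inflation
    return TradeProfile(p.sales * factor, p.cogs * factor, p.dso, p.dio, p.dpo)


base = TradeProfile(sales=100_000_000, cogs=70_000_000, dso=60, dio=45, dpo=40)
inflated = with_inflation(base, inflation=0.08)

extra_funding = net_working_capital(inflated) - net_working_capital(base)
print(f"Base NWC:     {net_working_capital(base):,.0f}")
print(f"Inflated NWC: {net_working_capital(inflated):,.0f}")
print(f"Additional working capital to finance: {extra_funding:,.0f}")
```

Because net working capital scales linearly with prices when volumes and days are unchanged, an 8% price increase raises the financing need by 8% for a company with positive net working capital, even though nothing about its operations has changed.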

These are some of the main reasons why, in these challenging times, focusing on working capital practices, preserving liquidity and optimizing cash flow are paramount.

Working Capital Policies

Treasury is not responsible for working capital management in every corporate. However, because Treasury must ensure there is sufficient cash to meet all obligations, the impact ultimately lands with Treasury.

Working capital management concerns how firms manage their current assets and liabilities to ensure continuous day-to-day operations. An important aspect of working capital management is setting the policy, or set of rules, that best suits a specific corporate or industry.

A working capital policy comprises two elements: (1) the level of investment in current assets and (2) the means of financing current assets. When selecting the most suitable policy, firms try to reach an optimal level of working capital based on the trade-off between risk and return.

When examining these Working Capital Policies, three general approaches can be distinguished:

- The conservative policy, where firms maintain high levels of working capital (high investment in working capital) and rely more on long-term than on short-term financing, decreasing both risk and return.

- The aggressive working capital policy, where a corporate's financing mix leans more towards short-term capital to finance its investments, reflecting lower structural investment in working capital and increasing both risk and return.

- The hedging or matching policy, where temporary current assets are matched with short-term liabilities and the permanent level of current assets is financed with long-term resources. The investment in working capital may thus increase or decrease with the firm's activity.

Corporates that employ the conservative or hedging approach are least likely to be affected by the recent rise in interest rates, as their cost of capital has been locked in for a longer period. Companies that have taken a more aggressive stance are among the first to see the inflationary impact on their overall cost of capital. Although working capital policies are mainly driven by business strategy and industry complexity, it may be worthwhile for businesses to reconsider them now that capital structure is becoming a more important factor.
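The different rate sensitivity of the three policies can be illustrated with a stylised twelve-month profile of current assets. The sketch below is an illustration under stated assumptions, not a prescribed model: the asset profile is hypothetical, long-term funding is assumed to be at a fixed rate, short-term funding floats, idle surplus cash earns nothing, and the 80% long-term coverage of the permanent base in the aggressive case is an arbitrary choice.

```python
# Month-end current assets (hypothetical, in millions): a permanent base plus seasonal peaks.
current_assets = [50, 52, 55, 60, 68, 75, 80, 74, 65, 58, 54, 51]


def long_term_funding(policy: str, assets: list[float]) -> float:
    """Amount of current assets financed with fixed-rate long-term funding."""
    permanent = min(assets)
    if policy == "conservative":
        return max(assets)        # long-term funds cover even the seasonal peak
    if policy == "matching":
        return permanent          # permanent part long-term, fluctuating part short-term
    if policy == "aggressive":
        return 0.8 * permanent    # hypothetical: part of the permanent base funded short-term
    raise ValueError(f"unknown policy: {policy}")


def annual_interest(policy: str, assets: list[float], lt_rate: float, st_rate: float) -> float:
    """Annual interest cost; surplus long-term cash is assumed to earn nothing."""
    lt = long_term_funding(policy, assets)
    st_avg = sum(max(a - lt, 0.0) for a in assets) / len(assets)   # average short-term borrowing
    return lt * lt_rate + st_avg * st_rate


for policy in ("conservative", "matching", "aggressive"):
    before = annual_interest(policy, current_assets, lt_rate=0.03, st_rate=0.01)
    after = annual_interest(policy, current_assets, lt_rate=0.03, st_rate=0.04)
    print(f"{policy:>12}: cost {before:.2f} -> {after:.2f} (+{after - before:.2f})")
```

With these assumptions the aggressive mix is cheapest while short-term rates are low but shows the largest cost increase when they rise, whereas the conservative mix pays more up front and is insulated: the risk-return trade-off described above.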

Working capital performance is often measured by the Cash Conversion Cycle (CCC): Days Sales Outstanding (DSO) + Days Inventory Outstanding (DIO) – Days Payables Outstanding (DPO). It indicates how long each net unit of currency invested is tied up in the production and sales process before it is converted into cash received. It considers both the time needed to collect receivables and the time the company has to pay its bills without incurring interest or penalties. The cash conversion cycle is optimized (lowered) by increasing DPO and decreasing DSO and DIO.
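As a worked example of the formula, the sketch below derives DSO, DIO and DPO from balance sheet and income statement figures and combines them into the CCC. It assumes the common conventions of revenue for DSO, cost of goods sold for DIO and DPO, and a 365-day year; companies differ in these conventions (for example, using average balances or purchases instead of COGS), and the figures are hypothetical.

```python
def days_outstanding(balance: float, annual_flow: float, days: int = 365) -> float:
    """Convert a balance sheet position into days of the related annual flow."""
    return balance / annual_flow * days


def cash_conversion_cycle(receivables, inventory, payables, revenue, cogs, days=365):
    dso = days_outstanding(receivables, revenue, days)   # days to collect from customers
    dio = days_outstanding(inventory, cogs, days)        # days goods sit in stock
    dpo = days_outstanding(payables, cogs, days)         # days taken to pay suppliers
    return dso, dio, dpo, dso + dio - dpo


dso, dio, dpo, ccc = cash_conversion_cycle(
    receivables=12_000_000, inventory=9_000_000, payables=7_500_000,
    revenue=73_000_000, cogs=54_750_000,
)
print(f"DSO {dso:.1f} + DIO {dio:.1f} - DPO {dpo:.1f} = CCC {ccc:.1f} days")
```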

While profitability is often the key focus of many companies and managers, working capital and cash conversion are the heart and lifeblood of a company. For many Treasurers and CFOs it is an undeniable truth: 'Cash is king'. Businesses need cash to soldier on, build strategic alliances, pursue initiatives that strengthen their competitive position over time and increase future profitability.

Unique times

Over the last decade, corporates have grown accustomed to low, and even negative, interest rates and ample availability of cash in the market, and many have taken an aggressive stance in their working capital investment strategies, especially corporates operating in lower-margin, highly competitive industries. The recent challenges affect how a corporate should manage its working capital and may prompt it to reconsider its working capital policy.

To mitigate the risk of disrupted supply chains, a corporate might first choose to hold more inventory, which increases the DIO component of its cash conversion cycle and, with it, its capital requirements. Although generally suboptimal, short-term and flexible credit was a sensible temporary way for companies with an aggressive stance to overcome these challenges. In times of rising interest rates, however, a corporate's natural reaction is to decrease its overall capital requirements and reduce the effect of the interest component on its margin metrics.
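A rough calculation shows why these two reactions pull in opposite directions. The sketch below, using hypothetical figures, estimates the extra cash tied up when DIO is raised as a safety buffer and the annual cost of financing it at a pre-hike and a post-hike short-term rate. It assumes the buffer is fully funded with short-term debt and ignores storage, insurance and obsolescence costs.

```python
def extra_inventory(cogs: float, extra_dio_days: float, days: int = 365) -> float:
    """Additional inventory balance from holding extra_dio_days more stock."""
    return cogs * extra_dio_days / days


def annual_financing_cost(amount: float, rate: float) -> float:
    """Simple annual interest on a balance funded with short-term debt."""
    return amount * rate


cogs = 200_000_000   # hypothetical annual cost of goods sold
buffer = extra_inventory(cogs, extra_dio_days=20)

# Hypothetical short-term funding rates before and after central bank hikes.
for label, rate in (("before rate hikes", 0.015), ("after rate hikes", 0.05)):
    cost = annual_financing_cost(buffer, rate)
    print(f"{label}: buffer {buffer:,.0f} costs {cost:,.0f} per year")
```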

Now that the challenges of disrupted supply chains and rising interest rates collide, their unique combination presents extra complications for corporates and their Treasurers, as the typical solution to one problem is counterproductive in tackling the other. Furthermore, corporates bound by leverage covenants face a double challenge: these circumstances simultaneously lower profitability and extend the balance sheet, worsening their leverage metric and consequently increasing interest charges.
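The double effect on leverage covenants can be made concrete with a net debt / EBITDA ratio, a common but by no means universal covenant definition. In the hypothetical sketch below, extra inventory funded with debt raises net debt while margin pressure lowers EBITDA; the covenant ceiling and the margin ratchet grid that links leverage to the loan margin are illustrative assumptions, not terms from any actual facility.

```python
def leverage(net_debt: float, ebitda: float) -> float:
    """Net debt / EBITDA, one common leverage covenant metric."""
    return net_debt / ebitda


# Hypothetical margin ratchet: (maximum leverage for the bucket, margin over the base rate).
MARGIN_GRID = [(1.5, 0.0150), (2.5, 0.0200), (3.5, 0.0275)]
COVENANT_MAX_LEVERAGE = 3.5   # hypothetical covenant ceiling


def loan_margin(lev: float) -> float:
    """Margin of the first grid bucket whose ceiling covers the leverage."""
    for ceiling, margin in MARGIN_GRID:
        if lev <= ceiling:
            return margin
    raise ValueError("leverage above the highest bucket: covenant breached")


before = leverage(net_debt=240.0, ebitda=100.0)
after = leverage(net_debt=240.0 + 30.0, ebitda=100.0 - 10.0)   # more debt, lower profit

for label, lev in (("before", before), ("after", after)):
    status = "ok" if lev <= COVENANT_MAX_LEVERAGE else "BREACH"
    print(f"{label}: {lev:.2f}x, margin {loan_margin(lev):.2%}, covenant {status}")
```

Neither change on its own moves the company out of its margin bucket in this example, but together they push leverage from 2.4x to 3.0x and trigger a higher margin.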

What should the treasurer do?

Whereas in good times working capital management and its financing are 'just' one component of the business and corporates can concentrate on the happy flow, crises challenge us to think differently and creatively to overcome our problems. Instead of pushing the issues up and down the supply chain in the short term, a corporate should reassess its capital structure and financing mix to check their robustness. In times like these, it is the Treasurer's role to raise company-wide awareness of the impact, gain better insight into all related processes and find alternatives.

Businesses are looking for ways to free up additional funding for their working capital. It is vital that Treasurers use every tool available to convert sales into cash: the longer cash remains uncollected, the longer it effectively funds another business rather than your own.

Improve data gathering and information

The starting point of successful working capital management is better insight into cash flows. Treasurers should invest time and resources in optimizing cash flow forecasting using the correct data and metrics, which is a challenge in itself. In many instances, the tools in use do not take into account deviations from payment terms at client level or the actual flows of cash. Corporates should cross-check the credit terms captured in their systems against actual flows to improve their forecasting. Under normal circumstances, reconciling the two is already important, but even more so in times of uncertainty that could affect your counterparties and their payment behavior.
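Cross-checking captured credit terms against actual payment behavior can start very simply. The sketch below, using hypothetical invoice records, computes each client's average payment delay relative to the contractual due date and shifts expected receipt dates in the forecast accordingly. It is a deliberately simple assumption-based approach; a real implementation would typically weight delays by amount, exclude disputed invoices and use a longer history.

```python
from collections import defaultdict
from datetime import date, timedelta
from statistics import mean

# Hypothetical paid invoices: (client, invoice date, contractual terms in days, actual payment date)
history = [
    ("ACME", date(2022, 5, 1), 30, date(2022, 6, 14)),
    ("ACME", date(2022, 6, 1), 30, date(2022, 7, 12)),
    ("Beta", date(2022, 5, 10), 60, date(2022, 7, 9)),
    ("Beta", date(2022, 6, 10), 60, date(2022, 8, 9)),
]


def average_delay_by_client(records):
    """Average number of days paid after the contractual due date, per client."""
    delays = defaultdict(list)
    for client, invoice_date, terms, paid in records:
        due = invoice_date + timedelta(days=terms)
        delays[client].append((paid - due).days)
    return {client: mean(d) for client, d in delays.items()}


def expected_receipt(client, invoice_date, terms, delays):
    """Contractual due date shifted by the client's average delay, rounded to whole days
    (no shift for clients without history)."""
    due = invoice_date + timedelta(days=terms)
    return due + timedelta(days=round(delays.get(client, 0)))


delays = average_delay_by_client(history)
print(delays)
print(expected_receipt("ACME", date(2022, 9, 1), 30, delays))
```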

While continuously reassessing the credit risk of clients and suppliers is standard practice for a credit insurer, corporates quite often overlook it. Shipping an order across the globe to your once-best customer can become a costly affair when you are unaware of its most recent financial difficulties. It is important to have this insight into your supply chain to avoid unpleasant surprises.

Without precise and complete insight into when cash is coming in and when it needs to be reinvested, pinpointing your future capital requirement, and where to find the funds, is no simple matter.

Supply Chain Finance solutions to consider

While improving insights, Treasurers should also investigate flexible solutions specifically designed to overcome short-term funding shortages such as factoring and reverse factoring programs.

Factoring programs are initiated from the seller’s perspective within a supply chain and enable the corporate to sell its accounts receivable balances to a financial institution or an investing firm (the factor) for cash advances. This immediately improves the cash conversion cycle by decreasing the DSO component.

Reverse factoring caters to the other side of the supply chain: it is initiated from the buyer's perspective and focuses on financing the downstream flow. The factor pays the supplier's invoices, either early or on the originally negotiated credit terms. Because the program leverages the buyer's creditworthiness, smaller suppliers might benefit: the discount on the funds they receive will be lower than under factoring programs they initiate themselves, and the buyer could be granted extended credit terms. Using reverse factoring therefore increases the buyer's effective DPO while decreasing the supplier's DSO, lowering the cash conversion cycle of both parties.

Whether factoring or reverse factoring is used, the highest mutual benefit is achieved when the program is initiated by the party with the higher credit rating, as its cost of capital is generally lower.
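The effect on DSO and the advantage of letting the stronger credit initiate the program can be illustrated with simple discount arithmetic. The sketch below assumes the factor charges simple interest at a base rate plus a credit spread for the days between early payment and the due date, on an actual/360 basis, with factoring priced off the supplier's standing and reverse factoring off the buyer's. All rates, spreads and the invoice are hypothetical, and real programs may add fees and advance rates.

```python
def early_payment_discount(amount, annual_rate, days_financed, basis=360):
    """Simple-interest discount a factor deducts for paying days_financed early."""
    return amount * annual_rate * days_financed / basis


invoice = 1_000_000
terms_days = 90      # originally negotiated credit terms
paid_on_day = 10     # supplier receives the discounted cash on day 10
days_financed = terms_days - paid_on_day

base_rate = 0.03
supplier_spread = 0.035   # hypothetical spread for a smaller, weaker-rated supplier
buyer_spread = 0.010      # hypothetical spread for a large, well-rated buyer

# Factoring initiated by the supplier: priced off the supplier's (weaker) credit standing.
factoring_cost = early_payment_discount(invoice, base_rate + supplier_spread, days_financed)
# Reverse factoring initiated by the buyer: priced off the buyer's (stronger) credit standing.
reverse_cost = early_payment_discount(invoice, base_rate + buyer_spread, days_financed)

print(f"Supplier's discount, factoring:         {factoring_cost:,.2f}")
print(f"Supplier's discount, reverse factoring: {reverse_cost:,.2f}")
# Under either program the supplier's DSO on this invoice drops from 90 to 10 days;
# under reverse factoring the buyer still pays on day 90, or later if terms are extended.
print(f"Supplier DSO on this invoice: {terms_days} -> {paid_on_day} days")
```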

As with everything in this world, there is no such thing as a free lunch in either case. Payments are discounted and financing costs are similar to those of short-term loans, but these programs offer increased flexibility on top of existing financial debt. Many of them are very user-friendly, and most accompanying portals allow direct integration with many ERP systems to upload invoices for factoring automatically.

Collaborate with key partners in your supply chain

Seek collaboration within your supply chain and align planning and joint initiatives. When considering supply chain finance solutions, do not only investigate initiating your own programs, but also reach out to your key suppliers and customers to see whether they are open to collaboration. Your company may be able to join existing (reverse) factoring programs initiated by your business partners.

Apart from programs in which a third party finances the supply chain, static and dynamic discounting are other solutions worth investigating. They allow a less cash-constrained corporate to pay its suppliers early at a discounted purchase price. With static discounting, the discount rate is set in advance, whereas with the dynamic variant, the discount is adjusted to the actual payment date.
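The difference between the two discounting variants can be sketched as follows. The static case uses a fixed discount for payment within a set window (the familiar "2/10 net 30" convention, used here purely as an example); the dynamic case assumes a hypothetical annual rate of 10%, applied pro rata on an actual/365 basis to the number of days paid before the net due date. Both sets of terms are illustrative assumptions.

```python
def static_discount(amount: float, days_after_invoice: int,
                    discount_rate: float = 0.02, window_days: int = 10) -> float:
    """Fixed discount if paid within the window, otherwise none ('2/10 net 30' style)."""
    return amount * discount_rate if days_after_invoice <= window_days else 0.0


def dynamic_discount(amount: float, days_after_invoice: int,
                     net_days: int = 30, annual_rate: float = 0.10, basis: int = 365) -> float:
    """Discount proportional to the number of days paid before the net due date."""
    days_early = max(net_days - days_after_invoice, 0)
    return amount * annual_rate * days_early / basis


invoice = 100_000
for paid_on_day in (5, 10, 20, 30):
    s = static_discount(invoice, paid_on_day)
    d = dynamic_discount(invoice, paid_on_day)
    print(f"day {paid_on_day:>2}: static {s:>8,.2f}  dynamic {d:>8,.2f}")
```

A static discount rewards payment anywhere inside the window equally and nothing after it, while the dynamic variant gives the buyer a reason to pay early on any day. Note that 2% for paying 20 days early corresponds to a simple annualised return of more than 35%, which is why a cash-rich buyer may find early payment attractive.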

Beyond optimizing your cash inflow and/or honoring the negotiated payment terms, these solutions can also strengthen business relationships in the supply chain through improved cooperation.

Conclusion

At a time when disrupted supply chains and rising interest rates present corporates and their Treasurers with a unique combination of challenges, it is important to gain insight into the particular position and needs of your company. Although overcoming supply chain disruption by increasing inventory naturally runs counter to lowering capital requirements and financing costs, many flexible supply chain finance solutions exist to help a corporate address its cash constraints and optimize its financing mix.

To gain a better understanding of your company’s working capital position, the potential risks and financing possibilities, Zanders can help you gain insight, explore supply chain finance solutions and make educated decisions.

On 20 October 2022, the European Banking Authority (EBA) published the final package of guidelines for the management of Interest Rate Risk in the Banking Book (IRRBB) and the Credit Spread Risk in the Banking Book (CSRBB). The package includes:

- Final guidelines for IRRBB and CSRBB (link)i

- Regulatory Technical Standards (RTS) on the IRRBB supervisory outlier tests (SOT), which updates the SOT for the Economic Value of Equity (EVE) and introduces an SOT for Net Interest Income (NII) (link)ii.

- RTS on the IRRBB standardized approach, which should, for example, be applied when a competent authority deems a bank's internal model for IRRBB management unsatisfactory (link).

Compared to the draft versions, published in December 2021, the most notable topics and changes are:

- CSRBB: Despite considerable concerns raised by many banks in response to the consultation papers, no significant changes have been made to the draft CSRBB guidelines. Hence, compared to the CSRBB guidelines of 2019, the scope of CSRBB is extended to the whole balance sheet, unless the bank can argue that a certain portfolio is not subject to credit spread risk.

- IRRBB: The EBA still expects banks to measure NII at risk including the market value changes of positions accounted for at fair value on the balance sheet.

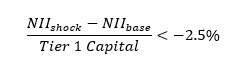

The EBA relaxed the constraint, introduced in the draft guidelines, on the maximum weighted average repricing date (of 5 years) for retail and non-financial non-maturing deposits (NMD). Retail deposits with economic or fiscal constraints on withdrawal are now exempted from this specific guideline. The guideline on deposits taken from financial institutions has also been relaxed: the maximum weighted average repricing date for operational deposits (following the definition in the LCR regulation) is changed from overnight to 5 years in the final guidelines, while the weighted average repricing date for other deposits taken from financial institutions remains constrained to overnight repricing.

- NII SOT: In the consultation paper, the EBA requested feedback on different approaches to reporting the NII SOT. In the final document, the EBA chose to define the NII SOT as a comparison of the decline in 1-year NII with Tier 1 capital, implying that the decline in the 1-year NII must be below 2.5% of Tier 1 capital. Further, the EBA decided that the NII SOT should be calculated based on a narrow definition, where NII is defined as the difference between interest income and interest expense. Market value changes in instruments accounted for at fair value, and interest-rate-sensitive fees and commissions, are excluded from the scope of the NII SOT.

- EVE SOT: In the consultation paper, the EBA used the swap curve as an example of a risk-free curve that can be used for discounting. This has now been changed to the OIS curve. The EBA indicates, however, that banks can choose the risk-free yield curve according to their business model, provided that it is deemed appropriate. This leaves room for banks to keep using a non-overnight swap curve.

- IRRBB standardized approach: The most notable change compared to the consultation paper is that the EBA decided not to include the impact of instruments accounted for at fair value in the standardized NII (at risk). In addition, some minor clarifications have been included.
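As a simplified illustration of the two outlier tests discussed above, the sketch below discounts net banking-book cash flows with a risk-free zero curve (a flat OIS-style curve in the example) to measure the change in EVE under parallel shocks, and checks the NII SOT against the 2.5% of Tier 1 capital threshold mentioned above. The flat curve, the ±200 bp shocks, continuous compounding, the scenario names and all figures are assumptions for illustration; the prescribed EBA scenarios, floors and aggregation rules are not reproduced here.

```python
from math import exp


def eve(cashflows: dict[float, float], zero_rate, shock: float = 0.0) -> float:
    """Present value of net banking-book cash flows {time in years: amount},
    discounted continuously at the (shocked) risk-free zero rate."""
    return sum(cf * exp(-(zero_rate(t) + shock) * t) for t, cf in cashflows.items())


def nii_sot_breached(nii_changes: dict[str, float], tier1: float, threshold: float = 0.025) -> bool:
    """True if the largest 1-year NII decline across scenarios exceeds threshold x Tier 1."""
    worst_decline = max(-min(nii_changes.values()), 0.0)
    return worst_decline > threshold * tier1


def ois_curve(t: float) -> float:
    return 0.02   # hypothetical flat OIS zero curve


net_cashflows = {0.5: 400.0, 1.0: 300.0, 5.0: -200.0, 10.0: 900.0}   # hypothetical, in millions

base = eve(net_cashflows, ois_curve)
for shock in (+0.02, -0.02):
    print(f"Parallel {shock:+.0%}: delta EVE = {eve(net_cashflows, ois_curve, shock) - base:,.1f}")

# Hypothetical 1-year NII changes (narrow definition: interest income minus interest expense).
nii_changes = {"parallel_up": 12.0, "parallel_down": -35.0}
print("NII SOT breached:", nii_sot_breached(nii_changes, tier1=1_200.0))
```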

The IRRBB guidelines are effective as of 30 June 2023 and the CSRBB guidelines as of 31 December 2023. When banks must apply the RTS on SOT depends on approval by the European Commission.

i Earlier, Zanders published an article describing the main changes for the measurement of IRRBB and CSRBB based on the draft publication.

ii Earlier, Zanders published an article describing the main changes for reporting the EVE and NII SOT based on the draft publication.

ESG-related derivatives: regulation & valuation

The most popular financial instruments in this regard are sustainability-linked loans and bonds, but more recently corporates have also started to focus on ESG-related derivatives. In short, these derivatives give corporates a financial incentive to improve their ESG performance, for instance by linking the derivative to a sustainability KPI. This article aims to provide some guidance on the impact of regulation around ESG-related derivatives.

As covered in our first ESG-related derivatives article, a broad spectrum of instruments is included in this asset class, the most innovative ones being emission trading derivatives, renewable energy and fuel derivatives, and sustainability-linked derivatives (SLDs).

Currently, market participants and regulatory bodies are assessing whether, and how, new types of derivatives fit into existing derivatives regulation. European and UK regulators are at the forefront of this regulatory review, aiming to foster activity and ensure the safety of financial markets. Since it is especially challenging for market participants to understand the impact of these regulations and the valuation implications of SLDs, we aim to provide guidance to corporates on these matters, with a special focus on the implications for corporate treasury.

Categorization & classification

When issuing an SLD, it is important to understand which category it falls into: whether the SLD incorporates the KPIs and the resulting KPI-linked cashflows in the derivative instrument itself (category 1), or whether the KPIs and related cashflows are set out in a separate agreement that refers to the underlying derivative transaction to set the reference amount for computing the KPI-linked cashflow (category 2). This categorization makes it easier to understand which regulations apply to the SLD and what their implications are.

In general, a category 1 SLD will be classified as a derivative under European and UK regulations, and as a swap under US regulations, if the underlying financial contract is already classified as such. Adding KPI elements to the underlying financial instrument is unlikely to change that classification.

Whether a category 2 SLD is classified as a derivative or swap is somewhat more complicated. In Europe, this type of SLD is classified as a derivative if it falls within the MiFID II catch-all provision, which must be determined on a case-by-case basis.

Overall, instruments that are classified as derivatives in Europe will also be classified as such in the UK. More specifically, a category 2 SLD will be classified as a derivative in the UK if the payments under the financial instrument vary with fluctuations in the KPIs.

When a category 2 SLD is issued in the US, it will only be classified as a swap if the KPI-linked payments within the financial agreement flow in both directions. Even then, the SLD may still qualify as a commercial agreement outside swaps regulation, depending on the specific facts and circumstances.

Apart from the classification as a derivative or swap, it is also helpful to determine whether an SLD could be considered a hedging contract, which would make it eligible for hedging exemptions. The requirements for this are similar in Europe, the UK and the US. Generally, category 1 SLDs are considered hedging contracts if the underlying instruments still serve to hedge commercial risks after the KPI is incorporated. Category 2 SLDs are normally issued to meet sustainability goals rather than for hedging purposes, so it is unlikely that this category of SLDs will be classified as hedging contracts.
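The categorization and classification rules above can be summarised as a simplified decision sketch. It encodes only the high-level rules described in this article, with hypothetical field names; it is not legal advice, and outcomes such as the MiFID II catch-all test, the US commercial-agreement exclusion and the hedging assessment depend on facts and circumstances, so the sketch returns a qualified answer where the article does.

```python
from dataclasses import dataclass


@dataclass
class SLD:
    kpi_in_derivative: bool          # True: KPI cashflows embedded in the derivative (category 1)
    underlying_is_derivative: bool   # classification of the underlying financial contract
    payments_vary_with_kpi: bool     # category 2: do payments vary with KPI fluctuations?
    kpi_payments_two_way: bool       # category 2: do KPI-linked payments flow in both directions?
    underlying_hedges_commercial_risk: bool

    @property
    def category(self) -> int:
        return 1 if self.kpi_in_derivative else 2


def classify(sld: SLD, jurisdiction: str) -> str:
    """Derivative/swap classification following the simplified rules in the text."""
    if sld.category == 1:
        # Adding KPI elements is unlikely to change the underlying's classification.
        return "derivative/swap" if sld.underlying_is_derivative else "likely not a derivative/swap"
    if jurisdiction == "EU":
        return "case-by-case (MiFID II catch-all)"
    if jurisdiction == "UK":
        return "derivative" if sld.payments_vary_with_kpi else "not determined by this rule"
    if jurisdiction == "US":
        if not sld.kpi_payments_two_way:
            return "not a swap"
        return "swap, unless the commercial-agreement exclusion applies"
    raise ValueError(f"unsupported jurisdiction: {jurisdiction}")


def likely_hedging_contract(sld: SLD) -> bool:
    """Category 1 can qualify if it still hedges commercial risk; category 2 is unlikely to."""
    return sld.category == 1 and sld.underlying_hedges_commercial_risk


swap_with_kpi = SLD(True, True, False, False, True)
print(classify(swap_with_kpi, "EU"), likely_hedging_contract(swap_with_kpi))
```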

Regulation & valuation implications

When issuing an SLD that is classified as a derivative or swap, there are several regulatory and valuation implications relevant to treasury. These fall into six types, which we explain in more detail below: risk management, reporting, disclosure, benchmark-related considerations, prudential requirements and valuation. They are similar for corporates across Europe, the UK and the US, unless otherwise mentioned.

Risk management

As is the case for other derivatives and swaps, corporate treasuries must meet confirmation requirements, undertake portfolio reconciliation, and perform portfolio compression for SLDs. Additionally, regulated companies are required to construct effective risk procedures for risk management, which includes documenting all risks associated with KPI-linked cashflows. While these points might be business as usual, it must also be determined if and how KPI-linked cashflows should be modeled for valuation obligations that apply to derivatives and swaps. For instance, initial margin models might need to be adjusted for SLDs, so they capture KPI-linked risks accurately.

Reporting

Corporate treasuries must report SLDs to trade repositories in Europe and the UK, and to swap data repositories in the US. Since these repositories require companies to report in line with prescriptive frameworks that do not specifically cover SLDs, companies should consider how to report KPI-linked features. As this is currently not clearly defined, issuers of SLDs are advised to discuss the establishment of clear reporting guidelines for this financial instrument with regulators and repositories. A good starting point could be the mark-to-market or mark-to-model valuation part of the EMIR reporting regulations.

Disclosure

Only the treasuries of European financial entities will be involved in meeting SLD disclosure requirements, as UK and US legislation lags behind Europe in this respect, and non-financial market participants are less strictly regulated. From January 2023, the second phase of the Sustainable Finance Disclosure Regulation (SFDR) will be in place, which requires financial companies to report periodically and to provide pre-contractual disclosures on SLDs. Treasuries of investment firms and portfolio managers are expected to contribute to this by reporting on the sustainability-related impact of the SLDs compared with the impact of a reference index and a broad market index, using sustainability indicators. In addition, they could leverage their knowledge of financial instruments to evaluate the probable impact of sustainability risks on the returns of the SLDs.

Benchmark-related implications

If the KPI of an SLD references or includes an index, it could be defined as a benchmark under European and UK legislation. In such cases, treasuries are advised to follow the same policy they have in place for benchmarks incorporated in other, brown derivatives. Specific benchmark regulations are currently non-existent in the US; however, many US benchmark administrators maintain policies that comply with the same principles on which the European and UK benchmark legislation is built.

Prudential requirements

Treasury departments of corporates around the world are required to calculate risk-weighted exposures for derivative as well as non-derivative transactions, and SLDs are no different. While there is currently little explicit guidance on this for SLDs, that may change in the near future, as US prudential regulators are assessing the nature of the risk assumed by in-scope market participants.

Valuation

The SLD market is still in its infancy: SLD contracts are often drawn up specifically for the issuing company and are therefore tailor-made. For the market to reach transparency and efficiency, trading volumes must go up, trade datasets must be accurately maintained and documentation should be standardized on a global scale. This would enable accurate pricing and reliable cashflow management of this financial instrument and increase the ability to hedge the ESG component.
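As a very rough illustration of what pricing the ESG component involves, the sketch below values a hypothetical KPI-linked step-up/step-down on an interest rate swap's fixed rate as the probability-weighted present value of the adjustment. The adjustment size, the KPI probability, the flat discount rate, the assumption that the KPI outcome is known before the first payment and the assumption that it is independent of interest rates are all hypothetical simplifications; estimating such probabilities reliably is exactly where the current lack of data and standardization bites.

```python
from math import exp


def kpi_adjustment_value(notional: float, step_bp: float, p_kpi_met: float,
                         payment_times: list[float], accrual: float, rate: float) -> float:
    """PV to the fixed-rate payer of a +/- step_bp adjustment on the fixed rate:
    the rate decreases by step_bp if the KPI is met and increases by step_bp if missed.
    Assumes the KPI outcome is known before the first payment and independent of rates."""
    step = step_bp / 10_000
    expected_rate_change = p_kpi_met * (-step) + (1 - p_kpi_met) * step
    annuity = sum(accrual * exp(-rate * t) for t in payment_times)   # continuous discounting
    # A lower fixed rate benefits the fixed-rate payer, hence the minus sign.
    return -notional * expected_rate_change * annuity


value = kpi_adjustment_value(notional=50_000_000, step_bp=5, p_kpi_met=0.7,
                             payment_times=[1, 2, 3, 4, 5], accrual=1.0, rate=0.03)
print(f"Expected value of the KPI feature to the fixed-rate payer: {value:,.0f}")
```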

To conclude

As mentioned, the ESG-related derivatives market, and the SLD market within it, is still in the development phase, so regulations and their implications will evolve swiftly. However, the key points for corporate treasury to consider when issuing an SLD, as presented in this article, can be a good starting point for meeting regulatory requirements and developing an accurate valuation methodology. This is important, since these derivative transactions will be crucial for facilitating the lending, investment and debt issuance required to meet the ESG ambitions of Europe, the UK and the US.

For more information on ESG issues, please contact Joris van den Beld or Sander van Tol.

There is no doubt that cash positioning is at the forefront of successful cash & liquidity management.

Treasurers obtain the information that forms an accurate cash position in the company's ERP or Treasury Management System from various sources. The most important of these is the current available balance, most often obtained from bank statements reported by various banks through numerous protocols.

Depending on the system an enterprise uses, the cash position can be updated in two main ways: directly from the balance reported by the bank on the bank statement, or by posting the bank statement items to the relevant GL accounts. For a long time, only the latter was possible in SAP. At a minimum, the statements had to be posted in the so-called posting area 1, in which bank statement items are posted to the bank GL balance sheet account, with the offsetting entry posted to the bank GL clearing account.

New feature in SAP: Cash positioning without posting

New functionality has been introduced as of the SAP S/4HANA 2111 (Cloud) and SAP S/4HANA 2021 (On-Premise) releases: it is no longer necessary to post the bank statement items for the cash position to be updated in a selection of Fiori apps. Note that the feature only applies to end-of-day (EOD) bank statements; intraday bank statements still rely on memo records to update the cash position.

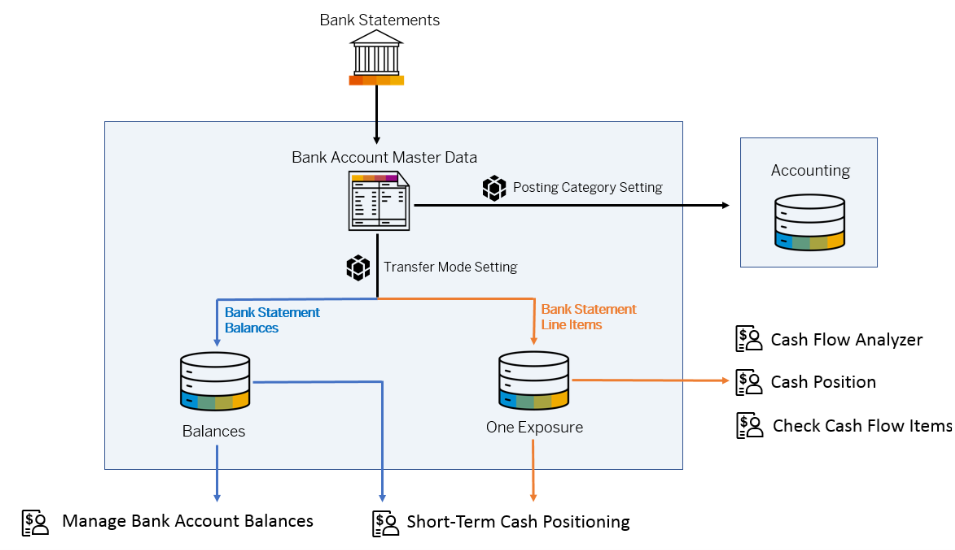

Depending on the settings maintained in the S/4 environment, the cash position can then be viewed in any of five Fiori apps. The cash position can be updated using only the balance reported by the bank on the statement, the individual bank statement line items, or both. Figure 1 shows the sources of information that can be used to update the cash position, as well as the Fiori apps in which it can be viewed.

Figure 1: How bank statement data can be integrated and processed in cash management. Source

The functionality can prove especially useful in a scattered entity landscape, where multiple accounting systems are used, but only one central system should be used for cash positioning. With this new functionality, where bank statements do not need to be posted, and a minimal setup is sufficient on company code level (no GL accounts are even needed), the enterprise will be able to achieve a consolidated cash position overview in one SAP S/4 environment.
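Conceptually, the two sources mentioned above (the reported balance and the individual line items) should tell the same story. The sketch below is a generic illustration, not SAP's implementation or data model: it derives an end-of-day position per account either from the reported closing balance or from the opening balance plus line items, flags accounts where the two disagree, and aggregates the accounts of several entities into one position per currency. Entity codes, account IDs and figures are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class EodStatement:
    entity: str
    account: str
    currency: str
    opening_balance: Decimal
    closing_balance: Decimal                              # balance as reported by the bank
    items: list[Decimal] = field(default_factory=list)    # signed line item amounts

    def balance_from_items(self) -> Decimal:
        return self.opening_balance + sum(self.items, Decimal("0"))

    def is_consistent(self) -> bool:
        return self.balance_from_items() == self.closing_balance


def cash_position(statements, use_reported_balance=True):
    """Consolidated end-of-day position per currency across entities and accounts."""
    position = defaultdict(Decimal)
    for st in statements:
        if not st.is_consistent():
            print(f"Warning: {st.entity}/{st.account} items do not reconcile to the closing balance")
        position[st.currency] += st.closing_balance if use_reported_balance else st.balance_from_items()
    return dict(position)


statements = [
    EodStatement("DE01", "DE-ACC-1", "EUR", Decimal("1000.00"), Decimal("1250.00"),
                 [Decimal("500.00"), Decimal("-250.00")]),
    EodStatement("NL01", "NL-ACC-7", "EUR", Decimal("300.00"), Decimal("280.00"), [Decimal("-20.00")]),
    EodStatement("US01", "US-ACC-3", "USD", Decimal("50.00"), Decimal("75.00"), [Decimal("25.00")]),
]
print(cash_position(statements))
```

Using `Decimal` rather than `float` keeps amounts exact, so the consistency check compares balances without rounding noise.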

Updating the cash position

How important is it to base the cash position on reconciled accounting entries rather than on what the bank has reported on the bank statement? One might argue that a cash position updated directly from the bank statement carries the risk of an unaccounted transaction appearing on a bank statement and thus skewing the cash position. But how many times as a treasurer have you actually seen a bank statement contain transactions that should not be there? Moreover, in the current bank connectivity landscape, where bank statements are delivered securely via SWIFT, H2H or EBICS rather than uploaded manually, the risk of bank statements being tampered with is very low, or even non-existent. So there we have it: a new means of updating your cash position in SAP.

Supportive API

What does the future hold for Cash & Liquidity Management in SAP S/4HANA? Do we even need bank statements if the goal is only to update the cash position? With the increasing presence of APIs in the treasury world, SAP has also been making efforts to allow the cash position to be updated via means other than bank statements. With an API, one could connect to the bank to obtain the current available balance, either on a schedule or on demand at the click of a button. Given the ever-increasing need for up-to-date cash position information, this seems a logical way forward.

Integrating treasury and supply chain processes via SAP Trade Finance functionality helps companies with significant international trade exposure improve transparency and increase efficiency in their order-to-cash and purchase-to-pay processes.

Initial functionality to support trade finance was introduced in SAP Treasury Transaction Manager relatively recently, in 2016, with ECC EhP 8. Covering obtained and provided guarantees and letters of credit, it offers seamless integration between treasury processes and the purchase-to-pay and order-to-cash areas.

Trade finance covers financial products which help importers and exporters to reduce credit risk and support financing of the goods flow. Almost 90% of world trade relies on trade finance (source: WTO).

What is covered?

Most instruments used in trade finance are supported by the available functionality, namely:

- Guarantees
  - Issued – (directly or indirectly through a correspondent bank) to support the buyer's purchase-to-pay process.
  - Received – to support the seller's order-to-cash process.

- Commercial and standby letters of credit (L/C)

  A commercial L/C is a direct payment method, while a standby L/C is a secondary payment method, used to pay the beneficiary only when the applicant fails to meet its obligations.
  - Issued – to pay on behalf of the buyer (purchase-to-pay process).
  - Received – to collect payment as the seller (order-to-cash process).

How is the functionality integrated with supply chain functionalities?

Once activated, the trade finance products are fully integrated into the existing framework of the Treasury Transaction Manager. You can manage them using the existing transaction codes in the front office, back office (settlement, payments, correspondence framework for MT message exchange), accounting, reporting and risk management functions. For received guarantees and L/Cs, the contracts can be included in the respective credit risk limits for the issuing bank. This integration lets the treasury team incorporate trade finance flows into the operations they are already familiar with.

In addition, issued L/Cs can be linked to an existing bank facility and (advance) loan contract, so that they are correctly included in the facility’s utilisation and in the corresponding facility fee calculation. For L/Cs, once the conditions to release the payment are fulfilled, a new financing deal can be created or an existing one assigned to the trade finance contract.

Beyond the treasury functionality, the solution offers two unique features to support the trade process:

- Support of trade documentation management

  Fields are available, for example, to record the L/C document number, shipment period, places of receipt and delivery, ports of loading and discharge, and Incoterms. It can also be defined which documents must be presented to release the payment, and their scans can be attached to the trade finance deals. When the payment conditions are fulfilled, accounting documents can be generated based on flow types (for off-balance sheet recognition).

- Integration with the Materials Management and Sales and Distribution modules

  One or more related sales order numbers (received L/C, guarantee) or purchase order numbers (issued L/C, guarantee) can be maintained in the trade finance contracts. The related FI customer (applicant) or FI vendor (beneficiary) can be maintained as a business partner.

Integration with the sales module

For received trade finance instruments, tight integration with the sales module is possible. The system can check the relevant data in the sales order for compliance against one or more assigned trade finance transactions, provided that the applicant in the letter of credit is identical to the payer in the sales order. The checks are triggered when:

(a) the user saves a sales order after updating the assigned trade finance transactions;

(b) the user saves the trade finance transaction after changing fields relevant to the risk check;

(c) the user processes the goods delivery (e.g. outbound delivery, picking, posting goods issue).

The checks ensure that the sales order amount, taking the tolerance into account, does not exceed the total amount of the assigned trade finance transactions. The currency must be identical in both documents, and the schedule lines of the goods issues must fall within the term of the trade finance document.
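To make these rules concrete, the sketch below expresses the checks as a small Python function. It is not SAP’s implementation or API: the data classes, field names and the treatment of the tolerance as a percentage on top of the total cover are hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class TradeFinanceDeal:
    """Hypothetical, simplified view of an assigned L/C or guarantee."""
    applicant: str
    amount: float
    currency: str
    valid_from: date
    valid_to: date


@dataclass
class SalesOrder:
    """Hypothetical, simplified sales order with its schedule-line dates."""
    payer: str
    amount: float
    currency: str
    schedule_dates: List[date]


def check_sales_order(order: SalesOrder, deals: List[TradeFinanceDeal],
                      tolerance_pct: float = 0.0) -> List[str]:
    """Return findings; an empty list means the order passed every check.

    Assumption: the tolerance is a percentage added to the total deal amount.
    """
    findings = []
    for deal in deals:
        # The check presupposes that the L/C applicant is the sales order payer
        if deal.applicant != order.payer:
            findings.append(f"applicant {deal.applicant} is not payer {order.payer}")
        # Currencies must be identical in both documents
        if deal.currency != order.currency:
            findings.append(f"currency {deal.currency} differs from {order.currency}")
    # The order amount may not exceed the total cover plus tolerance
    cover = sum(deal.amount for deal in deals) * (1 + tolerance_pct / 100)
    if order.amount > cover:
        findings.append(f"order amount {order.amount:,.2f} exceeds cover {cover:,.2f}")
    # Every schedule line must fall within the term of at least one deal
    for day in order.schedule_dates:
        if not any(d.valid_from <= day <= d.valid_to for d in deals):
            findings.append(f"schedule line {day} is outside the trade finance term")
    return findings


lc = TradeFinanceDeal("Customer A", 100_000.0, "USD", date(2022, 7, 1), date(2022, 9, 30))
order = SalesOrder("Customer A", 104_000.0, "USD", [date(2022, 8, 15)])
print(check_sales_order(order, [lc], tolerance_pct=5))  # → []
print(check_sales_order(order, [lc]))  # one finding: the amount exceeds the cover
```

Returning a list of findings, rather than stopping at the first error, mirrors the idea of a consistency report that guides further action.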

For a commercial (standard) L/C, the shipment period, partial shipments, places of delivery, ports and shipping methods can also be checked. The result of the consistency check is displayed in a dedicated report and can be used as a guide for further action.

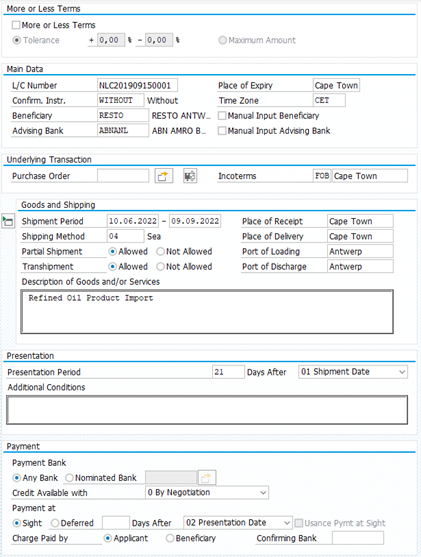

Figure 1: Example available fields for L/C in SAP Trade Finance

In some deployment scenarios, SAP Treasury does not reside in the same system instance as the logistics functionality. For system integration purposes, APIs (BAPIs) are available to create and update trade finance transactions from external systems.

Conclusion

In many companies, trade finance is still managed in separate solutions or in Excel. SAP S/4HANA Trade Finance Management helps you cover the whole lifecycle of trade finance instruments in your SAP Treasury management system, and it can integrate well with your supply chain functionality, especially sales management.

What does Taulia offer to clients using SAP?

Efficient working capital management is becoming increasingly important for corporates given the current challenging economic conditions and supply chain disruptions. As a result, the market has seen increased demand from corporates for early payment of receivables. Managing working capital is essential to maintaining the health, or even the survival, of the business, especially in difficult economic times. Furthermore, efficient working capital management can support the company’s growth.

Working capital management includes improving the cash conversion cycle (CCC), which expresses the number of days it takes to convert the cash outflow for purchasing supplies into cash inflow from sales. The CCC is defined as Days Sales (Receivable) Outstanding + Days Inventory Outstanding – Days Payables Outstanding (CCC = DSO + DIO – DPO). Decreasing DSO and DIO and increasing DPO shortens the cash conversion cycle, accelerates cash flow and improves a company’s liquidity position.
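A minimal Python sketch of the formula follows. It derives each component from balance-sheet and income-statement figures using a 365-day year and measures DPO against cost of goods sold (COGS). Both are common conventions but are assumptions here, and the figures are hypothetical.

```python
def days_outstanding(balance: float, annual_flow: float, days_in_year: int = 365) -> float:
    """Express a balance as the number of days of the related annual flow."""
    return balance * days_in_year / annual_flow


def cash_conversion_cycle(receivables: float, revenue: float, inventory: float,
                          cogs: float, payables: float, days_in_year: int = 365) -> float:
    """CCC = DSO + DIO - DPO (DPO measured against COGS, an assumed convention)."""
    dso = days_outstanding(receivables, revenue, days_in_year)
    dio = days_outstanding(inventory, cogs, days_in_year)
    dpo = days_outstanding(payables, cogs, days_in_year)
    return dso + dio - dpo


# Hypothetical figures (in thousands): DSO 50, DIO 40, DPO 30
print(cash_conversion_cycle(500, 3_650, 320, 2_920, 240))  # → 60.0
# Doubling payables (DPO 60) shortens the cycle
print(cash_conversion_cycle(500, 3_650, 320, 2_920, 480))  # → 30.0
```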

What is Supply Chain Finance?

The most popular method to manage working capital efficiently is using Supply Chain Finance (SCF). We distinguish the following SCF solutions:

- Static discounting: An option for the buyer (using its own funds) to obtain a discount on an invoice if it is paid early. If the option is used, the supplier receives its money before the due date. The discount for the supplier is determined upfront and fixed for a specific number of days.

- Dynamic discounting: Similar to static discounting, using the buyer’s own funds. The difference is that the discount rate is not fixed for a specific number of days. The buyer decides when it wants to pay the invoice: the earlier the invoice is paid, the higher the discount.

- Factoring: The supplier sells its accounts receivable to a third party. The funding party pays the invoices to the supplier early (i.e. well before the due date), benefiting the supplier. The interest charged under this solution is based on the credit rating of the supplier.

- Reverse factoring: The buyer offers the supplier the opportunity to sell its receivables on the buyer’s SCF platform, so that the supplier receives its money (from the SCF party) before the due date. This benefits both the supplier and the buyer, as the buyer will typically try to extend payment terms with the supplier and therefore pay later than the original due date.
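The contrast between static and dynamic discounting can be sketched as two small functions. The terms are hypothetical: a static offer of 2% if paid within 10 days of the invoice date, and a dynamic offer that accrues an assumed 12% annual rate (actual/365) for each day the invoice is paid before its due date.

```python
def static_discount(amount: float, days_after_invoice: int,
                    discount_pct: float = 2.0, window_days: int = 10) -> float:
    """Fixed discount if the invoice is paid within the window, otherwise none."""
    if days_after_invoice <= window_days:
        return amount * discount_pct / 100
    return 0.0


def dynamic_discount(amount: float, days_early: int,
                     annual_rate_pct: float = 12.0, basis: int = 365) -> float:
    """Discount accrues pro rata for every day the invoice is paid before its due date."""
    return amount * annual_rate_pct / 100 * max(days_early, 0) / basis


# Hypothetical invoice of 10,000 with a 60-day payment term
for pay_day in (10, 30):
    days_early = 60 - pay_day
    print(pay_day,
          round(static_discount(10_000, pay_day), 2),
          round(dynamic_discount(10_000, days_early), 2))
# → 10 200.0 164.38
# → 30 0.0 98.63
```

Under these assumed terms, the static offer rewards only payment inside its window, while the dynamic discount scales smoothly with how early the buyer chooses to pay.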

An SCF program is financed by a third-party funder, usually a bank or an investment company. In addition, SCF typically relies on technology to automate the sale of the supplier’s receivables.

Reverse factoring

An example of reverse factoring is an automobile manufacturer that buys parts from various suppliers. The manufacturer uses a reverse factoring solution to pay the parts suppliers earlier while extending its payment terms with them. Reverse factoring works best when the buyer has a better credit rating than the seller. Because the cost of receiving payment in advance is based on the buyer’s (higher) credit rating, the seller receives funding at a more favorable rate than it would obtain in the external capital market. This advantage gives the buyer the opportunity to negotiate longer payment terms with the seller (higher DPO), while the seller can receive payment for the sale in advance (lower DSO), shortening the cash conversion cycle of both parties. Effectively, reverse factoring encourages collaboration between buyer and seller and can lead to a true win-win: the buyer achieves its aim of paying later, while the seller benefits from early payment.

However, the supplier’s gain depends on how the costs of the program are split between the corporate (buyer) and its supplier. Usually, the costs are borne solely by the supplier. The program is therefore only attractive for a supplier if the program costs (interest on the SCF funding, priced on the buyer’s credit rating, plus the program fee) are lower than its opportunity cost. This opportunity cost is defined as the cost of borrowing funds at an interest rate based on the supplier’s own credit rating.
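The supplier’s decision rule described above can be written as a comparison of two costs for the same invoice and the same number of days paid early. This is a minimal sketch that assumes simple interest on an actual/360 basis, treats the program fee as an annual margin, and uses hypothetical rates.

```python
def financing_cost(amount: float, days: int, annual_rate_pct: float, basis: int = 360) -> float:
    """Simple-interest cost of being paid `days` days before the due date."""
    return amount * annual_rate_pct / 100 * days / basis


def supplier_should_join(amount: float, days_early: int, buyer_based_rate_pct: float,
                         program_fee_pct: float, own_rate_pct: float):
    """Compare program cost with the supplier's opportunity cost for one invoice.

    Assumption: the program fee is quoted as an annual margin on top of the
    interest rate that is priced on the buyer's credit rating.
    """
    program_cost = financing_cost(amount, days_early, buyer_based_rate_pct + program_fee_pct)
    opportunity_cost = financing_cost(amount, days_early, own_rate_pct)
    return program_cost < opportunity_cost, program_cost, opportunity_cost


# Hypothetical: 100,000 invoice paid 80 days early
join, program, own = supplier_should_join(100_000, 80, 3.0, 0.5, 6.0)
print(join, round(program, 2), round(own, 2))  # → True 777.78 1333.33
```

If the supplier’s own borrowing rate were close to the buyer-based rate, the program fee would tip the comparison the other way. This is why the cost split matters.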

Benefits and Risks

The benefits of SCF are the following:

- Suppliers can control their incoming cash flows with prepayment of invoices;

- Quick access to funding for the supplier in case of a liquidity crisis;

- Buyer-seller relationship is strengthened due to the collaboration in an SCF program;

- Reduced need for traditional (trade) finance;

- With reverse factoring, the buyer can negotiate extended payment terms with the supplier in return for offering the supplier the prepayment option;

- With reverse factoring, suppliers have access to funding with lower interest rates as the pricing of the financing is based on the buyer’s credit rating.

Supply Chain Finance also has risks:

- Reporting ambiguity: Although corporates are obliged under IFRS to disclose additional information about their SCF arrangements (such as the terms and conditions and the carrying amounts of liabilities that are part of the SCF program), reverse factoring can mask the buyer’s true overall debt level when significant amounts owed under the program do not have to be classified as debt but as trade payables. The IFRS and US GAAP accounting standards are regularly updated to reflect the latest guidance on classifying SCF programs as either trade payables or debt.

- Dependency on SCF: If the SCF program is substantial, withdrawal of the SCF facility can have dramatic consequences for liquidity and cause severe collateral damage throughout the supplier network. US securities regulators have warned that reverse factoring is not cycle-tested, meaning it is unclear what might happen in an economic downturn. However, a multi-funder structure makes it easier to replace or add funders if a facility is withdrawn, without disrupting suppliers.

What is Taulia?

Zanders sees a lot of movement in the SCF market. One interesting development is SAP’s acquisition of Taulia, a leading supply chain finance software provider founded in 2009 with over 2 million business users. The rationale behind the acquisition is to expand SAP’s business network further and strengthen SAP’s solutions in the financial area. The takeover is understandable, as more than 80% of Taulia’s customers run SAP as their ERP system. Taulia will be tightly integrated into the SAP software while remaining available as a standalone solution, and it will operate as an independent company with its own brand within the SAP Group.

Unusually for a software vendor, Taulia earns a percentage fee on each prepaid invoice that flows through the platform, rather than a fixed fee per transaction or a monthly fee, which is what we usually observe among software vendors. When an invoice is selected for prepayment, the vendor receives less than the original invoice amount; the difference is partly compensation for the investor and partly income for Taulia. Taulia offers solutions for all the SCF options mentioned earlier: static discounting, dynamic discounting, factoring and reverse factoring. It is a multi-funder platform on which any number of banks can be engaged, including a corporate’s relationship banks.

Supply Chain Finance Example

Original situation

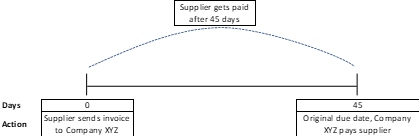

In this example, the supplier and Company XYZ have a payment term of 45 days in place. This is the ‘original situation’:

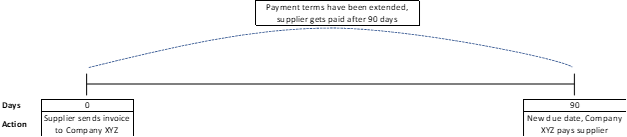

Payment term extension (optional)

Company XYZ starts negotiations with the supplier to extend the payment terms. They agree on a new payment term of 90 days. This step is optional.

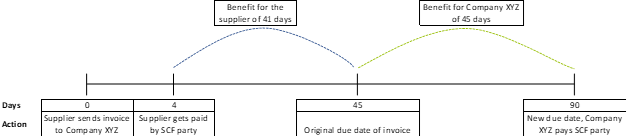

Supplier joins SCF program

The supplier joins the SCF program, which allows it to receive early payment. The interest the supplier pays is based on the (strong) credit rating of Company XYZ. In other words, the supplier can obtain financing at a lower interest rate than its own credit rating would allow.
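The effect of this example on both parties can be quantified with a back-of-the-envelope calculation. Only the 45- and 90-day terms come from the example. The annual volume of 36.5 million, the assumption that the supplier sells every invoice on day 5 and the 365-day year are hypothetical, and financing costs are ignored.

```python
ANNUAL_VOLUME = 36_500_000  # hypothetical annual purchases of Company XYZ from the supplier
DAYS_IN_YEAR = 365


def average_balance(annual_flow: float, days_outstanding: int,
                    days_in_year: int = DAYS_IN_YEAR) -> float:
    """Average open balance if every invoice is settled after `days_outstanding` days."""
    return annual_flow * days_outstanding / days_in_year


# Company XYZ: DPO rises from 45 to 90 days, so more cash stays in the company
buyer_cash_retained = average_balance(ANNUAL_VOLUME, 90) - average_balance(ANNUAL_VOLUME, 45)

# Supplier: assumed to sell each invoice on day 5, so its DSO drops from 45 to 5 days
supplier_cash_released = average_balance(ANNUAL_VOLUME, 45) - average_balance(ANNUAL_VOLUME, 5)

print(f"Company XYZ retains about {buyer_cash_retained:,.0f}")  # → Company XYZ retains about 4,500,000
print(f"Supplier releases about {supplier_cash_released:,.0f}")  # → Supplier releases about 4,000,000
```

The supplier’s released cash comes at the financing cost priced on Company XYZ’s credit rating, which this sketch deliberately leaves out.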

Features of a Supply Chain Finance program

The features of a best-practice SCF program such as Taulia’s are the following:

- A self-service portal with your company’s branding is provided to your suppliers. Suppliers are onboarded on this portal, where they select the invoices they would like to have paid in advance. When selecting an invoice, the supplier is immediately quoted the prepayment cost.

- Automated, near real-time integration with your ERP system, which makes manual adjustments unnecessary and maintains data integrity. Integration is possible via multiple connectivity solutions or middleware applications. The integration of Taulia with SAP ERP is fully automated. Taulia has its own namespace within SAP, although there are no changes to the core SAP code. Within this namespace it is possible, among other things, to run reconciliation reports. For more information about integrating an SCF platform with SAP, please read this article that we published earlier this year.

- Automatic netting of credit notes against future early payments, or blocking of early payments while a credit note is outstanding.

- Real-time private and public data, combined with machine learning, in a dashboard to track performance and make informed decisions about your SCF program. This could include scenario analysis of different SCF rates and their effect on supplier adoption. The dashboard also benchmarks payment terms against industry standards.

- An automated solution that accepts early payments on the supplier’s behalf (called ‘CashFlow’ in the Taulia solution). It accepts an early payment automatically if the discount is better than or equal to a pre-set rate curve.
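The netting and auto-acceptance features can be illustrated together. This is not Taulia’s implementation or API. The sketch shows the netting variant rather than blocking. It also interprets the pre-set rate curve as a hypothetical table of the maximum annualised rate the supplier will pay for each range of days paid early, so a lower offered rate counts as a better discount.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple

# Hypothetical supplier rate curve: (up to N days early, maximum acceptable annual rate in %)
RATE_CURVE: List[Tuple[int, float]] = [(30, 4.0), (60, 4.5), (120, 5.0)]


def max_acceptable_rate(days_early: int, curve=RATE_CURVE) -> Optional[float]:
    """Return the curve rate for the bucket covering `days_early`, or None beyond the curve."""
    limits = [limit for limit, _ in curve]
    i = bisect_left(limits, days_early)
    return curve[i][1] if i < len(curve) else None


def net_amount(invoice_amount: float, open_credit_notes: List[float]) -> float:
    """Net open credit notes against the invoice before it is paid early."""
    return max(invoice_amount - sum(open_credit_notes), 0.0)


def auto_accept(offered_rate_pct: float, days_early: int, curve=RATE_CURVE) -> bool:
    """Accept automatically when the offer is at or below the curve for that tenor."""
    limit = max_acceptable_rate(days_early, curve)
    return limit is not None and offered_rate_pct <= limit


print(net_amount(10_000.0, [1_500.0, 500.0]))  # → 8000.0
print(auto_accept(4.2, 45), auto_accept(4.2, 20), auto_accept(3.0, 200))  # → True False False
```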

Accounts Receivable solution

In addition to its reverse factoring solution, Taulia offers a solution for accounts receivable (AR) financing, also known as factoring. This solution works slightly differently from reverse factoring, as your customers do not need to be onboarded on the platform. AR invoices can be sold to a third-party funder, who pays the face value of the invoice less the proposed discount. At the maturity date, the buyer’s payment of the AR invoice is collected in a collection account and passed on to the investor.

To conclude

The ultimate goal of an SCF program is to unlock working capital for your company. With an appropriate SCF solution and a successful implementation, including integration with your ERP system, you can realize the benefits of an SCF program. Taulia could be the appropriate solution for you.

If you would like to know more about Supply Chain Finance and/or SAP Taulia, contact Mart Menger at +31 88 991 02 00.

Learning to manage sustainability risks has been one of the key challenges for financial organizations.

This topic is gaining momentum because of the European Commission’s Sustainable Finance Action Plan and associated regulatory changes.

One of the new requirements is that asset managers must incorporate sustainability risks in their risk management and reporting as of August 2022. This means that these risks must be measured, assessed and mitigated. However, this is not an easy task due to a lack of uniformity in risk management approaches and lagging data quality.

This prompted AF Advisors and Zanders to organize a round-table session on the subject. The large turnout showed the importance of managing sustainability risks for the asset management sector. Parties that together manage no less than EUR 2.5 trillion in assets joined the session, including a broad selection of the largest asset managers active in the Netherlands. This attendance led to good, in-depth discussions. The discussion was preceded and inspired by a presentation from an asset manager with expertise in sustainability on how it assesses, mitigates and monitors sustainability risks. Two hours of lively discussion are difficult to summarize, but we would like to share a few interesting takeaways. Note that these takeaways do not necessarily represent the views of all participants; they are merely an overview of the topics that were discussed.

Key takeaways

Financial risk management departments increasingly in the lead

While a few years ago sustainability risks and their management were still the task of responsible investing teams in many organizations, this task is increasingly being taken up by financial risk management departments, as these are increasingly able to quantify sustainability risks. This shift leads to new techniques and new data requirements. Whereas exclusions used to be an important method for many parties, an integrated portfolio approach is now emerging.

Lack of uniformity in the assessment of sustainability risks

The two main problems in managing sustainability risks are a lack of uniformity in approaches and limited data quality or availability. Limited data quality is a well-known issue, especially for alternative asset classes. Specialized data vendors will be required to address these issues.

It is important to realize, however, that sustainability risk is such a broad and young concept that it is open to many interpretations. This means that the way sustainability risks are assessed can still differ considerably between parties. The benefit is that the different approaches help speed up the development of this new area. In the longer term, assessments are expected to converge towards market best practice. Until then, there will be little standardization and inconsistent use of terminology. This is especially problematic in a multi-client environment with varying client needs. Communication mandated by the regulator can therefore lead to outcomes that are hard for clients to compare and interpret. Listing the definitions used and explaining the methodologies applied is vital when communicating sustainability risks to clients.

ESG risk ratings are most popular concept despite drawbacks

The most frequently mentioned way of monitoring sustainability risks is through environmental, social and governance (ESG) risk ratings, for example by comparing a portfolio’s ESG scores with those of a corresponding benchmark and limiting deviations. Using these ratings ensures that environmental, social and governance factors are all included. The major drawback of this approach is that it is partly backward-looking. Participants agreed that, given the long horizon over which most of these risks materialize, traditional (backward-looking) risk models may not be the most suitable.
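A simple version of the benchmark comparison mentioned above is a weighted-average ESG score for the portfolio and its benchmark, with a limit on the shortfall. The scores, weights, the ‘higher is better’ scale and the 5-point limit are hypothetical. Rating providers use different scales, and for some risk ratings a lower value is better.

```python
from typing import Dict


def weighted_score(weights: Dict[str, float], scores: Dict[str, float]) -> float:
    """Weighted-average ESG score; weights are normalised to sum to one."""
    total = sum(weights.values())
    return sum(w * scores[name] for name, w in weights.items()) / total


def check_benchmark_deviation(portfolio: Dict[str, float], benchmark: Dict[str, float],
                              scores: Dict[str, float], max_shortfall: float = 5.0):
    """Flag a breach when the portfolio scores more than `max_shortfall` points below its benchmark.

    Assumption: a higher score means better ESG performance.
    """
    p = weighted_score(portfolio, scores)
    b = weighted_score(benchmark, scores)
    return p, b, (b - p) > max_shortfall


scores = {"A": 70, "B": 50, "C": 30}        # hypothetical issuer ESG scores
portfolio = {"A": 0.2, "B": 0.3, "C": 0.5}  # hypothetical portfolio weights
benchmark = {"A": 0.4, "B": 0.4, "C": 0.2}  # hypothetical benchmark weights
p, b, breach = check_benchmark_deviation(portfolio, benchmark, scores)
print(round(p, 1), round(b, 1), breach)  # → 44.0 54.0 True
```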

Most forward-looking data is available for climate risks. In addition to the use of ESG scores, a climate risk methodology is therefore desirable.

Not only European legislation matters

In addition to European regulation, it is also important to consider emerging global initiatives and other regulation and reporting frameworks. Non-EU regulation, such as the UK Sustainability Disclosure Requirements (SDR), can to some extent affect organizations and their approaches to sustainability risks. Global initiatives such as the TCFD and TNFD are likely to influence organizations’ risk management processes as well. Potential overlaps must be analyzed so that asset managers can face these challenges efficiently.

Internal organization

Sustainability risks can be defined and monitored at various levels of an organization. Portfolio managers should take them into account when selecting investments. Second-line monitoring and independent assessments must be in place. It is important to realize that this topic does not only affect the investment and risk management teams. The legislation explicitly places responsibility for managing sustainability risks at board level and requires internal reporting, controls and sufficient internal knowledge of the topic.

Conclusion

Sustainability risk management is an important topic that asset managers will need to work on in the coming years. This field is expected to evolve over time; it was even referred to as a ‘journey’. The August 2022 deadline for MiFID, AIFMD and UCITS, the date on which amendments to these regulations incorporating sustainability risks come into effect, is an important first regulatory milestone but will certainly not be the last. By organizing the round table, we hope to have helped parties gain a better understanding of the topic and to have contributed to their journey.

Financial institutions (FIs) play an important role in the transition towards a more sustainable economy in which Environmental, Social and Governance (ESG) factors are properly addressed.

But to seize these opportunities, ESG must become an integrated part of a bank’s strategy, risk management and disclosure regimes. High-quality data is instrumental in identifying and measuring ESG risks, but it is often lacking. FIs need to improve their internal data and their use of external private and public sources, such as Moody’s or the IMF, while developing a framework that plugs any data gaps.

The lack of appropriate ESG data is considered one of the main challenges for many FIs, but proxies, such as using a building’s energy rating to work out its carbon emissions, can be used.

FIs need climate change-related data that is not always easy to find if you do not know where to look. This article gives an overview of the most relevant data vendors and suggests how to deal with data gaps. The aim is a comprehensive ESG framework for a green future in which carbon measurement, assessment, reporting and trading will be vital.

The data challenge

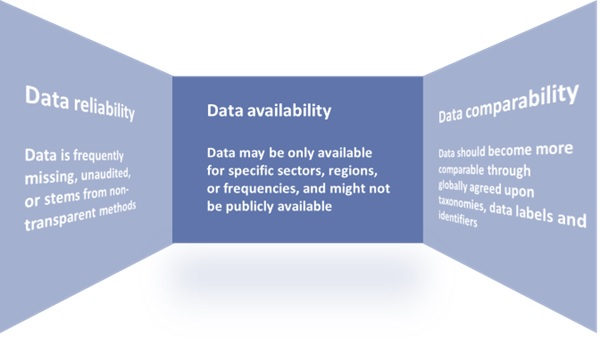

In May 2021, the Network for Greening the Financial System (NGFS) published a ‘Progress report on bridging data gaps’. In this report, the NGFS writes that meeting climate-related data needs is a challenge that can be described along the following three dimensions:

- Data availability

- Reliability

- Comparability

A further breakdown of the challenges related to these dimensions can be found in Figure 1.

Figure 1: The dimensions of the climate-related data challenge.

Source: Graphic adapted by Zanders from an NGFS report entitled ‘Progress report on bridging data gaps’ (2021).

Key financial metrics

The NGFS writes that a mix of policy interventions is necessary to ensure climate-related data is based on three building blocks:

- Common and consistent global disclosure standards.

- A minimally accepted global taxonomy.

- Consistent metrics, labels, and methodological standards.

EU Taxonomy, CSRD & EBA’s Pillar 3 ESG risk disclosure standards

Several initiatives have started to deliver these policy interventions. For example, the EU Taxonomy, introduced by the European Commission (EC), is a classification system for environmentally sustainable activities. In addition, the recently approved Corporate Sustainability Reporting Directive (CSRD) provides ESG reporting rules for large listed and non-listed companies in the EU, including several FIs. The aim of the CSRD is to prevent greenwashing and to provide the basis for global sustainability reporting standards. Another example of a disclosure standard is the set of binding standards on Pillar 3 disclosures on ESG risks developed by the European Banking Authority (EBA).

Even though policy, law and regulation makers have a big part to play in the data challenge, there are also steps that individual institutions could and should take to close their own ESG data gaps. Regulatory bodies such as the EBA and the European Central Bank (ECB) have shared their expectations and recommendations on ESG data management with FIs.

To illustrate, the EBA recommends that FIs “[identify] the gaps they are facing in terms of data and methodologies and take remedial action”, and the ECB expects institutions to “assess their data needs in order to inform their strategy-setting and risk management, to identify the gaps compared with current data and to devise a plan to overcome these gaps and tackle any insufficiencies”.

Collecting data

Collecting ESG data is a challenging exercise. A distinction can be made between collecting data for large-cap companies on the one hand, and for small-cap companies and retail clients on the other. Although large-cap companies tend to be more transparent, their data is often dispersed over multiple reports, for example corporate sustainability reports, annual reports, emissions disclosures and company websites.

For small cap companies and retail clients, the data is more difficult to acquire. Data that is not publicly available could be gathered bilaterally from clients. For example, one European bank has developed an annual client questionnaire to collect data from its clients.

Gathering data from various reports or bilaterally from clients might not always be the best option, however, because it is time-consuming or because the data is not available, reliable or comparable. Two alternatives are:

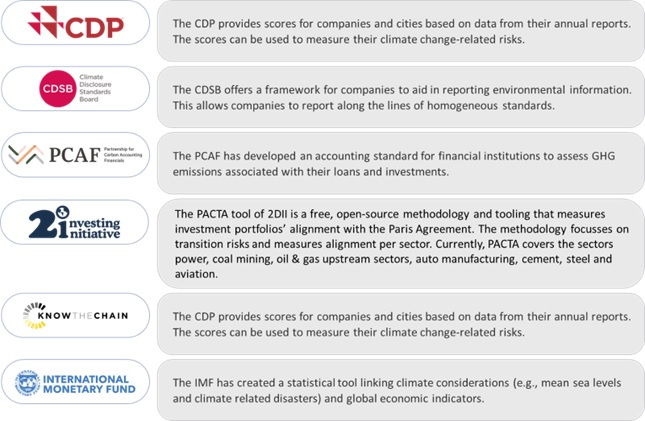

- Use tools to collect the data. For example, using open-source tooling from the Two Degrees Investing Initiative (2DII) to calculate Paris Agreement Capital Transition Assessment (PACTA) portfolio alignment.

- Collect data from other external data sources, such as S&P Global.

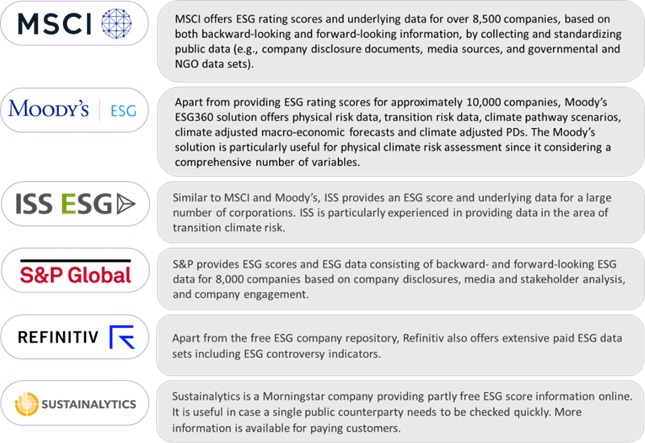

This could be forward-looking external data on macro-economic expectations, international climate scenarios, financial market data or sectoral climate developments. Below we discuss some sources for external ESG and climate change-related data.

External data

Some of Zanders’ clients use vendor solutions to acquire their ESG data. The most commonly observed solutions, in no particular order, are:

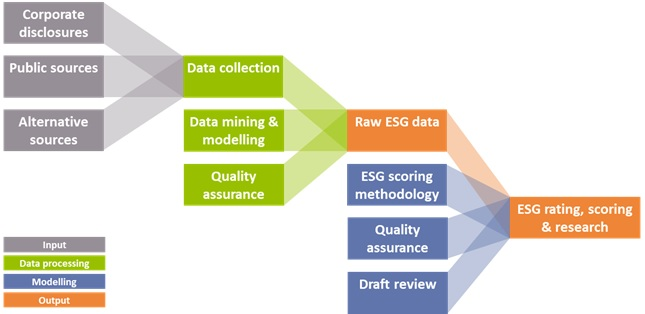

All of the solutions above help to determine whether climate-related performance data is lacking, or can assist in reporting comparable and reliable data. They all apply a similar process for collecting the data and determining ESG scores, which is illustrated in Figure 2.

Figure 2: Data collection process for ESG data solutions (Source: Zanders).

Additionally, public and non-commercial data and solution providers are available, such as:

Missing data

Given the data challenges, it is nearly impossible to create a complete data set. Until that is possible, there are several (temporary) methods to deal with missing data:

- Find a comparable loan, asset, or company for which the required data is available.

- Distribute sector data based on market share of individual companies. For example, assign 10% of the estimated emission of sector X to company Y based on its market share of 10%.

- Find a proxy, comparable or second-best metric. For example, by taking the energy label as a proxy for CO2 emission related to properties, or by excluding scope 3 emissions and focusing on scope 1 and 2 emissions.

- Change the granularity level. For example, by gathering data on sector level rather than on individual positions.

- Fill in the gaps with statistical or machine learning techniques.
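Two of the methods above can be combined in a small gap-filling routine: distributing sector data by market share, applied only where reported data is missing. The companies, emission figures and market shares are hypothetical, and the sketch does not cover proxies or statistical imputation.

```python
from typing import Dict, List, Optional, Tuple


def allocate_by_market_share(sector_emissions: float,
                             market_shares: Dict[str, float]) -> Dict[str, float]:
    """Assign each company its market share of the estimated sector emissions."""
    return {company: sector_emissions * share for company, share in market_shares.items()}


def fill_gaps(reported: Dict[str, Optional[float]], sector_emissions: float,
              market_shares: Dict[str, float]) -> Tuple[Dict[str, float], List[str]]:
    """Prefer reported figures; otherwise fall back to a market-share estimate.

    Also returns the companies that were estimated, so that estimates can be
    flagged transparently in reporting.
    """
    estimates = allocate_by_market_share(sector_emissions, market_shares)
    filled: Dict[str, float] = {}
    estimated: List[str] = []
    for company in market_shares:
        value = reported.get(company)
        if value is None:
            value = estimates[company]
            estimated.append(company)
        filled[company] = value
    return filled, estimated


# Hypothetical sector X with estimated emissions of 1,000,000 t CO2
shares = {"Y": 0.10, "Z": 0.25, "W": 0.05}
filled, estimated = fill_gaps({"Z": 240_000.0}, 1_000_000.0, shares)
print(estimated)                        # → ['Y', 'W']
print(round(filled["Y"]), filled["Z"])  # → 100000 240000.0
```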

Conclusion

The increased attention to integrating ESG risks into existing risk frameworks has led to a need for FIs to collect and disclose meaningful data on ESG factors. However, there is still a lack of data availability, reliability, and comparability.

Several regulatory and political efforts are ongoing to tackle this data challenge, such as the EU taxonomy. More policy interventions, however, are required. Examples are additional mandatory disclosure requirements, an audit and validation framework for ESG data, and social and governance taxonomies that classify economic activities that contribute to social and governance goals.

In the meantime, FIs have to find ways to produce meaningful insights and comply with regulatory requirements related to ESG risks. In Zanders’ experience, there is no one-size-fits-all solution for defining, selecting, implementing and disclosing relevant data and metrics. The right approach depends on the composition of the asset and loan portfolio, the intended use of the data, and the data that is already available. Regardless of how the lack of data is solved, it is important that FIs are transparent about their choices and methodologies, and that the related metrics and scores are explainable and intuitive.

Sources:

https://www.ngfs.net/sites/default/files/medias/documents/progress_report_on_bridging_data_gaps.pdf

https://ec.europa.eu/info/business-economy-euro/banking-and-finance/sustainable-finance/eu-taxonomy-sustainable-activities_en

https://www.europarl.europa.eu/news/en/press-room/20220620IPR33413/new-social-and-environmental-reporting-rules-for-large-companies

https://zandersgroup.com/en/insights/blog/ebas-binding-standards-on-pillar-3-disclosures-on-esg-risks

https://www.eba.europa.eu/sites/default/documents/files/document_library/Publications/Reports/2021/1015656/EBA%20Report%20on%20ESG%20risks%20management%20and%20supervision.pdf

https://www.bankingsupervision.europa.eu/ecb/pub/pdf/ssm.202011finalguideonclimate-relatedandenvironmentalrisks~58213f6564.en.pdf

https://2degrees-investing.org/resource/pacta/

On Friday 8 July, the European Central Bank (ECB) published the results of the climate risk stress test that was performed in the first half of this year.

In total 104 banks participated in the stress test that was intended as a learning exercise, for the ECB and the participating banks alike. In this article we provide a brief overview of the main results.

The ECB’s goal with the climate risk stress test was to assess the progress banks have made in developing climate risk stress-testing frameworks and the corresponding projections, as well as understanding the exposures of banks with respect to both transition and physical climate change risks. The stress test therefore consisted of three modules: 1) a qualitative questionnaire to assess the bank’s climate risk stress testing capabilities, 2) two climate risk metrics showing the sensitivity of the banks’ income to transition risk and their exposure to carbon emission-intensive industries, and 3) constrained bottom-up stress test projections for four scenarios specified by the ECB (see note 1). The third module only had to be completed by 41 directly supervised banks to limit the burden for some of the smaller banks included in the climate risk stress test.

An understatement of the true risk

The constrained bottom-up stress test projections show that the combined market and credit risk losses for the 41 banks in the sample amount to approximately EUR 70 billion in the short-term disorderly transition scenario. The ECB emphasizes that this is probably an understatement of the true risk, partly because it does not consider the scenarios underlying the stress test to be ‘adverse’. Second-round economic effects of climate risk, for example, have not been factored in. Furthermore, only a third of the 41 banks’ total exposures were in scope. On top of that, the ECB considers the banks’ modeling capabilities to be ‘rudimentary’ at this stage: it reports that around 60% of the banks do not yet have a well-integrated climate risk stress testing framework in place, and it expects that it will take several years before banks achieve this. Even though banks are not yet meeting the ECB’s expectations, the ECB does conclude that they have made considerable progress in their climate stress testing capabilities.

Be aware of clients’ transition plans

A further analysis of the results shows that, on average for the banks in the sample, the share of interest income related to the 22 most carbon-intensive industries amounts to more than 60% of total interest income from non-financial corporates. Interestingly, this is higher than the share of these sectors in the EU economy in terms of gross value added (around 54%). The ECB argues that banks should be well aware of their clients’ transition plans in order to manage potential future transition risks in their portfolios. Exposure to physical risks varies much more across the sample of banks and depends primarily on the geographical location of the assets in their lending portfolios.

The ECB points out that only a few banks account for climate risk in their credit risk models. In many cases, the credit risk parameters are fairly insensitive to the climate change scenarios used in the stress test. It also reports that only one in five banks factors climate risk into its loan origination processes. A final point of attention is data availability. In many cases, proxies rather than actual counterparty data were used to measure, for example, greenhouse gas emissions, especially Scope 3 emissions. Consequently, the ECB is also promoting greater customer engagement to close these data gaps.

Many deficiencies, data gaps and inconsistencies

The outcome of the climate risk stress test will not have direct implications for a bank’s capital requirements, but it will be considered from a qualitative point of view as part of the Supervisory Review and Evaluation Process (SREP). This will be complemented by the results of the ongoing thematic review, which focuses on how banks incorporate climate-related and environmental risks into their risk management frameworks. Together, these will indicate to the ECB how well a bank is meeting the expectations set out in the ‘Guide on climate-related and environmental risks’, which the ECB published in November 2020.

The ECB notes that the exercise revealed many deficiencies, data gaps and inconsistencies across institutions, and it expects banks to make substantial further progress in the coming years. Furthermore, the ECB concludes that banks need to increase customer engagement to obtain relevant company-level information on greenhouse gas emissions, and to invest further in the methodological assumptions used to derive proxies.

If you are looking for support with the integration of Environmental, Social, and Governance risk factors into your existing risk frameworks, please reach out to us.

Notes

1) See our earlier article on the ECB’s climate stress test methodology for more details.

On Thursday 9 June, we hosted a roundtable at our head office in Utrecht titled ‘Integrating ESG risks into a bank’s credit risk framework’. The roundtable was attended by credit and climate risk managers, as well as model validators, working for Dutch banks of different sizes. In this article we briefly describe Zanders’ view on this topic and share the key insights from the roundtable.

The last three to four years have seen a rapid increase in the number of ESG-related publications and guidance documents from regulators and industry bodies. Environmental risk is currently receiving the most attention, prompted by the alarming reports of the Intergovernmental Panel on Climate Change (IPCC). These reports show that shifting to a sustainable economy to reduce environmental impact is a formidable global challenge.

Zanders’ view

Zanders believes that banks have an important role to play in this transition. Banks can provide financing to corporates and households to help them mitigate or adapt to climate change, and they can support the development of new products such as sustainability-linked derivatives. At the same time, banks need to integrate ESG risk factors into their existing risk processes to prepare for the new risks that may arise in the future. Banks and regulators so far have mostly focused on credit risk.

We believe that the nature and materiality of ESG risks for the bank and its counterparties should be fully understood before making appropriate adjustments to risk models such as rating, pricing, and capital models. This assessment allows ESG risks to be appropriately integrated into the credit risk framework. To perform this assessment, banks may consider the following four steps:

- Step 1: Identification. A bank can identify the possible transmission channels through which ESG risk factors can affect its credit risk profile. This can be through direct exposures, or indirectly through the credit risk profile of the bank’s counterparties. The analysis can be done, for example, at portfolio or sector level.

- Step 2: Materiality. The materiality of the identified ESG risk factors can be assessed by scoring them on impact and likelihood. This process can be supported by (quantitative) internal sources and by external sources such as the Network for Greening the Financial System (NGFS), governmental bodies, or ESG data providers.

- Step 3: Metrics. For the material ESG risk factors, relevant and feasible metrics may be identified. By setting limits in line with the Risk Appetite Statement (RAS) of the bank, or in line with external benchmarks (e.g., a climate science-based emission path that follows the Paris Agreement), the exposure can be managed.

- Step 4: Verification. Because of the many qualitative aspects of the aforementioned steps, it is important to verify the outcomes of the assessment with portfolio and credit risk experts.
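The four steps can be sketched as a simple scoring routine. This is a minimal illustration under stated assumptions: the 1 to 5 scales, the multiplicative impact × likelihood score, the threshold of 12, and the example factors, metrics and limits are all hypothetical, as are the names `EsgRiskFactor` and `assess`. Step 4 remains a human review of the output, not something the code performs.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

MATERIALITY_THRESHOLD = 12  # hypothetical cut-off on a 1-25 scale


@dataclass
class EsgRiskFactor:
    name: str
    channel: str                          # Step 1: transmission channel to credit risk
    impact: int                           # Step 2: score from 1 (low) to 5 (high)
    likelihood: int                       # Step 2: score from 1 (low) to 5 (high)
    metric_value: Optional[float] = None  # Step 3: current value of the chosen metric
    limit: Optional[float] = None         # Step 3: limit from the RAS or an external benchmark

    @property
    def materiality(self) -> int:
        return self.impact * self.likelihood


def assess(factors: List[EsgRiskFactor]) -> List[Tuple[str, int, bool]]:
    """Keep material factors and flag limit breaches; the output still needs expert review (Step 4)."""
    results = []
    for f in factors:
        if f.materiality < MATERIALITY_THRESHOLD:
            continue  # not material: no metric or limit needed at this stage
        breach = f.metric_value is not None and f.limit is not None and f.metric_value > f.limit
        results.append((f.name, f.materiality, breach))
    return results


factors = [
    # hypothetical metric: share of mortgage exposure in flood-prone areas
    EsgRiskFactor("flood risk", "mortgage collateral value", impact=4, likelihood=3, metric_value=0.08, limit=0.10),
    # hypothetical metric: share of corporate exposure to the power sector
    EsgRiskFactor("carbon pricing", "power-sector counterparty PD", impact=5, likelihood=4, metric_value=0.15, limit=0.12),
    EsgRiskFactor("heat stress", "agricultural counterparty PD", impact=3, likelihood=2),
]
for name, score, breach in assess(factors):
    print(f"{name}: materiality {score}, limit breached: {breach}")
```

A multiplicative score is only one common convention for impact/likelihood matrices; banks may equally use lookup tables or qualitative buckets.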

Key insights

Prior to the roundtable, Zanders conducted a survey to understand the progress that Dutch banks are making with the integration of ESG risks into their credit risk frameworks, and the challenges they are facing.

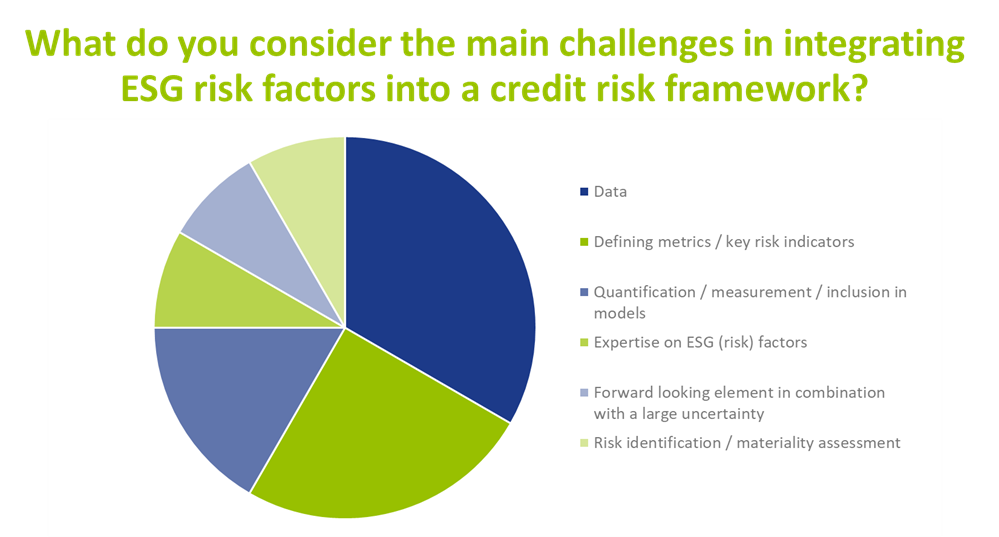

Currently, when it comes to incorporating ESG risks into the credit risk framework, banks are mainly focusing on risk identification, materiality assessment, risk metric definition and disclosures. The survey also reveals that the level of maturity with respect to ESG risk mitigation and risk limits differs significantly between banks. Nevertheless, the participating banks agreed that ESG risk factors are expected to be integrated into key credit risk management processes, such as risk appetite setting, loan origination, pricing, and credit risk modeling, within one to three years. Banks identified data availability, defining metrics and quantifying ESG risks as key challenges when integrating ESG into credit risk processes, as illustrated in the graph below.

In addition to the challenges mentioned above, discussion between participating banks revealed the following insights:

- Insight 1: Focus of ESG initiatives is on environmental factors. Most banks have started integrating environmental factors into their credit risk management processes. In contrast, efforts for integrating social and governance factors are far less advanced. Participants in the roundtable agreed that progress still has to be made in the area of data, definitions, and guidelines, before social and governance factors can be incorporated in a way that is similar to the approach for environmental factors.

- Insight 2: ESG adjustments to risk models may lead to double counting. The financial market still needs to better understand to what extent ESG risk factors manifest themselves through existing risk drivers. For example, ESG factors such as energy labels or flood risk may already be reflected in market prices for residential real estate. In that case, these factors will automatically feed through into the existing LGD models, and separate ESG adjustments to those models may lead to double counting of the ESG impact. Research so far shows mixed signals on this. An analysis of housing prices by researchers from Tilburg University in 2021 showed that there is indeed a price difference between similar houses with different energy labels. On the other hand, a 2022 historical house price analysis by economists from ABN AMRO found no unambiguous price differentiation between similar houses with different flood risks. Participating banks agreed that further research and guidance from banks and regulators is needed on this topic.
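The double-counting concern can be illustrated with a deliberately simplified LGD calculation in which the recovery equals the collateral's market value minus a forced-sale haircut. The formula, the 20% haircut, the exposure, and the house values (including the assumption that the market already prices a 5% discount for the poorer energy label) are hypothetical and not taken from any bank's model; `mortgage_lgd` is an illustrative name.

```python
def mortgage_lgd(ead: float, collateral_value: float, haircut: float = 0.2) -> float:
    """Simplified LGD: share of the exposure not recovered after a forced sale of the collateral."""
    if ead <= 0:
        raise ValueError("exposure at default must be positive")
    recovery = (1 - haircut) * collateral_value
    return max(0.0, ead - recovery) / ead


EAD = 340_000
VALUE_GOOD_LABEL = 400_000  # hypothetical market value, good energy label
VALUE_POOR_LABEL = 380_000  # hypothetical: market already prices a 5% discount for a poor label
ESG_DISCOUNT = 0.05         # the same label effect, applied again as a separate ESG adjustment

lgd_good = mortgage_lgd(EAD, VALUE_GOOD_LABEL)
lgd_poor = mortgage_lgd(EAD, VALUE_POOR_LABEL)  # label effect enters once, via the market price
lgd_double = mortgage_lgd(EAD, VALUE_POOR_LABEL * (1 - ESG_DISCOUNT))  # label effect counted twice

print(f"good label: {lgd_good:.3f}, poor label: {lgd_poor:.3f}, with extra ESG adjustment: {lgd_double:.3f}")
```

The point is the structure rather than the numbers: if collateral values already embed the label effect, the ESG information enters the LGD once, and a separate adjustment adds it a second time.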

- Insight 3: ESG factors may be incorporated in pricing. Incorporating ESG into a credit risk framework could lead to price differentiation between loans that face high versus low climate risk. For example, banks could consider charging higher rates to corporates in polluting sectors or to those without an adequate plan to deal with the effects of climate change, or charging higher rates on residential mortgages with a low energy label or on properties located in flood-prone areas. Participating banks agreed that, in theory, price differentiation makes sense, and most of them are investigating this option as a risk mitigation strategy. Nevertheless, some participants noted that, even if they were able to perfectly quantify these risks as price add-ons, they were not sure whether and how (e.g., for which risk drivers and which portfolios) they would implement this. Other mitigating measures are also available, such as providing construction deposits to clients to make their homes more sustainable.

Conclusion

Most participating banks have made efforts to include ESG risk factors in their credit risk management processes. Nevertheless, considerable effort is still required to meet all regulatory expectations on this topic, not only from the banks themselves but also from researchers, regulators, and the financial market in general.

Zanders has already supported several banks and asset managers with the challenges related to integrating ESG risks into the risk organization. If you are interested in discussing how we can help your organization, please reach out to Sjoerd Blijlevens or Marije Wiersma.